Applicable Stroom version: “7.5”

Documentation generated on: 13 Feb 2026, 00:08 UTC

Documentation release version: stroom-docs-v3481

To see the documentation for other versions of Stroom, click the version drop-down at the top of the screen.

Documentation

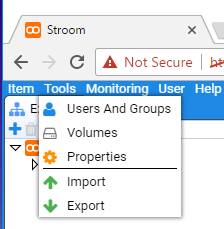

- 1: Quick Start Guide

- 1.1: Running Stroom

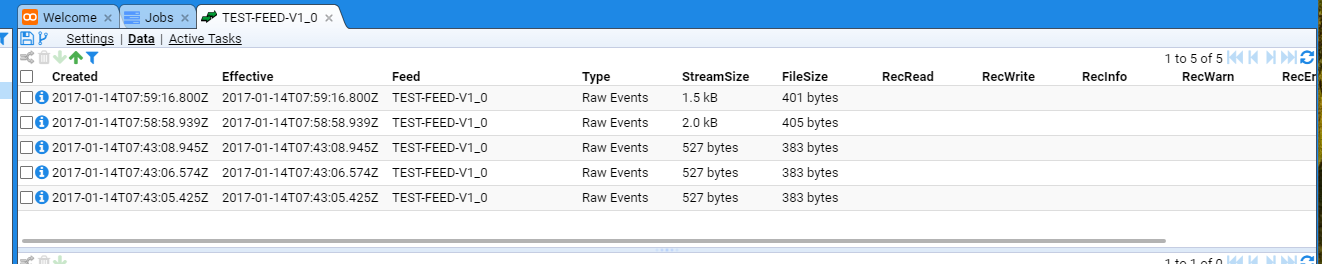

- 1.2: Feeds

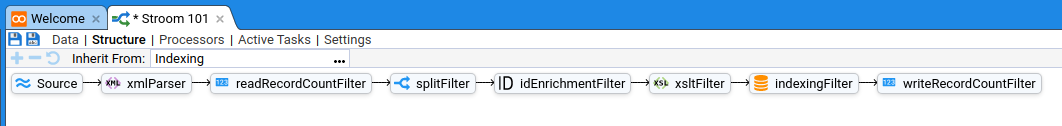

- 1.3: Pipeline Processing

- 1.4: Indexing

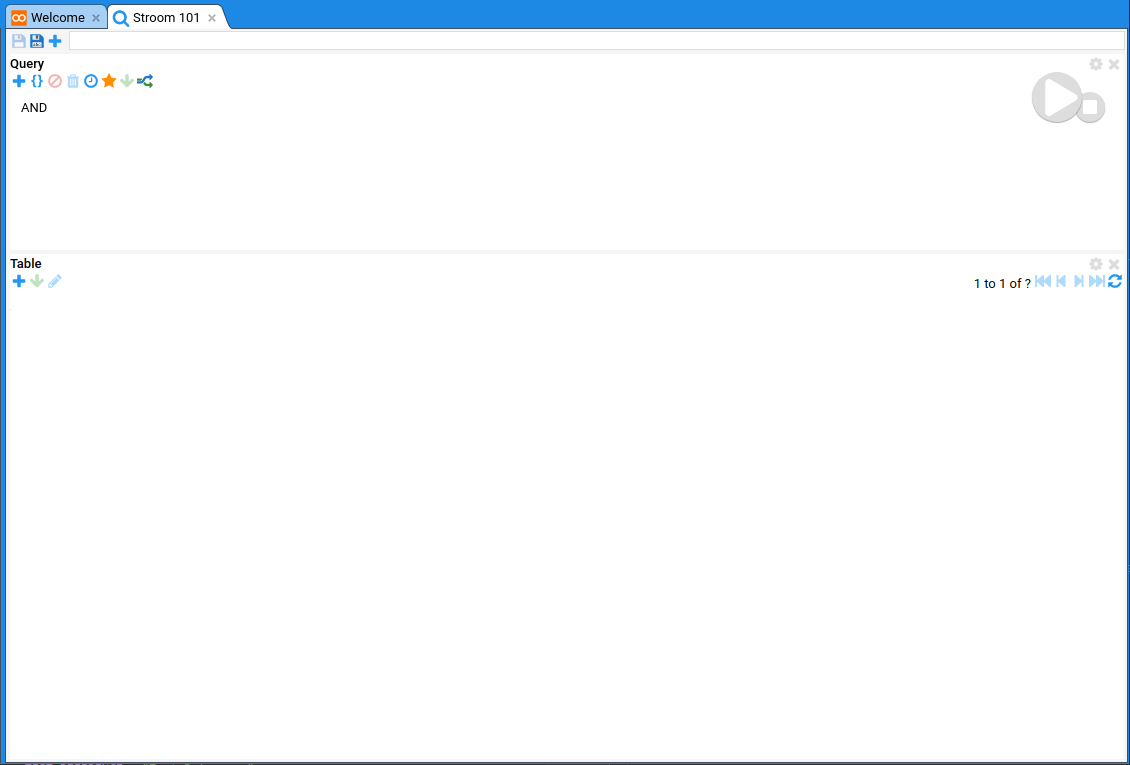

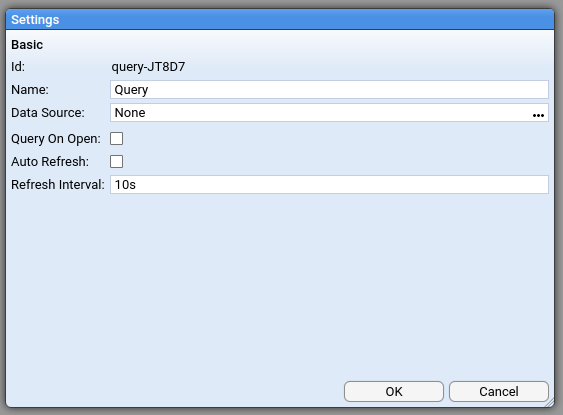

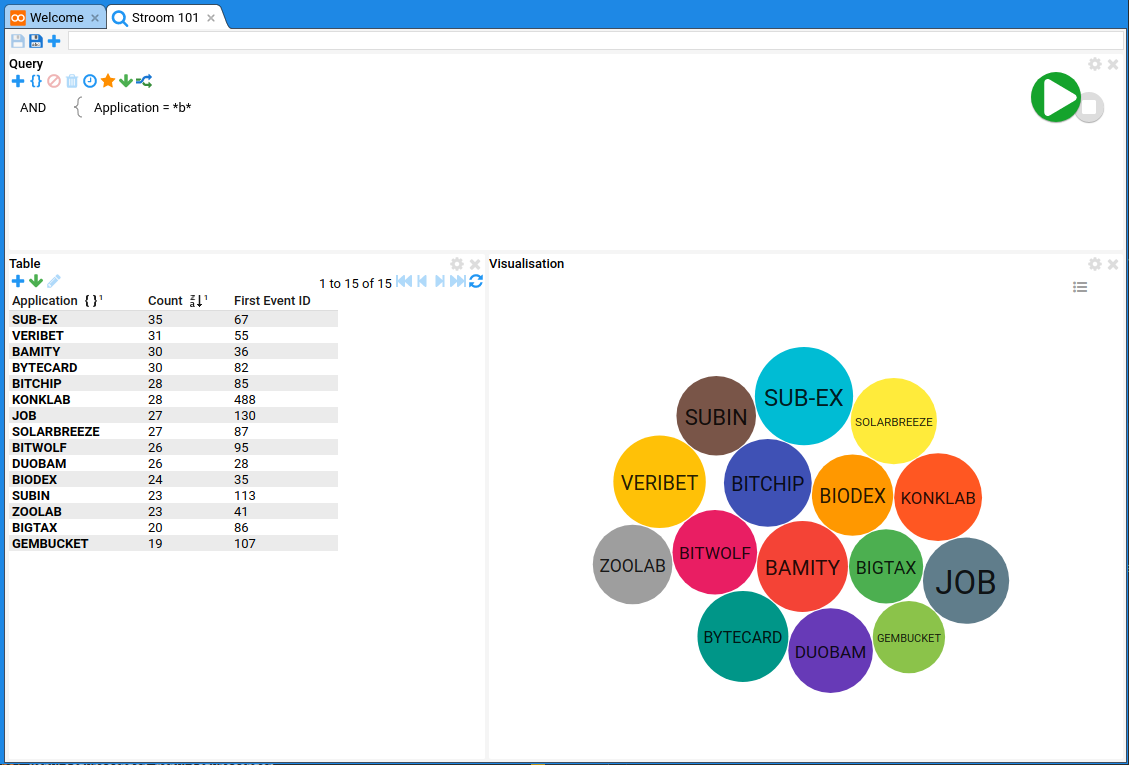

- 1.5: Dashboards

- 2: Stroom Architecture

- 3: Installation Guide

- 3.1: Single Node Docker Installation

- 3.2: Configuration

- 3.2.1: Stroom and Stroom-Proxy Configuration

- 3.2.1.1: Common Configuration

- 3.2.1.2: Stroom Configuration

- 3.2.1.3: Stroom Proxy Configuration

- 3.2.2: Nginx Configuration

- 3.2.3: Stroom Log Sender Configuration

- 3.2.4: MySQL Configuration

- 3.3: Installing in an Air Gapped Environment

- 3.4: Upgrades

- 3.4.1: Minor Upgrades and Patches

- 3.4.2: Upgrade from v5 to v7

- 3.4.3: Upgrade from v6 to v7

- 3.5: Setup

- 3.5.1: MySQL Setup

- 3.5.2: Securing Stroom

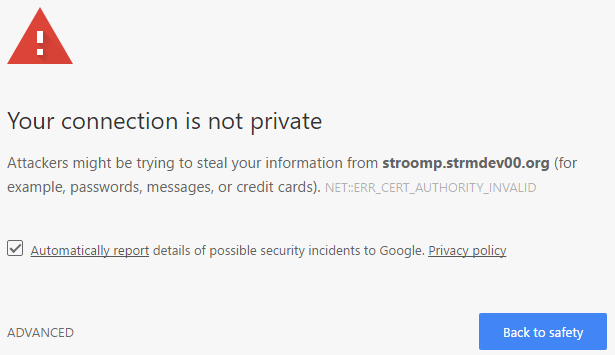

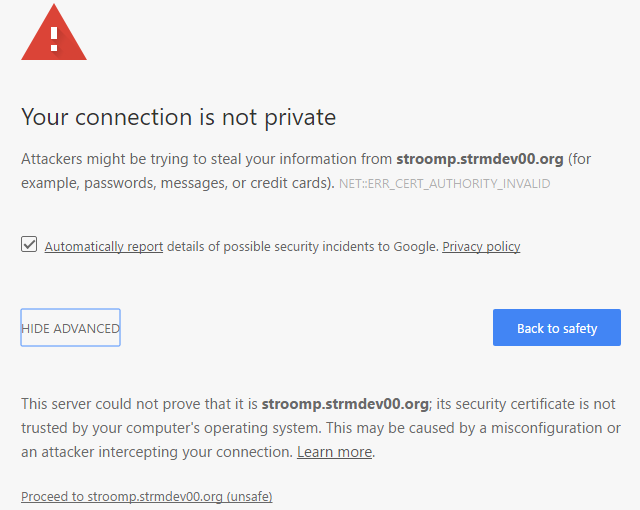

- 3.5.3: Java Key Store Setup

- 3.5.4: Processing Users

- 3.5.5: Setting up Stroom with an Open ID Connect IDP

- 3.5.5.1: Accounts vs Users

- 3.5.5.2: Stroom's Internal IDP

- 3.5.5.3: External IDP

- 3.5.5.4: Tokens for API use

- 3.5.5.5: Test Credentials

- 3.6: Stroom 6 Installation

- 3.7: Kubernetes Cluster

- 3.7.1: Introduction

- 3.7.2: Install Operator

- 3.7.3: Upgrade Operator

- 3.7.4: Remove Operator

- 3.7.5: Configure Database

- 3.7.6: Configure a cluster

- 3.7.7: Auto Scaler

- 3.7.8: Stop Stroom Cluster

- 3.7.9: Restart Node

- 4: How Tos

- 4.1: General

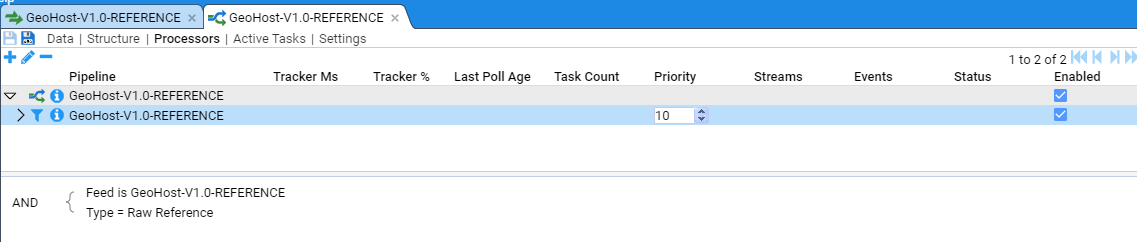

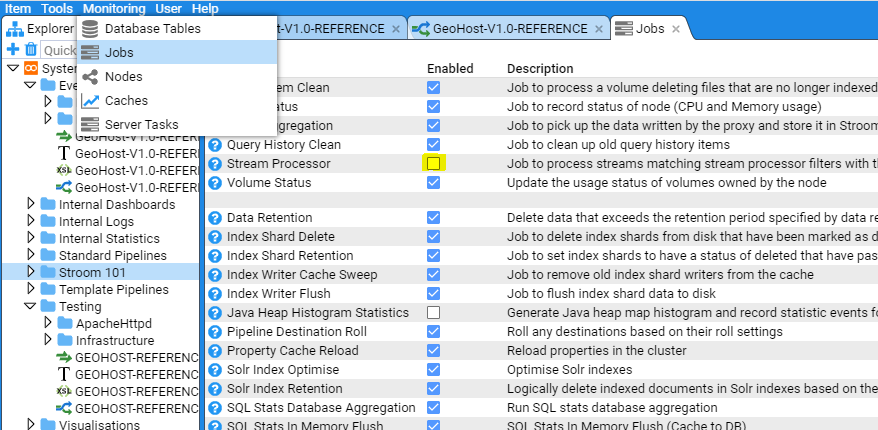

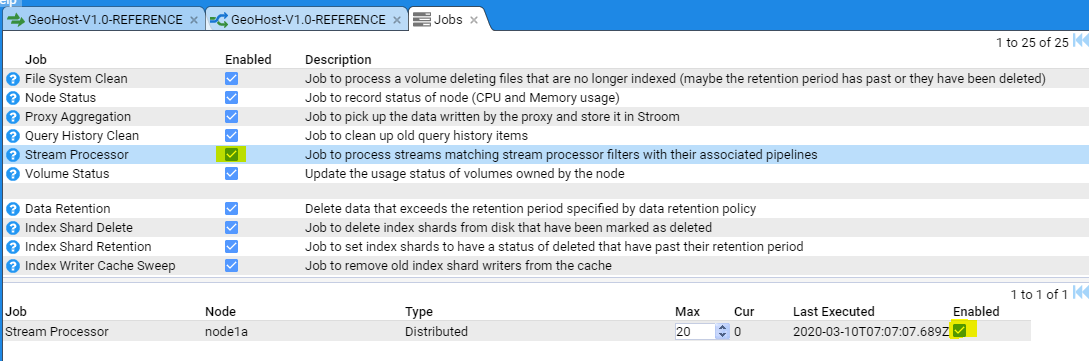

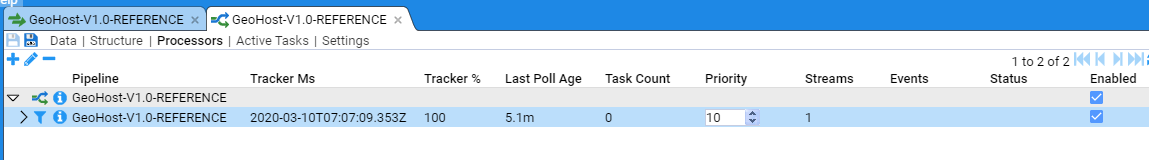

- 4.1.1: Enabling Processors

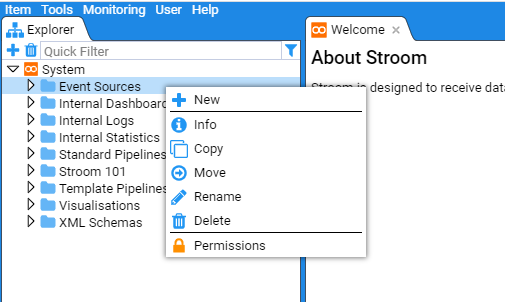

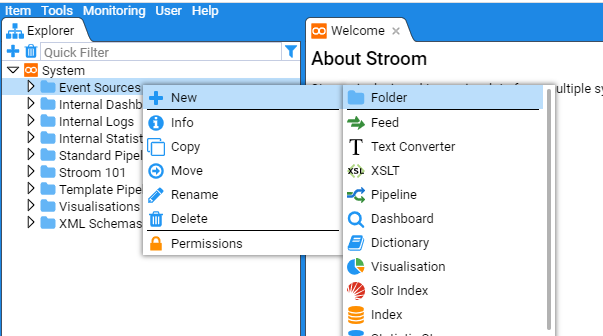

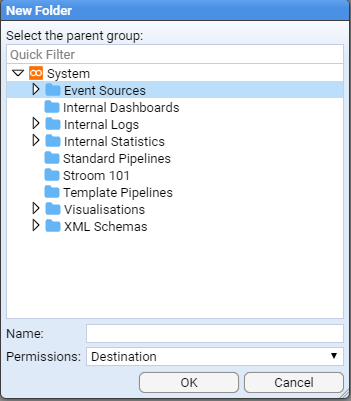

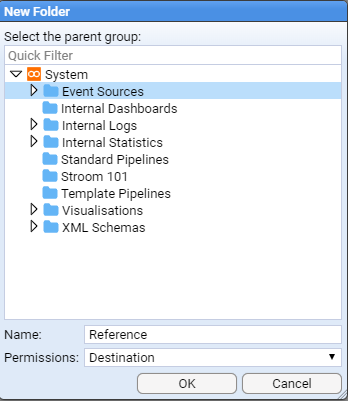

- 4.1.2: Explorer Management

- 4.1.3: Feed Management

- 4.1.4: Raw Source Tracking

- 4.1.5: Task Management

- 4.2: Administration

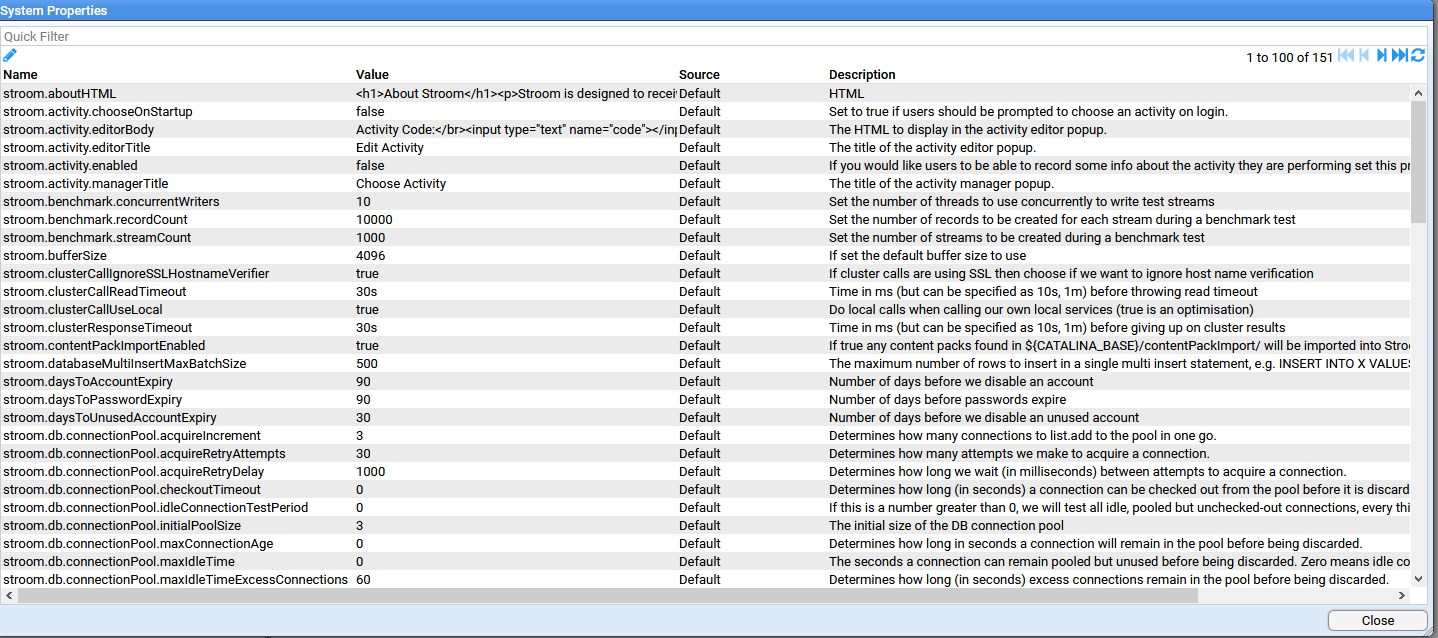

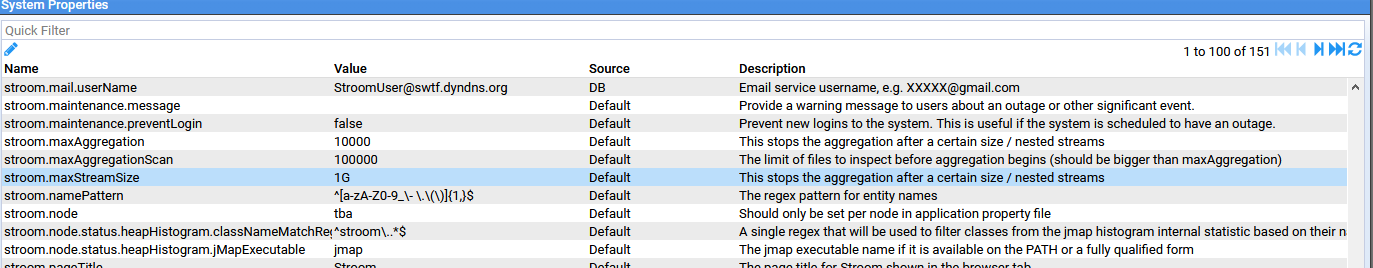

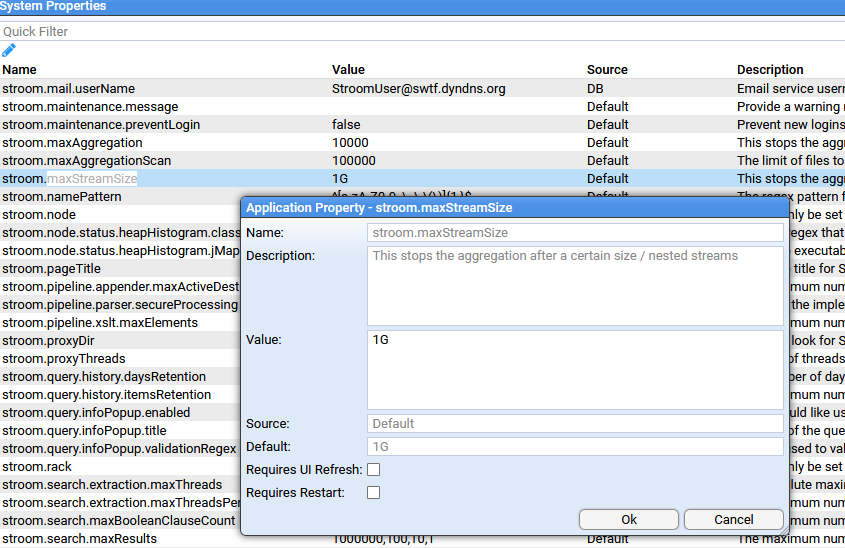

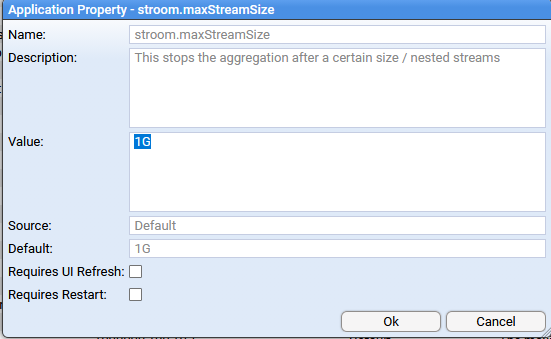

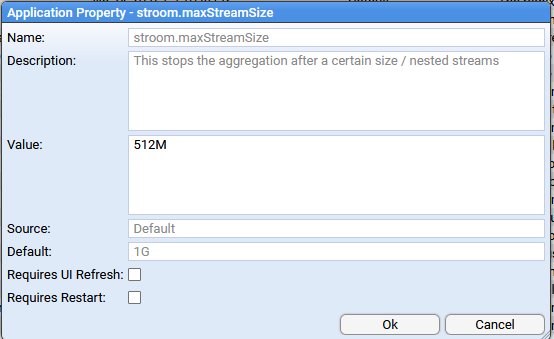

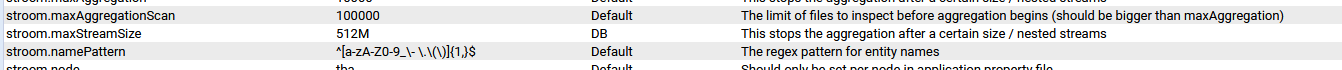

- 4.2.1: System Properties

- 4.3: Authentication

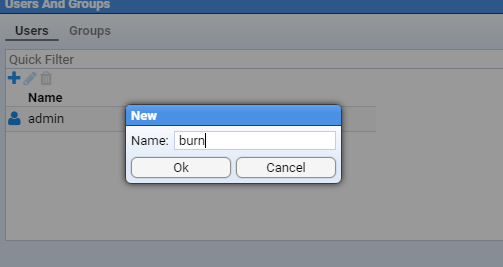

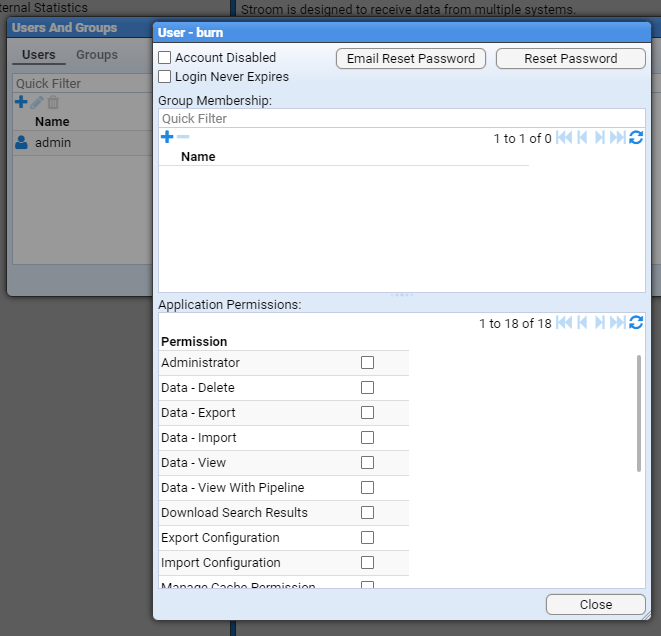

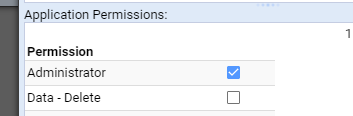

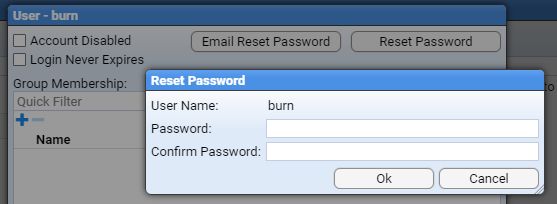

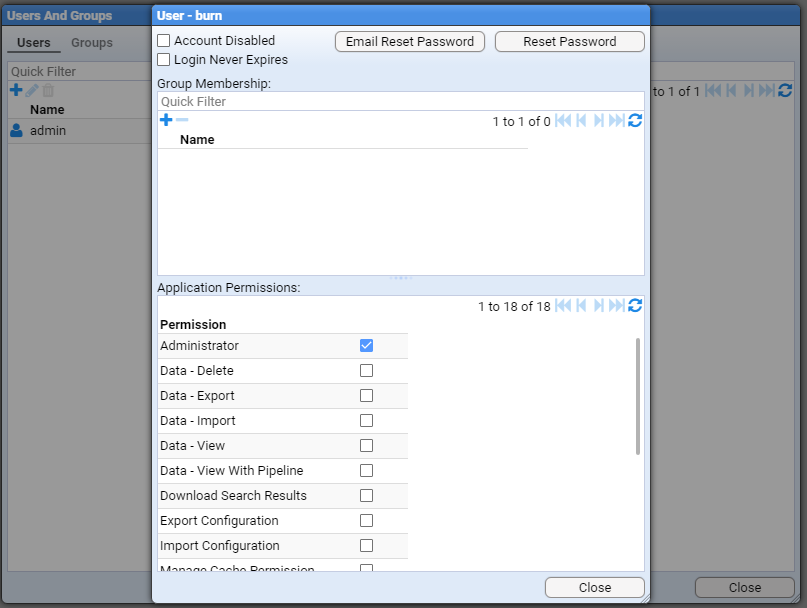

- 4.3.1: Create a user

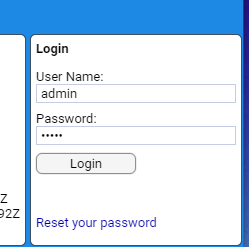

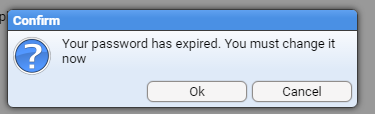

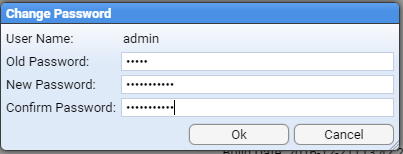

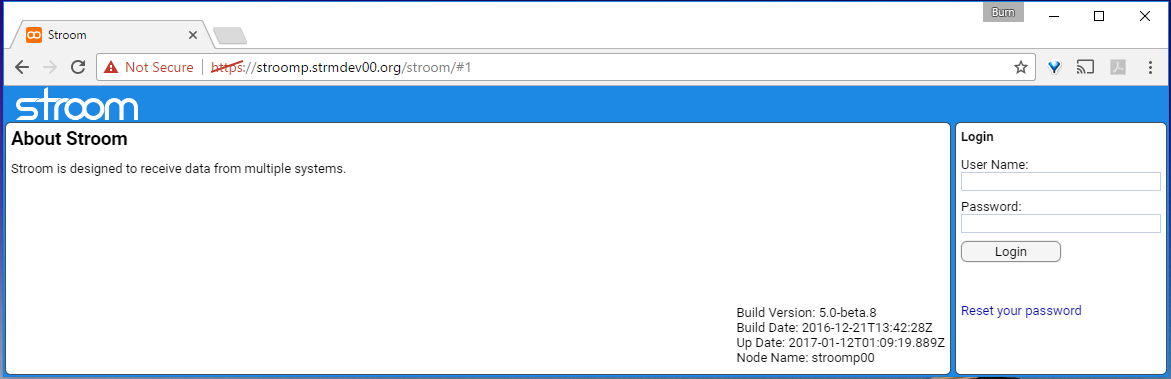

- 4.3.2: Login

- 4.3.3: Logout

- 4.4: Installation

- 4.4.1: Apache Httpd/Mod_JK configuration for Stroom

- 4.4.2: Database Installation

- 4.4.3: Installation

- 4.4.4: Installation of Stroom Application

- 4.4.5: Installation of Stroom Proxy

- 4.4.6: NFS Installation and Configuration

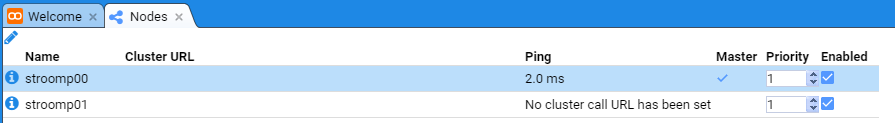

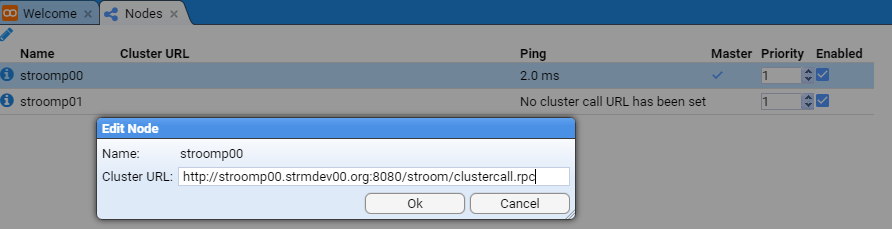

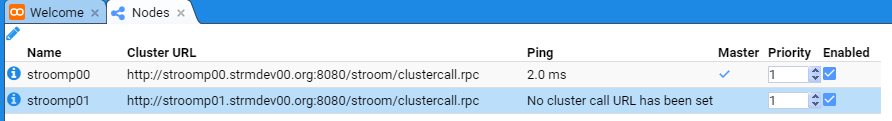

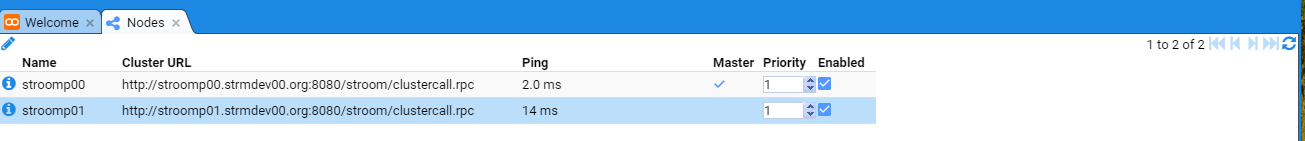

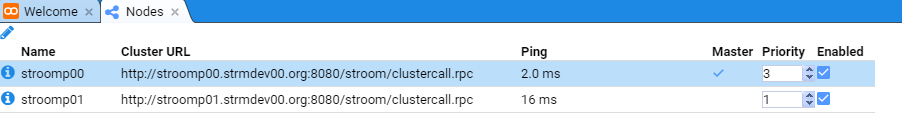

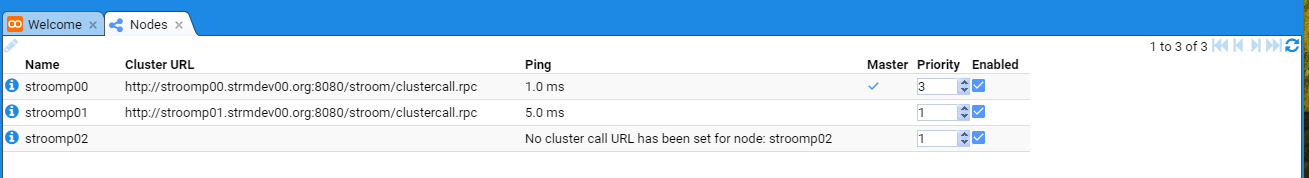

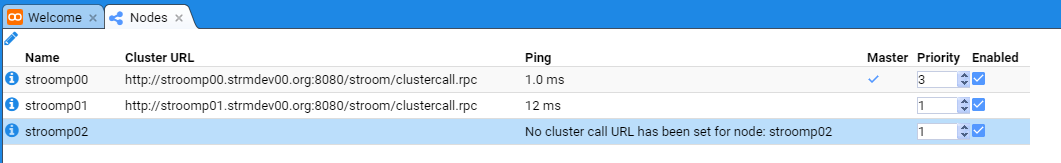

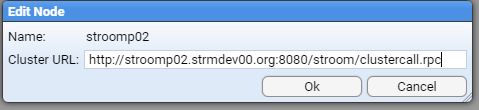

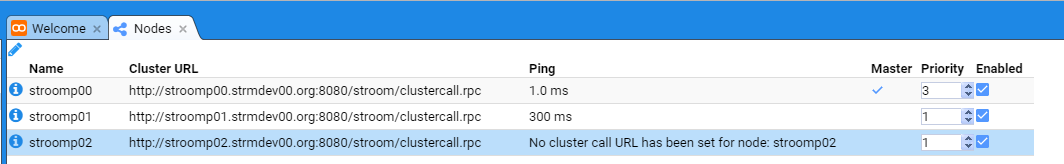

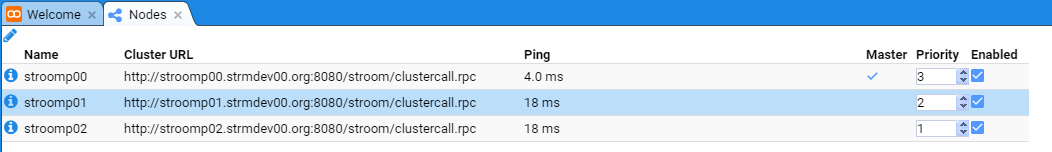

- 4.4.7: Node Cluster URL Setup

- 4.4.8: Processing User setup

- 4.4.9: SSL Certificate Generation

- 4.4.10: Testing Stroom Installation

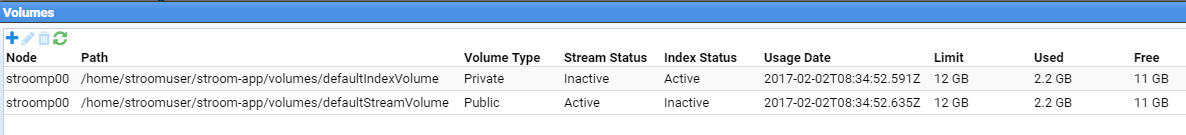

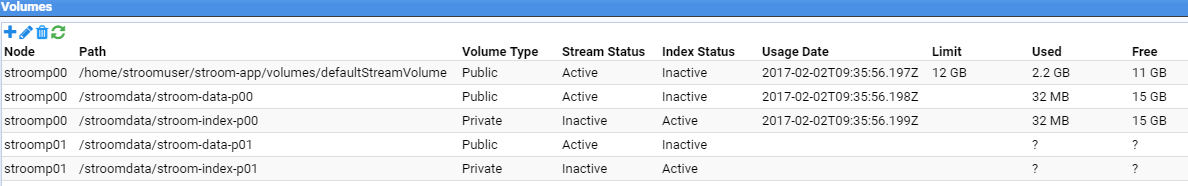

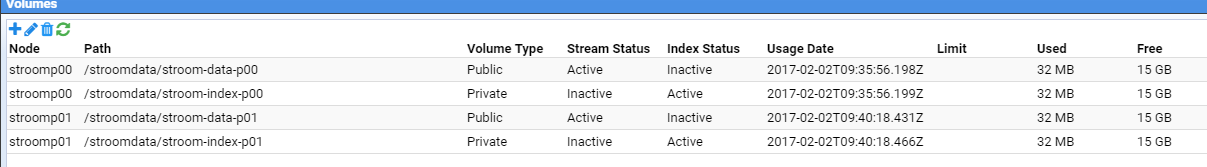

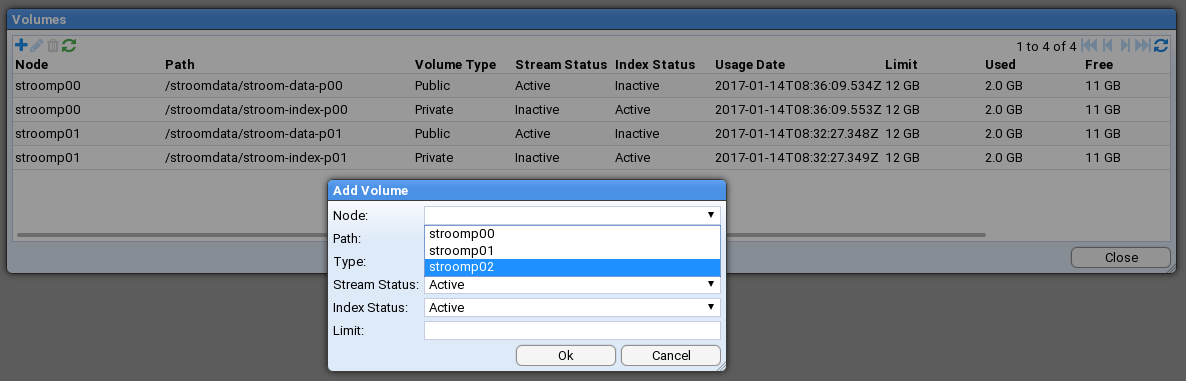

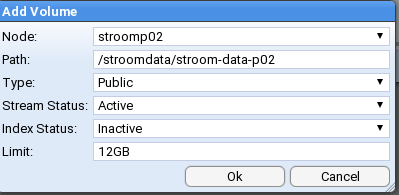

- 4.4.11: Volume Maintenance

- 4.5: Event Feeds

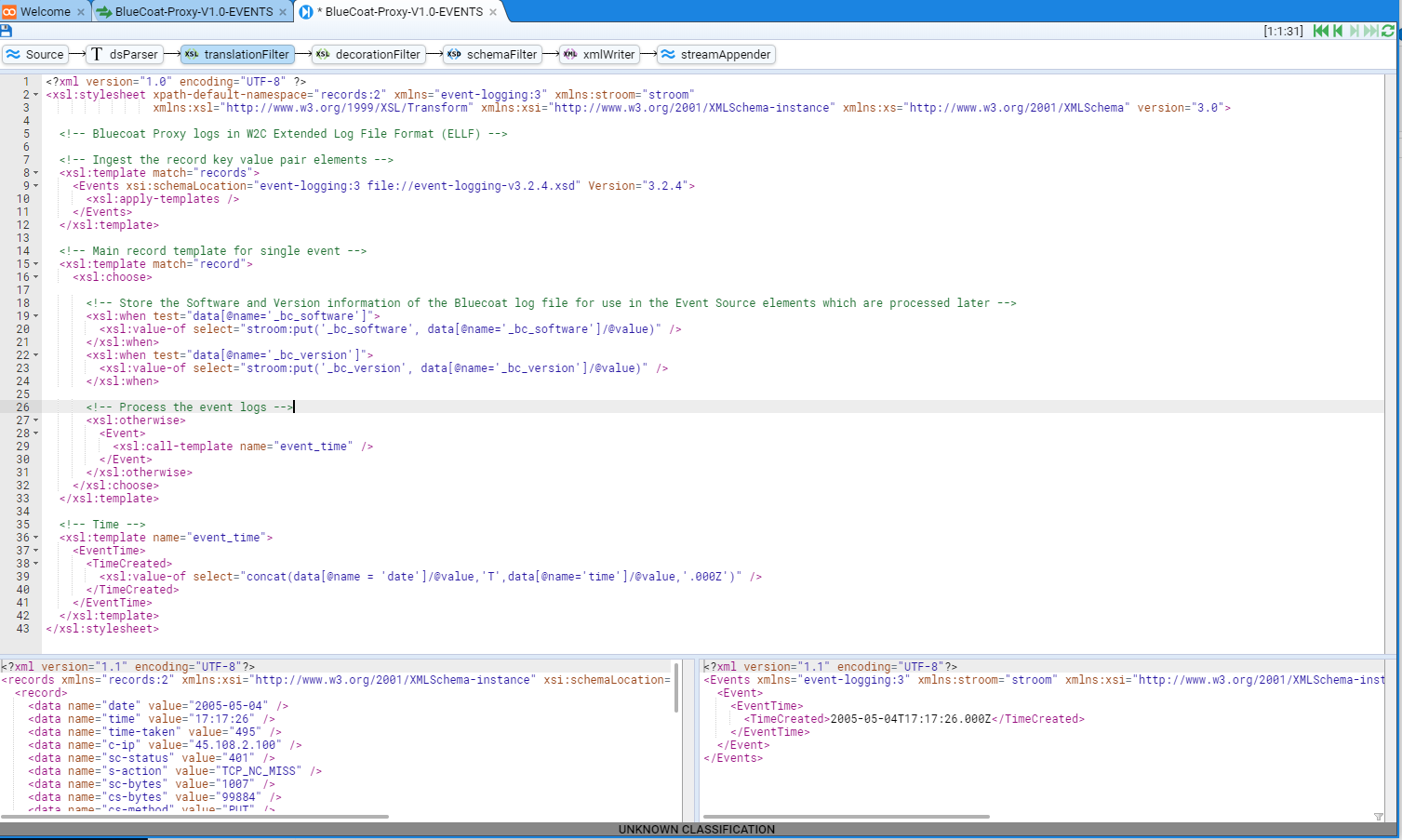

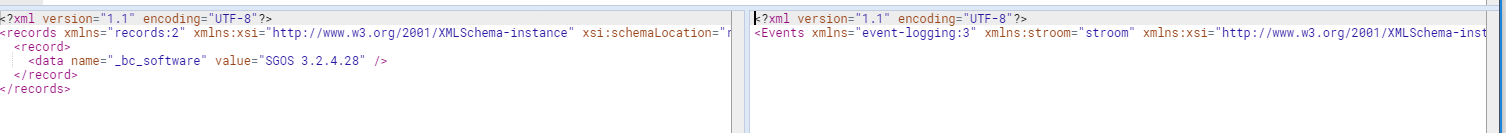

- 4.5.1: Writing an XSLT Translation

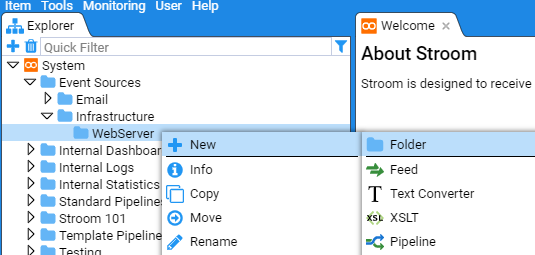

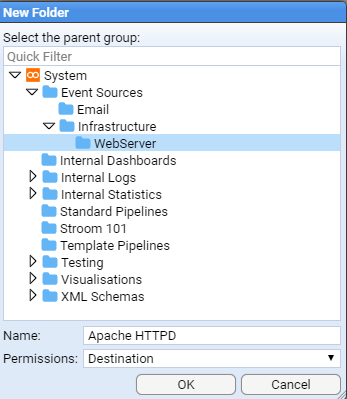

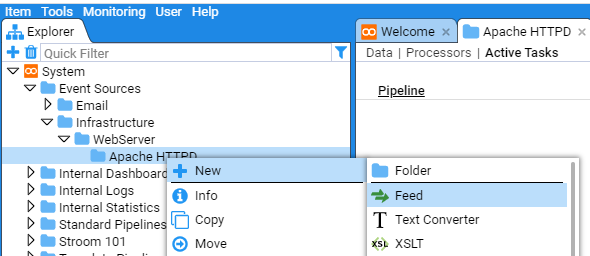

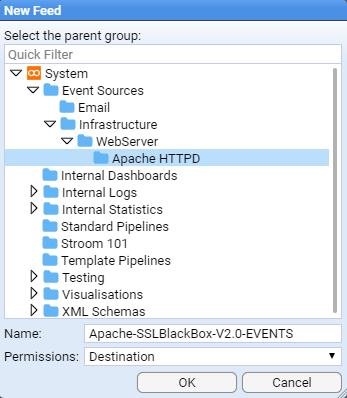

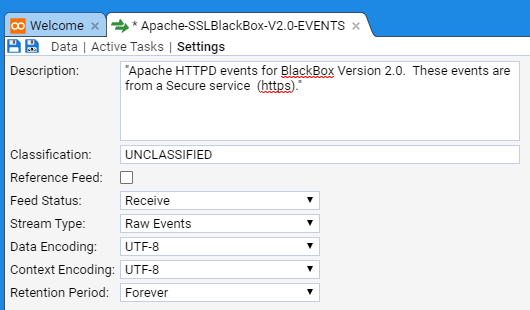

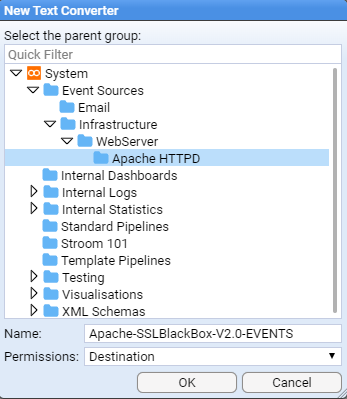

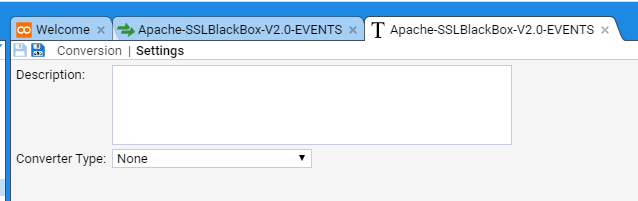

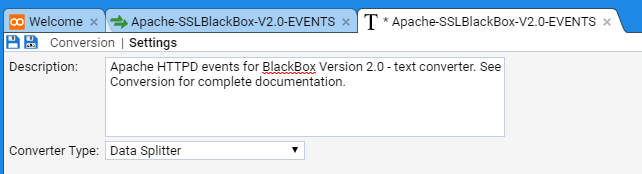

- 4.5.2: Apache HTTPD Event Feed

- 4.5.3: Event Processing

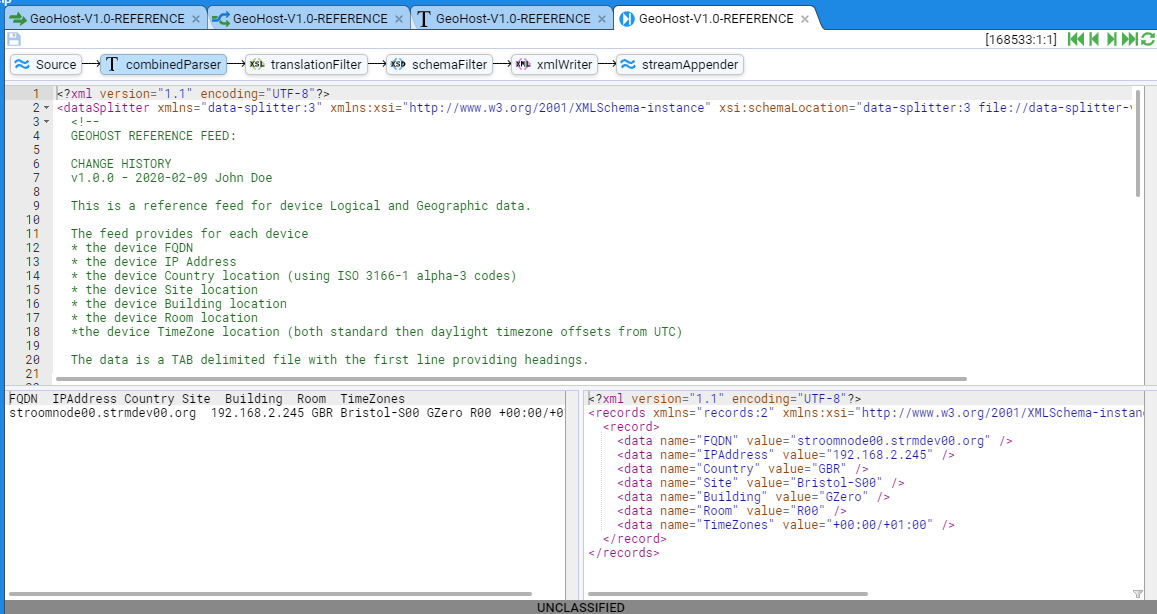

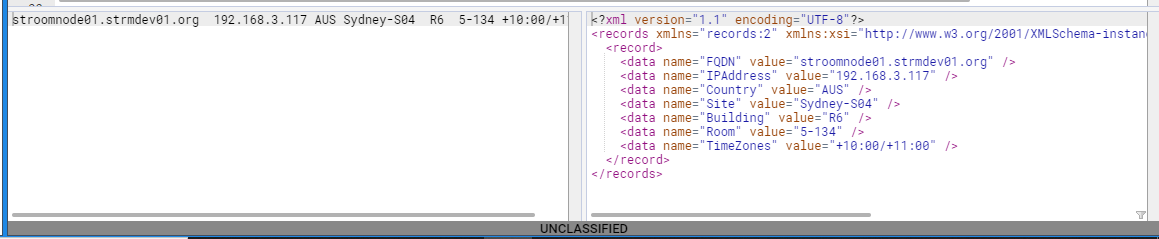

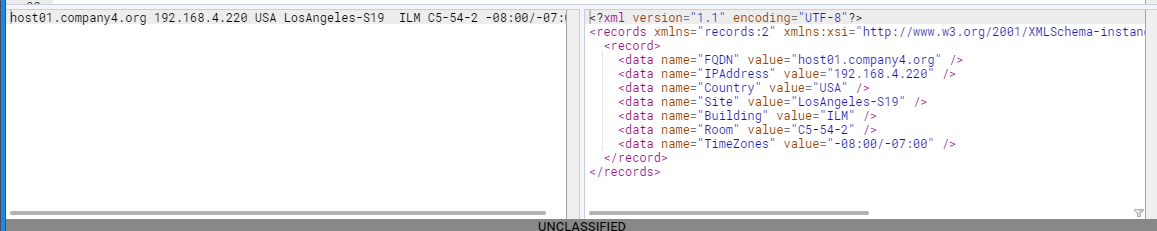

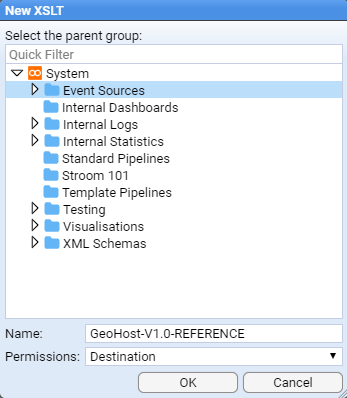

- 4.6: Reference Feeds

- 4.6.1: Use a Reference Feed

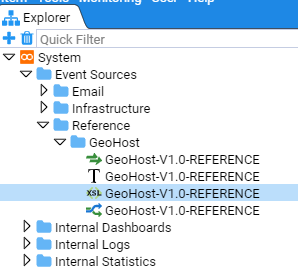

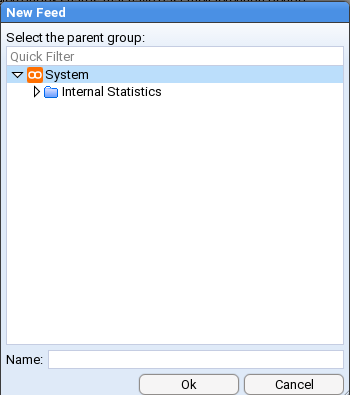

- 4.6.2: Create a Simple Reference Feed

- 4.7: Indexing and Search

- 4.7.1: Elasticsearch

- 4.7.2: Apache Solr

- 4.7.3: Stroom Search API

- 4.8: Event Post Processing

- 4.8.1: Event Forwarding

- 5: User Guide

- 5.1: Application Programming Interfaces (API)

- 5.1.1: API Specification

- 5.1.2: Calling an API

- 5.1.3: Query APIs

- 5.1.4: Export Content API

- 5.1.5: Reference Data API

- 5.1.6: Explorer API

- 5.1.7: Feed API

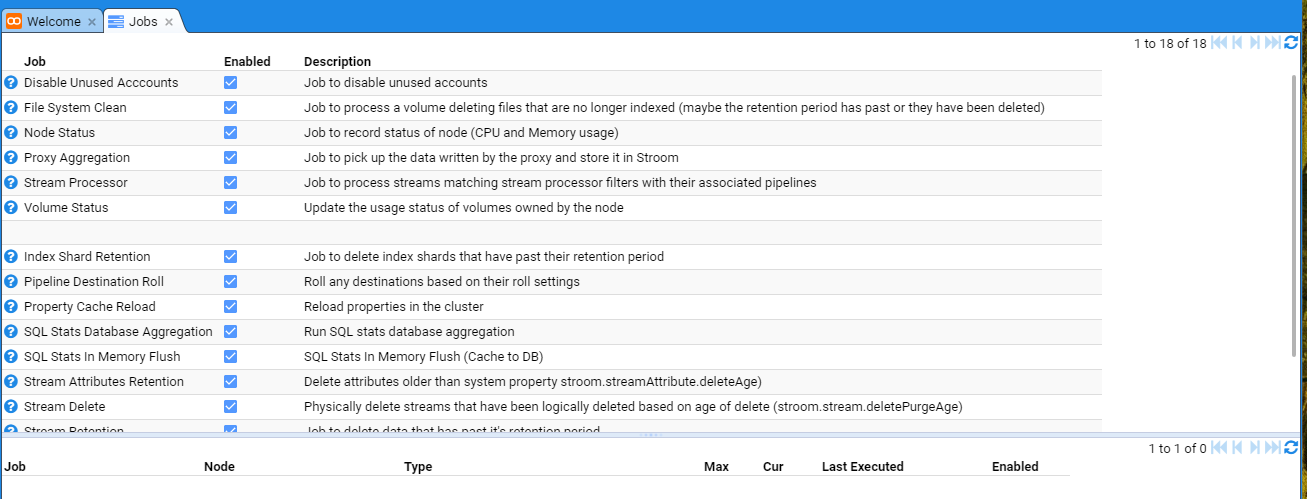

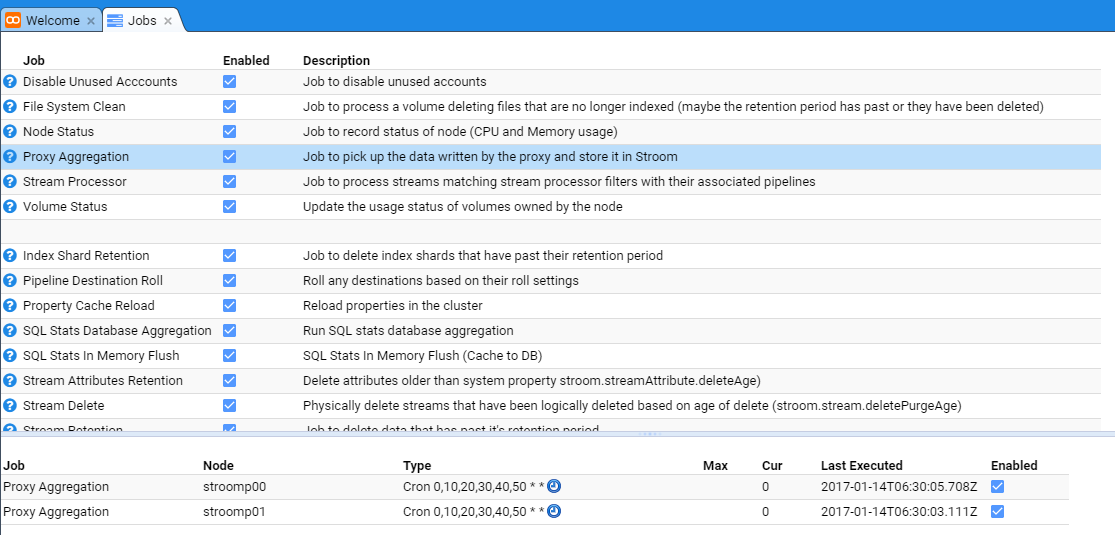

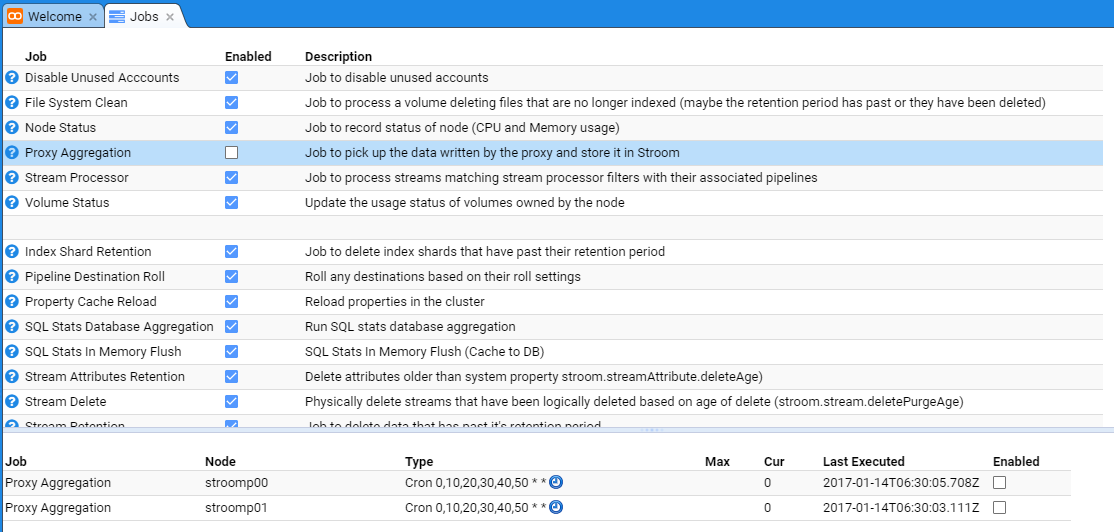

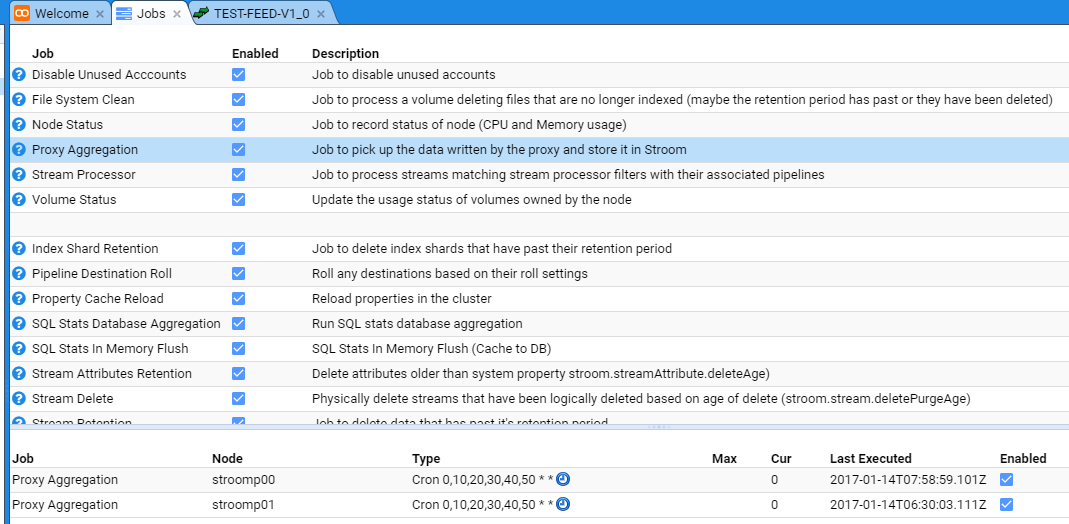

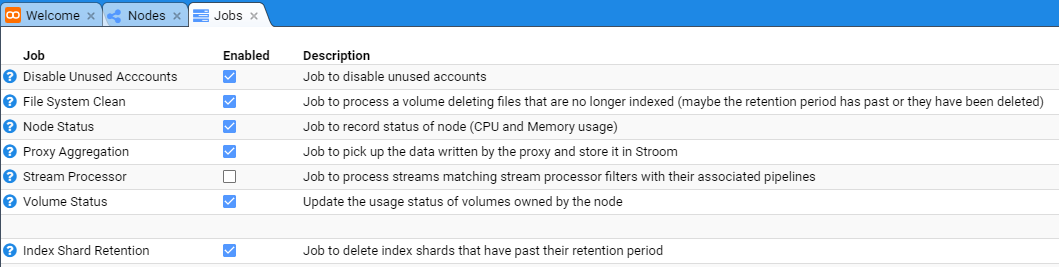

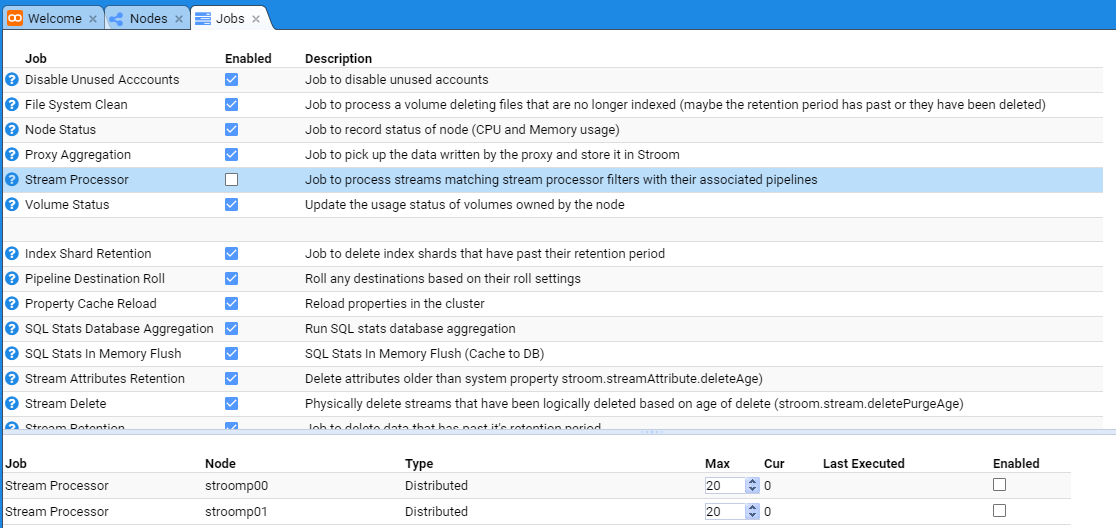

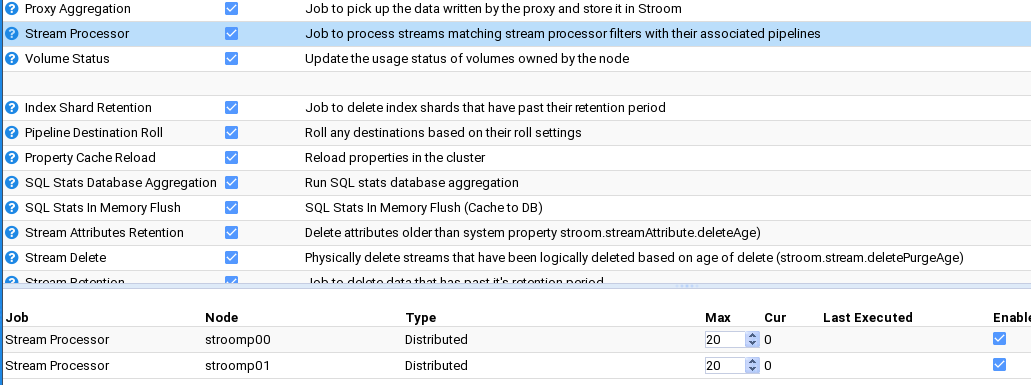

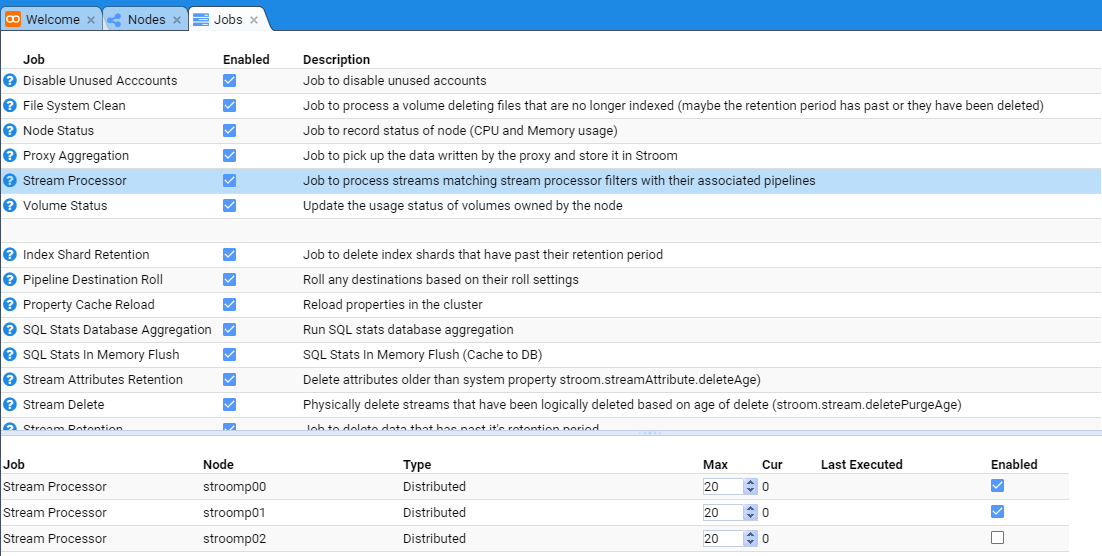

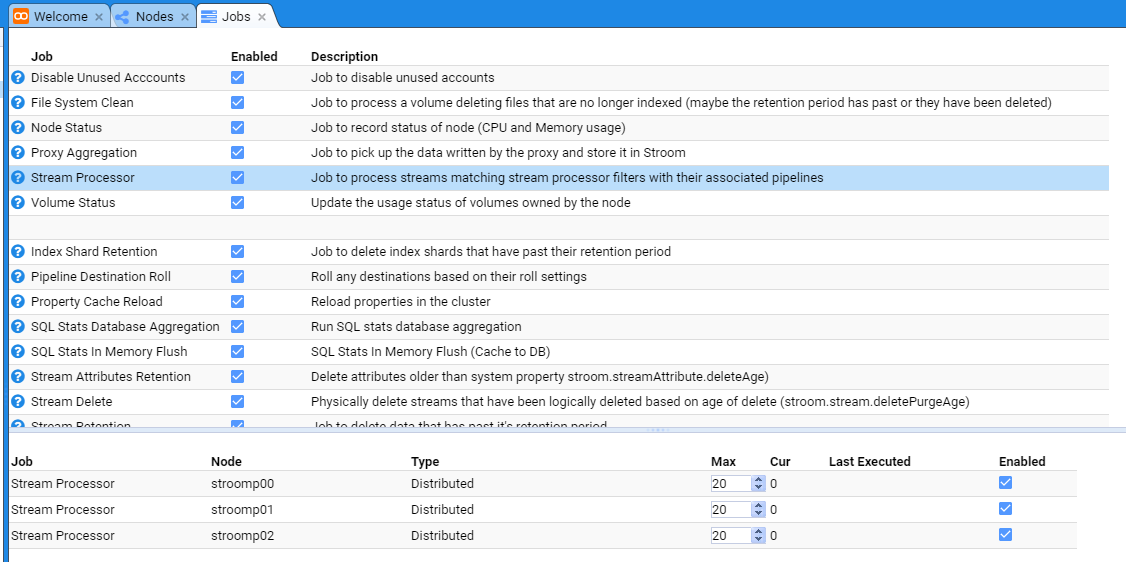

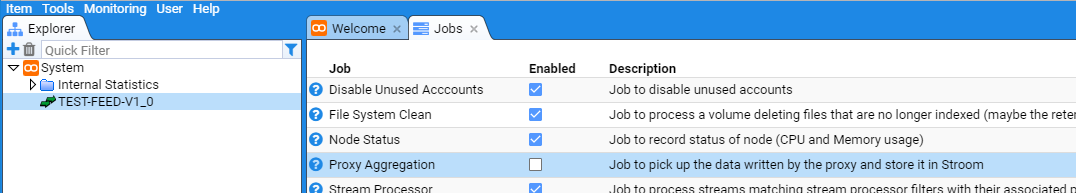

- 5.2: Background Jobs

- 5.2.1: Scheduler

- 5.3: Concepts

- 5.3.1: Streams

- 5.4: Data Retention

- 5.5: Data Splitter

- 5.5.1: Simple CSV Example

- 5.5.2: Simple CSV example with heading

- 5.5.3: Complex example with regex and user defined names

- 5.5.4: Multi Line Example

- 5.5.5: Element Reference

- 5.5.5.1: Content Providers

- 5.5.5.2: Expressions

- 5.5.5.3: Variables

- 5.5.5.4: Output

- 5.5.6: Match References, Variables and Fixed Strings

- 5.5.6.1: Expression match references

- 5.5.6.2: Variable reference

- 5.5.6.3: Use of fixed strings

- 5.5.6.4: Concatenation of references

- 5.6: Event Feeds

- 5.7: Indexing data

- 5.7.1: Elasticsearch

- 5.7.1.1: Introduction

- 5.7.1.2: Getting Started

- 5.7.1.3: Indexing data

- 5.7.1.4: Exploring Data in Kibana

- 5.7.2: Lucene Indexes

- 5.7.3: Solr Integration

- 5.8: Nodes

- 5.9: Pipelines

- 5.9.1: Pipeline Recipes

- 5.9.2: Parser

- 5.9.2.1: XML Fragments

- 5.9.3: XSLT Conversion

- 5.9.3.1: XSLT Basics

- 5.9.3.2: XSLT Functions

- 5.9.3.3: XSLT Includes

- 5.9.4: File Output

- 5.9.5: Reference Data

- 5.9.6: Context Data

- 5.10: Properties

- 5.11: Searching Data

- 5.11.1: Data Sources

- 5.11.1.1: Lucene Index Data Source

- 5.11.1.2: Statistics

- 5.11.1.3: Elasticsearch

- 5.11.1.4: Internal Data Sources

- 5.11.2: Dashboards

- 5.11.2.1: Queries

- 5.11.2.2: Internal Links

- 5.11.2.3: Direct URLs

- 5.11.3: Query

- 5.11.3.1: Stroom Query Language

- 5.11.4: Analytic Rules

- 5.11.5: Search Extraction

- 5.11.6: Dictionaries

- 5.12: Security

- 5.12.1: Deployment

- 5.12.2: User Accounts

- 5.12.3: Users and Groups

- 5.12.4: Application Permissions

- 5.12.5: Document Permissions

- 5.13: Tools

- 5.13.1: Command Line Tools

- 5.13.2: Stream Dump Tool

- 5.14: User Content

- 5.14.1: Editing Text

- 5.14.2: Naming Conventions

- 5.14.3: Documenting content

- 5.14.4: Finding Things

- 5.15: Viewing Data

- 5.16: Volumes

- 6: Reference Section

- 6.1: Cron Syntax

- 6.2: Dates & Times

- 6.3: Documents

- 6.4: Editor Completion Snippets

- 6.5: Expression functions

- 6.5.1: Aggregate Functions

- 6.5.2: Cast Functions

- 6.5.3: Date Functions

- 6.5.4: Link Functions

- 6.5.5: Logic Functions

- 6.5.6: Mathematics Functions

- 6.5.7: Selection Functions

- 6.5.8: String Functions

- 6.5.9: Type Checking Functions

- 6.5.10: URI Functions

- 6.5.11: Value Functions

- 6.6: Background Jobs

- 6.7: Keyboard Shortcuts

- 6.8: Pipeline Elements

- 6.9: Templating

- 7: Sending Data to Stroom

- 7.1: Event Data Formats

- 7.1.1: Character Encoding

- 7.1.2: Event XML Fragments

- 7.2: Header Arguments

- 7.3: Response Codes

- 7.4: Payload Formats

- 7.5: SSL Configuration

- 7.6: Example Clients

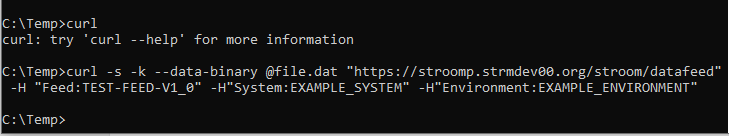

- 7.6.1: curl (Linux)

- 7.6.2: curl (Windows)

- 7.6.3: event-logging (Java library)

- 7.6.4: send_to_stroom.sh (Linux)

- 7.6.5: Simple C# Client

- 7.6.6: Simple Java Client

- 7.6.7: stroom-log-sender (Docker)

- 7.6.8: VBScript (Windows)

- 7.6.9: wget (Windows)

- 7.7: Token Authentication

- 7.8: Java Keystores

- 8: Stroom Proxy

- 9: Glossary

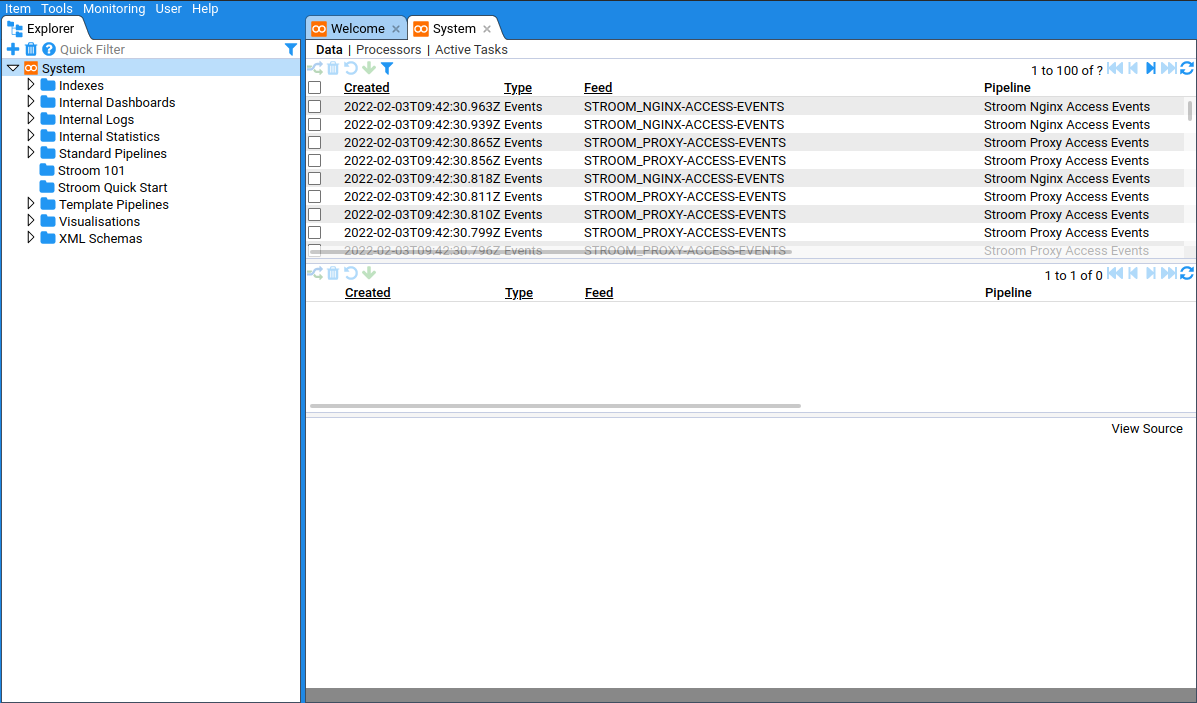

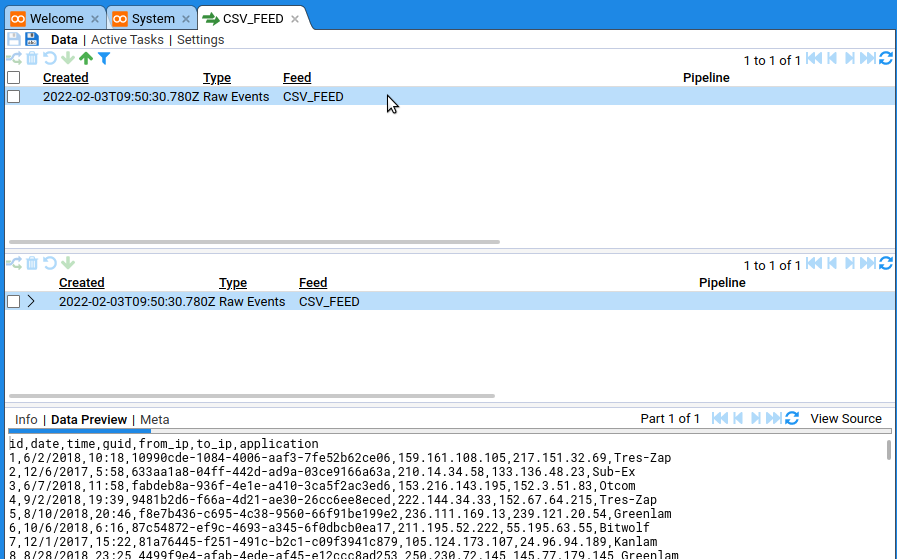

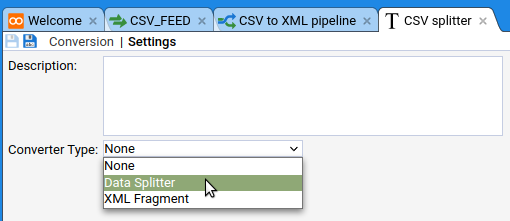

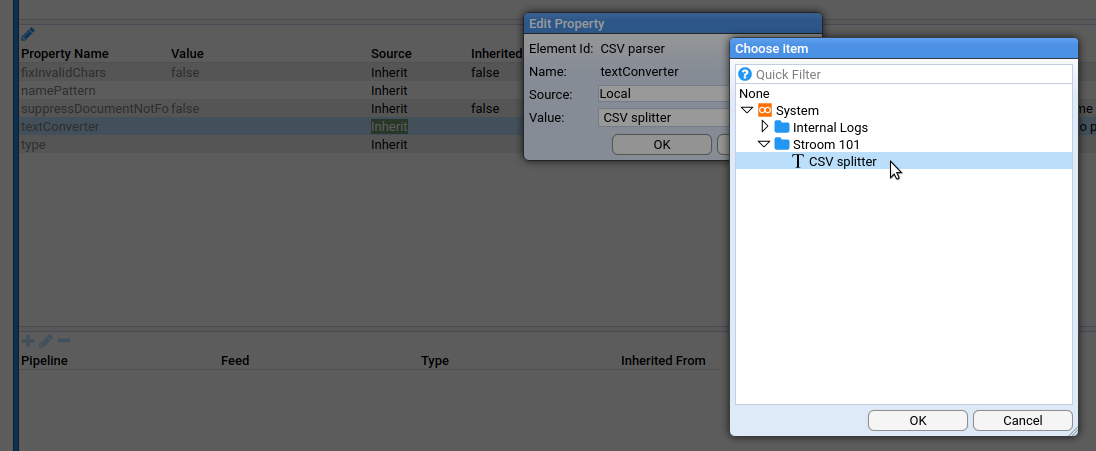

1 - Quick Start Guide

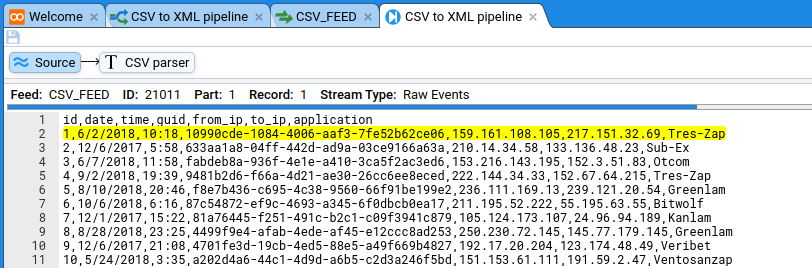

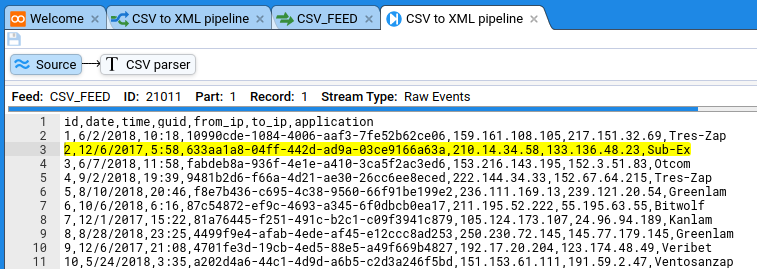

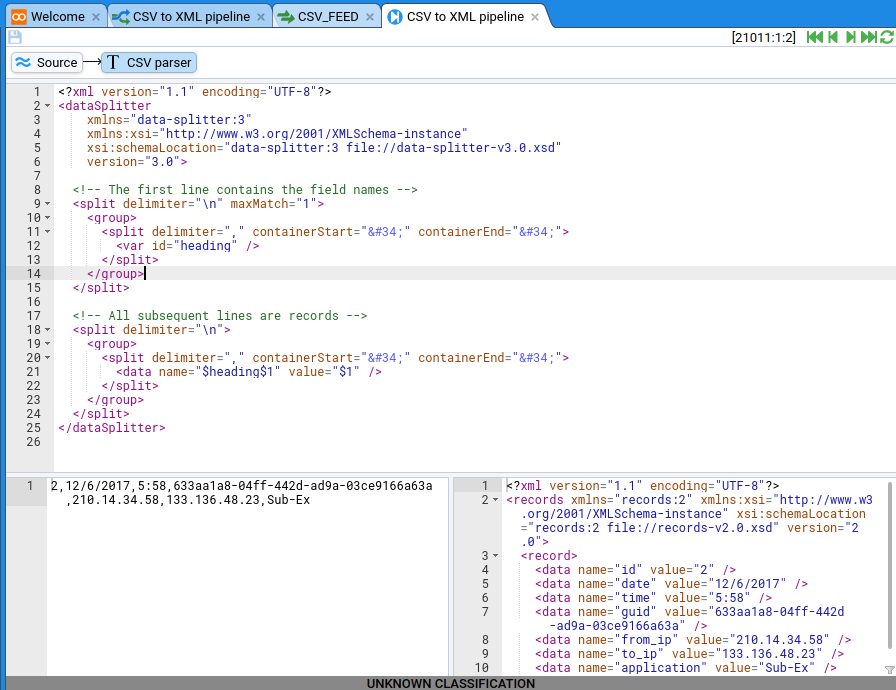

In this quick-start guide you will learn how to use Stroom to get from a file that looks like this:

id,date,time,guid,from_ip,to_ip,application

1,6/2/2018,10:18,10990cde-1084-4006-aaf3-7fe52b62ce06,159.161.108.105,217.151.32.69,Tres-Zap

2,12/6/2017,5:58,633aa1a8-04ff-442d-ad9a-03ce9166a63a,210.14.34.58,133.136.48.23,Sub-Ex

3,6/7/2018,11:58,fabdeb8a-936f-4e1e-a410-3ca5f2ac3ed6,153.216.143.195,152.3.51.83,Otcom

4,9/2/2018,19:39,9481b2d6-f66a-4d21-ae30-26cc6ee8eced,222.144.34.33,152.67.64.215,Tres-Zap

5,8/10/2018,20:46,f8e7b436-c695-4c38-9560-66f91be199e2,236.111.169.13,239.121.20.54,Greenlam

6,10/6/2018,6:16,87c54872-ef9c-4693-a345-6f0dbcb0ea17,211.195.52.222,55.195.63.55,Bitwolf

7,12/1/2017,15:22,81a76445-f251-491c-b2c1-c09f3941c879,105.124.173.107,24.96.94.189,Kanlam

8,8/28/2018,23:25,4499f9e4-afab-4ede-af45-e12ccc8ad253,250.230.72.145,145.77.179.145,Greenlam

9,12/6/2017,21:08,4701fe3d-19cb-4ed5-88e5-a49f669b4827,192.17.20.204,123.174.48.49,Veribet

10,5/24/2018,3:35,a202d4a6-44c1-4d9d-a6b5-c2d3a246f5bd,151.153.61.111,191.59.2.47,Ventosanzap

11,10/11/2018,16:06,73bf0fde-091c-41eb-9ef5-c6a742b447a6,112.210.36.45,89.113.178.178,Sub-Ex

12,3/22/2018,2:29,3f54a04f-3045-4400-927e-fa211c61daf6,22.113.244.30,213.13.252.165,Solarbreeze

13,11/26/2017,19:47,925ff706-ebd3-4045-a8c1-a938a196ba39,239.76.244.27,50.1.42.87,Stronghold

14,10/7/2018,1:21,71b37372-4498-47fa-bc7b-611c3dd6bd30,21.138.176.238,155.255.71.235,Cookley

15,5/26/2018,2:57,24e9e4d4-ae47-4d8d-8a24-58f6d4085329,246.103.138.18,73.162.117.95,Lotstring

16,4/27/2018,18:49,1778d0e7-5fae-4d48-b754-700d0e26fcc7,20.8.184.2,244.134.213.31,Veribet

17,10/7/2017,6:27,fa061c82-5f3d-4ab8-b1b3-ab7e8c206501,206.244.196.39,96.199.17.147,Zontrax

18,9/10/2018,11:06,99ea62f8-f13a-4730-af9a-aa9dbf515bd5,229.232.8.245,151.187.41.141,Sonair

19,10/11/2017,10:38,020c8302-a220-4f0c-b3e9-5704dafc2c6c,210.3.18.238,7.24.182.123,Veribet

20,10/8/2018,21:01,9f697626-dbf7-44cf-8304-bc23d270f9a4,79.91.164.95,65.228.111.8,Kanlam

21,12/14/2017,19:24,ecf60fc1-ed96-4689-a437-8cb021527910,230.75.202.62,160.37.195.14,Flowdesk

22,10/8/2017,15:22,54aa9db5-6dab-4180-aae0-9bc23b6d9a90,176.69.103.171,181.151.216.246,Stim

23,9/20/2018,23:35,eef29e7e-3ed6-4eed-8c04-d1f6bdd95c11,6.188.94.84,84.2.105.82,Lotlux

24,2/26/2018,6:14,75a63921-0319-4a1d-980a-dc9477ba3810,83.232.182.156,98.95.4.218,Tin

25,10/28/2018,12:50,8a6beaf1-c0b7-4f91-bcc0-586f4ac55ea6,167.227.0.230,34.218.236.86,Tampflex

26,6/13/2018,15:17,ed6434f8-7bda-4a40-92f7-3096a5b39d3e,23.75.75.27,153.54.203.153,Temp

27,12/28/2017,12:22,9bb67784-35a6-44a1-b2c4-46a1de49ef2e,17.22.178.242,193.74.181.212,Flexidy

28,4/8/2018,21:31,24d3706b-9f4a-4a0d-abf2-f398502a660e,22.7.145.209,227.22.142.54,Duobam

29,5/7/2018,21:24,aeb57761-9cef-48d9-87bf-2d053332e8d4,213.170.238.130,94.29.64.34,Sub-Ex

30,11/26/2017,21:45,9be35bf3-9607-4d3b-97fe-c6f1d787fd5e,96.155.134.182,45.208.223.58,Fixflex

31,3/3/2018,21:07,b5431a46-322c-4d61-b620-173bf80a9cc0,22.60.155.34,75.176.116.70,Treeflex

32,9/14/2018,19:30,2322cb82-8e79-469b-8de6-adace7947030,66.6.112.48,134.222.225.40,Y-Solowarm

33,10/23/2018,22:18,ade11826-fac9-4e06-ac2e-f32c82b643b5,35.102.108.189,255.67.89.29,Daltfresh

34,8/27/2018,16:13,42d4d562-e5cb-4504-8a2a-fee0d619117c,210.78.202.27,191.42.123.3,Bamity

35,8/29/2018,22:55,4e6d67b0-20f2-49e5-903a-2fc1a8de08a3,155.153.56.139,151.77.207.194,Biodex

36,12/26/2017,1:50,c0a4497f-2e6f-402b-bd88-c3adb86da85f,23.3.148.82,10.179.79.149,Bamity

37,11/28/2017,8:22,5f4f41ef-f7c9-4c41-9df8-f6ecf0634c33,245.209.118.240,48.28.91.123,Temp

38,6/29/2018,22:21,59d0bf23-80af-49d5-b216-398ce24cbf30,201.192.177.176,169.46.125.48,Alpha

39,10/16/2018,4:07,70a97207-0d71-4785-aa9c-3fcc2f0ebdab,183.15.192.131,23.94.228.168,Fix San

40,1/31/2018,20:11,eef84f7d-22fe-44c3-9681-d870949de034,150.117.217.147,147.20.93.58,Flowdesk

41,4/11/2018,10:16,66dfc774-873d-4f2a-9a0f-3600dc152dd7,101.227.202.214,53.157.66.129,Zoolab

42,11/28/2017,8:12,ddad6b94-c5c8-49a8-abca-505cdaf62332,101.21.101.124,234.107.46.72,Matsoft

43,2/23/2018,5:02,0963372f-b583-41d4-b2f5-f5db91a7ef64,155.149.83.56,209.206.127.59,Veribet

44,1/1/2018,15:22,9610cbed-14e5-4c69-a742-c224b9862e00,37.20.231.133,200.159.90.16,Cardguard

45,10/13/2017,14:39,eff31a8d-2942-4b9f-8a0f-4624466b61fa,52.252.121.31,137.195.3.132,Aerified

46,1/2/2018,13:48,a14aac0c-ff78-4b16-9fb6-dd3033a91131,18.91.250.133,89.124.11.172,Bigtax

47,6/8/2018,2:24,1896d637-bc27-4d49-b801-8a46a5a3b564,150.24.100.244,81.227.172.244,Y-Solowarm

48,10/26/2017,17:12,bca0aa24-5940-42b2-8993-0852ff510c29,141.1.48.101,214.87.149.212,Zontrax

49,12/21/2017,22:55,0ac743a4-88bd-4570-81e0-df46707a1b5c,124.224.230.100,238.250.63.80,Rank

50,12/23/2017,19:24,168770b9-3933-49c2-bde3-887207912b24,156.47.16.83,230.73.19.38,Treeflex

51,5/9/2018,17:38,83d8927c-ebdd-4219-ae11-e28db5faab31,102.120.152.228,174.180.251.72,Regrant

52,7/19/2018,3:19,8b991347-2be9-417b-8048-4396f9de115a,38.0.78.235,187.73.115.229,Ventosanzap

53,10/7/2017,0:24,8bd7db7e-4d36-4f41-b1a9-636d5a2eb1ef,8.253.72.127,82.88.214.98,Fixflex

54,5/7/2018,21:58,2b7337a4-e245-45c1-b15b-d182ddf7a446,199.15.196.199,150.132.238.116,Bitchip

55,11/23/2017,19:31,03ed4c11-783c-4208-8d85-71bc72838a74,71.84.168.61,184.203.62.40,Veribet

56,4/28/2018,7:21,c7acc412-7490-459d-9208-b9f4aa1c5c0a,126.35.238.179,0.51.223.13,Alphazap

57,3/29/2018,17:33,900a715e-96f2-470a-bbbf-76048fb8b77f,140.228.247.238,116.178.79.7,Tin

58,12/15/2017,19:15,296ff789-5b10-416a-acef-318dc8f666f2,226.217.207.110,171.94.142.208,Kanlam

59,6/11/2018,6:58,6037a7ae-df3d-489d-b5d1-a7faca916073,212.241.212.170,206.146.52.55,Latlux

60,8/23/2018,19:20,e46a4e65-79b8-457e-9860-008e49cc599c,99.46.226.254,67.81.66.1,Ventosanzap

61,8/17/2018,15:13,52c11e8a-ab45-4e95-b0b5-7d27b700c48f,44.195.55.31,211.84.161.160,Zoolab

62,11/26/2017,11:46,e72f2af8-cc39-4356-a463-671b8ed8bcff,25.92.147.157,44.140.226.157,Sub-Ex

63,8/28/2018,18:42,51daa082-308d-46a2-962a-b29ade9d8d17,134.90.134.103,105.215.16.119,Tempsoft

64,6/27/2018,21:36,a7f8e970-5b16-4175-b99b-df6ecf415d1d,191.31.234.129,109.226.93.112,Tres-Zap

65,7/18/2018,15:48,2267ece0-1f6d-4395-b728-fcb63294f7c6,54.28.24.238,227.112.143.253,Y-find

66,10/24/2018,0:17,f0d8a4f6-82d2-485a-a148-95460143fdf7,117.37.136.20,204.241.25.107,Cardify

67,12/27/2017,2:07,d9bdb997-7720-428a-96ea-945968634c4a,48.148.4.245,251.90.120.127,Sub-Ex

68,6/15/2018,1:20,55bc6e1f-5395-4c8c-ad4c-2d912175f643,211.41.36.194,21.56.236.232,Cardguard

69,9/6/2018,13:43,290a7c64-327b-4455-8141-1c172b576098,250.252.31.201,95.252.30.224,Redhold

70,1/19/2018,13:25,9781f97e-78ad-4a06-aeba-92d1f92d798c,94.253.197.169,29.246.50.135,Cardguard

71,1/11/2018,23:42,ac4e7281-de0f-46f7-a5bb-e44947d7fca3,103.223.119.34,21.238.189.98,Stronghold

72,3/31/2018,14:47,0aeb36d0-dbd0-4267-a142-21065473a3d7,143.89.28.63,111.150.218.124,Lotstring

73,3/12/2018,15:02,3140d07e-9db5-4e80-a1b5-6f11f2bfb4d3,80.48.160.166,170.89.37.90,Andalax

74,1/30/2018,17:00,0e912b71-5ef9-4435-9885-405cfe4afb36,81.218.97.44,46.42.61.99,Viva

75,5/13/2018,5:57,7ededbe9-c06f-4dbb-baf1-7242703f1a61,224.184.137.64,96.79.149.56,Opela

76,9/23/2018,21:55,703e3fea-1ece-4ef5-98ed-ea75658ac85f,192.229.153.21,1.207.209.27,Y-Solowarm

77,4/21/2018,1:35,f92db754-9898-4892-8317-b5c729094f04,171.218.41.176,39.123.55.10,Duobam

78,10/14/2018,10:46,95ed36a1-0664-4e4b-b7b2-e667ff84d365,25.90.242.94,141.119.146.29,Fintone

79,6/13/2018,18:08,a763008a-1d87-4be7-9ce2-aeecd4fa6c96,122.146.189.181,111.183.241.129,Regrant

80,12/2/2017,21:12,dd951713-31ff-4979-a7e7-3d885fe107bf,151.251.152.121,102.191.159.182,Regrant

81,5/23/2018,12:14,d963c509-5bc1-495b-a5c3-0c06876a49a0,44.186.148.218,50.220.247.240,Veribet

82,10/27/2017,1:06,dbc9b56e-bd77-4c40-90e4-d8939bd70f06,147.70.41.96,147.73.163.219,Bytecard

83,12/8/2017,8:36,fdd77368-9ec4-4adb-9c30-27ea35c9921c,25.84.90.75,252.15.3.183,Greenlam

84,2/10/2018,8:02,cdbc1785-294f-437c-9b0b-4e3a23574003,161.169.248.79,112.152.50.119,Redhold

85,8/27/2018,11:40,e28c6cec-525d-49cc-9960-f36773b71899,253.14.95.138,43.252.156.5,Bitchip

86,10/15/2017,7:29,773020e3-e501-4f44-b645-f48d3a870c06,170.143.86.114,221.96.158.107,Bigtax

87,7/11/2018,20:38,47a868ad-d264-4600-9ae3-bc726aba33cb,34.38.157.106,78.216.31.16,Solarbreeze

88,7/25/2018,8:23,418c7837-8511-4005-91a0-ecb1a305bc2a,185.239.181.119,213.56.32.1,Zathin

89,6/8/2018,7:06,9415d1c4-b821-4afa-8e59-72d5ce2e1ec0,227.131.149.25,203.122.150.8,Asoka

90,3/1/2018,2:43,263e689f-671c-4385-88b6-77479c5a905c,4.240.204.191,48.105.128.192,Overhold

91,1/31/2018,10:34,169522d6-1d62-4986-9d6c-89155a7cec38,229.141.65.124,178.60.253.94,Holdlamis

92,11/28/2017,6:32,ac5e8061-2a2d-48b1-ab1a-f3c63d8b4a90,155.14.247.251,203.228.183.148,Stringtough

93,12/24/2017,2:34,3dc0cc3f-95f4-4dbd-82f9-b891d7c900f6,88.131.197.5,223.60.102.11,Temp

94,7/8/2018,13:47,bba331ac-6bf9-4f9a-a638-936cbc46ef07,63.143.250.154,117.102.62.65,Redhold

95,12/14/2017,22:54,2ae48b9c-e5fb-4d88-8271-d9fcad65d9db,192.175.242.183,171.42.116.122,Bitwolf

96,10/1/2018,7:14,d5c13a4c-f8ee-4f1d-8491-8ee06342bf35,152.242.18.39,53.63.137.55,Duobam

97,2/11/2018,22:50,368da884-82b1-422c-a8ad-3e706d6c352b,56.196.99.77,29.124.101.139,Tresom

98,10/11/2018,14:10,f4e91b49-e9ed-4f68-ae78-32dcc7041390,85.105.54.201,42.28.93.183,Treeflex

99,10/11/2018,8:38,a881cb66-619e-4208-a37f-8be92c34cc17,198.15.225.66,140.186.190.29,Y-find

100,9/6/2018,9:38,97d87472-84ea-450c-bd22-06c34122ba54,165.193.100.176,204.7.185.150,Asoka

101,7/28/2018,13:08,6d6c1a8b-30bc-44c7-94ce-72b3625a72c0,171.117.220.80,85.157.194.63,Ventosanzap

102,10/25/2018,3:52,1455d1f4-0d6a-41bc-b6e2-2bd00dfd79f5,56.164.213.39,194.218.185.51,Bigtax

103,1/7/2018,6:37,8b19f201-528e-4a94-a328-27789f965149,14.105.155.22,199.108.108.152,Bamity

104,11/8/2017,0:30,e9f54eaa-b59a-4e90-a062-c8fc695d900d,193.4.171.203,55.126.99.98,Treeflex

105,8/7/2018,10:06,6f0ba07f-5308-4698-b5dc-72452c756012,236.203.140.239,149.64.29.243,Ventosanzap

106,5/7/2018,19:37,50938c35-1285-4ef8-ae06-fb6234219e1b,231.27.98.18,112.137.231.24,Home Ing

107,8/12/2018,20:58,c58d0088-6e16-4972-97bd-f00fea80c494,162.156.90.66,1.226.76.49,Gembucket

108,7/19/2018,8:22,3e037d4c-15e0-4b76-a68c-88935eeb7aea,251.42.126.121,192.110.197.53,Asoka

109,10/18/2018,18:47,86cc9595-c852-411c-9174-ab028185fb47,203.115.146.213,103.239.238.183,Regrant

110,8/4/2018,22:01,e3c84025-ee81-466a-b9db-949309a1e633,34.208.243.55,127.55.221.130,Mat Lam Tam

111,11/16/2017,14:07,bf87c3f6-78a2-4fba-b8db-b2cfe3e36f86,242.198.18.21,77.60.173.221,Lotlux

112,10/25/2018,15:32,6c406adc-197c-4ca7-b62a-5449977e5dd8,86.204.47.125,212.192.229.255,Stim

113,4/30/2018,14:08,8ea345f4-0bcf-484b-a83f-f5af0d027fa3,25.105.254.65,219.29.26.67,Subin

114,2/23/2018,6:18,473e50ed-13cc-4b6c-9658-21e5b82d9300,94.28.70.70,243.205.48.94,Tin

115,6/12/2018,21:05,37b1f7c2-030a-4f83-8429-a58b6ddb3575,201.212.156.41,169.244.172.122,Zontrax

116,11/26/2017,16:40,31f587e8-daae-44f7-bed2-9c53b6ebaa00,141.72.101.242,35.20.0.167,Cardify

117,7/13/2018,15:43,98115be1-7da7-4b18-ae1b-7232e4ce2c2e,112.116.179.227,212.202.61.141,Fixflex

118,3/31/2018,7:03,0a1170d9-b48f-4d27-930f-9887b011a064,237.115.43.201,31.193.195.36,Tres-Zap

119,10/2/2017,0:28,7bd02228-ba30-4d14-881f-c081d543e2ef,135.154.0.183,167.230.86.152,Pannier

120,5/22/2018,18:11,d1725895-2f56-469c-9165-4f79385b887f,220.252.81.137,227.185.62.161,Voltsillam

121,1/16/2018,14:45,45cb4853-a375-4783-a799-349114ef5de1,124.43.199.166,156.112.235.79,Zontrax

122,5/15/2018,10:31,459ea39f-6981-4a9f-8d1b-b8f9da12e0d6,213.244.144.70,232.36.50.128,Bitchip

123,10/6/2017,11:48,0207b462-3451-418b-aa57-4e10b66b6552,104.217.5.54,23.5.26.56,Y-Solowarm

124,10/22/2018,13:22,91ebf411-ae6a-43d2-89be-50787f10df86,217.101.201.176,211.74.194.81,Konklux

125,5/30/2018,21:34,bb2e20ab-a601-460e-945a-2dd24beb3785,227.187.108.133,42.78.225.230,Zathin

126,5/27/2018,9:15,6416271d-429f-4f72-a39b-55da7486c1eb,222.59.61.127,122.224.197.213,Zathin

127,3/22/2018,15:09,bad4c34b-a66a-444b-8866-25d25235a372,217.187.208.201,106.168.213.95,Cardify

128,4/7/2018,21:23,b4ec1e60-2ad7-4f01-a23e-8dc85fda0551,65.32.206.114,27.137.7.42,Zontrax

129,11/3/2017,22:32,a7d79619-ea5c-4284-8f0d-d7945addd2f8,15.121.168.158,102.178.97.10,Sub-Ex

130,9/9/2018,11:29,f6969107-b641-4a08-b5c9-51e290e9b436,35.247.115.234,7.91.15.103,Job

131,6/3/2018,21:49,053beae1-8f2b-4bd9-887f-d5531e40d042,241.102.48.179,133.218.199.41,It

132,4/23/2018,5:59,24850aba-5837-4b18-bc26-910c426e76df,199.198.5.234,28.247.147.246,Lotlux

133,10/21/2017,1:32,4a9bd9e4-af7e-4141-b807-54fb223d0738,40.25.69.75,240.55.180.125,Wrapsafe

134,7/3/2018,16:55,82ca0c3e-7fbc-41d4-a63d-f5b441d34491,53.59.127.129,102.183.11.218,Zoolab

135,5/6/2018,14:41,3025d1e5-896f-404b-a2f7-1890eb998bff,95.21.68.78,184.71.119.135,Mat Lam Tam

136,11/28/2017,5:08,ee9dbd0c-4291-4c39-8cda-e760f5517371,182.65.11.157,83.121.65.126,Job

137,10/19/2018,6:23,9bdc9e0c-4428-454b-b36e-88cf71311aac,237.113.238.178,96.144.8.214,Biodex

138,9/1/2018,3:11,217dcec5-2b5e-4be3-a4f6-4539915203c9,184.106.251.76,80.255.170.1,Bigtax

139,12/27/2017,23:46,79357c9a-7cf6-4752-a0fb-465df9077d6d,59.111.68.32,132.223.200.49,Redhold

140,2/13/2018,18:07,26aafe92-a6b0-42ce-9d13-e7b9c5f9d2e8,212.116.96.151,11.226.115.251,Konklab

141,3/14/2018,7:18,e91f510d-0f5d-4ed9-bcb1-c4e9dc02ff56,141.217.79.91,124.81.26.133,Stringtough

142,6/25/2018,1:06,0583c58d-27a7-45e1-8b0e-c89b31743738,234.39.214.221,238.170.243.26,Tempsoft

143,10/18/2018,1:27,2b33e5c8-0594-4895-90f1-94c8bcbf6ae4,113.124.104.238,252.174.136.66,Otcom

144,7/16/2018,19:20,eb05bfc4-9227-4683-a427-979063e20634,193.184.110.236,97.253.186.88,Subin

145,4/20/2018,16:28,77b8ae10-0fd9-4a2f-8b9e-4b9b84ade131,234.221.22.3,235.28.137.139,Holdlamis

146,1/1/2018,3:49,dc78c943-bdd0-4aa8-860c-f71c6ae64b5e,195.54.238.112,105.148.134.102,Andalax

147,8/16/2018,17:16,b89c686d-b6f1-4121-a3ca-ae6829293b5e,220.80.106.255,14.41.179.72,Greenlam

148,10/9/2017,11:43,0ad79c16-33e9-47d7-a3aa-75c19de7f26d,32.255.206.52,25.196.125.173,Flexidy

149,12/9/2017,3:11,6a6065b6-f480-4d51-b356-41e48e92adfa,184.127.106.30,52.121.56.176,Tin

150,11/22/2017,2:17,765b7ff2-0834-43c7-8647-7f0894217a93,39.128.149.70,111.8.169.145,Ronstring

151,8/7/2018,13:55,78cba361-ddcd-4b0f-9ab6-33a58d65b4aa,101.188.108.89,21.89.207.83,Trippledex

152,2/21/2018,14:49,aa8ffeb2-8d50-468a-93da-c0de3b675f68,188.238.208.25,214.69.87.114,Tresom

153,12/8/2017,13:09,ba0e78ed-6fad-4c2e-a67a-ad95ddf56db4,8.109.31.147,99.95.109.248,Tampflex

154,6/17/2018,7:25,8f6e86b4-95a9-4c03-8f2e-bdb92b082663,137.5.174.180,76.151.57.148,Voltsillam

155,8/4/2018,15:46,4becd2f7-ecb7-4590-9243-3f1840eb6949,183.83.212.201,252.245.181.198,Trippledex

156,11/14/2017,5:02,cc0e08dd-c7dd-4455-9e9c-cb3455f25391,170.152.44.49,241.14.74.130,Transcof

157,7/20/2018,20:44,74d3dc40-35d1-4230-88ce-089e7d87449f,7.47.125.183,10.127.43.169,Veribet

158,10/21/2017,20:26,78100f77-e26f-4317-a745-1513d876424d,34.166.201.83,179.90.130.39,Tempsoft

159,3/21/2018,3:23,f289b727-bc14-4118-ab00-a91eb19b7f0f,77.226.128.159,64.39.95.54,Duobam

160,10/8/2017,15:06,843eeb19-6a05-4019-bbf8-17e852b0bb33,17.18.153.27,109.238.89.42,Quo Lux

161,8/22/2018,21:50,65894008-6b4e-4815-ab0f-bd8399c86dda,153.193.198.29,38.42.31.35,Quo Lux

162,12/2/2017,19:40,00d74ac0-3da6-48b7-acd0-2620a27c5a48,243.224.135.148,99.158.204.102,Sub-Ex

163,7/25/2018,3:41,8f024ccc-25d0-4220-9796-b9589fb743b0,166.79.89.62,14.247.10.93,Sonsing

164,7/6/2018,9:33,c9024d5f-644a-47d8-b626-e5d50dcce248,246.118.142.77,147.244.20.77,Latlux

165,7/25/2018,15:53,22f8308e-e81f-47ec-a492-73fa46de07b3,146.124.72.13,213.13.178.141,Alpha

166,12/24/2017,8:43,51b6fd0c-ccfe-4e84-9207-d6f278eacd79,240.47.116.155,108.68.203.184,Cardify

167,5/27/2018,6:46,2a5396a5-e8a9-47a8-9b61-d14ff146713f,5.95.195.21,110.148.226.230,Bitwolf

168,7/6/2018,14:39,e89191b3-ad35-4103-bf71-8d42d08e4e26,43.86.242.207,15.225.62.35,Tres-Zap

169,4/5/2018,0:42,54c34e58-e2d0-4445-92c5-3bbffc2a9c30,57.12.210.118,25.116.51.166,Zaam-Dox

170,2/16/2018,9:20,d8baef66-7cb5-473f-b1ad-4472727b9f9d,17.218.246.254,161.165.208.200,Treeflex

171,5/23/2018,1:25,cc594af0-add0-470a-8510-d1a2b359168e,110.110.255.30,98.144.196.212,Treeflex

172,9/22/2018,5:56,462a316a-6097-40c9-b981-20d31c7a5a0c,47.93.206.95,219.106.197.48,Andalax

173,12/20/2017,7:36,8bb95161-cb0d-4cc6-84f0-90930b72cfcd,244.141.30.73,234.113.249.160,Viva

174,3/8/2018,4:45,8706289c-a080-433f-a822-f308b2e4061a,15.40.244.54,161.40.240.172,Span

175,6/28/2018,12:39,0873f2e9-8e8b-4e32-8125-ef28e5cf9bf6,91.113.224.147,137.249.156.3,Voltsillam

176,9/23/2018,2:13,95a2c8e3-ac1f-4b6a-a558-c400fcb58160,87.155.251.229,100.16.2.220,Hatity

177,12/10/2017,16:14,46a9ca5e-cba4-4794-9b62-251ce6f26218,100.164.14.11,232.197.42.38,Cardguard

178,11/25/2017,0:10,b9695949-042f-4391-8ea4-fe64e6090c86,219.224.42.202,239.141.39.103,Bamity

179,6/16/2018,15:51,8e237828-bb33-4dda-8916-f6daa6d15709,126.186.49.199,51.95.222.6,Stronghold

180,11/14/2017,10:56,bc223509-7163-4df8-b596-6e8c8431ff4d,38.17.99.97,217.146.56.139,Domainer

181,12/31/2017,19:07,ab4dee6a-67df-4e42-9ae3-ee12e203af28,87.10.120.66,93.86.41.0,Fintone

182,5/17/2018,22:21,c06c5e32-b7c5-4d7b-b43b-8304d97e4601,153.239.99.181,239.108.8.155,Konklab

183,1/9/2018,10:35,4f0571db-9333-45cb-851b-5996c8303370,2.110.64.80,110.160.112.125,Bigtax

184,10/11/2018,13:39,8cf36f7a-8342-4ca2-bc58-6abc94d8cc5f,169.94.246.143,117.182.133.105,Hatity

185,12/25/2017,15:00,e3dbbe95-2390-4344-9bb8-b289d0a02c9b,107.248.191.155,58.186.45.243,Zontrax

186,10/28/2018,21:39,0e83a23c-da1f-4e7b-b68c-ff9588f16a55,55.95.51.38,57.142.121.81,Redhold

187,3/28/2018,13:17,37f7ef6b-b4ef-4e43-aaf9-899c0acd7d89,64.5.59.101,189.85.164.110,Bytecard

188,4/5/2018,16:12,1348d73b-0050-478b-9800-cb1171c2ce56,83.206.48.154,32.152.230.214,Lotstring

189,3/12/2018,13:47,cb3de50e-1f47-444f-a0a2-d49db0ecbc7c,254.108.162.133,89.132.82.29,Cardify

190,9/27/2018,18:21,6058c749-3695-4298-a7e4-bbe6c9274254,152.109.136.236,228.20.77.117,It

191,8/2/2018,9:40,83fbea99-a7bb-407f-80c2-e44e400b3fd0,85.86.153.15,206.214.127.221,Wrapsafe

192,11/6/2017,3:08,0bf92182-1f59-40d2-8cb8-bf55f6e95ab8,131.78.230.183,104.62.97.221,Zamit

193,10/17/2017,10:07,e7e54895-77c6-4f99-81bb-4b9c21f29ce4,43.34.201.182,82.192.116.57,Rank

194,5/5/2018,17:58,bc321c25-389f-4eb4-b23b-c2eee8239aee,137.57.154.147,250.136.242.163,Home Ing

195,4/5/2018,1:26,4d204d97-be07-48de-81ee-1de376ef929e,115.97.174.195,209.198.0.208,Ventosanzap

196,10/29/2017,20:52,2c44193e-0ea1-48f8-8ea9-5827d934375e,117.44.191.183,104.77.192.21,Zoolab

197,8/5/2018,9:50,40f83f26-2aeb-4a37-aa0f-b9b1ea09fcab,80.18.164.60,124.155.7.128,Home Ing

198,3/29/2018,10:44,a61f2073-936f-45d7-8cac-f3d22ce33478,163.202.22.5,185.57.186.177,Redhold

199,11/22/2017,19:30,914de123-cde1-48c5-b140-346be2c3cc55,54.51.22.140,186.181.223.34,Treeflex

200,4/29/2018,11:07,2c0ef936-ee46-4573-b6b8-b1439f68c185,34.78.254.181,226.16.24.51,Opela

201,9/15/2018,5:53,d39fd7e4-97f5-49f5-9579-813aaee20fce,177.63.198.175,59.90.177.23,Wrapsafe

202,7/10/2018,17:35,ca991087-486e-4cb5-8cc8-044291778837,189.72.169.222,127.43.200.131,Pannier

203,4/16/2018,12:45,0ba20de7-bb3a-44bc-8202-a0fe9a98c58a,197.193.79.141,19.245.205.192,Tampflex

204,3/22/2018,6:30,0392bda2-c40a-46de-8c8b-54f554cb3b44,156.164.0.177,175.181.143.66,Toughjoyfax

205,4/21/2018,18:58,c944fbc5-19e4-4d46-a2f3-fc6e4992efee,59.31.209.62,74.205.130.71,Fintone

206,9/23/2018,10:38,9da18fb8-125c-47a5-a93e-cf56bdc88b3d,135.58.69.93,155.125.122.254,Lotstring

207,8/23/2018,17:01,098a7fc7-bb98-4532-b3fd-3390bec6bb7f,127.152.118.167,175.29.241.181,Job

208,7/7/2018,5:38,5c482d69-2cd4-44e0-9a36-d46c16657c43,201.91.20.214,205.158.19.116,Sonair

209,8/22/2018,20:48,bc3b2e1e-de3a-4dbc-8ff9-4de36f9d0db2,58.236.64.247,65.35.176.83,Konklab

210,2/26/2018,19:53,b91daa3f-2e6d-4117-bb65-0e19bd50b225,116.237.190.171,218.174.241.6,Temp

211,10/29/2018,11:20,92f306ab-71bb-484f-bf54-70eaba4e9d25,137.139.155.143,246.218.193.153,Flexidy

212,9/16/2018,2:39,14274166-fb89-4a8e-b0f6-646a3a53ece8,121.242.119.210,193.104.112.90,Ronstring

213,5/16/2018,19:27,c68dc8c3-d2c7-4432-a0e6-aede0aa9867e,166.74.204.52,218.223.78.56,Biodex

214,12/22/2017,1:48,a9473cff-6a1d-4059-8527-8cc414cd45c6,157.4.88.142,101.251.125.94,Sub-Ex

215,12/13/2017,4:15,cc7ce72c-c95c-44d0-a72a-92d4ef6eef73,104.234.25.80,30.36.99.85,Rank

216,10/17/2017,13:48,74d3fc0b-5b8d-403a-8bd0-84c83918693d,57.238.249.30,97.71.100.77,Zontrax

217,10/15/2018,15:51,25095d5e-629d-4d2f-b4f5-57060807f888,32.79.137.153,103.24.141.160,Duobam

218,5/15/2018,4:52,19c13a1e-2cbb-4801-bd3a-6ba91e02392e,81.28.79.27,39.49.68.167,Duobam

219,8/5/2018,4:36,6841c126-a7bf-4672-81e0-9aa4ce08368d,218.98.245.180,236.244.145.163,Span

220,9/7/2018,16:37,0debfbf5-076d-4fcf-a9b4-0033e10b075d,246.62.203.25,66.9.95.235,Tres-Zap

221,8/28/2018,13:04,960b8811-b3db-4796-9155-87cf9d34bf30,231.251.130.148,254.23.167.81,Rank

222,9/6/2018,3:21,1f516c2e-ba0b-4e42-93cc-469a0e59fc17,113.182.133.235,208.151.158.70,Cardguard

223,7/4/2018,18:31,44b436c6-d5ee-449b-9d0a-b0060a9adfc6,195.82.10.79,242.51.114.24,Voltsillam

224,10/1/2017,20:15,01ac9527-98a3-4527-9faa-f2a1fbcf3903,188.98.228.136,4.198.29.75,Kanlam

225,12/2/2017,16:07,f705d062-8ea7-4d1f-b434-fd87083546e4,151.180.115.123,225.142.116.180,Cardguard

226,4/30/2018,10:10,ed13639c-57b8-42d6-b590-97e359a05fb7,218.27.72.171,206.47.104.82,Y-Solowarm

227,8/4/2018,3:02,f560d268-73b7-48cf-8445-e6274fc6733c,239.106.173.192,161.49.189.160,Trippledex

228,9/24/2018,20:40,a03f325f-df50-4cd4-9ad5-9797dbb03275,224.137.237.195,125.149.130.91,Job

229,12/12/2017,21:16,325b304f-4939-4435-bf92-c2e18a74dc83,239.158.92.73,103.81.197.155,Cardguard

230,1/27/2018,18:26,34a58e28-07b1-4488-9a4f-0685ba2ad344,201.172.61.8,96.40.59.226,Subin

231,10/23/2017,13:41,176c906b-6ca2-47cf-9598-d6999eb3e122,238.200.134.8,155.25.15.204,Tresom

232,1/6/2018,21:30,96a281f5-e128-4660-aaac-f25afc496145,31.20.180.181,20.35.89.153,Cookley

233,9/27/2018,19:34,58b1c3e4-f634-46eb-b907-23b8406f013e,36.164.202.250,249.255.99.45,Namfix

234,6/14/2018,13:32,e2327b7d-fa2f-4e3f-b3a9-fbab8b598038,14.37.131.198,51.43.52.13,Span

235,10/30/2018,1:43,0d07bd12-7d49-4572-8ae0-06e021dd447b,142.24.52.237,7.208.87.9,Stronghold

236,10/28/2018,5:29,f280e9b5-d39a-4390-9c30-4fb8b2404fa7,31.88.186.151,131.191.170.7,Zathin

237,9/30/2018,9:44,96943b56-8250-4486-8e25-1947a527886e,22.221.1.143,126.83.14.128,Y-Solowarm

238,6/24/2018,14:20,71eb2943-c986-4b40-89b7-f0ecdc61c64a,170.242.55.129,175.175.54.106,Cookley

239,12/30/2017,11:42,227d04d8-30c3-4b30-84ab-80911a5c8c1d,5.252.108.10,159.29.69.112,Cardify

240,3/14/2018,17:56,34214faa-bae5-4378-8c00-b0d936197bac,217.183.171.86,140.229.108.193,Greenlam

241,5/25/2018,7:06,42562049-fe7e-48a0-885a-488ed997a805,237.150.248.213,185.220.242.148,Flexidy

242,12/15/2017,19:06,32daa691-22d3-4ef4-9545-12205a832279,69.87.13.101,116.245.47.109,Stringtough

243,4/18/2018,15:46,e7b9aed2-df17-4cba-a15f-4096f86b31da,148.7.93.196,156.41.77.240,Pannier

244,11/14/2017,0:10,698e46d6-dc87-4a84-8e52-7d72b1ef0c73,155.160.129.109,71.197.128.139,Lotlux

245,12/9/2017,9:56,49a2a01b-ec68-44a8-873c-10b66b928867,241.59.181.154,58.215.239.180,Veribet

246,4/16/2018,1:28,e73f80bb-b090-4591-bdf2-dc23a6e43ad1,150.42.94.199,175.126.65.179,Flexidy

247,10/25/2017,12:09,e78a59ab-9dd5-4009-8095-1b21eeafd87c,4.69.58.153,223.226.253.19,Cookley

248,12/8/2017,10:27,f650475a-881d-4871-9ac8-f1fc897831d4,185.230.227.100,214.98.110.57,Tampflex

249,12/24/2017,11:20,523086e5-ee02-4db5-abb4-8acb5a5cfed4,102.243.63.246,25.242.66.214,Duobam

250,2/21/2018,9:28,de54ff9a-6818-49e4-a776-a6bba5b3708e,197.43.103.63,172.46.49.148,Bytecard

251,8/13/2018,2:40,f5464f40-f0dd-4a55-8c1b-131e5ae16838,26.222.211.175,25.20.16.167,Fix San

252,7/18/2018,1:25,0ecc72ed-7164-432c-98fe-ce560e549839,2.101.24.94,220.211.123.187,Subin

253,8/26/2018,23:32,f5fbe33a-5c2e-49e4-b745-83697fb02859,150.77.163.1,107.79.83.191,Matsoft

254,10/10/2018,12:23,f04048ff-a988-44a2-a299-36e791700ec8,166.143.68.85,58.212.232.15,Biodex

255,9/22/2018,22:30,60df66d1-42c3-495f-9484-767a8297c008,166.43.87.22,235.111.18.137,Voyatouch

256,5/10/2018,8:24,cfed3cf3-615d-430c-8462-affe51496a17,70.138.252.4,211.142.151.122,Otcom

257,7/24/2018,20:04,8b724ea8-dfac-48b9-86c5-295c4ce0cf49,208.160.88.27,89.68.43.49,Voyatouch

258,8/27/2018,7:30,bffd1182-af7f-4e6d-9c24-009587bc1580,96.91.64.74,224.119.205.99,Pannier

259,1/20/2018,4:36,9e1c42c9-18d2-480b-98d2-e7fa0f253a97,43.167.166.246,126.4.25.218,Bamity

260,1/5/2018,22:01,33c5072c-a49d-4a23-924e-7c81ea44155f,70.100.57.100,152.41.206.110,Cardguard

261,10/15/2018,19:58,85f87752-a8c2-4f30-866f-151bc334ff24,158.35.140.116,154.148.248.163,Ronstring

262,11/10/2017,5:04,43571845-9033-4e9a-bcdb-c611272e8494,129.91.160.178,72.146.168.76,Bamity

263,4/4/2018,18:29,0158bcb2-b388-4dc0-b02b-fc30433669e1,231.23.191.183,39.246.130.167,Alphazap

264,10/11/2018,6:03,8499a22d-f542-40ab-9032-e89d553cfd39,207.118.108.11,193.219.177.124,Kanlam

265,3/6/2018,20:34,a1ce3f9c-01c1-46b1-92ee-f5595ec0480f,147.129.137.16,45.194.146.254,Gembucket

266,1/11/2018,3:04,d6be6f0b-19b0-444f-aebb-4597f826ff54,86.119.78.82,68.155.208.57,Solarbreeze

267,10/7/2018,3:52,6c360994-6592-4593-9818-94337e245231,108.37.141.193,52.160.52.225,Overhold

268,1/25/2018,22:57,8467602f-b884-4188-957d-27811cc2c04e,138.104.66.221,18.124.214.101,Tempsoft

269,6/23/2018,12:31,19058a43-8bf9-4637-8503-44987c81714f,141.29.192.22,16.52.156.70,Tempsoft

270,9/29/2018,9:30,7067d360-8b7e-4c21-8a0a-b455b9eaa69f,2.183.121.48,117.93.127.186,Vagram

271,3/28/2018,4:17,23d07958-e5fe-4fd6-a25d-285626d7f073,21.211.214.35,131.184.197.4,Quo Lux

272,11/26/2017,13:15,55d60a89-a944-4745-a6b0-d9490d1c3ef6,240.59.100.221,247.243.155.185,Alphazap

273,8/5/2018,20:16,405367db-d5d3-4aeb-80f6-64b5161ccca2,238.215.118.161,158.157.9.147,Cookley

274,6/26/2018,14:55,462402fa-5b74-4d20-954d-79c63a9609d2,35.181.96.172,162.50.227.183,Voyatouch

275,10/10/2018,0:23,fcb612af-0601-49a4-83ad-ec7f4d984292,229.205.133.43,90.72.84.72,Trippledex

276,5/30/2018,12:34,f80335c2-8aef-4dd6-99ea-0549fa0f3e76,25.232.8.76,211.184.201.165,Tresom

277,12/22/2017,15:46,e629ac44-a62d-47d4-a5a4-28d8fa64f827,233.208.25.137,203.130.73.198,Lotstring

278,6/20/2018,1:30,51696a07-017b-4180-8dcc-371f889f6604,11.135.18.35,168.56.122.142,Cardguard

279,4/5/2018,7:56,a1da43e2-ed9d-420b-8625-cdf6a2ab41bb,46.207.178.21,125.84.229.113,Job

280,6/27/2018,1:02,32427042-a64d-4554-a0f0-60b0307036ac,140.193.81.134,216.247.114.46,Temp

281,2/24/2018,5:44,be851bc1-5d5e-4d5b-87e6-4da6bf29c7d0,247.93.163.160,80.216.54.40,Voyatouch

282,9/2/2018,3:49,5b76efc4-4d04-469b-ad36-ec9a16d7ba10,80.9.215.221,81.0.227.94,Vagram

283,12/22/2017,5:32,99db40a9-f65b-4c0c-a046-0b10d4625209,227.216.234.80,40.212.57.42,Cardify

284,1/31/2018,3:41,d8a17ba3-70eb-4600-98ee-bb64681ba07c,154.15.14.189,132.242.42.13,Trippledex

285,10/25/2017,13:48,f2924a63-5b4b-49e6-8c22-275bd241b90a,147.202.35.88,239.254.189.5,Bigtax

286,2/28/2018,21:51,96027ba7-7b39-437e-bf11-f510b4bc1b5b,101.167.219.150,202.58.140.94,Tin

287,9/5/2018,22:07,04b63167-f249-4fc5-b5b2-41c37c8d98aa,80.223.171.49,170.252.11.196,Fix San

288,5/18/2018,5:53,d4f94982-9332-4afc-a561-9debedbbd657,2.173.12.163,67.170.92.148,Temp

289,8/9/2018,1:52,047cb186-fc2f-4b17-a87f-f85f2776fb94,89.56.2.31,108.201.188.254,Stronghold

290,4/11/2018,4:10,723b1976-0ada-4ba2-96f5-64eab7d7a6c9,83.8.72.185,94.173.181.220,Overhold

291,10/8/2017,6:58,b4d27f1c-49ff-4455-8edc-5cb81aa407fb,253.89.235.126,161.36.244.182,Job

292,10/6/2017,15:45,b8afb996-58b8-4a1c-97e4-18e2109cd8a5,23.60.103.108,118.223.242.144,Opela

293,1/6/2018,7:13,579857e3-ff4c-4a45-b48c-de316f3dd90b,19.91.236.110,52.173.110.145,Temp

294,10/24/2018,9:59,5b63200f-d42c-4373-b3cd-1abe55ba4ed6,204.39.94.169,60.190.205.39,Lotstring

295,2/9/2018,10:25,3196d2f2-deec-4515-8afd-b6800755d0aa,163.240.196.236,7.4.189.108,Quo Lux

296,8/18/2018,6:03,25e2d1a7-5b3d-4386-9f42-0978299e8d2f,63.235.23.53,4.20.62.55,Zoolab

297,12/14/2017,12:46,f13f929a-5b1d-4dca-b1e5-e9f27f709eb1,15.231.30.135,44.143.215.133,Vagram

298,8/19/2018,9:37,49506ac3-4d80-4673-a036-47263cc4ceae,21.57.177.251,17.70.244.86,Keylex

299,3/6/2018,10:16,d221fa32-9490-4061-946a-416a6c4356c5,97.252.92.83,69.79.187.234,Otcom

300,4/9/2018,1:36,a3d327f2-4eeb-4e88-bf06-d532fafb2318,118.7.167.180,167.195.223.75,Sonair

301,12/19/2017,20:51,b0ef4abb-817f-41a0-a6d4-39ea686d4126,21.118.47.148,214.10.138.134,Zathin

302,3/9/2018,7:16,87cf5a27-8e7d-4d0a-89e0-285d321af262,87.155.196.172,99.33.150.143,Regrant

303,5/19/2018,7:49,49e2eecf-2d1b-4ca7-90e1-1b8c67553195,221.8.4.82,165.8.198.157,Zontrax

304,11/15/2017,11:18,99a79801-f40e-4932-ac4f-a5952ebe4bfe,124.150.8.216,75.75.71.240,Subin

305,6/21/2018,7:04,4130bd95-f224-40a5-8b6c-002418184242,110.189.118.185,90.104.251.44,Mat Lam Tam

306,12/1/2017,5:12,0515b954-44bd-4551-8b83-7af420e88776,199.198.43.147,155.53.106.159,Zaam-Dox

307,1/11/2018,14:04,10b4a20d-3dc7-4860-85d9-f1fd6c2b665f,9.251.13.22,251.183.101.66,Matsoft

308,11/24/2017,23:24,2fec5dea-75d1-4bdb-9461-6d5d83c0faec,238.240.22.251,52.5.2.175,Lotlux

309,11/20/2017,18:14,d499a247-52c6-4b3e-add7-15d56bf11373,129.113.156.214,56.39.33.42,Zaam-Dox

310,9/2/2018,17:22,4af42402-fc3d-443f-9b32-e374ac7cc958,52.28.41.31,0.57.71.173,Bitwolf

311,2/27/2018,8:18,a53b06ee-1cd8-4fd6-b174-29591eb99d45,154.10.141.167,148.153.70.17,Andalax

312,10/14/2017,6:07,5d0ad2af-34b7-4dbb-ab53-4cdac9e05d0a,70.110.151.225,212.202.230.61,Stronghold

313,6/23/2018,12:58,7bb7d4b3-0607-42cf-b311-fe0f89fbfe51,21.128.178.165,221.59.225.199,Quo Lux

314,9/29/2018,6:16,4fcfcb09-204b-47b3-bd1f-a233b3665dc5,106.194.14.177,4.237.207.37,Otcom

315,9/16/2018,23:45,449a3dbc-f92e-4869-ae0b-ee05b0f750b9,198.243.80.21,200.14.248.86,Latlux

316,2/23/2018,9:08,58cb9eda-d0af-4062-adab-191e30e6048b,239.188.7.139,223.63.52.188,Viva

317,5/30/2018,1:32,43be237e-c8f4-4bd2-91f3-4d628738d9d0,177.29.245.27,53.25.196.61,Lotlux

318,8/30/2018,3:28,289b0ff3-a8f2-4883-8dae-351764c227e0,15.102.252.22,44.60.157.25,Stronghold

319,2/24/2018,17:10,bc490627-f0b5-451c-85af-cbf2906a641b,240.103.221.147,236.142.77.72,Span

320,7/26/2018,15:14,35592471-634d-489d-bc77-19edcbabd960,70.77.169.30,150.72.232.24,Andalax

321,6/22/2018,2:45,09d81d7f-efb7-406f-a984-1cea0142d7a9,205.79.186.86,92.74.79.16,Hatity

322,11/23/2017,3:43,1e0d916a-8950-4130-bbdf-ede9ec948f55,238.32.149.44,112.232.181.188,Rank

323,1/6/2018,6:00,9bb5db80-e751-44ef-8f44-022cb49a4a7e,189.34.136.55,215.189.145.144,Mat Lam Tam

324,11/2/2017,23:49,7172c006-48f2-4caa-8aab-6de202edd990,185.112.90.178,29.179.48.159,Holdlamis

325,12/10/2017,13:09,8d89820f-1f34-4169-988b-d099ffd4d85b,14.112.253.57,90.178.153.27,Biodex

326,2/26/2018,12:40,621201bc-b28f-4e43-8051-dc9359a416cc,210.245.84.9,22.100.233.53,Lotlux

327,12/24/2017,23:54,8e0cd302-2e6d-485f-972f-092d44bd18ca,254.63.19.156,32.164.159.10,Andalax

328,1/11/2018,9:03,5fb1f41c-35df-4375-a236-9352acca9238,149.106.205.41,75.113.173.236,Asoka

329,11/5/2017,8:04,b5897e1b-5299-406f-b800-d57a5e2ddb60,100.167.43.56,120.48.129.250,Voltsillam

330,3/30/2018,23:04,c939bb32-e36a-4b18-960c-0c3a598a1541,187.82.148.150,102.111.144.166,Cookley

331,4/9/2018,22:25,556b4b02-3d11-4ea6-9723-4b9d6e0b38b9,156.63.104.177,160.227.213.150,Bamity

332,1/19/2018,5:26,1feedb86-7940-485e-8aaa-b7700e53fe88,113.81.213.92,80.67.63.111,Opela

333,8/31/2018,13:01,87cd50cd-e62e-41c8-843b-2928b3d0d582,163.216.175.250,88.201.63.60,Bitwolf

334,7/25/2018,23:12,52cba144-d7af-403f-b030-bd116cc12948,243.176.254.91,253.196.83.132,Otcom

335,10/6/2017,19:46,1d724165-0c05-4fe1-9b34-73cb98eaa4d4,203.145.119.158,154.18.60.165,Keylex

336,9/28/2018,14:20,6f40f642-8874-4f30-85ae-dc6d70a82762,37.40.98.64,253.224.99.29,Tampflex

337,12/23/2017,6:47,58cdae84-1b21-4782-9155-b4b1c01f8359,4.174.17.229,194.210.145.191,Domainer

338,8/8/2018,9:48,10f8ba4c-83d5-4437-9b1e-7ab32d0b5da9,226.248.143.48,216.221.164.141,Kanlam

339,10/16/2017,7:16,83933c28-9bad-43a1-bfd0-15b77caf2363,141.19.180.217,47.187.140.156,Zathin

340,3/30/2018,18:08,0fe84282-560f-4711-9e77-be75e543ca76,124.156.78.188,102.104.8.7,Zoolab

341,1/5/2018,17:16,509c659d-c53d-46ee-a255-cdca0a9683a4,78.192.254.165,81.97.183.237,Namfix

342,12/17/2017,4:14,1e906431-9d6e-4fb1-b487-6067fd81761a,85.250.56.197,29.124.117.184,Stringtough

343,7/26/2018,4:02,3e92a3d9-5428-434b-adb3-58994dab152e,112.33.203.229,105.105.73.188,Ronstring

344,6/7/2018,14:09,60f00e81-fde5-4393-b6f5-6a80cc93ecf5,101.137.35.49,194.5.219.13,Cardguard

345,4/28/2018,18:24,e68ca68e-b74e-4375-b909-31204d27e6de,134.19.193.84,249.253.77.45,Zamit

346,10/25/2017,0:23,25e04e02-69d1-41d1-a069-feb8c084fb9f,72.74.202.81,38.56.206.189,Keylex

347,10/12/2018,4:14,1a8b5ec2-bbde-4a8c-a903-c6ffb8baaca9,130.48.69.24,129.233.112.21,Tresom

348,3/20/2018,1:11,51f56e09-cc19-43f2-b0cb-6c2b5329ec73,187.157.235.106,48.27.41.253,Zoolab

349,6/20/2018,8:17,3e84506f-8b6c-46d9-93af-a562ab0386f7,158.143.45.231,0.161.229.230,Bitchip

350,6/6/2018,16:09,02a22e0d-ac0c-4f06-a4e3-678a6b2bdbef,201.204.252.236,5.108.254.118,Alphazap

351,10/24/2018,18:09,d596fa81-7ece-4617-a457-2283751bf6b7,21.237.238.88,31.212.247.96,Hatity

352,10/20/2018,19:20,dfb94fe6-9025-4cae-87bd-ac5f29d7d299,99.184.77.16,196.65.241.224,Kanlam

353,10/22/2017,5:56,bb1d93dc-88dd-4f47-b16e-b3e31765f0e0,144.137.77.60,133.221.206.219,Solarbreeze

354,4/12/2018,11:02,56523b16-cfa0-4455-9d91-ed36410b1d85,175.69.164.42,35.83.118.110,Job

355,11/7/2017,15:26,d2642a34-d2f9-4933-8876-2071693c0f96,7.131.91.145,238.226.126.210,Flexidy

356,10/17/2018,0:18,b59dfb30-eb21-4878-a0c8-a1b1eeb2b799,205.24.243.21,244.232.52.242,Y-Solowarm

357,1/15/2018,10:44,352d2e56-636b-4739-8f38-9150ad08a63b,222.9.45.67,192.236.77.64,Trippledex

358,10/8/2017,3:50,18d35d83-bcc4-4ea6-8f03-d8c4f2de035e,206.31.233.43,143.52.168.172,Tin

359,4/18/2018,15:58,1afaa258-cbe8-467f-9f24-79f639f66919,177.18.67.142,196.207.225.156,Viva

360,10/10/2017,8:13,e21fc3d9-4fbb-47c3-9f9c-2ae476fd407f,170.228.160.104,76.154.161.64,Bigtax

361,8/8/2018,19:21,541eb3ed-6923-439d-ba7b-441574ac755f,145.143.71.181,17.82.177.0,Domainer

362,5/29/2018,3:36,f0076a5b-fde0-4b7e-9e6a-63bb84399fff,195.253.61.17,73.184.190.183,Trippledex

363,7/21/2018,11:39,abb5c12b-2b88-4174-a275-9e8b281822b7,228.106.241.186,238.76.117.88,Y-Solowarm

364,5/13/2018,14:47,e72d07d7-50b2-471e-8f5b-b171fd7f009f,13.230.4.249,228.149.10.156,Pannier

365,4/28/2018,13:09,d84ecf5c-8645-4d33-9674-560d874013b1,108.127.142.243,209.82.117.163,Subin

366,10/23/2018,18:51,df6d4bbb-809b-4876-8849-8d3ce356e5ec,70.208.148.191,53.48.252.22,Temp

367,4/10/2018,7:26,1fafb890-3335-4947-8896-430b26285782,180.104.33.235,126.134.66.101,Flowdesk

368,9/15/2018,8:54,3f278b58-f513-4e8d-b86e-7ad1e3b9bc5d,203.216.130.133,217.231.34.132,Konklab

369,7/19/2018,16:14,3d668bb6-7f99-489d-9b4e-2e8e7d161533,15.241.121.133,111.73.67.196,Alphazap

370,8/3/2018,12:59,39bbf389-382a-4378-a422-d9ba9b925929,167.39.64.212,103.244.119.109,Bytecard

371,2/28/2018,5:37,484527a1-cdd2-4493-81ca-6e6e767d55b4,95.201.240.167,220.178.29.108,Latlux

372,9/5/2018,1:19,6b8e636c-24d1-4782-a480-f5fb55318be6,15.37.115.61,144.125.187.92,Opela

373,1/5/2018,13:05,baf2e73c-ca4f-4713-bcfd-7d706c8a98d5,225.24.249.9,42.117.144.26,Bitchip

374,9/15/2018,21:33,7ec86b29-a3df-428a-9fb6-1b7608e071c9,128.80.195.57,80.12.125.31,Holdlamis

375,3/21/2018,21:14,f03db504-913d-438f-8133-7a479270fce0,17.153.142.150,200.13.243.106,Job

376,3/12/2018,17:15,3c82be38-1b3d-48f4-956a-04e8dfd66433,163.253.214.83,47.219.57.175,Y-Solowarm

377,10/30/2017,15:11,edfc8ee9-af20-4899-bb64-6227df1ea95d,102.129.161.28,52.208.201.217,Quo Lux

378,10/23/2017,18:12,ab5311fb-8c8e-44c9-8b09-1a1023bb6675,72.223.12.72,162.118.220.28,Domainer

379,10/11/2017,2:53,b8e97488-963d-45d2-a788-6ea9721a06da,192.142.132.236,140.207.168.33,Tampflex

380,10/29/2018,10:00,f2df7056-d924-45f2-95cb-fcfe72f7e468,205.23.192.141,15.73.69.234,Home Ing

381,7/10/2018,20:22,b4273cf0-199f-4d9a-91d8-0f38dc001524,126.59.37.70,195.94.115.39,Zamit

382,4/13/2018,16:39,4e779906-ce7a-42e0-9b27-237b2b7831ab,102.151.250.171,251.155.129.79,Bytecard

383,2/11/2018,22:58,67611663-3369-4773-af13-d0b145b332c9,41.148.236.95,231.93.153.72,Sonsing

384,1/8/2018,3:27,85d58d97-358a-48f8-94ec-a3656aecca25,137.141.235.134,10.105.102.36,Span

385,3/23/2018,16:48,26da8751-6328-4a44-a1ee-7e7026cf975f,121.173.142.31,237.240.107.229,Tampflex

386,4/10/2018,17:12,f435e756-ba5e-461e-b89a-26a0068dd764,0.70.79.115,93.188.21.253,Zaam-Dox

387,10/23/2018,1:37,6488aecb-4d93-451b-a03c-3ad5a63f343a,249.128.79.50,37.128.21.99,Transcof

388,6/26/2018,3:18,176b50a9-a1cf-4c33-a8a0-4358b050f6ea,173.34.87.173,76.21.101.10,Alphazap

389,1/27/2018,5:36,e8c3b1eb-c5b2-4067-9e72-0b73388bd1b3,122.85.31.12,122.197.93.251,Domainer

390,1/4/2018,15:35,a8b546f7-747a-4859-8783-31a43779d2d5,46.222.209.47,138.74.149.179,Voltsillam

391,4/28/2018,17:12,ad4582f2-356f-45a7-b5c6-caafa915261c,178.77.40.173,15.147.233.10,Zontrax

392,8/2/2018,23:07,78626fe5-eed1-4333-bab6-e61e93cfc391,114.225.92.140,45.61.211.42,Cookley

393,1/17/2018,22:23,9d2337e3-0684-4704-a6df-4a1f9f50f0fc,139.77.101.78,33.213.36.206,Pannier

394,10/21/2018,1:22,5fb5d6a8-ec94-46ac-9660-0fab507eeeb8,43.17.79.245,148.96.80.228,Overhold

395,6/26/2018,9:34,df8d9341-316d-43d7-ae29-c774f20536d2,217.248.128.46,94.67.17.172,Overhold

396,12/21/2017,22:46,5a3db57a-ca8f-49a1-b62f-bc1bd19516ab,68.77.63.61,170.236.146.207,Quo Lux

397,4/16/2018,18:41,bd390908-bf84-4dc0-a8ae-07d60dfceeef,110.66.5.163,245.150.50.243,Tin

398,10/8/2017,4:36,18bc28c3-65ea-4ea0-b025-f1514def1e78,1.11.42.229,254.229.145.167,Otcom

399,10/5/2017,16:56,06fa2ff0-018c-4846-91a4-cdf8a1c5877f,143.183.107.165,232.215.161.212,Alphazap

400,2/15/2018,3:23,1741b7b8-05fb-4b48-8839-03abc1265dcf,103.220.198.154,243.226.165.36,Toughjoyfax

401,1/6/2018,8:22,930ed695-4ab8-4a93-a95a-dd15edbdaeeb,44.163.23.255,157.37.249.204,Tempsoft

402,7/14/2018,23:10,483094cb-7602-4748-9d64-768f72eb3f5a,205.109.21.64,245.123.108.246,Domainer

403,8/14/2018,1:59,4fb06cbf-5884-4fde-aab7-dba25019603a,12.171.47.221,188.83.134.108,Mat Lam Tam

404,10/23/2017,3:02,b821adf3-2032-4342-ae0f-54278d219c10,197.17.153.232,213.18.114.209,Job

405,1/17/2018,4:12,a6a0967a-cbf2-48fb-a38a-94faeff50e24,187.3.240.101,249.126.124.237,Veribet

406,3/14/2018,14:09,faa07c25-925b-4748-bca5-747763f515ba,69.5.149.35,180.251.76.49,Treeflex

407,7/24/2018,13:19,b52de898-6e95-4fc7-ae08-0a97bf0a4730,58.200.173.160,2.49.158.177,Domainer

408,10/1/2017,20:43,4ef648b8-7324-49b4-8b0d-fa57b3d9bf9e,101.155.124.32,4.153.41.159,Voyatouch

409,5/8/2018,0:52,7dada472-a129-49fd-a06a-94c928b45e8b,43.80.201.103,157.216.116.47,Vagram

410,6/11/2018,7:11,03e63f7e-6461-4c0d-8fc8-c06e9b9f3c64,248.66.60.141,18.243.248.193,Daltfresh

411,6/20/2018,17:37,f637744c-89aa-49ba-a5f0-143122d07803,236.123.147.67,16.241.86.244,Quo Lux

412,4/22/2018,16:19,c4871956-36a5-4da6-be27-b3fc66d25775,237.145.35.5,42.77.71.29,Bytecard

413,6/2/2018,0:37,3b85fe9f-49da-414b-b924-b6ce3c098c30,134.231.20.101,242.178.106.91,Gembucket

414,5/15/2018,12:52,be285ea1-3085-4550-8628-672f9bfa932a,189.253.209.220,208.164.22.97,Holdlamis

415,5/11/2018,18:34,abcbdb2b-e0ba-45b0-9f07-23c4358ce7cb,44.231.230.151,80.6.132.101,Solarbreeze

416,4/21/2018,21:22,281686f7-61f4-44ea-a92f-bea9d3a6f894,196.35.168.0,142.132.135.75,Fintone

417,12/21/2017,2:59,d5382cc6-f5ca-49b5-8a97-8dcfce714859,123.114.47.65,244.134.176.207,Zathin

418,11/28/2017,2:10,6c57c9ab-cc8c-4405-aec7-eeece9bb443f,89.144.228.69,190.23.217.81,Aerified

419,5/14/2018,10:30,d015b9de-7c6b-4b79-97ac-fcffb5183ff3,78.161.122.120,193.4.54.105,Pannier

420,12/13/2017,18:02,798d0cb8-99fe-4e00-a83d-99882a1ab43a,49.186.28.124,119.252.228.250,Quo Lux

421,9/14/2018,17:58,ba1b68bc-954f-4df8-8626-9373cfec5da4,110.75.109.14,91.24.128.106,Kanlam

422,5/20/2018,13:33,5d3a32f3-1335-4a17-91fd-18c995663d21,178.223.93.168,118.154.154.55,Konklux

423,10/25/2017,11:25,95db4107-0304-4abe-ad89-eee70a505a6a,197.41.153.57,161.141.103.193,Matsoft

424,11/2/2017,6:27,14387400-82e7-439c-bcb5-cf89ecc3b486,182.74.74.150,240.67.84.74,Biodex

425,10/18/2018,13:06,55171006-36f9-404c-995a-3aa7c61924d0,148.181.15.149,146.224.79.118,Daltfresh

426,10/16/2017,22:23,3406875e-a1a1-4a07-8764-92dff4cff524,44.103.27.135,225.34.42.147,Regrant

427,8/8/2018,10:35,61592440-7bb2-41ac-909e-6aeac34fde66,235.120.205.142,12.36.212.187,Stringtough

428,6/4/2018,5:29,fde007a3-a8af-43e0-bd70-8319289e7f5e,147.97.47.118,174.88.168.66,Voyatouch

429,6/2/2018,22:48,94d8da1c-ad06-4570-8d51-584a5d2d42ae,228.57.76.228,6.38.124.60,Bytecard

430,8/20/2018,7:21,75dfab4e-a77d-4b35-930b-11f443b0bea0,191.92.7.247,33.81.101.42,Bytecard

431,7/12/2018,0:02,681173d9-43e0-4d7f-a1bd-2b5304babf95,51.144.153.199,78.8.64.33,Fixflex

432,9/17/2018,17:29,bdd5e8fa-525c-4933-93b8-c12468ed22f0,32.154.124.54,206.81.95.24,Holdlamis

433,6/17/2018,8:57,1233adfd-f7a7-45ba-8282-f412adf923eb,223.92.155.220,230.197.195.180,Ronstring

434,6/20/2018,20:29,2ad96e98-3fb1-46a4-82ef-5aa1db3c101b,108.228.115.180,170.23.8.139,Gembucket

435,2/20/2018,2:15,887b6fe9-53b0-49c6-a33f-8b7f34447505,150.240.153.210,149.62.140.232,Transcof

436,12/15/2017,1:49,7f0e9714-9747-4304-8b8f-b3a8b653e78a,98.201.244.27,23.163.219.89,Hatity

437,5/26/2018,6:31,67857d73-eec0-43e1-96cf-991c11db9ca6,14.156.170.54,134.86.104.59,Konklux

438,7/2/2018,15:59,98258d1c-ed81-4982-9b1d-5effee6321fa,249.248.10.140,9.147.222.146,Sonair

439,10/27/2017,22:44,11f0ec03-0edc-43e7-b1a4-7378bd65357a,204.136.121.180,177.27.110.124,Mat Lam Tam

440,3/17/2018,10:28,becb105f-5651-496e-995e-99da0a40bb5d,217.164.233.188,36.168.34.203,Daltfresh

441,7/27/2018,5:07,994a7b4d-fba6-472a-98c1-7cf974a0637f,49.224.23.223,101.90.255.70,Zathin

442,2/15/2018,0:53,cf44a620-2b7c-46a2-ae09-2f57a8f3dd6a,60.106.133.230,54.166.226.161,Toughjoyfax

443,1/28/2018,10:01,a6cd485b-bba4-47ef-a3ac-e29a3191ced3,179.85.241.53,205.180.146.55,Trippledex

444,8/31/2018,9:51,27fc8a05-af58-4637-80f5-96396c4f727c,250.75.211.37,210.63.127.185,Sonsing

445,11/15/2017,18:12,bc203dc7-4197-4074-9cbf-27838e28fe3b,211.29.168.111,156.4.36.160,Y-Solowarm

446,1/14/2018,9:14,718aafd8-fefe-4859-b010-b263ddbb3e99,219.219.187.88,70.123.206.185,Voyatouch

447,3/13/2018,2:33,e8b2711f-9522-4d5e-a9b6-023c5a9a5c65,238.25.162.117,2.117.21.142,Flowdesk

448,12/24/2017,7:32,16fdf1b5-8757-4d96-8172-5b36a55cf5a5,93.43.42.128,186.172.16.7,Alphazap

449,11/12/2017,7:48,a256afe9-709b-45ad-a1c1-f30eccc196be,166.72.24.21,190.141.154.213,Opela

450,6/12/2018,19:28,3be103f1-c262-40ce-82e9-de52cceae69e,73.89.175.161,128.170.174.170,Zoolab

451,8/19/2018,16:09,82027a68-b200-4232-917b-9ccc5c6250a7,207.167.238.86,93.44.141.158,Sub-Ex

452,12/9/2017,0:45,3c444b20-a726-4e0a-81e4-cbb155ee1ffd,208.254.232.175,226.70.247.29,Zaam-Dox

453,8/1/2018,6:02,bc8ca63c-f8e2-470c-89bb-a387ec303c40,2.45.4.249,191.245.69.3,Y-find

454,8/11/2018,5:07,3b865baa-9c59-4b37-b8da-bf092c76c30b,246.57.67.212,217.250.180.137,Bytecard

455,2/7/2018,19:28,c85f27ba-b2e1-4834-94cc-38139b8a0711,221.28.240.181,169.104.139.118,Bitchip

456,3/23/2018,14:10,cd110f6a-f570-4df2-a67c-709b14b4f08a,94.128.228.119,85.92.0.19,Zathin

457,1/20/2018,22:46,ced40628-f75d-44c1-bdc3-7735ff82c044,218.176.79.62,160.180.245.229,Cookley

458,8/12/2018,11:00,290c51d4-1d15-4787-bf22-cd52c261917c,221.101.173.1,40.173.28.161,Lotstring

459,11/24/2017,12:51,559df49c-cd51-40cf-973f-116233e67fcb,172.140.239.41,11.213.74.109,Flowdesk

460,9/23/2018,5:03,d11d6c59-c7e1-4054-afc7-63dd9f8e7e35,0.22.180.239,79.136.223.222,Veribet

461,12/26/2017,4:29,a6f5ab6f-ea08-43de-9666-1a17d18d5834,62.96.41.239,76.227.64.107,Transcof

462,2/5/2018,6:16,27e4af56-91a2-4045-8027-cd09538cded2,20.118.141.92,108.252.102.85,Daltfresh

463,11/17/2017,8:23,3ce60eea-426e-4b6e-a2a3-5a09374fcb17,89.11.103.184,146.240.252.197,Transcof

464,11/29/2017,20:19,448e4ad1-36c0-4ff5-95b1-ff92a660f808,100.223.241.7,82.176.43.134,Hatity

465,5/12/2018,10:04,a9ed539f-8f59-4770-87bb-0be0267c1d5c,145.169.56.214,37.154.147.88,Daltfresh

466,3/22/2018,1:29,5e531fde-3992-4a37-a920-0392b6665be0,48.153.223.182,239.145.40.195,Domainer

467,7/17/2018,20:57,f9bf81c9-1dc9-4fdd-be81-82499179ccd9,129.220.216.188,61.156.63.203,Sonair

468,11/6/2017,17:10,68c20949-2613-46b1-bdb8-02881d16b322,193.246.118.14,238.28.120.44,Fix San

469,5/15/2018,15:26,218ed02c-8aea-40f6-bf10-de677645ee34,83.79.162.185,57.148.82.74,Lotlux

470,7/4/2018,18:57,3c626350-897c-45d2-a509-10510270f163,216.240.180.147,127.94.224.66,Temp

471,3/13/2018,9:41,01cb73d9-24fe-4ad2-8d13-897ff0a0b4ad,96.119.190.193,81.49.32.32,Zoolab

472,10/25/2017,0:28,5fb37437-194f-421f-9903-9450a307b5fe,232.115.104.20,207.61.73.206,Tresom

473,8/25/2018,10:20,176823f3-b4de-46b1-ba48-603f4069f0b5,117.15.101.48,195.63.164.177,Bitwolf

474,5/8/2018,19:51,02c03c8a-f841-41dd-8bed-5af75b9a9407,150.60.89.61,74.89.251.190,Holdlamis

475,6/30/2018,14:46,d19b3a0e-4237-4094-85e2-4b8a188f93be,193.107.86.66,75.40.171.68,Fintone

476,10/16/2018,7:50,78457821-8919-4568-beb2-a3645cf07401,57.133.101.244,102.134.117.145,Stronghold

477,9/10/2018,6:12,9a81b7cd-e0a6-43f8-aae6-f1823a3244d0,174.194.162.156,161.248.41.184,Overhold

478,2/24/2018,9:27,8ddb6bb7-cf9f-48d3-805e-752568bc3d0d,113.134.179.248,139.211.234.224,Sub-Ex

479,5/24/2018,0:00,d182226f-d65e-419c-ae5f-89dcb4621500,93.241.52.254,95.187.25.90,Sub-Ex

480,8/2/2018,17:02,9e2e73ff-8159-4992-8aca-e511e94fae05,63.160.218.2,163.25.80.88,Cardify

481,8/26/2018,1:51,b781f99f-7e38-4bb4-bf1b-afd9fb7b3326,131.110.52.114,246.48.43.76,Solarbreeze

482,7/2/2018,7:37,da78399c-8cd0-4b8b-a7da-a3462a8df25c,8.32.68.212,128.243.134.94,Sonair

483,10/18/2018,15:45,e56783e8-31ab-4f53-b61d-b46ee94b50cd,172.201.253.192,73.175.34.121,Daltfresh

484,6/1/2018,18:53,5a2e8859-17b2-4922-9711-648ca714caf7,23.14.21.231,179.135.215.217,Fix San

485,10/24/2017,18:02,b9478067-830e-40f8-8997-34b1703ad7b9,213.12.25.131,125.106.239.77,Kanlam

486,10/18/2017,0:53,f236799b-5240-4041-8636-f9ee0de5d984,101.207.103.168,103.141.207.214,Temp

487,10/16/2017,22:19,ab374930-8260-4146-a74c-306daaefdffd,157.210.189.111,164.110.212.220,Voltsillam

488,11/20/2017,22:11,fa9677b4-4cf2-4a05-a145-8f8420eff070,7.211.99.139,30.41.35.191,Konklab

489,5/24/2018,14:28,cf1bd83b-2c66-4804-a5dd-a22d0dfa38e9,10.15.173.90,185.8.155.61,Bitwolf

490,9/22/2018,10:23,bb316440-1d96-4875-8d48-319506953c72,248.187.166.121,137.155.100.57,Transcof

491,7/25/2018,0:24,a7af5767-d073-4bdf-b2ec-4e44428991fa,163.199.105.135,24.15.56.186,Wrapsafe

492,10/15/2018,3:04,022d3d4e-21cf-4a22-939a-41af992c964f,78.48.237.31,47.139.108.247,Treeflex

493,8/11/2018,20:51,7dedff8a-e4bf-4fbe-97a1-c0514d51f009,227.86.181.70,189.68.249.249,Namfix

494,7/27/2018,15:49,e1102fd8-bcbe-4091-a39c-c98a4c4a1e3e,55.221.218.93,74.178.23.205,Cookley

495,3/30/2018,4:45,9a8fbcfe-d619-4ab8-b9c5-062afaf76855,26.56.146.247,29.193.118.175,Lotstring

496,3/20/2018,6:33,374d389c-10e4-470d-9546-99f19255e76c,12.233.142.78,150.172.247.231,Biodex

497,3/18/2018,4:21,21c79176-3c29-430f-80fb-3872d8b3611e,25.52.111.88,168.138.105.8,Y-find

498,5/27/2018,3:54,1b7e89e3-d700-4cd5-ad41-41bfa8b8aa44,242.108.151.46,214.88.44.135,Matsoft

499,10/10/2018,21:31,02e05335-b275-4b89-bd15-4a1b6bd407fa,146.67.193.59,61.93.124.14,Cardguard

500,3/31/2018,8:57,2b95e8f7-8c03-475e-abf1-ea43c34cc11e,66.197.232.247,70.74.243.0,Y-Solowarm

501,6/19/2018,1:12,aa1006b5-f080-4d60-9c9e-f6ccd17bfac2,26.106.206.240,157.69.109.39,Job

502,8/19/2018,5:23,1eb851ae-fc5d-4820-881e-c80ed73eb5fa,150.209.66.250,70.46.42.29,Zamit

503,2/7/2018,2:48,49d503f1-b7a7-471f-bfd6-afca854b8b7d,10.198.125.21,152.53.119.71,Tin

504,9/19/2018,9:07,c1b49781-bf9a-4b38-a03c-ccef911a93ae,122.139.80.20,248.168.98.217,Opela

505,10/14/2018,17:16,ad72ccff-0d23-4285-a958-886d5ef44ab4,123.90.152.157,25.49.228.157,Quo Lux

506,6/15/2018,7:58,119fad16-4ff1-4acd-bbd2-c890ebe6acea,240.71.165.4,90.188.114.86,Tempsoft

507,4/27/2018,15:26,4f7df3e5-35c9-444b-8e5e-e4be1add6d0d,52.84.24.159,114.141.117.92,Sonair

508,12/7/2017,16:35,c67f4d9d-9c13-4197-9f89-7af11a1a1e93,96.48.69.63,224.137.2.45,Opela

509,7/14/2018,12:06,b6a29560-6c05-44f1-92d0-0d9109b7419b,146.102.15.171,21.58.228.137,Zontrax

510,8/9/2018,13:03,7f9f74de-995e-4679-a6de-7a1a93501284,129.168.223.244,133.31.215.70,Trippledex

511,5/27/2018,0:28,4d66484b-7c0c-4691-a352-74950c096e39,173.161.123.107,174.46.29.51,Cardguard

512,4/18/2018,15:26,72a58166-80fa-4d5f-a9c5-164d044506e4,145.44.215.44,110.254.220.148,Temp

513,7/21/2018,8:06,6eb87dfd-747b-4b3b-9756-c421e0232646,170.39.153.205,67.149.177.112,Bigtax

514,4/12/2018,6:20,10393479-2273-4edc-99b0-ce1347b2c820,186.114.115.22,84.3.56.177,Andalax

515,3/22/2018,2:35,cb0cb027-492e-4ced-8737-8d5bf48cea6a,246.122.117.157,96.38.92.201,Y-find

516,3/26/2018,5:51,903ccd85-a2ed-4e18-94ad-9e0db054b7bc,89.63.214.135,241.123.210.143,Cardguard

517,9/18/2018,3:04,459660a4-8394-4c34-804c-09aa9d82718b,179.79.81.233,152.172.117.230,Greenlam

518,6/8/2018,23:18,c23131d8-592d-44ec-81d1-7ed4e8975179,95.91.29.241,136.88.132.193,Y-Solowarm

519,10/21/2018,7:06,9a293499-c629-4ec0-89de-edb3ec78a15f,171.148.15.34,16.133.210.220,Quo Lux

520,10/14/2017,6:40,e40b379d-8880-4791-a0af-9288dd495b37,236.94.12.187,182.46.238.179,Asoka

521,11/18/2017,18:19,825599b9-fe19-4f87-8784-671b9838c566,226.159.207.196,7.185.209.57,Rank

522,9/5/2018,12:04,04ef70b3-ae86-4d70-8dff-4fbc64b429ca,208.83.42.162,126.165.178.105,Voyatouch

523,7/3/2018,21:09,b9b2708e-b2f9-4d68-93f9-9705e6db20e4,244.14.221.143,94.170.246.238,Greenlam

524,10/16/2018,23:28,d7b7ab87-ac69-4c73-b5ba-01cad6bf36dc,172.136.47.122,59.67.130.19,Sonsing

525,5/11/2018,23:25,6919d894-dc22-462a-b5de-7881849ee313,194.57.88.230,126.135.218.135,Solarbreeze

526,7/23/2018,2:51,9386f984-e938-4e6a-9379-918eef5f76f5,111.112.217.205,203.227.192.178,Aerified

527,8/22/2018,9:54,e0454b2c-f613-4098-beeb-be2aca546431,108.146.127.21,103.231.243.62,Bamity

528,4/21/2018,1:59,c3200308-f5bf-4d1c-b82d-10e79f271b81,153.5.199.229,56.152.119.110,Latlux

529,1/9/2018,0:35,f0e57f3b-f59b-48b1-9e95-89427bedd45e,75.182.251.35,167.32.114.134,Matsoft

530,8/22/2018,4:15,f7113fac-5ccb-4c4f-b989-5863fd6d567b,63.130.6.142,192.123.54.196,Biodex

531,7/15/2018,17:15,f4325403-aff6-448f-bc22-dcc8a123c6d7,175.49.149.242,86.5.23.127,Bitchip

532,11/21/2017,3:29,8e5d52ec-9a33-49a9-8a95-a74ba771634a,75.215.96.88,141.15.195.61,Cardify

533,6/2/2018,5:10,1861d05d-522d-4c26-b349-861632c63887,136.51.197.78,29.56.27.210,Regrant

534,10/21/2017,17:47,906ab0fb-1786-4fe3-a1bd-574840fdc900,65.26.124.38,250.104.148.252,Sonair

535,3/12/2018,10:52,07d80190-93c0-4caf-be1e-e580d1bd382f,175.90.130.65,196.39.84.164,Overhold

536,2/10/2018,9:45,9d139d5b-40d4-40e2-ad07-1523c864c392,78.223.84.116,196.165.239.101,Zamit

537,3/28/2018,5:56,2e51cc18-657b-4736-8200-33d2e935b078,121.213.118.140,185.208.126.235,Veribet

538,10/11/2017,1:55,72f6017b-5b0c-4af5-bca0-8a4560d8c5d3,12.240.224.93,144.131.29.218,Hatity

539,2/1/2018,16:49,7d94af74-96eb-4fca-b0ac-d03bf27b04b1,52.96.28.169,33.76.33.177,Fintone

540,6/8/2018,11:04,51d06cac-34ab-4a99-a4d3-9694db9d8646,85.172.81.88,154.49.6.43,Andalax

541,12/7/2017,4:28,b43c9c6c-b673-415e-b0bd-ffd655dbac69,122.234.31.153,39.76.185.178,Span

542,9/6/2018,12:47,7f047510-a399-4531-8b0d-0d0771e5a19d,153.197.62.187,8.189.241.196,Tin

543,6/18/2018,15:50,99da7c8f-71cd-4393-9078-a50b098d47a0,127.156.197.154,113.123.163.156,Andalax

544,5/30/2018,8:16,f8f24108-494b-46b4-9a92-a1b4e251fbcf,148.23.253.47,203.241.167.77,Tempsoft

545,6/12/2018,0:55,ec2382ee-9ee5-4938-a232-90c31fa9a870,39.174.235.240,55.69.47.49,Lotstring

546,3/29/2018,3:31,8e932c9f-4314-46c7-977d-085f0d98be8b,35.242.243.181,77.155.30.94,Redhold

547,12/16/2017,11:06,b2df888e-a2f9-486a-98c9-2447372e7e1a,23.119.184.149,23.80.220.144,Konklab

548,10/8/2018,7:01,709d9c51-7dca-431f-8ecd-0a49f45e9586,252.91.226.136,130.140.126.203,It

549,5/20/2018,12:03,0ed225a7-e2a2-4d6c-b8ea-4df01e2b7aa2,190.10.145.42,38.232.16.215,Zoolab

550,8/31/2018,1:53,e04c369e-747e-4f14-bc6f-43bf58836780,179.233.226.6,41.84.219.233,Zathin

551,7/26/2018,12:56,3c6e0fde-0076-424d-8836-ed8a3e3a59f0,43.63.48.124,251.243.189.4,Temp

552,11/9/2017,13:05,176ca553-6f07-4f37-9a13-f1ebf5ce68d2,80.12.230.34,73.203.8.52,Domainer

553,10/27/2017,11:02,e8e6bea3-e0b3-4392-8657-480af1d746a7,51.9.36.146,176.67.40.146,Mat Lam Tam

554,3/30/2018,20:28,d14eb30a-4f7a-4067-8e25-6402475aa9aa,144.185.101.10,113.45.64.221,Sub-Ex

555,8/17/2018,23:55,cf1a4642-a55e-4e99-9d3a-e0da9f5639a0,27.173.203.236,81.235.217.184,Span

556,4/9/2018,22:08,0ee35ace-e79c-4023-bfbd-1bbc27fc6185,103.29.80.176,122.126.20.235,Pannier

557,10/7/2018,3:34,99d7603f-58ba-4c74-84b1-338e4863d1a5,9.113.90.102,106.194.32.223,Transcof

558,4/14/2018,6:18,08df70d7-7440-4b35-8ede-1454c1a2d30c,128.146.168.190,35.213.148.142,Prodder

559,3/5/2018,7:58,a6c2c375-5315-41e7-bb2a-450976321573,252.85.146.104,179.94.178.66,Sonair

560,10/9/2018,12:24,7a4c9821-449a-4784-a253-6c2ef0eaf2fb,248.105.58.191,30.151.233.97,Transcof

561,10/25/2017,3:01,b5cd5b9b-2f3d-4646-bac6-ccc3516d8d6b,158.204.82.139,143.73.64.55,Namfix

562,10/27/2017,4:54,61e5306a-395e-4cda-a3b1-c9a64efd5f59,140.111.35.48,56.87.62.90,Cardify

563,10/20/2018,21:59,71770e82-ab26-4741-88e4-1f6727ae3b75,212.245.108.46,48.67.42.41,Hatity

564,12/2/2017,15:51,1d265b58-7620-407b-9800-9030ff852f5d,5.248.226.54,123.50.59.190,Lotlux

565,1/4/2018,9:39,876efe78-60a7-4221-8ff9-0e2c51827a1c,52.29.132.134,81.64.18.220,Rank

566,2/14/2018,22:53,a07dbead-f33f-403b-b06b-e72f13d14e1a,150.131.240.214,84.199.244.228,Keylex

567,2/28/2018,5:37,a9f789e6-4bb4-4a29-9d72-62599fee6b0c,172.240.25.14,95.134.216.254,Ventosanzap

568,12/28/2017,6:34,4dcead5c-1f37-4cd7-bb43-c17d5f6c8a41,89.13.71.49,119.60.12.112,Viva

569,8/22/2018,5:28,8555e052-f822-4374-a062-0f990adab7d3,7.8.129.179,8.43.167.127,Matsoft

570,6/27/2018,5:30,8e19bbcc-694c-4431-bd59-f6fa28fd754a,65.166.50.231,105.185.11.47,Rank

571,7/26/2018,1:27,eb70f42b-0f73-4c36-b332-719ddccd0711,226.255.153.43,155.192.47.254,Redhold

572,10/15/2018,17:12,dad9f0a3-a0df-436f-8279-f6516b6f37bc,143.161.112.183,60.71.11.14,Otcom

573,10/8/2017,7:00,456afd16-354e-4be6-b535-c9a07efb5ffc,165.22.23.168,14.240.84.105,Mat Lam Tam

574,6/12/2018,20:41,297120bf-46cb-418f-ae99-1e1e9d854dba,69.7.58.205,37.243.14.155,Tampflex

575,2/16/2018,2:58,22d14540-a441-4642-82ad-1b34c64ebf76,72.100.116.216,38.199.69.89,Gembucket

576,10/14/2018,2:52,1d67cd11-79e9-4556-ae56-3c9781ba9efe,59.103.134.14,193.81.74.193,Quo Lux

577,8/31/2018,23:13,14090de0-dd97-4c1a-a30b-cbd60d20eb97,54.18.147.190,91.252.112.146,Ventosanzap

578,5/24/2018,6:11,c9e643e0-abe1-4691-9504-70da8071949a,79.220.147.155,20.152.48.97,Aerified

579,10/5/2018,23:24,a41e5196-a6e2-45fe-a102-d199bba47a5a,255.167.68.177,249.222.7.11,Cardify

580,12/12/2017,8:46,211f5bdb-9b5c-4837-9600-f76e94f6769b,70.13.179.48,195.41.44.190,Pannier

581,4/8/2018,13:07,7971638f-b0cd-4ee5-b548-adf940242ee4,218.32.194.8,137.76.221.255,Prodder

582,6/12/2018,19:22,4588ac92-e601-470f-bc69-b03a69957666,244.161.231.0,254.108.49.229,Job

583,10/5/2017,21:16,4b34dff8-6c35-489c-b580-d121b53f7c13,20.121.28.46,237.199.32.88,Stronghold

584,4/16/2018,13:31,41c6a31e-b8b7-4b10-a065-ca6493dd4054,136.180.95.28,241.179.127.54,Voltsillam

585,10/11/2018,10:33,6a03f4f1-eab0-404a-a7b1-8e7961391104,235.227.205.139,99.51.14.125,Holdlamis

586,12/10/2017,20:00,85013f08-db95-4654-aa6e-e81776108604,253.42.147.39,230.221.203.158,Alpha

587,3/2/2018,4:30,b1a6e095-b438-4f87-a7c2-dc9bc8e757f6,158.227.93.222,0.137.63.232,Bitchip

588,12/4/2017,3:46,ccb4c284-06ec-465d-9552-0ddb881e2999,236.71.154.68,23.189.109.171,Alpha

589,12/9/2017,11:29,78356b6d-e424-403a-a129-2f47a0bb54c7,177.95.204.215,13.74.22.216,Zaam-Dox

590,6/14/2018,19:51,b5ac15a4-163a-4a6c-8927-90958e73af19,174.63.24.13,255.145.228.80,Tin

591,4/30/2018,22:53,5c37a6c5-ad74-4aab-81d0-d15186af809f,8.203.107.88,228.59.111.124,Job

592,1/12/2018,1:54,24d16c91-5e94-4f4d-87c2-b45167fcbee7,108.105.222.247,250.18.8.157,Tres-Zap

593,2/22/2018,17:20,4aa39801-6f3c-4483-a529-ef45e67ef160,11.40.167.215,27.153.119.230,Duobam

594,10/31/2017,11:17,7b9fac00-467e-4ef8-909d-563164a40359,173.85.82.236,177.106.108.112,Fixflex

595,12/25/2017,0:06,f7bd91c0-6e93-4108-8004-377427a92d7b,86.34.254.67,240.122.232.9,Cookley

596,10/21/2018,13:08,afc4aed0-bd15-48b2-b7a9-737002cf3a0f,92.159.47.6,150.224.17.144,Stringtough

597,12/4/2017,11:37,af659b6e-03a4-4a10-869d-48ebee58691d,196.214.44.66,117.36.41.166,Lotlux

598,1/20/2018,14:12,28d9381f-63f0-4b5d-8c33-7fe9aada359b,14.199.31.157,225.205.181.39,Flowdesk

599,10/8/2017,6:17,4a228199-cac0-470e-a60b-80ce06204403,82.69.9.244,226.106.151.21,Pannier

600,10/25/2018,21:02,db5b1369-47f7-416b-94a1-9c06304bc6d7,82.123.202.105,87.94.126.255,Veribet

601,6/20/2018,9:20,890dad49-801c-4922-b531-a87d7f01c8b2,198.88.234.157,254.89.198.65,Sonair

602,11/11/2017,8:45,32be1e84-7c23-4b21-a07f-a012ccd48c1c,225.141.198.175,127.202.240.4,Bytecard

603,9/2/2018,1:31,bc809e08-c45f-45df-a546-94fecf16ea90,91.100.51.169,180.16.61.248,Zoolab

604,8/19/2018,11:42,4ad4edef-afe2-41aa-9e27-968ab56a6cd4,210.114.216.60,245.218.10.237,Sub-Ex

605,8/22/2018,5:10,b6cd8477-313a-43ca-bdc4-f7e8c7ff6385,39.131.144.195,180.226.128.242,Trippledex

606,10/2/2018,13:05,76c97070-6ab5-4997-b47e-8ff35af99877,227.190.248.94,130.250.40.244,Otcom

607,5/11/2018,21:35,a97ad925-8ec9-4768-8056-11b2bccfab5b,111.201.43.154,113.69.248.36,It

608,1/25/2018,0:12,003edde0-03ce-4228-aae4-921e0a80dfb1,9.180.10.146,69.186.39.83,Veribet

609,4/20/2018,15:24,2c3c8278-d3ca-492c-951f-176081f0cd48,156.4.48.231,203.43.63.33,Matsoft

610,5/14/2018,0:47,01345fb5-cd1b-4e41-be72-a284386a81c3,248.233.130.196,133.88.228.52,Zathin

611,8/24/2018,20:09,7f12e202-ce26-4f80-ad30-bf8f0402e124,126.1.92.220,97.136.247.119,Daltfresh

612,2/18/2018,22:26,84fed4c1-79b4-458b-b006-d41d345d7385,249.132.38.62,204.219.245.64,Tempsoft

613,12/1/2017,7:54,f0b68581-a201-4b73-9510-f329278cf6f0,6.138.153.243,237.230.241.239,Ronstring

614,10/20/2018,21:23,0bad0d6f-de9e-4bfb-aee0-6c8a0a33e1ca,91.13.122.114,44.77.12.255,Zaam-Dox

615,6/5/2018,19:23,9837d2eb-1e7b-41a2-8549-7ffe3d554131,124.148.202.186,164.102.190.55,Bitwolf

616,8/10/2018,14:00,52ee6ead-25c4-4614-8503-9c34de5dae90,3.166.247.32,60.64.245.97,Bamity

617,12/11/2017,12:24,e39730ca-14e7-4562-b394-e32dcd54bb9f,173.159.157.85,205.77.139.84,Overhold

618,4/27/2018,22:45,95bc6c44-c982-43a3-92f4-41509e30f6d3,168.53.204.66,36.141.221.162,Konklux

619,9/18/2018,1:01,66a7a579-9c0b-43e9-a089-25dfb6d4b212,170.18.53.171,20.12.222.33,Alpha

620,11/18/2017,17:03,0c55772c-612c-4cba-86dc-e4374aa45668,19.17.114.29,148.75.253.40,Cookley

621,4/5/2018,15:20,af2bf0e9-c482-4f2c-ae8e-65522e9b1d9e,68.226.253.24,136.215.143.25,Fixflex

622,5/31/2018,9:49,43b6e547-be34-46a7-a4f1-75dac2946fb6,85.138.7.59,252.143.52.156,Bitchip

623,11/26/2017,14:13,9aa7067a-d783-4291-b984-3d296d3ae8af,153.18.143.151,255.77.75.22,Duobam

624,11/17/2017,9:26,3e6cf359-6386-41c0-91e3-cb062fc5b462,201.235.55.121,65.28.213.146,Trippledex

625,12/19/2017,22:38,2aaea825-7000-407c-9625-6860d1ee8c17,116.20.58.102,202.162.210.118,Treeflex

626,3/9/2018,12:25,91d5a342-76e3-4d2c-ae39-1e419df400f7,229.51.91.46,130.204.102.170,Konklux

627,1/10/2018,4:02,d8764706-49a0-41d5-b9a3-12f42a21563a,253.62.131.9,244.45.132.51,Ronstring

628,2/20/2018,2:12,5865de27-3971-49a3-80b2-cf234f7dae07,50.201.181.219,220.165.145.212,Subin

629,6/24/2018,3:33,6aa084f4-c21f-4634-932c-2715c9a87aa5,210.45.169.39,231.157.149.103,Konklux

630,4/25/2018,2:07,9bcf089e-c3c0-4b02-8a1e-f397015751d2,113.205.126.143,170.123.208.164,Namfix

631,10/11/2017,1:53,5b43a5da-5eaa-4ade-8b7a-9624a32010ee,82.65.67.49,68.224.0.62,Zaam-Dox

632,8/4/2018,0:40,de2e0e2e-6eb7-4983-98f5-bd1618d2d32e,0.204.143.232,78.233.62.6,Regrant

633,10/23/2018,10:31,3ee1577f-1fba-4cd4-abe6-b61cfd0eb46c,101.55.168.222,40.7.89.41,Lotlux

634,3/24/2018,16:04,48a544c8-7f26-4494-bcb4-d598934d9fcf,27.111.66.97,223.96.210.188,Trippledex

635,9/26/2018,20:45,57c61d1b-2bce-4f65-b554-a322407522a9,78.102.22.236,130.18.150.191,Stringtough

636,4/16/2018,11:31,ead3d5f2-93ef-4470-9478-5d02847caba3,198.185.227.2,219.6.184.199,Konklab

637,10/28/2018,20:01,38393e04-a8ae-4e59-a79c-8a8d59cdc6c3,19.113.58.121,62.220.89.2,Alphazap

638,10/26/2018,6:14,cc16aeb3-b6c2-464f-a5e0-3513f4abc81c,127.126.234.58,191.169.189.68,Fixflex

639,8/7/2018,2:38,ecc2f7dc-516c-4fca-992e-2864678b47fd,186.138.135.114,71.77.20.183,Stim

640,4/22/2018,12:30,ee07b6dc-e6ec-4960-959b-205a425f5f03,95.85.130.4,218.181.119.148,Trippledex

641,5/26/2018,4:21,761baa60-855b-4ccd-8819-7db20743b0b2,122.153.181.20,234.165.168.3,Tres-Zap

642,12/5/2017,11:15,1164a357-2c6b-4c94-8cea-eacca842cdb8,81.137.40.93,70.206.31.168,Temp

643,11/20/2017,4:41,52ffbc80-bceb-44f1-8199-da3b7fac7563,51.30.67.176,35.177.112.16,Flexidy

644,10/27/2018,21:50,992e9871-cc87-436e-9985-e4d9482744b7,121.115.159.203,135.115.50.74,Bigtax

645,6/5/2018,14:15,67ceaf28-3881-44b6-93e1-2eb7c0e12daa,98.247.42.187,32.17.152.98,Tres-Zap

646,10/14/2017,23:24,54cc4834-cf9b-4b92-bbb3-58046dc16c3c,231.165.133.45,206.208.202.38,Domainer

647,10/17/2018,6:31,a90a7b22-ea29-45cd-b7ce-d28e732aa1c0,224.213.68.175,234.188.27.10,Prodder

648,1/9/2018,15:32,5ee1c57f-83dc-450d-b218-8878dd09b080,11.53.69.165,244.9.118.143,Quo Lux

649,10/4/2017,18:26,dc2cb7d4-82c7-4d21-b12b-0a0234f1771a,239.72.240.17,156.163.172.140,Konklab

650,3/3/2018,13:11,461ea836-a09a-416f-abdf-bade0905fe8c,180.141.89.215,109.90.22.110,Tres-Zap

651,5/5/2018,8:56,b73251f8-8408-4961-a90c-ff84c8bedd8b,130.89.51.22,238.183.236.71,Konklab

652,12/11/2017,21:54,993d84fc-7dbf-47ee-b676-5f6256f50ddd,96.91.62.186,165.67.27.12,Andalax

653,4/24/2018,23:54,1721d6c9-0d18-4cc4-a3a6-9e8d6e2b41ce,251.230.250.55,62.1.103.47,Fintone

654,2/24/2018,14:02,4abe9184-f925-4fd9-81c9-80c33f8314e0,240.55.199.40,183.223.60.54,It

655,11/26/2017,0:04,9fada0a2-1e1e-4fe0-975a-02151084dc51,80.143.79.133,102.48.237.50,Tampflex

656,5/19/2018,12:51,1f0d1969-1afb-408e-8935-b80ca1a73ea8,49.75.178.101,127.140.167.184,Ronstring

657,2/19/2018,0:31,c2c2c258-e6d5-4340-bae5-afa699027e4b,82.20.60.100,0.174.88.80,Zathin

658,10/12/2018,23:57,70ad2e81-2eec-4b12-86e1-74f0a2ca89bd,197.0.67.164,180.130.192.169,Voyatouch

659,9/7/2018,20:37,f55a531f-8796-4851-837c-f550f6baa06a,82.122.239.20,170.150.231.14,Treeflex

660,8/15/2018,11:02,2c337e11-f720-419e-9b34-6c91cce961fa,224.86.153.230,211.192.47.60,Overhold

661,7/29/2018,20:13,ce9f1f2a-4d7b-4534-b042-e8f7f12a35ac,231.131.96.240,179.70.238.38,Quo Lux

662,10/18/2017,14:03,cf71fd52-669e-4e4c-970a-969bc132e6ef,41.211.125.2,98.66.137.195,Sub-Ex

663,5/3/2018,3:56,62c6c17f-038b-45bc-a647-07e777e86c6d,213.21.25.132,72.201.193.175,Gembucket

664,1/14/2018,19:56,b387496e-19e7-4da6-b5b2-749c6402e31f,229.199.161.220,237.136.247.172,Stronghold

665,10/16/2017,17:43,6a3d115d-9d4d-4e53-8f92-c981ddd292a2,242.208.60.46,84.191.81.203,Ventosanzap

666,7/25/2018,22:16,6e51e9ac-28d2-42fd-928b-bff8ae565470,52.107.185.154,204.1.20.121,Bigtax

667,10/25/2018,19:25,6b78e3c4-139e-4b3d-ac1d-670755fe5e1d,74.249.220.109,187.212.65.0,Kanlam

668,12/31/2017,11:40,f8792656-e8e3-4603-933f-907a68e5dd46,92.149.144.104,217.60.12.89,Veribet

669,7/23/2018,12:23,105016d6-eac4-4bf0-b3a1-16296854681e,154.237.125.176,149.149.85.9,Zaam-Dox

670,2/17/2018,7:46,400f0895-755b-4316-8663-00342d707e19,250.81.35.217,4.127.118.180,Tin

671,10/16/2018,14:15,f75cc888-e28b-4454-bd86-40dbdfe5cb2d,132.36.237.176,100.103.97.47,Tresom

672,1/3/2018,19:02,e2bf1179-2bda-43f6-be9b-48946c932c5b,246.174.114.86,122.28.26.194,Bitchip

673,5/27/2018,0:22,6998e1e5-88a7-46ce-bc92-9b466f8f8117,203.198.210.139,121.252.183.202,Veribet

674,6/29/2018,1:08,91a58a1a-36aa-47d8-ae42-a08a86853c60,85.137.156.251,249.195.1.92,Zathin

675,3/3/2018,5:38,08359bee-7496-44d2-ad48-e595e060d48f,143.4.154.211,249.170.65.231,Duobam

676,5/26/2018,18:51,0e552ea0-0c1b-41cb-8a12-435d26e9a987,157.24.13.219,56.75.90.56,Fix San

677,8/15/2018,0:03,3a706adb-f3d6-4f4a-b19c-131c7dad0f74,175.153.112.186,26.98.193.166,Trippledex

678,3/17/2018,17:26,8c072176-3aa6-4070-8d15-44950812ea9e,163.247.153.31,19.212.59.8,Latlux

679,2/26/2018,3:05,ef775a23-a2e3-4f2c-981c-7d8ebb20e4df,201.129.103.178,69.211.156.37,Trippledex

680,6/8/2018,11:19,3c07b043-1164-4477-9396-9a41dde1e367,125.191.59.126,168.87.136.113,Cookley

681,6/15/2018,23:28,ba733d35-e5ae-4654-8972-7986aa261e5e,53.18.162.112,86.96.25.169,Bamity

682,10/28/2018,5:51,f3584d60-3939-4162-b1c2-abcb0eeefd0c,8.134.182.22,27.139.254.176,Aerified

683,8/23/2018,11:04,bafc411b-b4ec-4da6-9589-7ff9a31b6335,123.92.45.109,138.1.219.2,Alpha

684,12/2/2017,0:41,1b5eebe8-c632-4751-834e-ac3d0df5f5fe,14.220.138.54,233.38.219.191,Temp

685,2/24/2018,6:44,5faae41f-f090-4fa3-a95b-700a38d7a684,38.31.172.204,57.112.142.173,Sub-Ex

686,9/24/2018,0:44,10a50b2e-4a9b-42e2-b948-d181b2026e98,212.29.106.105,157.211.135.179,Mat Lam Tam

687,7/13/2018,9:59,fcc70b88-f129-4a0c-8398-49ec5385a84d,10.190.110.253,90.115.52.42,Fix San

688,10/26/2018,11:52,b6a03bbc-3080-4d52-b97c-d3d509476720,104.219.20.120,186.176.190.71,Tres-Zap

689,11/23/2017,21:12,73b2be55-b48e-4bf5-a751-2ca1c15a6c59,173.233.98.103,172.92.228.111,Sonsing

690,7/31/2018,3:35,6d4d7864-76e3-4658-bc99-3ed3a39b20d7,102.70.170.161,76.150.135.210,Home Ing

691,6/28/2018,14:35,b26a4936-822a-43c6-953d-732089b13401,103.66.25.240,161.176.5.73,Sub-Ex

692,8/25/2018,11:49,f47c6d1b-de35-49ec-ac07-8e1312cf749c,208.40.119.142,176.165.28.223,Holdlamis

693,10/17/2018,14:20,472eae7d-1e5f-44a0-9d4e-9a6a5e31f790,79.177.119.232,82.218.16.187,Namfix

694,12/19/2017,20:09,dddca7c3-4815-4ea0-82ca-48796afa50c3,120.68.24.240,191.221.113.242,Holdlamis

695,2/15/2018,17:41,31216a1f-923d-412b-88c0-05d2f5983644,221.239.4.43,207.72.32.47,Fix San

696,10/26/2018,2:08,38120d62-b7ab-4f27-b5e7-a4b9adea05ed,170.88.235.172,242.32.102.237,Bitchip

697,12/2/2017,23:02,f53115af-e0f8-4ba7-a8db-48ba1512df5d,72.114.100.134,162.181.246.152,Namfix

698,8/21/2018,17:34,11782064-2560-4845-a2d5-e1a36c3871a8,83.60.89.186,247.230.36.193,Asoka

699,8/28/2018,21:28,49a15a9b-8550-4d12-b647-e7c8791f2d3f,176.184.41.205,8.171.57.13,Zathin

700,11/4/2017,0:32,f2b65805-1e49-443d-a6f8-c42dfd394ff0,249.54.251.234,229.48.35.152,Rank

701,10/31/2017,5:22,17b72fac-b5fe-411f-ae8e-fa42528eac65,232.102.55.39,33.174.1.166,Cardguard

702,3/25/2018,17:28,27cb8040-5ca3-4d47-a501-4ca493a34a22,32.124.21.188,204.216.55.240,Konklab

703,3/8/2018,18:26,4ee9073b-9254-4791-93c6-5e9b9d9b9a16,183.154.157.81,234.133.109.96,Veribet

704,9/9/2018,18:21,11ca4190-00cb-4c79-a26a-1b94d4a2df9b,146.246.116.19,203.192.121.104,Sonair

705,2/27/2018,1:14,fbff2770-5a73-4ba9-95a8-014efb7914dc,36.161.251.181,239.89.77.211,Home Ing

706,6/11/2018,15:53,88bfd275-e108-46cf-9d5c-40286c413e22,18.61.120.251,135.250.0.79,Sonair

707,5/29/2018,0:06,cfef9855-4d40-46b0-b749-9121bf97acb4,162.207.62.77,56.28.244.61,Vagram

708,10/18/2018,21:17,7f836bc7-8914-4d10-8b7f-e00492374bc2,206.51.134.4,185.143.90.156,Quo Lux

709,3/15/2018,16:10,ca36245f-6535-4984-a877-73bb68b1cbb9,195.150.54.230,184.58.67.152,Daltfresh

710,9/13/2018,6:13,576fe83a-07e2-4f3a-8b6f-45b7998c0c24,197.31.202.175,237.238.65.168,Pannier

711,11/2/2017,13:26,a068510f-2530-47ae-8c6d-1158dac39f72,81.45.155.37,178.67.128.15,Veribet

712,2/11/2018,5:27,c4f211e1-680a-488a-92a1-44aa9cc0ce83,202.217.116.40,160.100.49.164,Prodder

713,7/12/2018,5:05,f96495aa-83e5-4202-84de-a57b01e25fb0,145.209.148.128,68.99.121.112,Vagram

714,5/12/2018,11:25,a15e0797-ac00-4fd9-a153-07264127074d,133.200.175.228,16.226.250.233,Keylex

715,8/31/2018,5:50,9edbb5eb-ba35-4f25-996f-11b99fb0e396,20.45.138.224,130.231.203.51,Vagram

716,5/4/2018,6:13,15e82abb-47f6-47ac-8fca-134daac5b52a,108.111.157.85,206.142.178.50,Transcof

717,4/2/2018,15:12,65793805-8598-453a-970d-a5d45cc2ab83,111.189.66.35,72.196.184.120,Gembucket

718,9/7/2018,8:16,5b80eef3-0c9a-4f34-a127-8359c8e548f8,11.176.202.9,157.74.209.93,Home Ing

719,10/20/2017,19:03,bce66ebd-fb22-4e95-ad9f-fda500e67d3b,91.187.243.14,220.227.164.149,Sonsing

720,5/21/2018,0:21,b23b0213-a49d-418c-a79e-620d8b44972a,41.188.27.5,119.171.171.237,Ronstring

721,7/27/2018,10:01,979040e7-ec01-4cae-aff7-2e1ffeea28f9,8.129.48.211,215.173.151.239,Temp

722,3/15/2018,10:25,2033b2cb-7fa0-4990-adb3-a28e8d2f96f3,113.199.93.19,172.241.124.26,Pannier

723,10/19/2017,7:55,5a66ec75-6f85-4cc2-90fb-fd021746749e,33.86.219.93,174.188.24.212,Job

724,1/31/2018,15:25,d86198ef-173e-4062-ac4d-00d6944f2b0a,140.178.4.214,232.51.138.110,Zontrax

725,7/12/2018,21:28,c9c8dd66-cdef-48b2-ade7-1148b5d10831,185.30.20.43,128.171.204.57,Zontrax

726,6/13/2018,19:36,81b18d17-d7c5-4776-8c85-0dd9645f718b,110.78.212.188,115.169.83.155,Otcom

727,5/29/2018,3:01,365f636b-5cde-48a6-8cde-9d4866e5c5ea,217.203.193.218,36.191.4.226,Viva

728,10/3/2018,7:40,765e4da7-61da-4a6b-9789-a2a255c2a50f,169.65.252.185,155.47.150.96,Bamity

729,3/12/2018,14:09,bd5e2298-eec0-4c19-8f4e-ded47139db76,7.176.119.31,226.17.210.243,Andalax

730,6/28/2018,13:10,472b1238-8b67-4d9e-96d5-8e231009c2e2,1.33.245.252,26.59.26.200,Holdlamis

731,8/19/2018,2:23,02c913b2-9ea4-4545-889e-1c50a0017545,97.157.98.244,72.222.21.17,Cardguard

732,5/30/2018,23:35,234c6f7d-a613-42a5-a8fa-336780fdbe1d,35.180.182.117,201.117.120.13,Trippledex

733,11/19/2017,1:26,10d0823e-5deb-499f-bb35-de547abb6892,235.255.105.251,138.214.71.235,Ronstring

734,6/24/2018,4:32,40fea23c-7f3a-4001-9720-1263ab0ebd22,64.190.168.81,174.133.175.182,Veribet