
User Guide

- 1: Application Programming Interfaces (API)

- 1.1: API Specification

- 1.2: Calling an API

- 1.3: Query APIs

- 1.4: Export Content API

- 1.5: Reference Data API

- 1.6: Explorer API

- 1.7: Feed API

- 2: Background Jobs

- 2.1: Scheduler

- 3: Concepts

- 3.1: Streams

- 4: Data Retention

- 5: Data Splitter

- 5.1: Simple CSV Example

- 5.2: Simple CSV example with heading

- 5.3: Complex example with regex and user defined names

- 5.4: Multi Line Example

- 5.5: Element Reference

- 5.5.1: Content Providers

- 5.5.2: Expressions

- 5.5.3: Variables

- 5.5.4: Output

- 5.6: Match References, Variables and Fixed Strings

- 5.6.1: Expression match references

- 5.6.2: Variable reference

- 5.6.3: Use of fixed strings

- 5.6.4: Concatenation of references

- 6: Event Feeds

- 7: Indexing data

- 7.1: Elasticsearch

- 7.1.1: Introduction

- 7.1.2: Getting Started

- 7.1.3: Indexing data

- 7.1.4: Exploring Data in Kibana

- 7.2: Lucene Indexes

- 7.3: Solr Integration

- 8: Nodes

- 9: Pipelines

- 9.1: Pipeline Recipes

- 9.2: Parser

- 9.2.1: XML Fragments

- 9.3: XSLT Conversion

- 9.3.1: XSLT Basics

- 9.3.2: XSLT Functions

- 9.3.3: XSLT Includes

- 9.4: File Output

- 9.5: Reference Data

- 9.6: Context Data

- 10: Properties

- 11: Searching Data

- 11.1: Data Sources

- 11.1.1: Lucene Index Data Source

- 11.1.2: Statistics

- 11.1.3: Elasticsearch

- 11.1.4: Internal Data Sources

- 11.2: Dashboards

- 11.2.1: Queries

- 11.2.2: Internal Links

- 11.2.3: Direct URLs

- 11.3: Query

- 11.3.1: Stroom Query Language

- 11.4: Analytic Rules

- 11.5: Search Extraction

- 11.6: Dictionaries

- 12: Security

- 12.1: Deployment

- 12.2: User Accounts

- 12.3: Users and Groups

- 12.4: Application Permissions

- 12.5: Document Permissions

- 13: Tools

- 13.1: Command Line Tools

- 13.2: Stream Dump Tool

- 14: User Content

- 14.1: Editing Text

- 14.2: Naming Conventions

- 14.3: Documenting content

- 14.4: Finding Things

- 15: Viewing Data

- 16: Volumes

1 - Application Programming Interfaces (API)

Stroom has many public REST APIs to allow other systems to interact with Stroom. Everything that can be done via the user interface can also be done using the API.

All API methods are authenticated and authorised, so the permissions are exactly the same as if the API user were using the Stroom user interface directly.

1.1 - API Specification

Swagger UI

The APIs are available as a Swagger Open API specification in the following forms:

- JSON - stroom.json

- YAML - stroom.yaml

A dynamic Swagger user interface is also available for viewing all the API endpoints with details of parameters and data types. This can be found in two places.

- Published on GitHub for each minor version: Swagger user interface.

- Published on a running Stroom instance at the path /stroom/noauth/swagger-ui.

API Endpoints in Application Logs

The API methods are also all listed in the application logs when Stroom first boots up, e.g.

INFO 2023-01-17T11:09:30.244Z main i.d.j.DropwizardResourceConfig The following paths were found for the configured resources:

GET /api/account/v1/ (stroom.security.identity.account.AccountResourceImpl)
POST /api/account/v1/ (stroom.security.identity.account.AccountResourceImpl)
POST /api/account/v1/search (stroom.security.identity.account.AccountResourceImpl)
DELETE /api/account/v1/{id} (stroom.security.identity.account.AccountResourceImpl)
GET /api/account/v1/{id} (stroom.security.identity.account.AccountResourceImpl)
PUT /api/account/v1/{id} (stroom.security.identity.account.AccountResourceImpl)
GET /api/activity/v1 (stroom.activity.impl.ActivityResourceImpl)
POST /api/activity/v1 (stroom.activity.impl.ActivityResourceImpl)
POST /api/activity/v1/acknowledge (stroom.activity.impl.ActivityResourceImpl)
GET /api/activity/v1/current (stroom.activity.impl.ActivityResourceImpl)
...

You will also see entries in the logs for the various servlets exposed by Stroom, e.g.

INFO ... main s.d.common.Servlets Adding servlets to application path/port:
INFO ... main s.d.common.Servlets stroom.core.servlet.DashboardServlet => /stroom/dashboard
INFO ... main s.d.common.Servlets stroom.core.servlet.DynamicCSSServlet => /stroom/dynamic.css
INFO ... main s.d.common.Servlets stroom.data.store.impl.ImportFileServlet => /stroom/importfile.rpc
INFO ... main s.d.common.Servlets stroom.receive.common.ReceiveDataServlet => /stroom/noauth/datafeed
INFO ... main s.d.common.Servlets stroom.receive.common.ReceiveDataServlet => /stroom/noauth/datafeed/*
INFO ... main s.d.common.Servlets stroom.receive.common.DebugServlet => /stroom/noauth/debug
INFO ... main s.d.common.Servlets stroom.data.store.impl.fs.EchoServlet => /stroom/noauth/echo
INFO ... main s.d.common.Servlets stroom.receive.common.RemoteFeedServiceRPC => /stroom/noauth/remoting/remotefeedservice.rpc
INFO ... main s.d.common.Servlets stroom.core.servlet.StatusServlet => /stroom/noauth/status
INFO ... main s.d.common.Servlets stroom.core.servlet.SwaggerUiServlet => /stroom/noauth/swagger-ui
INFO ... main s.d.common.Servlets stroom.resource.impl.SessionResourceStoreImpl => /stroom/resourcestore/*
INFO ... main s.d.common.Servlets stroom.dashboard.impl.script.ScriptServlet => /stroom/script
INFO ... main s.d.common.Servlets stroom.security.impl.SessionListServlet => /stroom/sessionList
INFO ... main s.d.common.Servlets stroom.core.servlet.StroomServlet => /stroom/ui
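The REST endpoint lines can be pulled out of a log file with standard tools. The following is a minimal sketch, assuming the log layout shown above (an HTTP method followed by a path starting with /api/, possibly indented); the function name list_api_endpoints is hypothetical and the log file location depends on your deployment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unique "METHOD PATH" pairs that Stroom logs
# at boot. Reads log text on stdin, e.g. list_api_endpoints < app.log
list_api_endpoints() {
  # Keep only lines that start (after optional whitespace) with an HTTP
  # method followed by a path under /api/, then print the first two columns.
  grep -E '^[[:space:]]*(GET|POST|PUT|DELETE|PATCH|HEAD|OPTIONS)[[:space:]]+/api/' \
    | awk '{ print $1, $2 }' \
    | sort -u
}
```

Servlet lines (the ones containing =>) are deliberately excluded, as they are not REST resources.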

1.2 - Calling an API

Authentication

In order to use the API endpoints you will need to authenticate. Authentication is achieved using an API Key or Token.

You will need to create an API key either for your personal Stroom user account or for a shared processing user account. Whichever user account you use, it will need the necessary permissions for each API endpoint it is used with.

To create an API key (token) for a user:

- In the top menu, select:

- Click Create.

- Enter a suitable expiration date. Short expiry periods are more secure in case the key is compromised.

- Select the user account that you are creating the key for.

- Click

- Select the newly created API Key from the list of keys and double-click it to open it.

- Click to copy the key to the clipboard.

To make an authenticated API call you need to provide a header of the form Authorization:Bearer ${TOKEN}, where ${TOKEN} is your API Key as copied from Stroom.

Calling an API method with curl

This section describes how to call an API method using the command line tool curl as an example client.

Other clients can be used (e.g. Python), but these examples should provide enough help to get started with another client.

HTTP Requests Without a Body

Typically HTTP GET requests will have no body/payload.

Often PUT and DELETE requests will also have no body/payload.

The following is an example of how to call an HTTP GET method (i.e. a method that does not require a request body) on the API using curl.

Warning

The --insecure argument is used in this example which means certificate verification will not take place.

It is recommended not to use this argument and instead supply curl with client and certificate authority certificates to make a secure connection.

You can either call the API via Nginx (or a similar reverse proxy) at https://stroom-fqdn/api/some/path or, if you are making the call from one of the Stroom hosts, go direct using http://localhost:8080/api/some/path. The former is preferred as it is more secure.
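A minimal sketch of such a GET call follows. It assumes TOKEN holds your API key and uses the GET /api/activity/v1/current endpoint from the log listing purely as an example; the stroom_get function and the STROOM_URL variable are hypothetical. In line with the warning, it does not use --insecure.

```shell
#!/usr/bin/env bash
# Hypothetical base URL: defaults to the direct local address. Prefer the
# reverse proxy form (https://stroom-fqdn) when calling from another host.
STROOM_URL="${STROOM_URL:-http://localhost:8080}"

# Hypothetical wrapper for an authenticated GET request (no request body).
stroom_get() {
  local api_path="$1"   # e.g. /api/activity/v1/current
  curl \
    --silent \
    --show-error \
    --fail \
    --header "Authorization:Bearer ${TOKEN}" \
    "${STROOM_URL}${api_path}"
}

# Example usage (needs a running Stroom and a valid API key):
#   export TOKEN="<your API key>"
#   stroom_get /api/activity/v1/current
```

--fail makes curl exit with a non-zero status on an HTTP error response, which is useful when the call is part of a larger script.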

Requests With a Body

A lot of the API methods in Stroom require complex bodies/payloads for the request.

The following example is an HTTP POST to perform a reference data lookup on the local host.

Create a file req.json containing:

{
  "mapName": "USER_ID_TO_STAFF_NO_MAP",
  "effectiveTime": "2024-12-02T08:37:02.772Z",
  "key": "user2",
  "referenceLoaders": [
    {
      "loaderPipeline": {
        "name": "Reference Loader",
        "uuid": "da1c7351-086f-493b-866a-b42dbe990700",
        "type": "Pipeline"
      },
      "referenceFeed": {
        "name": "STAFF-NO-REFERENCE",
        "uuid": "350003fe-2b6c-4c57-95ed-2e6018c5b3d5",
        "type": "Feed"
      }
    }
  ]
}

Now send the request with curl.

This API method returns plain text or XML depending on the reference data value.
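A minimal sketch of that request, assuming req.json is in the current directory, TOKEN holds your API key and Stroom is reachable directly on localhost:8080; the ref_lookup function name is hypothetical. The @ prefix tells curl to read the request body from the named file.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: POST a reference data lookup request read from a file.
# --json (curl 7.82.0+) implies POST, sends the body and sets the JSON
# Content-Type and Accept headers.
ref_lookup() {
  local req_file="${1:-req.json}"
  curl \
    --silent \
    --show-error \
    --header "Authorization:Bearer ${TOKEN}" \
    --json "@${req_file}" \
    http://localhost:8080/api/refData/v1/lookup
}

# Example usage:
#   export TOKEN="<your API key>"
#   ref_lookup req.json
```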

Note

This assumes you are using curl version 7.82.0 or later that supports the --json argument.

If not, you will need to replace --json with --data and add these arguments:

--header "Content-Type: application/json"

--header "Accept: application/json"

Handling JSON

jq is a utility for processing JSON and is very useful when using the API methods.

For example to get just the build version from the node info endpoint:

1.3 - Query APIs

The Query APIs use common request/response models and end points for querying each type of data source held in Stroom. The request/response models are defined in stroom-query.

Currently Stroom exposes a set of query endpoints for the following data source types. Each data source type has its own endpoint due to differences in the way the data is queried and the restrictions imposed on the query terms. However, they all share the same API definition.

- stroom-index Queries - The Lucene based search indexes.

- Sql Statistics Query - Stroom’s SQL Statistics store.

- Searchable - Searchables are various data sources that allow you to search the internals of Stroom, e.g. local reference data store, annotations, processor tasks, etc.

The detailed documentation for the request/responses is contained in the Swagger definition linked to above.

Common endpoints

The standard query endpoints are:

Datasource

The Data Source endpoint is used to query Stroom for the details of a data source with a given DocRef. The details will include such things as the fields available and any restrictions on querying the data.

Search

The search endpoint is used to initiate a search against a data source or to request more data for an active search. A search request can be made in iterative mode, where Stroom performs the search and returns only the data it has immediately available. Subsequent requests for the same queryKey will also return whatever data is immediately available, on the expectation that the query will have found more results in the meantime. Requesting a search in non-iterative mode will result in the response being returned when the query has completed and all known results have been found.

The SearchRequest model is fairly complicated and contains not only the query terms but also a definition of how the data should be returned. A single SearchRequest can include multiple ResultRequest sections to return the queried data in multiple ways, e.g. as flat data and in an alternative aggregated form.

Stroom as a query builder

Stroom is able to export the JSON form of a SearchRequest model from its dashboards. This makes the dashboard a useful tool for building a query and the table settings to go with it. You can use the dashboard to define the data source, define the query terms tree and build a table definition (or definitions) to describe how the data should be returned. Then, clicking the download icon on the query pane of the dashboard will generate the SearchRequest JSON, which can be used immediately with the /search API or modified to suit.

Destroy

This endpoint is used to kill an active query by supplying the queryKey for the query in question.

Keep alive

Stroom will only hold search results from completed queries for a certain length of time. It will also terminate running queries that are too old. To prevent queries being aged off, you can call this endpoint to indicate to Stroom that you still have an interest in a particular query by supplying the query key.

1.4 - Export Content API

Stroom has API methods for exporting content in Stroom to a single zip file.

Export All - /api/export/v1

This method will export all content in Stroom to a single zip file. This is useful as an alternative backup of the content or where you need to export the content for import into another Stroom instance.

In order to perform a full export, the user (identified by their API Key) performing the export will need to ensure the following:

- Have created an API Key.

- The system property stroom.export.enabled is set to true.

- The user has the application permission Export Configuration or Administrator.

Only those items that the user has Read permission on will be exported, so to export all items, the user performing the export will need Read permission on all items or have the Administrator application permission.

Performing an Export

To export all readable content to a file called export.zip do something like the following:
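A minimal sketch, assuming the export is a GET request against /api/export/v1 that returns the zip as the response body, that TOKEN holds your API key and that Stroom is reached via a reverse proxy at https://stroom-fqdn (replace with your own host); the export_all function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: download all content readable by the API key's user.
# Assumes GET /api/export/v1 returns the export zip as the response body.
export_all() {
  local out_file="${1:-export.zip}"
  local base_url="${2:-https://stroom-fqdn}"
  curl \
    --silent \
    --fail \
    --request GET \
    --header "Authorization:Bearer ${TOKEN}" \
    --output "${out_file}" \
    "${base_url}/api/export/v1"
}

# Example usage:
#   export TOKEN="<your API key>"
#   export_all export.zip https://stroom-fqdn
```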

Note

If you encounter problems, replace --silent with --verbose to get more information.

Export Zip Format

The export zip will contain a number of files for each document exported. The number and type of these files will depend on the type of document, however every document will have the following two file types:

- .node - This file represents the document’s location in the explorer tree along with its name and UUID.

- .meta - This is the metadata for the document independent of the explorer tree. It contains the name, type and UUID of the document along with the unique identifier for the version of the document.

Documents may also have files like these (a non-exhaustive list):

- .json - JSON data holding the content of the document, as used for Dashboards.

- .txt - Plain text data holding the content of the document, as used for Dictionaries.

- .xml - XML data holding the content of the document, as used for Pipelines.

- .xsd - XML Schema content.

- .xsl - XSLT content.

The following is an example of the content of an export zip file:

TEST_FEED_CERT.Feed.fcee4270-a479-4cc0-a79c-0e8f18a4bad8.meta
TEST_FEED_CERT.Feed.fcee4270-a479-4cc0-a79c-0e8f18a4bad8.node
TEST_FEED_PROXY.Feed.f06d4416-8b0e-4774-94a9-729adc5633aa.meta
TEST_FEED_PROXY.Feed.f06d4416-8b0e-4774-94a9-729adc5633aa.node
TEST_REFERENCE_DATA_EVENTS_XXX.XSLT.4f74999e-9d69-47c7-97f7-5e88cc7459f7.meta
TEST_REFERENCE_DATA_EVENTS_XXX.XSLT.4f74999e-9d69-47c7-97f7-5e88cc7459f7.xsl
TEST_REFERENCE_DATA_EVENTS_XXX.XSLT.4f74999e-9d69-47c7-97f7-5e88cc7459f7.node
Standard_Pipelines/Reference_Loader.Pipeline.da1c7351-086f-493b-866a-b42dbe990700.xml
Standard_Pipelines/Reference_Loader.Pipeline.da1c7351-086f-493b-866a-b42dbe990700.meta
Standard_Pipelines/Reference_Loader.Pipeline.da1c7351-086f-493b-866a-b42dbe990700.node

Filenames

When documents are added to the zip, they are added with a directory structure that mirrors the explorer tree.

The filenames are of the form:

<name>.<type>.<UUID>.<extension>

As Stroom allows characters in document and folder names that would not be supported in operating system paths (or cause confusion), some characters in the name/directory parts are replaced by _ to avoid this. e.g. Dashboard 01/02/2020 would become Dashboard_01_02_2020.

If you need to see the contents of the zip as if viewing it within Stroom you can run this bash script in the root of the extracted zip.

#!/usr/bin/env bash

# Allow ** to match files in any sub-directory
shopt -s globstar

for node_file in **/*.node; do
  # Extract the values of the name= and path= lines from the .node file
  name="$(grep -o -P "(?<=name=).*" "${node_file}")"
  path="$(grep -o -P "(?<=path=).*" "${node_file}")"
  echo "./${path}/${name} (./${node_file})"
done

This will output something like:

./Standard Pipelines/Json/Events to JSON (./Standard_Pipelines/Json/Events_to_JSON.XSLT.1c3d42c2-f512-423f-aa6a-050c5cad7c0f.node)
./Standard Pipelines/Json/JSON Extraction (./Standard_Pipelines/Json/JSON_Extraction.Pipeline.13143179-b494-4146-ac4b-9a6010cada89.node)
./Standard Pipelines/Json/JSON Search Extraction (./Standard_Pipelines/Json/JSON_Search_Extraction.XSLT.a8c1aa77-fb90-461a-a121-d4d87d2ff072.node)
./Standard Pipelines/Reference Loader (./Standard_Pipelines/Reference_Loader.Pipeline.da1c7351-086f-493b-866a-b42dbe990700.node)

1.5 - Reference Data API

The reference data store has an API to allow other systems to access the reference data store.

/api/refData/v1/lookup

The /lookup endpoint requires the caller to provide details of the reference feed and loader pipeline so that, if the effective stream is not in the store, it can be loaded prior to performing the lookup.

It is useful for forcing a reference load into the store and for performing ad-hoc lookups.

Note

As reference data stores are local to a node, it is best to send the request to a node that does processing as it is more likely to have already loaded the data. If you send it to a UI node that does not do processing, it is likely to trigger a load as the data will not be there.

Below is an example of a lookup request file req.json.

{
  "mapName": "USER_ID_TO_LOCATION",
  "effectiveTime": "2020-12-02T08:37:02.772Z",
  "key": "jbloggs",
  "referenceLoaders": [
    {
      "loaderPipeline": {
        "name": "Reference Loader",
        "uuid": "da1c7351-086f-493b-866a-b42dbe990700",
        "type": "Pipeline"
      },
      "referenceFeed": {
        "name": "USER_ID_TOLOCATION-REFERENCE",
        "uuid": "60f9f51d-e5d6-41f5-86b9-ae866b8c9fa3",
        "type": "Feed"
      }
    }
  ]
}

This is an example of how to perform the lookup on the local host.
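A minimal sketch, assuming TOKEN holds your API key and Stroom is reachable on localhost:8080. Rather than editing req.json by hand, the hypothetical make_lookup_req function writes the request shown above with the key supplied as an argument (the map, feed and pipeline details are copied from the example). The hypothetical lookup_key function uses --data with explicit headers, so it also works with curl versions older than 7.82.0.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the lookup request above for a given key.
# The key is inserted verbatim, so it must not contain JSON special
# characters such as double quotes or backslashes.
make_lookup_req() {
  local key="$1"
  cat <<EOF
{
  "mapName": "USER_ID_TO_LOCATION",
  "effectiveTime": "2020-12-02T08:37:02.772Z",
  "key": "${key}",
  "referenceLoaders": [
    {
      "loaderPipeline": {
        "name": "Reference Loader",
        "uuid": "da1c7351-086f-493b-866a-b42dbe990700",
        "type": "Pipeline"
      },
      "referenceFeed": {
        "name": "USER_ID_TOLOCATION-REFERENCE",
        "uuid": "60f9f51d-e5d6-41f5-86b9-ae866b8c9fa3",
        "type": "Feed"
      }
    }
  ]
}
EOF
}

# Hypothetical wrapper: POST the request for one key to the local node.
lookup_key() {
  curl \
    --silent \
    --show-error \
    --request POST \
    --header "Authorization:Bearer ${TOKEN}" \
    --header "Content-Type: application/json" \
    --header "Accept: application/json" \
    --data "$(make_lookup_req "$1")" \
    http://localhost:8080/api/refData/v1/lookup
}

# Example usage:
#   export TOKEN="<your API key>"
#   lookup_key jbloggs
```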

1.6 - Explorer API

Creating a New Document

The explorer API is responsible for creation of all document types. The explorer API is used to create the initial skeleton of a document then the API specific to the document type in question is used to update the document skeleton with additional settings/content.

This is an example request file req.json:

{
  "docType": "Feed",
  "docName": "MY_FEED",
  "destinationFolder": {
    "type": "Folder",
    "uuid": "3dfab6a2-dbd5-46ee-b6e9-6df45f90cd85",
    "name": "My Folder",
    "rootNodeUuid": "0"
  },
  "permissionInheritance": "DESTINATION"
}

You need to set the following properties in the JSON:

- docType - The type of the document being created, see Documents.

- docName - The name of the new document.

- destinationFolder.uuid - The UUID of the destination folder (or 0 if the document is being created in the System root).

- rootNodeUuid - This is always 0 for the System root.

To create the skeleton document run the following:
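A minimal sketch, assuming the request above is saved as req.json, TOKEN holds your API key, Stroom is reachable on localhost:8080 and jq is installed. It uses the same POST /api/explorer/v2/create/ endpoint and .uuid response field as the example script in the Feed API section; the create_doc function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: create a skeleton document from a request file and
# print the UUID of the new document.
create_doc() {
  local req_file="${1:-req.json}"
  curl \
    --silent \
    --show-error \
    --request POST \
    --header "Authorization:Bearer ${TOKEN}" \
    --header "Content-Type: application/json" \
    --data "@${req_file}" \
    http://localhost:8080/api/explorer/v2/create/ \
    | jq -r '.uuid'
}

# Example usage:
#   export TOKEN="<your API key>"
#   new_uuid="$(create_doc req.json)"
```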

This will create the document and return its new UUID to stdout.

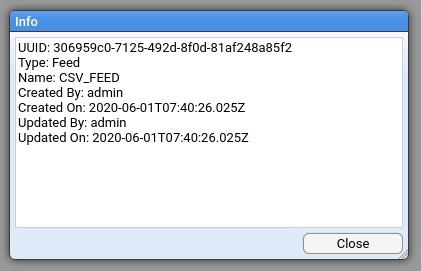

1.7 - Feed API

Creating a Feed

In order to create a Feed you must first create the skeleton document using the Explorer API.

Updating a Feed

To modify a feed you must first fetch the existing Feed document. This is done as follows:
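A minimal sketch, assuming TOKEN holds your API key and Stroom is reachable on localhost:8080. It uses the same GET /api/feed/v1/${feed_uuid} call as the example script below; the get_feed function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: fetch a Feed document as JSON.
get_feed() {
  local feed_uuid="$1"
  curl \
    --silent \
    --show-error \
    --header "Authorization:Bearer ${TOKEN}" \
    "http://localhost:8080/api/feed/v1/${feed_uuid}"
}

# Example usage:
#   export TOKEN="<your API key>"
#   feed_uuid="0dafc9c2-dcd8-4bb6-88ce-5ee228babe78"
#   get_feed "${feed_uuid}" | jq '.'
```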

Where ${feed_uuid} is the UUID of the feed in question.

This will return the Feed document JSON.

{
  "type": "Feed",
  "uuid": "0dafc9c2-dcd8-4bb6-88ce-5ee228babe78",
  "name": "MY_FEED",
  "version": "32dae12f-a696-4e0e-8acb-47cf0ad3c77f",
  "createTimeMs": 1718103980225,
  "updateTimeMs": 1718103980225,
  "createUser": "admin",
  "updateUser": "admin",
  "reference": false,
  "streamType": "Raw Events",
  "status": "RECEIVE"
}

You can use jq to modify this JSON to add/change any of the document settings.

Example Script

The following is an example bash script for creating and modifying multiple Feeds.

It requires curl and jq to run.

#!/usr/bin/env bash

set -e -o pipefail

main() {
  # Your API key
  local TOKEN="sak_d5752a32b2_mv1JYUYUuvRUDpikW75G5w4kQUq7EEjShQ9DiRjN14yEFonKTW42KbeQogui52gTjq9RDRufNEz2MXt1PRCThudzHU5RVpLMbZKThCgyyEX2y2sBrk31rYMJRKNg2yMG"
  # UUID of the dest folder
  local FOLDER_UUID="fc617580-8cf0-4ac3-93dd-93604603aef0"

  local feed_name
  local create_feed_req
  local feed_uuid
  local feed_doc

  for i in {1..2}; do
    # Use date to make a unique name for the test
    feed_name="MY_FEED_$(date +%s)_${i}"

    # Set the feed name and its destination
    create_feed_req=$(cat <<-END
{
  "docType": "Feed",
  "docName": "${feed_name}",
  "destinationFolder": {
    "type": "Folder",
    "uuid": "${FOLDER_UUID}",
    "rootNodeUuid": "0"
  },
  "permissionInheritance": "DESTINATION"
}
END
    )

    # Create the skeleton feed and extract its new UUID from the response
    feed_uuid=$( \
      curl \
        -s \
        -X POST \
        -H "Authorization:Bearer ${TOKEN}" \
        -H 'Content-Type: application/json' \
        --data "${create_feed_req}" \
        http://localhost:8080/api/explorer/v2/create/ \
      | jq -r '.uuid'
    )

    echo "Created feed $i with name '${feed_name}' and UUID '${feed_uuid}'"

    # Fetch the created feed
    feed_doc=$( \
      curl \
        -s \
        -H "Authorization:Bearer ${TOKEN}" \
        "http://localhost:8080/api/feed/v1/${feed_uuid}" \
    )

    echo -e "Skeleton Feed doc for '${feed_name}'\n$(jq '.' <<< "${feed_doc}")"

    # Add/modify properties on the feed doc
    feed_doc=$(jq '
      .classification="HUSH HUSH"
      | .encoding="UTF8"
      | .contextEncoding="ASCII"
      | .streamType="Events"
      | .volumeGroup="Default Volume Group"' <<< "${feed_doc}")

    #echo -e "Updated feed doc for '${feed_name}'\n$(jq '.' <<< "${feed_doc}")"

    # Update the feed with the new properties
    curl \
      -s \
      -X PUT \
      -H "Authorization:Bearer ${TOKEN}" \
      -H 'Content-Type: application/json' \
      --data "${feed_doc}" \
      "http://localhost:8080/api/feed/v1/${feed_uuid}" \
      > /dev/null

    # Fetch the updated feed
    feed_doc=$( \
      curl \
        -s \
        -H "Authorization:Bearer ${TOKEN}" \
        "http://localhost:8080/api/feed/v1/${feed_uuid}" \
    )

    echo -e "Updated Feed doc for '${feed_name}'\n$(jq '.' <<< "${feed_doc}")"
    echo
  done
}

main "$@"

2 - Background Jobs

There are various jobs that run in the background within Stroom. Among these are jobs that control pipeline processing, removing old files from the file system, checking the status of nodes and volumes etc. Each job executes at a different time depending on the purpose of the job. There are three ways that a job can be executed:

- Cron scheduled jobs execute periodically according to a cron schedule. These include jobs such as cleaning the file system where Stroom only needs to perform this action once a day and can do so overnight.

- Frequency controlled jobs are executed every X seconds, minutes, hours etc. Most of the jobs that execute with a given frequency are status checking jobs that perform a short lived action fairly frequently.

- Distributed jobs are only applicable to stream processing with a pipeline. A distributed job is executed by a worker node as soon as a worker has a thread available to execute it and the task distributor has work available.

A list of job types and their execution method can be seen by opening Jobs from the main menu.

Each job can be enabled/disabled at the job level. If you click on a job you will see an entry for each Stroom node in the lower pane. The job can be enabled/disabled at the node level for fine grained control of which nodes are running which jobs.

For a full list of all the jobs and details of what each one does, see the Job reference.

2.1 - Scheduler

Stroom has two main types of schedule: a simple frequency schedule that runs the job at a fixed time interval, or a more complex cron schedule.

Note

This scheduler and its syntax are also used for Analytic Rules.

Frequency Schedules

A frequency schedule is expressed as a fixed time interval.

The frequency schedule expression syntax is Stroom’s standard duration syntax and takes the form of a value followed by an optional unit suffix, e.g. 10m for ten minutes.

| Suffix | Time Unit |
|---|---|
| (none) | milliseconds |
| ms | milliseconds |
| s | seconds |
| m | minutes |
| h | hours |
| d | days |
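As a worked illustration of the table, the following hypothetical bash function converts a duration string such as 10m into milliseconds. It is a sketch of the syntax described above (whole-number values only, no suffix meaning milliseconds), not Stroom's own parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a duration like "10m" to milliseconds,
# following the suffix table above. Whole-number values only.
duration_to_ms() {
  local input="$1"
  if [[ ! "${input}" =~ ^([0-9]+)(ms|s|m|h|d)?$ ]]; then
    echo "Invalid duration: ${input}" >&2
    return 1
  fi
  # 10# forces base 10 so values with leading zeros are not read as octal
  local value=$(( 10#${BASH_REMATCH[1]} ))
  local unit="${BASH_REMATCH[2]}"
  case "${unit}" in
    ""|ms) echo "${value}" ;;
    s) echo $(( value * 1000 )) ;;
    m) echo $(( value * 60 * 1000 )) ;;
    h) echo $(( value * 60 * 60 * 1000 )) ;;
    d) echo $(( value * 24 * 60 * 60 * 1000 )) ;;
  esac
}

# Example usage:
#   duration_to_ms 10m   # prints 600000
```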

Cron Schedules

cron is a syntax for expressing schedules.

For full details of cron expressions see Cron Syntax.

Stroom uses a scheduler called Quartz which supports cron expressions for scheduling.

3 - Concepts

3.1 - Streams

Streams can be created either when data is directly POSTed into Stroom or during the proxy aggregation process. When data is directly POSTed to Stroom, the content of the POST is stored as one Stream. With proxy aggregation, multiple files in the proxy repository may be aggregated together into a single Stream.

Anatomy of a Stream

A Stream is made up of a number of parts of which the raw or cooked data is just one. In addition to the data the Stream can contain a number of other child stream types, e.g. Context and Meta Data.

The hierarchy of a stream is as follows:

- Stream nnn
  - Part [1 to *]
    - Data [1-1]
    - Context [0-1]
    - Meta Data [0-1]

Although all streams conform to the above hierarchy there are three main types of Stream that are used in Stroom:

- Non-segmented Stream - Raw events, Raw Reference

- Segmented Stream - Events, Reference

- Segmented Error Stream - Error

Segmented means that the data has been demarcated into segments or records.

Child Stream Types

Data

This is the actual data of the stream, e.g. the XML events, raw CSV, JSON, etc.

Context

This is additional contextual data that can be sent with the data. Context data can be used for reference data lookups.

Meta Data

This is the data about the Stream (e.g. the feed name, receipt time, user agent, etc.). This meta data either comes from the HTTP headers when the data was POSTed to Stroom or is added by Stroom or Stroom-Proxy on receipt/processing.

Non-Segmented Stream

The following is a representation of a non-segmented stream with three parts, each with Meta Data and Context child streams.

Raw Events and Raw Reference streams contain non-segmented data, e.g. a large batch of CSV, JSON, XML, etc. data. There is no notion of a record/event/segment in the data, it is simply data in any form (including malformed data) that is yet to be processed and demarcated into records/events, for example using a Data Splitter or an XML parser.

The Stream may be single-part or multi-part depending on how it is received. If it is the product of proxy aggregation then it is likely to be multi-part. Each part will have its own context and meta data child streams, if applicable.

Segmented Stream

The following is a representation of a segmented stream that contains three records (i.e. events) and the Meta Data.

Cooked Events and Reference data are forms of segmented data. The raw data has been parsed and split into records/events and the resulting data is stored in a way that allows Stroom to know where each record/event starts/ends. These streams only have a single part.

Error Stream

Error streams are similar to segmented Event/Reference streams in that they are single-part and have demarcated records (where each error/warning/info message is a record). Error streams do not have any Meta Data or Context child streams.

4 - Data Retention

By default Stroom will retain all the data it ingests and creates forever. It is likely that storage constraints/costs will mean that data needs to be deleted after a certain time. It is also likely that certain types of data may need to be kept for longer than other types.

Rules

Stroom allows for a set of data retention policy rules to be created to control at a fine grained level what data is deleted and what is retained.

The data retention rules are accessible by selecting Data Retention from the Tools menu. On first use the Rules tab of the Data Retention screen will show a single rule named Default Retain All Forever Rule. This is the implicit rule in Stroom that retains all data and is always in play unless another rule overrides it. This rule cannot be edited, moved or removed.

Rule Precedence

Rules have a precedence, with a lower rule number being a higher priority.

When running the data retention job, Stroom will look at each stream held on the system and the retention policy of the first rule (starting from the lowest numbered rule) that matches that stream will apply.

Once a matching rule is found, all other rules with higher rule numbers (lower priority) are ignored.

For example if rule 1 says to retain streams from feed X-EVENTS for 10 years and rule 2 says to retain streams from feeds *-EVENTS for 1 year then rule 1 would apply to streams from feed X-EVENTS and they would be kept for 10 years, but rule 2 would apply to feed Y-EVENTS and they would only be kept for 1 year.
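The first-match-wins behaviour can be illustrated with a bash case statement, which also stops at the first pattern that matches. This hypothetical sketch encodes only the two example rules above plus the implicit default rule; it does not call any Stroom API.

```shell
#!/usr/bin/env bash
# Illustration only: pick a retention period for a feed name using the
# example rules above. Like Stroom's rule precedence, `case` tries the
# patterns in order and uses the first one that matches.
retention_for_feed() {
  local feed_name="$1"
  case "${feed_name}" in
    X-EVENTS) echo "10 years" ;;   # rule 1
    *-EVENTS) echo "1 year" ;;     # rule 2
    *) echo "Forever" ;;           # Default Retain All Forever Rule
  esac
}

# Example usage:
#   retention_for_feed X-EVENTS   # prints "10 years"
#   retention_for_feed Y-EVENTS   # prints "1 year"
```

Swapping the order of the first two patterns would make X-EVENTS match *-EVENTS first, which is why rule order matters.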

Rules are re-numbered as new rules are added/deleted/moved.

Creating a Rule

To create a rule do the following:

- Click the icon to add a new rule.

- Edit the expression to define the data that the rule will match on.

- Provide a name for the rule to help describe what its purpose is.

- Set the retention period for data matching this rule, i.e. Forever or a set time period.

The new rule will be added at the top of the list of rules, i.e. with the highest priority. The and icons can be used to change the priority of the rule.

Rules can be enabled/disabled by clicking the checkbox next to the rule.

Changes to rules will not take effect until the icon is clicked.

Rules can also be deleted ( ) and copied ( ).

Impact Summary

When you have a number of complex rules it can be difficult to determine what data will actually be deleted next time the Policy Based Data Retention job runs. To help with this, Stroom has the Impact Summary tab that acts as a dry run for the active rules. The impact summary provides a count of the number of streams that will be deleted broken down by rule, stream type and feed name. On large systems with lots of data or complex rules, this query may take a long time to run.

The impact summary operates on the current state of the rules on the Rules tab whether saved or un-saved. This allows you to make a change to the rules and test its impact before saving it.

5 - Data Splitter

Data Splitter was created to transform text into XML. The XML produced is basic but can be processed further with XSLT to form any desired XML output.

Data Splitter works by using regular expressions to match a region of content or tokenizers to split content. The whole match or match group can then be output or passed to other expressions to further divide the matched data.

The root <dataSplitter> element controls the way content is read and buffered from the source. It then passes this content on to one or more child expressions that attempt to match the content. The child expressions attempt to match content one at a time in the order they are specified until one matches. The matching expression then passes the content that it has matched to other elements that either emit XML or apply other expressions to the content matched by the parent.

This process of content supply, match, (supply, match)*, emit is best illustrated in a simple CSV example. Note that the elements and attributes used in all examples are explained in detail in the element reference.

5.1 - Simple CSV Example

The following CSV data will be split up into separate fields using Data Splitter.

01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,

01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt

01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,

The first thing we need to do is match each record. Each record in a CSV file is delimited by a new line character. The following configuration will split the data into records using ‘\n’ as a delimiter:

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">

  <!-- Match each line using a new line character as the delimiter -->
  <split delimiter="\n"/>

</dataSplitter>

In the above example the ‘split’ tokenizer matches all of the supplied content up to the end of each line ready to pass each line of content on for further treatment.

We can now add a <group> element within <split> to take content matched by the tokenizer.

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">

  <!-- Match each line using a new line character as the delimiter -->
  <split delimiter="\n">
    <!-- Take the matched line (using group 1 ignores the delimiters,
         without this each match would include the new line character) -->
    <group value="$1">
    </group>
  </split>

</dataSplitter>

The <group> within the <split> takes the content matched by the <split> without the new line ‘\n’ delimiter by using match group 1 (see expression match references for details). For the first line of the input this gives:

01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,

The content selected by the <group> from its parent match can then be passed onto sub expressions for further matching:

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <!-- Match each line using a new line character as the delimiter -->
  <split delimiter="\n">
    <!-- Take the matched line (using group 1 ignores the delimiters,
         without this each match would include the new line character) -->
    <group value="$1">
      <!-- Match each value separated by a comma as the delimiter -->
      <split delimiter=",">
      </split>
    </group>
  </split>
</dataSplitter>

In the above example the additional <split> element within the <group> will match the content provided by the group repeatedly until it has used all of the group content.

The content matched by the inner <split> element can be passed to a <data> element to emit XML content.

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <!-- Match each line using a new line character as the delimiter -->
  <split delimiter="\n">
    <!-- Take the matched line (using group 1 ignores the delimiters,
         without this each match would include the new line character) -->
    <group value="$1">
      <!-- Match each value separated by a comma as the delimiter -->
      <split delimiter=",">
        <!-- Output the value from group 1 (as above using group 1
             ignores the delimiters, without this each value would include
             the comma) -->
        <data value="$1" />
      </split>
    </group>
  </split>
</dataSplitter>

In the above example, each match from the inner <split> is made available to the inner <data> element, which outputs the content of match group 1 (see expression match references for details).

The above configuration results in the following XML output for the whole input:

<?xml version="1.0" encoding="UTF-8"?>
<records
    xmlns="records:2"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="records:2 file://records-v2.0.xsd"
    version="2.0">
  <record>
    <data value="01/01/2010" />
    <data value="00:00:00" />
    <data value="192.168.1.100" />
    <data value="SOMEHOST.SOMEWHERE.COM" />
    <data value="user1" />
    <data value="logon" />
  </record>
  <record>
    <data value="01/01/2010" />
    <data value="00:01:00" />
    <data value="192.168.1.100" />
    <data value="SOMEHOST.SOMEWHERE.COM" />
    <data value="user1" />
    <data value="create" />
    <data value="c:\test.txt" />
  </record>
  <record>
    <data value="01/01/2010" />
    <data value="00:02:00" />
    <data value="192.168.1.100" />
    <data value="SOMEHOST.SOMEWHERE.COM" />
    <data value="user1" />
    <data value="logoff" />
  </record>
</records>
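To experiment with the same record/field structure outside Stroom, it can be modelled in a few lines of Java. This is a hypothetical sketch for illustration, not Data Splitter's implementation, and the class and method names are invented. It assumes, as the output above suggests, that an empty value (such as the trailing field of the logon record) produces no <data> element.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the nested <split>/<group>/<split>/<data> configuration above.
class CsvSplitSketch {

    static List<List<String>> records(String content) {
        List<List<String>> records = new ArrayList<>();
        // Outer <split delimiter="\n">: one piece per line. The pieces exclude the '\n',
        // which is what value="$1" achieves in the configuration.
        for (String line : content.split("\n")) {
            List<String> values = new ArrayList<>();
            // Inner <split delimiter=",">: limit -1 keeps trailing empty fields so the
            // empty-value rule below is applied explicitly.
            for (String value : line.split(",", -1)) {
                // Assumption taken from the sample output: empty values emit no <data>.
                if (!value.isEmpty()) {
                    values.add(value);
                }
            }
            records.add(values);
        }
        return records;
    }

    public static void main(String[] args) {
        String input = "01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,\n"
                + "01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\\test.txt\n"
                + "01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,\n";
        for (List<String> record : records(input)) {
            System.out.println(record); // one list per <record>
        }
    }
}
```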

5.2 - Simple CSV example with heading

In addition to referencing content produced by a parent element, it is often desirable to store content and reference it later. The following example of a CSV file with a heading line demonstrates how content can be stored in a variable and then referenced later on.

Input

This example will use a similar input to the one in the previous CSV example but also adds a heading line.

Date,Time,IPAddress,HostName,User,EventType,Detail
01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,
01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt
01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,

Configuration

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <!-- Match heading line (note that maxMatch="1" means that only the
       first line will be matched by this splitter) -->
  <split delimiter="\n" maxMatch="1">
    <!-- Store each heading in a named list -->
    <group>
      <split delimiter=",">
        <var id="heading" />
      </split>
    </group>
  </split>
  <!-- Match each record -->
  <split delimiter="\n">
    <!-- Take the matched line -->
    <group value="$1">
      <!-- Split the line up -->
      <split delimiter=",">
        <!-- Output the stored heading for each iteration and the value
             from group 1 -->
        <data name="$heading$1" value="$1" />
      </split>
    </group>
  </split>
</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>
<records
    xmlns="records:2"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="records:2 file://records-v2.0.xsd"
    version="2.0">
  <record>
    <data name="Date" value="01/01/2010" />
    <data name="Time" value="00:00:00" />
    <data name="IPAddress" value="192.168.1.100" />
    <data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />
    <data name="User" value="user1" />
    <data name="EventType" value="logon" />
  </record>
  <record>
    <data name="Date" value="01/01/2010" />
    <data name="Time" value="00:01:00" />
    <data name="IPAddress" value="192.168.1.100" />
    <data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />
    <data name="User" value="user1" />
    <data name="EventType" value="create" />
    <data name="Detail" value="c:\test.txt" />
  </record>
  <record>
    <data name="Date" value="01/01/2010" />
    <data name="Time" value="00:02:00" />
    <data name="IPAddress" value="192.168.1.100" />
    <data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />
    <data name="User" value="user1" />
    <data name="EventType" value="logoff" />
  </record>
</records>
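The heading technique pairs the n-th stored heading with the n-th value of each later line. The hypothetical Java sketch below models that pairing. It is not how Data Splitter stores variables internally, and, like the output above, it emits nothing for empty values.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the heading example: store headings once, then name each value.
class CsvHeadingSketch {

    static List<Map<String, String>> records(String content) {
        String[] lines = content.split("\n");
        // Like <split delimiter="\n" maxMatch="1"> with <var id="heading"/>: only the first
        // line is used, and each of its comma-separated values is stored in order.
        String[] headings = lines[0].split(",", -1);
        List<Map<String, String>> records = new ArrayList<>();
        for (int i = 1; i < lines.length; i++) {
            String[] values = lines[i].split(",", -1);
            Map<String, String> record = new LinkedHashMap<>();
            // Like name="$heading$1": the n-th value is named by the n-th stored heading.
            for (int n = 0; n < values.length && n < headings.length; n++) {
                if (!values[n].isEmpty()) { // empty values emit nothing, as in the output above
                    record.put(headings[n], values[n]);
                }
            }
            records.add(record);
        }
        return records;
    }

    public static void main(String[] args) {
        String input = "Date,Time,IPAddress,HostName,User,EventType,Detail\n"
                + "01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,\n"
                + "01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\\test.txt\n";
        records(input).forEach(System.out::println);
    }
}
```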

5.3 - Complex example with regex and user defined names

The following example uses a real-world Apache log and demonstrates the use of regular expressions rather than simple ‘split’ tokenizers. The usage and structure of regular expressions is outside the scope of this document, but Data Splitter uses Java’s standard regular expression library (java.util.regex), whose Perl-style syntax is documented in numerous places. Note that this library is not POSIX compliant.

This example also demonstrates that output names can be hard-coded when the input carries no field name information, which makes later XSLT conversion easier. It also shows that any match can be divided into further fields with additional expressions, and that <data> elements can be nested to provide structure if needed.

Input

192.168.1.100 - "-" [12/Jul/2012:11:57:07 +0000] "GET /doc.htm HTTP/1.1" 200 4235 "-" "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)"
192.168.1.100 - "-" [12/Jul/2012:11:57:07 +0000] "GET /default.css HTTP/1.1" 200 3494 "http://some.server:8080/doc.htm" "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)"

Configuration

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <!--
  Standard Apache Format
  %h - host name should be ok without quotes
  %l - Remote logname (from identd, if supplied). This will return a dash unless IdentityCheck is set On.
  \"%u\" - user name should be quoted to deal with DNs
  %t - time is added in square brackets so is contained for parsing purposes
  \"%r\" - URL is quoted
  %>s - Response code doesn't need to be quoted as it is a single number
  %b - The size in bytes of the response sent to the client
  \"%{Referer}i\" - Referrer is quoted so that’s ok
  \"%{User-Agent}i\" - User agent is quoted so also ok
  LogFormat "%h %l \"%u\" %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined
  -->
  <!-- Match line -->
  <split delimiter="\n">
    <group value="$1">
      <!-- Provide a regular expression for the whole line with match
           groups for each field we want to split out. Double quotes inside
           the attribute value must be written as &quot; -->
      <regex pattern="^([^ ]+) ([^ ]+) &quot;([^&quot;]+)&quot; \[([^\]]+)] &quot;([^&quot;]+)&quot; ([^ ]+) ([^ ]+) &quot;([^&quot;]+)&quot; &quot;([^&quot;]+)&quot;">
        <data name="host" value="$1" />
        <data name="log" value="$2" />
        <data name="user" value="$3" />
        <data name="time" value="$4" />
        <data name="url" value="$5">
          <!-- Take the 5th regular expression group and pass it to
               another expression to divide into smaller components -->
          <group value="$5">
            <regex pattern="^([^ ]+) ([^ ]+) ([^ /]*)/([^ ]*)">
              <data name="httpMethod" value="$1" />
              <data name="url" value="$2" />
              <data name="protocol" value="$3" />
              <data name="version" value="$4" />
            </regex>
          </group>
        </data>
        <data name="response" value="$6" />
        <data name="size" value="$7" />
        <data name="referrer" value="$8" />
        <data name="userAgent" value="$9" />
      </regex>
    </group>
  </split>
</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>
<records
    xmlns="records:2"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="records:2 file://records-v2.0.xsd"
    version="2.0">
  <record>
    <data name="host" value="192.168.1.100" />
    <data name="log" value="-" />
    <data name="user" value="-" />
    <data name="time" value="12/Jul/2012:11:57:07 +0000" />
    <data name="url" value="GET /doc.htm HTTP/1.1">
      <data name="httpMethod" value="GET" />
      <data name="url" value="/doc.htm" />
      <data name="protocol" value="HTTP" />
      <data name="version" value="1.1" />
    </data>
    <data name="response" value="200" />
    <data name="size" value="4235" />
    <data name="referrer" value="-" />
    <data name="userAgent" value="Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)" />
  </record>
  <record>
    <data name="host" value="192.168.1.100" />
    <data name="log" value="-" />
    <data name="user" value="-" />
    <data name="time" value="12/Jul/2012:11:57:07 +0000" />
    <data name="url" value="GET /default.css HTTP/1.1">
      <data name="httpMethod" value="GET" />
      <data name="url" value="/default.css" />
      <data name="protocol" value="HTTP" />
      <data name="version" value="1.1" />
    </data>
    <data name="response" value="200" />
    <data name="size" value="3494" />
    <data name="referrer" value="http://some.server:8080/doc.htm" />
    <data name="userAgent" value="Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)" />
  </record>
</records>
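Because Data Splitter uses Java's regular expression library, the two patterns above can be checked directly with java.util.regex before they go into a configuration. The sketch below does that. Java string escaping takes the place of the XML &quot; entities, the nested url fields are flattened into one map, and the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Checks the two patterns from the Apache example with java.util.regex.
class ApacheLogSketch {
    // Whole-line pattern, written as a Java string literal.
    static final Pattern LINE = Pattern.compile(
            "^([^ ]+) ([^ ]+) \"([^\"]+)\" \\[([^\\]]+)] \"([^\"]+)\" ([^ ]+) ([^ ]+) \"([^\"]+)\" \"([^\"]+)\"");
    // Nested pattern applied to group 5 (the request line).
    static final Pattern REQUEST = Pattern.compile("^([^ ]+) ([^ ]+) ([^ /]*)/([^ ]*)");

    // Returns the named fields, or null if either pattern fails to match.
    static Map<String, String> parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.find()) {
            return null;
        }
        Matcher r = REQUEST.matcher(m.group(5));
        if (!r.find()) {
            return null;
        }
        Map<String, String> f = new LinkedHashMap<>();
        f.put("host", m.group(1));
        f.put("log", m.group(2));
        f.put("user", m.group(3));
        f.put("time", m.group(4));
        f.put("httpMethod", r.group(1));
        f.put("url", r.group(2));
        f.put("protocol", r.group(3));
        f.put("version", r.group(4));
        f.put("response", m.group(6));
        f.put("size", m.group(7));
        f.put("referrer", m.group(8));
        f.put("userAgent", m.group(9));
        return f;
    }

    public static void main(String[] args) {
        System.out.println(parse("192.168.1.100 - \"-\" [12/Jul/2012:11:57:07 +0000] "
                + "\"GET /doc.htm HTTP/1.1\" 200 4235 \"-\" \"Mozilla/4.0 (compatible; MSIE 8.0)\""));
    }
}
```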

5.4 - Multi Line Example

This example uses a multi-line file where each record is split over many lines. There are various ways this data could be treated, but this example forms a record from the data created when some fictitious query starts, plus the subsequent query results.

Input

09/07/2016 14:49:36 User = user1
09/07/2016 14:49:36 Query = some query

09/07/2016 16:34:40 Results:
09/07/2016 16:34:40 Line 1: result1
09/07/2016 16:34:40 Line 2: result2
09/07/2016 16:34:40 Line 3: result3
09/07/2016 16:34:40 Line 4: result4

09/07/2009 16:35:21 User = user2
09/07/2009 16:35:21 Query = some other query

09/07/2009 16:45:36 Results:
09/07/2009 16:45:36 Line 1: result1
09/07/2009 16:45:36 Line 2: result2
09/07/2009 16:45:36 Line 3: result3
09/07/2009 16:45:36 Line 4: result4

Configuration

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <!-- Match each record. We want to treat the query and results as a single event so match the two sets of data separated by a double new line -->
  <regex pattern="\n*((.*\n)+?\n(.*\n)+?\n)|\n*(.*\n?)+">
    <group>
      <!-- Split the record into query and results -->
      <regex pattern="(.*?)\n\n(.*)" dotAll="true">
        <!-- Create a data element to output query data -->
        <data name="query">
          <group value="$1">
            <!-- We only want to output the date and time from the first line. -->
            <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">
              <data name="date" value="$1" />
              <data name="time" value="$2" />
              <data name="$3" value="$4" />
            </regex>
            <!-- Output all other values -->
            <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">
              <data name="$3" value="$4" />
            </regex>
          </group>
        </data>
        <!-- Create a data element to output result data -->
        <data name="results">
          <group value="$2">
            <!-- We only want to output the date and time from the first line. -->
            <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">
              <data name="date" value="$1" />
              <data name="time" value="$2" />
              <data name="$3" value="$4" />
            </regex>
            <!-- Output all other values -->
            <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">
              <data name="$3" value="$4" />
            </regex>
          </group>
        </data>
      </regex>
    </group>
  </regex>
</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>
<records
    xmlns="records:2"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="records:2 file://records-v2.0.xsd"
    version="2.0">
  <record>
    <data name="query">
      <data name="date" value="09/07/2016" />
      <data name="time" value="14:49:36" />
      <data name="User" value="user1" />
      <data name="Query" value="some query" />
    </data>
    <data name="results">
      <data name="date" value="09/07/2016" />
      <data name="time" value="16:34:40" />
      <data name="Results" />
      <data name="Line 1" value="result1" />
      <data name="Line 2" value="result2" />
      <data name="Line 3" value="result3" />
      <data name="Line 4" value="result4" />
    </data>
  </record>
  <record>
    <data name="query">
      <data name="date" value="09/07/2009" />
      <data name="time" value="16:35:21" />
      <data name="User" value="user2" />
      <data name="Query" value="some other query" />
    </data>
    <data name="results">
      <data name="date" value="09/07/2009" />
      <data name="time" value="16:45:36" />
      <data name="Results" />
      <data name="Line 1" value="result1" />
      <data name="Line 2" value="result2" />
      <data name="Line 3" value="result3" />
      <data name="Line 4" value="result4" />
    </data>
  </record>
</records>
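The two inner patterns of this example can be tried on one record with java.util.regex. The pattern "(.*?)\n\n(.*)" with DOTALL splits the record at its first blank line, and the per-line pattern picks out date, time, name and value. This hypothetical sketch makes two assumptions. First, it takes the fields of each line to be separated by tab characters, as the \t in the patterns implies, although the sample input above shows spaces. Second, it trims every field, because the raw groups keep the spaces around ‘=’ and ‘:’ while the output above shows none. Whether Data Splitter itself trims is not stated here.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class MultiLineSketch {
    // <regex pattern="(.*?)\n\n(.*)" dotAll="true">: split a record at its first blank line.
    static final Pattern QUERY_AND_RESULTS = Pattern.compile("(.*?)\\n\\n(.*)", Pattern.DOTALL);
    // Per-line pattern from the configuration: date, time, name and value.
    static final Pattern FIELDS = Pattern.compile("([^\\t]*)\\t([^\\t]*)[\\t]*([^=:]*)[=:]*(.*)");

    // Returns {query part, results part}, or null if the record has no blank line.
    static String[] queryAndResults(String record) {
        Matcher m = QUERY_AND_RESULTS.matcher(record);
        return m.find() ? new String[] {m.group(1), m.group(2)} : null;
    }

    // Returns {date, time, name, value} for each line. [^\t]* can also match the '\n' left
    // over from the previous line, so every field is trimmed (an assumption of this sketch).
    static List<String[]> fields(String part) {
        List<String[]> out = new ArrayList<>();
        Matcher m = FIELDS.matcher(part);
        while (m.find()) {
            out.add(new String[] {m.group(1).trim(), m.group(2).trim(), m.group(3).trim(), m.group(4).trim()});
        }
        return out;
    }

    public static void main(String[] args) {
        String record = "09/07/2016\t14:49:36\tUser = user1\n"
                + "09/07/2016\t14:49:36\tQuery = some query\n\n"
                + "09/07/2016\t16:34:40\tResults:\n"
                + "09/07/2016\t16:34:40\tLine 1: result1\n";
        for (String part : queryAndResults(record)) {
            for (String[] f : fields(part)) {
                System.out.println(String.join(" | ", f));
            }
        }
    }
}
```

The configuration applies the per-line pattern twice, first with maxMatch="1" to keep the date and time of the first line and then for the remaining lines. The sketch simply returns every line so that both uses are visible.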

5.5 - Element Reference

There are various elements used in a Data Splitter configuration to control behaviour. Each of these elements can be categorised as one of the following:

5.5.1 - Content Providers

Content providers take some content from the input source or elsewhere (see fixed strings) and provide it to one or more expressions.

Both the root element <dataSplitter> and <group> elements are content providers.

Root element <dataSplitter>

The root element of a Data Splitter configuration is <dataSplitter>.

It supplies content from the input source to one or more expressions defined within it.

The root element controls how content is buffered, and how errors are handled when child expressions do not match all of the content it supplies.

Attributes

The following attributes can be added to the <dataSplitter> root element:

ignoreErrors

Data Splitter generates errors if not all of the content is matched by the regular expressions beneath the <dataSplitter> or within <group> elements.

The error messages are intended to aid the user in writing good Data Splitter configurations.

The intent is to indicate when the input data is not being matched fully and therefore possibly skipping some important data.

Despite this, in some cases it is laborious to have to write expressions to match all content.

In these cases it is preferable to add this attribute to ignore these errors.

However, it is often better to write expressions that capture all of the supplied content and discard unwanted characters.

This attribute also affects errors generated by the use of the minMatch attribute on <regex> which is described later on.

Take the following example input:

Name1,Name2,Name3
value1,value2,value3 # a useless comment
value1,value2,value3 # a useless comment

This could be matched with the following configuration:

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <regex id="heading" pattern=".+" maxMatch="1">
    …
  </regex>
  <regex id="body" pattern="\n[^#]+">
    …
  </regex>
</dataSplitter>

The above configuration would only match each record line up to the start of its comment. The heading line and the ‘value1,value2,value3 ’ part of each record line would be matched, but each ‘# a useless comment’ would be left unmatched.

This may well be the desired functionality, but if there were useful content within the comment it would be lost. Because of this, Data Splitter warns you when expressions fail to match all of the content presented, so that you can make sure you aren’t missing anything important. In the above example it is obvious that this is the required behaviour, but in more complex cases you might otherwise be unaware that your expressions were losing data.

To maintain this assurance that you are handling all content it is usually best to write expressions to explicitly match all content even though you may do nothing with some matches, e.g.

<?xml version="1.0" encoding="UTF-8"?>
<dataSplitter
    xmlns="data-splitter:3"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"
    version="3.0">
  <regex id="heading" pattern=".+" maxMatch="1">
    …
  </regex>
  <regex id="body" pattern="\n([^#]+)#.+">
    …
  </regex>
</dataSplitter>

The above example would match all of the content and would therefore not generate errors. Sub-expressions of ‘body’ could use match group 1 and ignore the comment.

However, as previously stated, it can be difficult to write expressions that only match content that is to be discarded.

In these cases ignoreErrors can be used to suppress errors caused by unmatched content.
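To see exactly which characters the two ‘body’ patterns above leave unmatched, sequential matching can be approximated with java.util.regex: match the heading once, then keep finding body matches from the current position and collect whatever is jumped over. This is a hypothetical model for illustration only. Data Splitter's own buffering and error reporting are not reproduced, and the class and method names are invented.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model of sequential matching, used only to show which characters
// the two example configurations leave unmatched.
class UnmatchedContentSketch {

    static String unmatched(String input, String bodyRegex) {
        StringBuilder skipped = new StringBuilder();
        int pos = 0;
        // <regex id="heading" pattern=".+" maxMatch="1">: match the first line once.
        Matcher heading = Pattern.compile(".+").matcher(input);
        if (heading.find()) {
            skipped.append(input, pos, heading.start());
            pos = heading.end();
        }
        // <regex id="body" ...>: keep matching from the current position.
        Matcher body = Pattern.compile(bodyRegex).matcher(input);
        while (pos < input.length() && body.find(pos)) {
            skipped.append(input, pos, body.start()); // characters jumped over
            if (body.end() == pos) {
                break; // guard against looping on an empty match
            }
            pos = body.end();
        }
        skipped.append(input, pos, input.length()); // anything left after the last match
        return skipped.toString();
    }

    public static void main(String[] args) {
        String input = "Name1,Name2,Name3\n"
                + "value1,value2,value3 # a useless comment\n"
                + "value1,value2,value3 # a useless comment";
        System.out.println("[" + unmatched(input, "\\n[^#]+") + "]");
        System.out.println("[" + unmatched(input, "\\n([^#]+)#.+") + "]");
    }
}
```

For the first pattern, the sketch reports both comments as unmatched. For the second, it reports nothing, which corresponds to the configuration that would not generate errors.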

bufferSize (Advanced)

This is an optional attribute used to tune the size of the character buffer used by Data Splitter. The default size is 20000 characters and should be fine for most translations. The minimum value that this can be set to is 20000 characters and the maximum is 1000000000. The only reason to specify this attribute is when individual records are bigger than 10000 characters which is rarely the case.

Group element <group>

Groups behave in a similar way to the root element in that they provide content for one or more inner expressions to deal with, e.g.

<group value="$1">
  <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">
    ...
  </regex>
  <regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">
    ...
  </regex>
</group>

Attributes

As the <group> element is a content provider it also includes the same ignoreErrors attribute which behaves in the same way.

The complete list of attributes for the <group> element is as follows:

id

When Data Splitter reports errors it outputs an XPath to describe the part of the configuration that generated the error, e.g.

DSParser [2:1] ERROR: Expressions failed to match all of the content provided by group: regex[0]/group[0]/regex[3]/group[1] : <group>

It is often a little difficult to identify the configuration element that generated the error by looking at the path and the element description, particularly when multiple elements are the same, e.g. many <group> elements without attributes.

To make identification easier you can add an ‘id’ attribute to any element in the configuration resulting in error descriptions as follows:

DSParser [2:1] ERROR: Expressions failed to match all of the content provided by group: regex[0]/group[0]/regex[3]/group[1] : <group id="myGroupId">

value

This attribute determines what content to present to child expressions.

By default the entire content matched by a group’s parent expression is passed on by the group to child expressions.

If required, content from a specific match group in the parent expression can be passed to child expressions using the value attribute, e.g. value="$1".

In addition to this, content can be composed in the same way as it is for data names and values.

See Also

Match references for a full description of match references.

ignoreErrors

This behaves in the same way as for the root element.

matchOrder

This is an optional attribute used to control how content is consumed by expression matches.

Content can be consumed in sequence or in any order using matchOrder="sequence" or matchOrder="any".

If the attribute is not specified, Data Splitter will default to matching in sequence.

When matching in sequence, each match consumes some content and the content position is moved beyond the match ready for the subsequent match. However, in some cases the order of these constructs is not predictable, e.g. we may sometimes be presented with:

Value1=1 Value2=2

… or sometimes with:

Value2=2 Value1=1

Using a sequential match order, the following example would find both values in Value1=1 Value2=2:

<group>
  <regex pattern="Value1=([^ ]*)">
    ...
  </regex>
  <regex pattern="Value2=([^ ]*)">
    ...
  </regex>
</group>

… but this example would skip over Value2 and only find the value of Value1 if the input was Value2=2 Value1=1.

To be able to deal with content that contains these constructs in either order we need to change the match order to any.

When matching in any order, each match removes the matched section from the content rather than moving the position past the match so that all remaining content can be matched by subsequent expressions.

In the following example the first expression would match and remove Value1=1 from the supplied content and the second expression would be presented with Value2=2 which it could also match.

<group matchOrder="any">
  <regex pattern="Value1=([^ ]*)">
    ...
  </regex>
  <regex pattern="Value2=([^ ]*)">
    ...
  </regex>
</group>

Sequential matching is the default because tokens are most often in sequence, and consuming content in this way is more efficient: the parser does not need to copy the content to chop out matched sections, as it must when matching in any order. It is only necessary to use matchOrder="any" when fields that are identifiable with a specific match can occur in any order.
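The difference between the two match orders can be modelled with java.util.regex. In "sequence" mode each search starts at the current position, which then moves past the match. In "any" mode each search covers all remaining content, and the matched section is cut out. This is a hypothetical sketch of the behaviour described above, not Data Splitter's code.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model contrasting matchOrder="sequence" with matchOrder="any".
class MatchOrderSketch {

    // Applies each pattern once, in order, and returns group 1 of every match found.
    static List<String> match(String content, boolean anyOrder, String... patterns) {
        List<String> found = new ArrayList<>();
        StringBuilder remaining = new StringBuilder(content);
        int pos = 0;
        for (String p : patterns) {
            Matcher m = Pattern.compile(p).matcher(remaining);
            // "sequence" searches from the current position; "any" searches all remaining content.
            if (!m.find(anyOrder ? 0 : pos)) {
                continue;
            }
            found.add(m.group(1));
            if (anyOrder) {
                remaining.delete(m.start(), m.end()); // chop the matched section out
            } else {
                pos = m.end(); // move the position beyond the match
            }
        }
        return found;
    }

    public static void main(String[] args) {
        String[] patterns = {"Value1=([^ ]*)", "Value2=([^ ]*)"};
        System.out.println(match("Value2=2 Value1=1", false, patterns)); // prints [1]
        System.out.println(match("Value2=2 Value1=1", true, patterns));  // prints [1, 2]
    }
}
```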

reverse

Occasionally it is desirable to reverse the content presented by a group to child expressions. This is because it is sometimes easier to form a pattern by matching content in reverse.

Take the following example content of name/value pairs delimited by =, where names contain no spaces, values may contain several spaces, and subsequent pairs are separated by a single space:

ipAddress=123.123.123.123 zones=Zone 1, Zone 2, Zone 3 location=loc1 A user=An end user serverName=bigserver

We could write a pattern that matches each name value pair by matching up to the start of the next name, e.g.

<regex pattern="([^=]+)=(.+?)( [^=]+=)">

This would match the following:

ipAddress=123.123.123.123 zones=

Here we are capturing the name and value for each pair in separate groups but the pattern has to also match the name from the next name value pair to find the end of the value. By default Data Splitter will move the content buffer to the end of the match ready for subsequent matches so the next name will not be available for matching.

In addition to matching too much content, the above example also uses a reluctant quantifier, .+?. Use of reluctant quantifiers almost always impacts performance, so they should be avoided if at all possible.

A better way to match the example content is to match the input in reverse, reading characters from right to left.

The following example demonstrates this:

<group reverse="true">
  <regex pattern="([^=]+)=([^ ]+)">
    <data name="$2" value="$1" />
  </regex>
</group>

Using the reverse attribute on the parent group causes content to be supplied to all child expressions in reverse order. In the above example this allows the pattern to match values followed by names which enables us to cope with the fact that values have multiple spaces but names have no spaces.

Content is only presented to child regular expressions in reverse. When referencing values from match groups the content is returned in the correct order, e.g. the above example would return:

<data name="ipAddress" value="123.123.123.123" />
<data name="zones" value="Zone 1, Zone 2, Zone 3" />
<data name="location" value="loc1 A" />
<data name="user" value="An end user" />
<data name="serverName" value="bigserver" />

The reverse feature isn’t needed very often but there are a few cases where it really helps produce the desired output without the complexity and performance overhead of a reluctant match.

An alternative to using the reverse attribute is to use the original reluctant expression example but tell Data Splitter to make the subsequent name available for the next match by not advancing the content beyond the end of the previous value. This is done by using the advance attribute on the <regex>. However, the reverse attribute represents a better way to solve this particular problem and allows a simpler and more efficient regular expression to be used.
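The reverse technique can be reproduced with java.util.regex. Reverse the content, match "([^=]+)=([^ ]+)", then reverse each captured group back so that it reads left to right. The raw groups still include the single space that separates one pair from the next, so this hypothetical sketch trims each value. How Data Splitter itself handles that space is not stated here. For the sample input, the sketch yields "loc1 A" for location, because the A before user= belongs to that value.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model of <group reverse="true"> with <regex pattern="([^=]+)=([^ ]+)">.
class ReverseMatchSketch {

    static String reverse(String s) {
        return new StringBuilder(s).reverse().toString();
    }

    // Returns name -> value for each pair. Pairs are found right to left.
    static Map<String, String> pairs(String content) {
        Map<String, String> result = new LinkedHashMap<>();
        // On reversed content, group 1 is a reversed value and group 2 a reversed name.
        Matcher m = Pattern.compile("([^=]+)=([^ ]+)").matcher(reverse(content));
        while (m.find()) {
            // Un-reverse both groups; trim the separating space the value picked up.
            result.put(reverse(m.group(2)), reverse(m.group(1)).trim());
        }
        return result;
    }

    public static void main(String[] args) {
        String content = "ipAddress=123.123.123.123 zones=Zone 1, Zone 2, Zone 3 "
                + "location=loc1 A user=An end user serverName=bigserver";
        pairs(content).forEach((name, value) -> System.out.println(name + " = " + value));
    }
}
```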

5.5.2 - Expressions

Expressions match some data supplied by a parent content provider. The content matched by an expression depends on the type of expression and how it is configured.

The <split>, <regex> and <all> elements are all expressions and match content as described below.

The <split> element

The <split> element directs Data Splitter to break up content using a specified character sequence as a delimiter.

In addition to this it is possible to specify characters that are used to escape the delimiter as well as characters that contain or “quote” a value that may include the delimiter sequence but allow it to be ignored.

Attributes

The <split> element has the following attributes:

id

Optional attribute used to debug the location of expressions causing errors, see id.

delimiter

A required attribute used to specify the character string that will be used as a delimiter to split the supplied content unless it is preceded by an escape character or within a container if specified. Several of the previous examples use this attribute.

escape

An optional attribute used to specify a character sequence that is used to escape the delimiter. Many delimited text formats have an escape character that tells a parser to ignore the following delimiter; a character such as ‘\’ is often used to escape the character that follows it so that it is not treated as a delimiter. When specified, this escape sequence also applies to any container characters that may be specified.

containerStart

An optional attribute used to specify a character sequence that will make this expression ignore the presence of delimiters until an end container is found. If this container sequence is preceded by the specified escape sequence, it is ignored and the expression continues matching characters up to a delimiter.

If used, containerEnd must also be specified.

To exclude the container characters from the match, use match group 1 instead of match group 0.

containerEnd

An optional attribute used to specify a character sequence that will make this expression stop ignoring the presence of delimiters if it is currently in a container. If this container sequence is preceded by the specified escape sequence, it is ignored and the expression continues matching characters while ignoring the presence of any delimiter.

If used, containerStart must also be specified.

To exclude the container characters from the match, use match group 1 instead of match group 0.
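How delimiter, escape, containerStart and containerEnd interact can be illustrated with a small hand-written tokenizer. This is a hypothetical sketch, not Data Splitter's implementation, and it makes three assumptions of its own. The escape applies to the single character that follows it. Escape characters are kept in the output while container characters are dropped, roughly what match group 1 gives you. A trailing empty token is not returned.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical tokenizer illustrating the <split> delimiter, escape and container attributes.
class SplitSketch {

    static List<String> split(String content, String delimiter, String escape,
                              String containerStart, String containerEnd) {
        List<String> tokens = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        boolean inContainer = false;
        int i = 0;
        while (i < content.length()) {
            if (escape != null && content.startsWith(escape, i)) {
                // Keep the escape and the next character, so that character is never
                // treated as a delimiter or container.
                int next = Math.min(content.length(), i + escape.length() + 1);
                current.append(content, i, next);
                i = next;
            } else if (!inContainer && containerStart != null && content.startsWith(containerStart, i)) {
                inContainer = true; // delimiters are ignored from here on
                i += containerStart.length();
            } else if (inContainer && content.startsWith(containerEnd, i)) {
                inContainer = false; // delimiters count again
                i += containerEnd.length();
            } else if (!inContainer && content.startsWith(delimiter, i)) {
                tokens.add(current.toString());
                current.setLength(0);
                i += delimiter.length();
            } else {
                current.append(content.charAt(i));
                i++;
            }
        }
        if (current.length() > 0) {
            tokens.add(current.toString());
        }
        return tokens;
    }

    public static void main(String[] args) {
        // Input: a,"b,c",d\,e  with delimiter ',', escape '\' and '"' as both container characters.
        System.out.println(split("a,\"b,c\",d\\,e", ",", "\\", "\"", "\""));
    }
}
```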

maxMatch

An optional attribute used to specify the maximum number of times this expression is allowed to match the supplied content. If you do not supply this attribute then the Data Splitter will keep matching the supplied content until it reaches the end. If specified Data Splitter will stop matching the supplied content when it has matched it the specified number of times.

This attribute is used in the ‘Simple CSV example with heading’ to ensure that only the first line is treated as a heading line.

minMatch

An optional attribute used to specify the minimum number of times this expression should match the supplied content. If you do not supply this attribute then Data Splitter will not enforce that the expression matches the supplied content. If specified Data Splitter will generate an error if the expression does not match the supplied content at least as many times as specified.

Unlike maxMatch, minMatch does not control the matching process but instead controls the production of error messages generated if the parser is not seeing the expected input.

onlyMatch

Optional attribute to use this expression only for specific instances of a match of the parent expression, e.g. on the 4th, 5th and 8th matches of the parent expression specified by ‘4,5,8’. This is used when this expression should only be used to subdivide content from certain parent matches.
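The three match-control attributes can be sketched with java.util.regex. maxMatch stops the matching loop early. minMatch only adds an error when too few matches were found. onlyMatch selects 1-based parent match numbers such as ‘4,5,8’. Everything here is hypothetical and for illustration: the method names, the convention that a maxMatch of 0 means "no limit", and the error text are inventions of this sketch, not Data Splitter's messages.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model of maxMatch, minMatch and onlyMatch.
class MatchLimitsSketch {

    // Collects matches, stopping after maxMatch of them (0 = no limit in this sketch).
    static List<String> match(String content, String regex, int maxMatch, int minMatch, List<String> errors) {
        List<String> matches = new ArrayList<>();
        Matcher m = Pattern.compile(regex).matcher(content);
        while ((maxMatch == 0 || matches.size() < maxMatch) && m.find()) {
            matches.add(m.group());
        }
        // minMatch never changes what is matched; it only produces an error message.
        if (matches.size() < minMatch) {
            errors.add("expected at least " + minMatch + " matches but found " + matches.size());
        }
        return matches;
    }

    // Parses an onlyMatch value such as "4,5,8" into 1-based parent match numbers.
    static Set<Integer> onlyMatch(String spec) {
        Set<Integer> numbers = new HashSet<>();
        for (String part : spec.split(",")) {
            numbers.add(Integer.parseInt(part.trim()));
        }
        return numbers;
    }

    public static void main(String[] args) {
        List<String> errors = new ArrayList<>();
        System.out.println(match("a b c d", "[a-z]", 2, 0, errors)); // like maxMatch="2"
        match("a b", "[a-z]", 0, 3, errors);                          // like minMatch="3"
        System.out.println(errors);
        // Like onlyMatch="2": only the 2nd parent match is handed to the child expression.
        Set<Integer> only = onlyMatch("2");
        List<String> parents = match("x y z", "[a-z]", 0, 0, errors);
        for (int i = 0; i < parents.size(); i++) {
            if (only.contains(i + 1)) {
                System.out.println("child sees: " + parents.get(i));
            }
        }
    }
}
```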

The <regex> element

The <regex> element directs Data Splitter to match content using the specified regular expression pattern.

In addition to this the same match control attributes that are available on the <split> element are also present as well as attributes to alter the way the pattern works.

Attributes

The <regex> element has the following attributes:

id

Optional attribute used to debug the location of expressions causing errors, see id.

pattern

This is a required attribute used to specify a regular expression to use to match on the supplied content.

The pattern is used to match the content multiple times until the end of the content is reached while the maxMatch and onlyMatch conditions are satisfied.

dotAll

An optional attribute used to specify whether ‘.’ in the supplied pattern matches all characters including new lines. If ‘true’, ‘.’ will match all characters including new lines; if ‘false’, it will only match up to a new line. If this attribute is not specified it defaults to ‘false’ and ‘.’ will only match up to a new line.

This attribute is used in the multi-line example above.

caseInsensitive

An optional attribute used to specify whether the supplied pattern should match content in a case-insensitive way. If ‘true’, the expression will match content in a case-insensitive manner; if ‘false’, it will match the content in a case-sensitive manner. If this attribute is not specified it defaults to ‘false’ and will match the content in a case-sensitive manner.
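In java.util.regex the equivalent behaviours are the Pattern.DOTALL and Pattern.CASE_INSENSITIVE flags. The hypothetical sketch below assumes that dotAll and caseInsensitive behave like these flags, which is an assumption rather than something stated above. It shows how much "(.*?)\n\n(.*)" captures with and without DOTALL, and how case-insensitive matching changes the result.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RegexFlagsSketch {

    // Hypothetical helper: builds a Pattern from dotAll / caseInsensitive style settings.
    static Pattern compile(String pattern, boolean dotAll, boolean caseInsensitive) {
        int flags = 0;
        if (dotAll) {
            flags |= Pattern.DOTALL; // '.' also matches line terminators
        }
        if (caseInsensitive) {
            flags |= Pattern.CASE_INSENSITIVE;
        }
        return Pattern.compile(pattern, flags);
    }

    // Returns group 1 of the first match, or null if there is no match.
    static String firstGroup(Pattern p, String content) {
        Matcher m = p.matcher(content);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        String content = "A\nB\n\nC\nD\n";
        // With DOTALL group 1 spans lines ("A\nB"); without it '.' stops at each new line ("B").
        System.out.println(firstGroup(compile("(.*?)\\n\\n(.*)", true, false), content));
        System.out.println(firstGroup(compile("(.*?)\\n\\n(.*)", false, false), content));
        System.out.println(firstGroup(compile("(user)", false, true), "USER1"));
    }
}
```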

maxMatch

This is used in the same way it is on the <split> element, see maxMatch.

minMatch

This is used in the same way it is on the <split> element, see minMatch.

onlyMatch

This is used in the same way it is on the <split> element, see onlyMatch.

advance

After an expression has matched content in the buffer, the buffer start position is advanced so that it moves to the end of the entire match. This means that subsequent expressions operating on the content buffer will not see the previously matched content again. This is normally required behaviour, but in some cases some of the content from a match is still required for subsequent matches. Take the following example of name value pairs:

name1=some value 1 name2=some value 2 name3=some value 3

The first name value pair could be matched with the following expression:

<regex pattern="([^=]+)=(.+?) [^= ]+=">

The above expression would match ‘name1=some value 1 name2=’ at the start of the content, capturing ‘name1’ in match group 1 and ‘some value 1’ in match group 2.

In this example we have had to do a reluctant match to extract the value in group 2 and not include the subsequent name. Because the reluctant match requires us to specify what we are reluctantly matching up to, we have had to include an expression after it that matches the next name.

By default the parser will move the character buffer to the end of the entire match so the next expression will be presented with the following:

some value 2 name3=some value 3

Therefore name2 will have been lost from the content buffer and will not be available for matching.
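The default advance can be modelled with Python's `re` module, which supports the same reluctant quantifier `+?`. This sketch only tracks the buffer position and assumes the pattern behaves the same in both regex engines; it is not Data Splitter's implementation.

```python
import re

buffer = "name1=some value 1 name2=some value 2 name3=some value 3"
pattern = re.compile(r"([^=]+)=(.+?) [^= ]+=")

m = pattern.match(buffer)
print(m.group(0))  # name1=some value 1 name2=
print(m.group(1))  # name1
print(m.group(2))  # some value 1

# Default behaviour: the buffer advances to the end of the entire match.
remaining = buffer[m.end():]
print(remaining)   # some value 2 name3=some value 3
```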

This behaviour can be altered by telling the expression how far to advance the character buffer after matching. This is done with the advance attribute, which specifies the match group whose end position the content buffer should advance to, e.g.

<regex pattern="([^=]+)=(.+?) [^= ]+=" advance="2">

In this example the content buffer will only advance to the end of match group 2, so subsequent expressions will be presented with the following content (which begins with the space that followed group 2):

name2=some value 2 name3=some value 3

Therefore name2 will still be available in the content buffer.
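The following Python sketch models advance="2" by repeatedly applying the same pattern and moving the position only to the end of group 2. It is an illustration under the assumption that Python's regex engine behaves like Data Splitter's for this pattern. Note that group 1 picks up the leading space left behind by the previous match, and that the final pair is never matched because no further name follows it, so another expression would be needed for it.

```python
import re

buffer = "name1=some value 1 name2=some value 2 name3=some value 3"
pattern = re.compile(r"([^=]+)=(.+?) [^= ]+=")

pairs = []
pos = 0
# Model of advance="2": after each match, move only to the end of group 2.
while (m := pattern.match(buffer, pos)) is not None:
    pairs.append((m.group(1).strip(), m.group(2)))
    pos = m.end(2)

print(pairs)               # [('name1', 'some value 1'), ('name2', 'some value 2')]
print(repr(buffer[pos:]))  # ' name3=some value 3'
```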

It is likely that the advance feature will only be useful in cases where a reluctant match is performed. Reluctant matches are discouraged for performance reasons, so this feature should rarely be used. A better way to tackle the above example would be to present the content in reverse; however, this is only possible if the expression is within a group, i.e. it is not a root expression. There may also be more complex cases where reversal is not an option and a reluctant match is the only option.
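To show why reversal avoids the reluctant match, here is a plain Python sketch of the idea. It reverses the string itself and does not show how Data Splitter reverses group content. In reversed text each value precedes its name, so greedy patterns are enough. The sketch assumes names contain no spaces and values contain no ‘=’.

```python
import re

content = "name1=some value 1 name2=some value 2 name3=some value 3"
reversed_content = content[::-1]

# In reversed text each value comes before its name, so greedy patterns suffice:
# group 1 = reversed value (up to '='), group 2 = reversed name (no spaces).
pattern = re.compile(r"([^=]+)=(\S+)\s*")

pairs = []
for m in pattern.finditer(reversed_content):
    pairs.append((m.group(2)[::-1], m.group(1)[::-1]))

pairs.reverse()  # restore the original left-to-right order
print(pairs)
# [('name1', 'some value 1'), ('name2', 'some value 2'), ('name3', 'some value 3')]
```

Unlike the reluctant version, this extracts all three pairs, including the last one.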

The <all> element

The <all> element matches the entire content of the parent group and makes it available to child groups or <data> elements.

The purpose of <all> is to act as a catch all expression to deal with content that is not handled by a more specific expression, e.g. to output some other unknown, unrecognised or unexpected data.

<group>

<regex pattern="^\s*([^=]+)=([^=]+)\s*">

<data name="$1" value="$2" />

</regex>

<!-- Output unexpected data -->

<all>

<data name="unknown" value="$" />

</all>

</group>

The <all> element provides the same functionality as using .* as a pattern in a <regex> element and where dotAll is set to true, e.g. <regex pattern=".*" dotAll="true">.

However, <all> performs much faster because it does not require any pattern matching logic, so it should always be preferred.
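The following hypothetical Python model of the <group> example above tries the specific key/value regex first and otherwise falls back to a catch-all that takes the entire content. It models a single application of the group only, and the function name classify is invented for illustration. It also checks that ".*" with DOTALL consumes exactly what <all> would hand over.

```python
import re

KEY_VALUE = re.compile(r"^\s*([^=]+)=([^=]+)\s*")

def classify(content: str) -> tuple[str, str]:
    # Hypothetical model of the <group> example: try the specific <regex> first,
    # otherwise fall back to the catch-all, which takes the entire content.
    m = KEY_VALUE.match(content)
    if m:
        return (m.group(1), m.group(2))
    return ("unknown", content)

# A catch-all regex ".*" with DOTALL consumes exactly what <all> would provide.
sample = "no key value\npair here"
assert re.match(r".*", sample, re.DOTALL).group(0) == sample

print(classify("user=bob"))  # ('user', 'bob')
print(classify(sample))      # ('unknown', 'no key value\npair here')
```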

Attributes

The <all> element has the following attributes:

id

Optional attribute used to debug the location of expressions causing errors, see id.

5.5.3 - Variables

A variable is added to Data Splitter using the <var> element. A variable is used to store matches from a parent expression for use in a reference elsewhere in the configuration, see variable reference.

The most recent matches are stored for use in local references, i.e. references that are in the same match scope as the variable. Multiple matches are stored for use in references that are in a separate match scope. The concept of different variable scopes is described in scopes.

The <var> element

The <var> element is used to tell Data Splitter to store matches from a parent expression for use in a reference.

Attributes

The <var> element has the following attributes:

id

Mandatory attribute that uniquely identifies the variable within the configuration (see id). It is also the means by which the variable is referenced, e.g. $VAR_ID$.

5.5.4 - Output

As with all other aspects of Data Splitter, output XML is determined by adding certain elements to the Data Splitter configuration.

The <data> element

Output is created by Data Splitter using one or more <data> elements in the configuration.

The first <data> element that is encountered within a matched expression will result in parent <record> elements being produced in the output.

Attributes

The <data> element has the following attributes:

id

Optional attribute used to debug the location of expressions causing errors, see id.

name

The name to use for the output <data> element. Like value, it can be specified using match references.

value

The value to use for the output <data> element. Like name, it can be specified using match references.
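The following Python sketch shows how a name or value template might be resolved against a regex match. The helper resolve is hypothetical and only handles two reference forms: ‘$’ for the entire match and ‘$N’ for match group N. Variable references and the other forms described in the match references section are not covered.

```python
import re

def resolve(template: str, m: re.Match) -> str:
    # Hypothetical helper covering only two reference forms:
    # '$' -> the entire match, '$N' -> match group N.
    def replace(ref: re.Match) -> str:
        group = int(ref.group(1)) if ref.group(1) else 0
        return m.group(group) or ""
    return re.sub(r"\$(\d*)", replace, template)

m = re.match(r"ip=([^ ]+) user=([^ ]+)", "ip=1.1.1.1 user=user1")
print(resolve("$1", m))  # 1.1.1.1
print(resolve("$2", m))  # user1
print(resolve("$", m))   # ip=1.1.1.1 user=user1
```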

Single <data> element example

The simplest example that can be provided uses a single <data> element within a <split> expression.

Given the following input:

This is line 1

This is line 2

This is line 3

… and the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter

xmlns="data-splitter:3"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"

version="3.0">

<split delimiter="\n" >

<data value="$1"/>

</split>

</dataSplitter>

… you would get the following output:

<?xml version="1.0" encoding="UTF-8"?>

<records

xmlns="records:2"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="records:2 file://records-v2.0.xsd"

version="3.0">

<record>

<data value="This is line 1" />

</record>

<record>

<data value="This is line 2" />

</record>

<record>

<data value="This is line 3" />

</record>

</records>
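The following rough Python model of the configuration above uses xml.etree.ElementTree. It splits the input on new lines and turns each chunk, without its delimiter (i.e. $1), into a <record> with a single <data> element. Namespaces and the version attribute are omitted. Skipping an empty trailing chunk is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET

def split_to_records(text: str, delimiter: str = "\n") -> ET.Element:
    # Rough model of <split delimiter="\n"><data value="$1"/></split>:
    # each chunk (without its delimiter, i.e. $1) becomes one <record>.
    records = ET.Element("records")
    for chunk in text.split(delimiter):
        if not chunk:  # this sketch skips the empty chunk after a final new line
            continue
        record = ET.SubElement(records, "record")
        ET.SubElement(record, "data", value=chunk)
    return records

records = split_to_records("This is line 1\nThis is line 2\nThis is line 3\n")
print(ET.tostring(records, encoding="unicode"))
```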

Multiple <data> element example

You can also output multiple <data> elements for the same <record> by adding multiple <data> elements within the same expression.

Given the following input:

ip=1.1.1.1 user=user1

ip=2.2.2.2 user=user2

ip=3.3.3.3 user=user3

… and the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter

xmlns="data-splitter:3"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"

version="3.0">

<regex pattern="ip=([^ ]+) user=(\S+)\s*">

<data name="ip" value="$1"/>

<data name="user" value="$2"/>

</regex>

</dataSplitter>

… you would get the following output:

<?xml version="1.0" encoding="UTF-8"?>

<records

xmlns="records:2"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="records:2 file://records-v2.0.xsd"

version="3.0">

<record>

<data name="ip" value="1.1.1.1" />

<data name="user" value="user1" />

</record>

<record>

<data name="ip" value="2.2.2.2" />

<data name="user" value="user2" />

</record>

<record>

<data name="ip" value="3.3.3.3" />

<data name="user" value="user3" />

</record>

</records>
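The following Python sketch models a root <regex> that is applied repeatedly at the start of the remaining content. It assumes Python's regex engine matches like Data Splitter's for these patterns. A negated class such as [^ ]+ also matches new lines, so the user group uses \S+, which stops at the end of each line. The comparison at the end shows what [^ ]+ would capture instead.

```python
import re
import xml.etree.ElementTree as ET

# \S+ for the user value (rather than [^ ]+) stops at the new line.
PATTERN = re.compile(r"ip=([^ ]+) user=(\S+)\s*")

def regex_to_records(text: str) -> ET.Element:
    records = ET.Element("records")
    pos = 0
    # Apply the root <regex> repeatedly at the start of the remaining content.
    while pos < len(text):
        m = PATTERN.match(text, pos)
        if m is None:
            break  # unmatched content is simply ignored in this sketch
        record = ET.SubElement(records, "record")
        ET.SubElement(record, "data", name="ip", value=m.group(1))
        ET.SubElement(record, "data", name="user", value=m.group(2))
        pos = m.end()  # advance past the whole match, trailing whitespace included
    return records

text = "ip=1.1.1.1 user=user1\nip=2.2.2.2 user=user2\nip=3.3.3.3 user=user3\n"
records = regex_to_records(text)

# For comparison, [^ ]+ also matches '\n', so the user group swallows the next line:
greedy = re.match(r"ip=([^ ]+) user=([^ ]+)\s*", text)
print(repr(greedy.group(2)))  # 'user1\nip=2.2.2.2'
```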

Multi level <data> elements

As long as all data elements occur within the same parent/ancestor expression, all data elements will be output within the same record.

Given the following input:

ip=1.1.1.1 user=user1

ip=2.2.2.2 user=user2

ip=3.3.3.3 user=user3

… and the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter

xmlns="data-splitter:3"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"

version="3.0">

<split delimiter="\n" >

<data name="line" value="$1"/>

<group value="$1">

<regex pattern="ip=([^ ]+) user=([^ ]+)">

<data name="ip" value="$1"/>

<data name="user" value="$2"/>

</regex>

</group>

</split>

</dataSplitter>

… you would get the following output:

<?xml version="1.0" encoding="UTF-8"?>

<records

xmlns="records:2"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="records:2 file://records-v2.0.xsd"

version="3.0">

<record>

<data name="line" value="ip=1.1.1.1 user=user1" />

<data name="ip" value="1.1.1.1" />

<data name="user" value="user1" />

</record>

<record>

<data name="line" value="ip=2.2.2.2 user=user2" />

<data name="ip" value="2.2.2.2" />

<data name="user" value="user2" />

</record>

<record>

<data name="line" value="ip=3.3.3.3 user=user3" />

<data name="ip" value="3.3.3.3" />

<data name="user" value="user3" />

</record>

</records>
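The following Python sketch models the two levels of the configuration above. The outer split produces one record per line with a line <data> element. The inner regex then runs against that single line, which is why [^ ]+ is safe here. It is a simplified model that omits namespaces, not Data Splitter's implementation.

```python
import re
import xml.etree.ElementTree as ET

LINE_PATTERN = re.compile(r"ip=([^ ]+) user=([^ ]+)")

def multi_level(text: str) -> ET.Element:
    records = ET.Element("records")
    for line in text.split("\n"):        # outer <split delimiter="\n">
        if not line:
            continue
        record = ET.SubElement(records, "record")
        ET.SubElement(record, "data", name="line", value=line)
        m = LINE_PATTERN.match(line)     # inner <group value="$1"> and <regex>
        if m:
            ET.SubElement(record, "data", name="ip", value=m.group(1))
            ET.SubElement(record, "data", name="user", value=m.group(2))
    return records

records = multi_level("ip=1.1.1.1 user=user1\nip=2.2.2.2 user=user2\nip=3.3.3.3 user=user3\n")
```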

Nesting <data> elements

Rather than having all <data> elements appear as direct children of <record>, it is possible to nest them within other <data> elements, either as direct children or within child groups.

Direct children

Given the following input:

ip=1.1.1.1 user=user1

ip=2.2.2.2 user=user2

ip=3.3.3.3 user=user3

… and the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter

xmlns="data-splitter:3"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd"

version="3.0">

<regex pattern="ip=([^ ]+) user=(\S+)\s*">

<data name="line" value="$">

<data name="ip" value="$1"/>

<data name="user" value="$2"/>

</data>

</regex>

</dataSplitter>

… you would get the following output:

<?xml version="1.0" encoding="UTF-8"?>

<records

xmlns="records:2"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="records:2 file://records-v2.0.xsd"

version="3.0">

<record>

<data name="line" value="ip=1.1.1.1 user=user1">

<data name="ip" value="1.1.1.1" />

<data name="user" value="user1" />

</data>

</record>

<record>

<data name="line" value="ip=2.2.2.2 user=user2">

<data name="ip" value="2.2.2.2" />

<data name="user" value="user2" />

</data>

</record>

<record>

<data name="line" value="ip=3.3.3.3 user=user3">

<data name="ip" value="3.3.3.3" />

<data name="user" value="user3" />

</data>

</record>

</records>
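The following Python sketch models the nesting above. Each match produces a parent line <data> element holding the whole match ($), with the ip and user elements nested inside it rather than directly under <record>. Stripping the trailing whitespace from the whole match is an assumption made so the result mirrors the output shown above.

```python
import re
import xml.etree.ElementTree as ET

PATTERN = re.compile(r"ip=([^ ]+) user=(\S+)\s*")

def nested_records(text: str) -> ET.Element:
    records = ET.Element("records")
    pos = 0
    while (m := PATTERN.match(text, pos)) is not None:
        record = ET.SubElement(records, "record")
        # Parent <data> holds the whole match ($); trailing whitespace is stripped
        # here to mirror the output shown above.
        line = ET.SubElement(record, "data", name="line", value=m.group(0).rstrip())
        # Child <data> elements are nested inside the parent rather than the <record>.
        ET.SubElement(line, "data", name="ip", value=m.group(1))
        ET.SubElement(line, "data", name="user", value=m.group(2))
        pos = m.end()
    return records

records = nested_records("ip=1.1.1.1 user=user1\nip=2.2.2.2 user=user2\nip=3.3.3.3 user=user3\n")
```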

Within child groups

Given the following input:

ip=1.1.1.1 user=user1

ip=2.2.2.2 user=user2

ip=3.3.3.3 user=user3

… and the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter

xmlns="data-splitter:3"

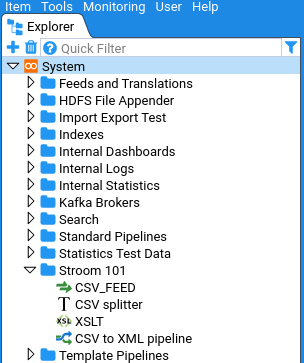

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"