
Installation

- 1: Apache Httpd/Mod_JK configuration for Stroom

- 2: Database Installation

- 3: Installation

- 4: Installation of Stroom Application

- 5: Installation of Stroom Proxy

- 6: NFS Installation and Configuration

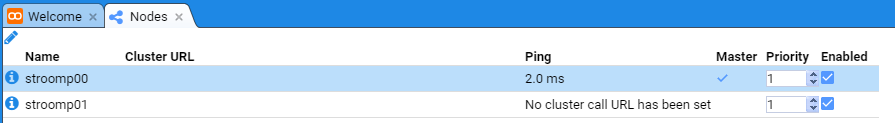

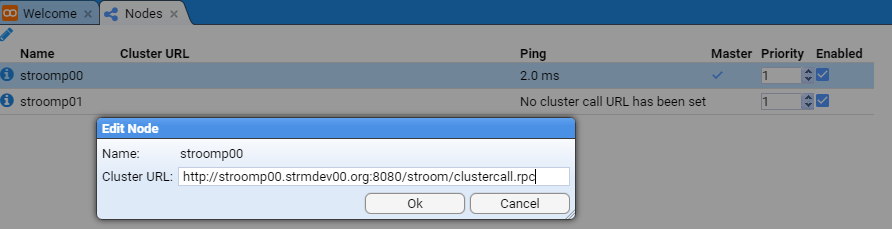

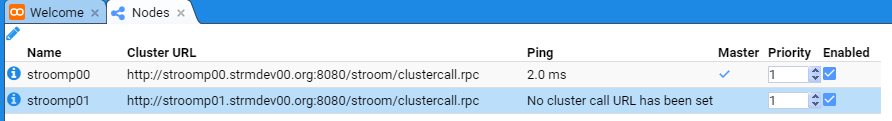

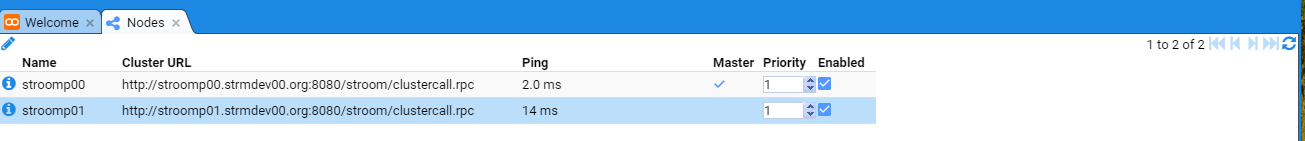

- 7: Node Cluster URL Setup

- 8: Processing User setup

- 9: SSL Certificate Generation

- 10: Testing Stroom Installation

- 11: Volume Maintenance

1 - Apache Httpd/Mod_JK configuration for Stroom

Assumptions

The following assumptions are used in this document.

- the user has reasonable RHEL/Centos System administration skills

- installations are on Centos 7.3 minimal systems (fully patched)

- the security of the HTTPD deployment should be reviewed for a production environment.

Installation of Apache httpd and Mod_JK Software

To deploy Stroom with Apache’s httpd web service as the HTTPS front end and Apache’s mod_jk as the interface between httpd and the Stroom Tomcat applications, we need the following software:

- apr

- apr-util

- apr-devel

- gcc

- httpd

- httpd-devel

- mod_ssl

- epel-release

- tomcat-native

- apache’s mod_jk tomcat connector plugin

Most of the required software is available as packages from the standard repositories, so we can simply execute

sudo yum -y install apr apr-util apr-devel gcc httpd httpd-devel mod_ssl epel-release

sudo yum -y install tomcat-native

The tomcat-native package is installed separately because it comes from the EPEL repository, so the epel-release package must be installed first.

For the Apache mod_jk Tomcat connector we need to acquire a recent release and install it. The following commands achieve this for the 1.2.42 release.

sudo bash

cd /tmp

V=1.2.42

wget https://www.apache.org/dist/tomcat/tomcat-connectors/jk/tomcat-connectors-${V}-src.tar.gz

tar xf tomcat-connectors-${V}-src.tar.gz

cd tomcat-connectors-*-src/native

./configure --with-apxs=/bin/apxs

make && make install

cd /tmp

rm -rf tomcat-connectors-*-src

Although you could remove the gcc compiler at this point, we leave it installed as one should continue to upgrade the Tomcat Connectors to later releases.
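Before moving on, it can be worth confirming that the build actually installed the module. The following is a minimal sketch, not part of the original procedure: the function name check_mod_jk is hypothetical, and the default directory assumes the standard Centos 7 layout in which modules/mod_jk.so is resolved relative to /etc/httpd.

```shell
# Hypothetical helper: report whether mod_jk.so exists in the httpd modules directory.
# $1 is the modules directory (assumed default: /etc/httpd/modules).
check_mod_jk() {
    local modules_dir="${1:-/etc/httpd/modules}"
    if [ -f "${modules_dir}/mod_jk.so" ]; then
        echo "mod_jk.so found in ${modules_dir}"
    else
        echo "mod_jk.so missing from ${modules_dir}" >&2
        return 1
    fi
}
```

Running check_mod_jk with no argument checks the default location; a non-zero return suggests the make install step should be reviewed.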

Configure Apache httpd

We need to configure Apache as the root user.

If the Apache httpd service is ‘fronting’ a Stroom user interface, we should create an index file (/var/www/html/index.html) on all nodes so browsing to the root of the node will present the Stroom UI. This is not needed if you are deploying a Forwarding or Standalone Stroom proxy.

Forwarding file for Stroom User Interface deployments

F=/var/www/html/index.html

printf '<html>\n' > ${F}

printf '<head>\n' >> ${F}

printf ' <meta http-equiv="Refresh" content="0; URL=stroom"/>\n' >> ${F}

printf '</head>\n' >> ${F}

printf '</html>\n' >> ${F}

chmod 644 ${F}

Remember, deploy this file on all nodes running the Stroom Application.
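As an alternative to the sequence of printf commands, the same file can be written in one step with a here-document. This is only a sketch of an equivalent approach: the function name write_stroom_index is hypothetical, and the target path defaults to the one used above.

```shell
# Hypothetical helper: write the Stroom redirect page to the path given as $1
# (defaults to the location used in this document).
write_stroom_index() {
    local target="${1:-/var/www/html/index.html}"
    # The quoted 'EOF' delimiter stops the shell expanding anything in the body.
    cat > "${target}" <<'EOF'
<html>
<head>
 <meta http-equiv="Refresh" content="0; URL=stroom"/>
</head>
</html>
EOF
    chmod 644 "${target}"
}
```

Calling write_stroom_index as root with no argument should produce the same content as the printf commands.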

Httpd.conf Configuration

We modify /etc/httpd/conf/httpd.conf on all nodes, but back up the file first with

cp /etc/httpd/conf/httpd.conf /etc/httpd/conf/httpd.conf.ORIG
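If these steps are ever re-run, a plain cp would overwrite the pristine .ORIG copy with an already modified file. The sketch below guards against that; the function name backup_once is hypothetical and is not part of the original procedure.

```shell
# Hypothetical helper: copy a file to <file>.ORIG only if that backup does not yet exist,
# so the first (pristine) copy is never overwritten by a later run.
backup_once() {
    local src="$1"
    if [ -e "${src}.ORIG" ]; then
        echo "backup ${src}.ORIG already exists, leaving it untouched"
    else
        cp -p "${src}" "${src}.ORIG"
    fi
}
```

For example, backup_once /etc/httpd/conf/httpd.conf could be used in place of the cp command above.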

Irrespective of the Stroom scenario being deployed, whether Multi Node Stroom (Application and Proxy), single Standalone Stroom Proxy or single Forwarding Stroom Proxy, the configuration of the /etc/httpd/conf/httpd.conf file is the same.

We start by adding the following directives just before the ServerRoot directive. They are designed to make the httpd service more secure.

# Stroom Change: Start - Apply generic security directives

ServerTokens Prod

ServerSignature Off

FileETag None

RewriteEngine On

RewriteCond %{THE_REQUEST} !HTTP/1.1$

RewriteRule .* - [F]

Header set X-XSS-Protection "1; mode=block"

# Stroom Change: End

That is,

...

# Do not add a slash at the end of the directory path. If you point

# ServerRoot at a non-local disk, be sure to specify a local disk on the

# Mutex directive, if file-based mutexes are used. If you wish to share the

# same ServerRoot for multiple httpd daemons, you will need to change at

# least PidFile.

#

ServerRoot "/etc/httpd"

#

# Listen: Allows you to bind Apache to specific IP addresses and/or

...

becomes

...

# Do not add a slash at the end of the directory path. If you point

# ServerRoot at a non-local disk, be sure to specify a local disk on the

# Mutex directive, if file-based mutexes are used. If you wish to share the

# same ServerRoot for multiple httpd daemons, you will need to change at

# least PidFile.

#

# Stroom Change: Start - Apply generic security directives

ServerTokens Prod

ServerSignature Off

FileETag None

RewriteEngine On

RewriteCond %{THE_REQUEST} !HTTP/1.1$

RewriteRule .* - [F]

Header set X-XSS-Protection "1; mode=block"

# Stroom Change: End

ServerRoot "/etc/httpd"

#

# Listen: Allows you to bind Apache to specific IP addresses and/or

...

We now block access to the /var/www directory by commenting out

<Directory "/var/www">

AllowOverride None

# Allow open access:

Require all granted

</Directory>

that is

...

#

# Relax access to content within /var/www.

#

<Directory "/var/www">

AllowOverride None

# Allow open access:

Require all granted

</Directory>

# Further relax access to the default document root:

...

becomes

...

#

# Relax access to content within /var/www.

#

# Stroom Change: Start - Block access to /var/www

# <Directory "/var/www">

# AllowOverride None

# # Allow open access:

# Require all granted

# </Directory>

# Stroom Change: End

# Further relax access to the default document root:

...

Then, within the /var/www/html directory context, turn off Indexes and FollowSymLinks by commenting out the line

Options Indexes FollowSymLinks

That is

...

# The Options directive is both complicated and important. Please see

# http://httpd.apache.org/docs/2.4/mod/core.html#options

# for more information.

#

Options Indexes FollowSymLinks

#

# AllowOverride controls what directives may be placed in .htaccess files.

# It can be "All", "None", or any combination of the keywords:

...

becomes

...

# The Options directive is both complicated and important. Please see

# http://httpd.apache.org/docs/2.4/mod/core.html#options

# for more information.

#

# Stroom Change: Start - turn off indexes and FollowSymLinks

# Options Indexes FollowSymLinks

# Stroom Change: End

#

# AllowOverride controls what directives may be placed in .htaccess files.

# It can be "All", "None", or any combination of the keywords:

...

Then finally we add two new log formats and configure the access log to use the new format. This is done within the <IfModule logio_module> by adding the two new LogFormat directives

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%u\" \"%r\" %s/%>s %D %I/%O/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxUser

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%{SSL_CLIENT_S_DN}x\" \"%r\" %s/%>s %D %I/%O/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxSSLUser

and replacing the CustomLog directive

CustomLog "logs/access_log" combined

with

CustomLog logs/access_log blackboxSSLUser

That is

...

LogFormat "%h %l %u %t \"%r\" %>s %b" common

<IfModule logio_module>

# You need to enable mod_logio.c to use %I and %O

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %I %O" combinedio

</IfModule>

#

# The location and format of the access logfile (Common Logfile Format).

# If you do not define any access logfiles within a <VirtualHost>

# container, they will be logged here. Contrariwise, if you *do*

# define per-<VirtualHost> access logfiles, transactions will be

# logged therein and *not* in this file.

#

#CustomLog "logs/access_log" common

#

# If you prefer a logfile with access, agent, and referer information

# (Combined Logfile Format) you can use the following directive.

#

CustomLog "logs/access_log" combined

</IfModule>

...

becomes

...

LogFormat "%h %l %u %t \"%r\" %>s %b" common

<IfModule logio_module>

# You need to enable mod_logio.c to use %I and %O

LogFormat "%h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\" %I %O" combinedio

# Stroom Change: Start - Add new logformats

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%u\" \"%r\" %s/%>s %D %I/%O/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxUser

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%{SSL_CLIENT_S_DN}x\" \"%r\" %s/%>s %D %I/%O/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxSSLUser

# Stroom Change: End

</IfModule>

# Stroom Change: Start - Add new logformats without the additional byte values

<IfModule !logio_module>

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%u\" \"%r\" %s/%>s %D 0/0/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxUser

LogFormat "%a/%{REMOTE_PORT}e %X %t %l \"%{SSL_CLIENT_S_DN}x\" \"%r\" %s/%>s %D 0/0/%B \"%{Referer}i\" \"%{User-Agent}i\" %V/%p" blackboxSSLUser

</IfModule>

# Stroom Change: End

#

# The location and format of the access logfile (Common Logfile Format).

# If you do not define any access logfiles within a <VirtualHost>

# container, they will be logged here. Contrariwise, if you *do*

# define per-<VirtualHost> access logfiles, transactions will be

# logged therein and *not* in this file.

#

#CustomLog "logs/access_log" common

#

# If you prefer a logfile with access, agent, and referer information

# (Combined Logfile Format) you can use the following directive.

#

# Stroom Change: Start - Make the access log use a new format

# CustomLog "logs/access_log" combined

CustomLog logs/access_log blackboxSSLUser

# Stroom Change: End

</IfModule>

...

Remember, deploy this file on all nodes.
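To illustrate how the fields of the new log formats line up, the sketch below counts requests per final HTTP status (the %>s value) in a log written with the blackboxUser or blackboxSSLUser format. The function name blackbox_status_counts is hypothetical. It splits each line on double quotes, which assumes that none of the quoted values (user, request, referer, user agent) contains an escaped double quote.

```shell
# Hypothetical helper: read a blackboxUser/blackboxSSLUser log on stdin and
# print "<final status> <count>" lines, sorted by status.
blackbox_status_counts() {
    awk -F'"' '
        {
            # With a double quote as separator, field 5 holds: %s/%>s %D %I/%O/%B
            split($5, part, " ")
            # part[1] is the status pair %s/%>s, the second half is the final status
            split(part[1], status, "/")
            count[status[2]]++
        }
        END {
            for (s in count) print s, count[s]
        }
    ' | sort
}
```

A typical invocation, once the service is running, would be blackbox_status_counts < /var/log/httpd/access_log.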

Configuration of ssl.conf

We modify /etc/httpd/conf.d/ssl.conf on all nodes, backing up first,

cp /etc/httpd/conf.d/ssl.conf /etc/httpd/conf.d/ssl.conf.ORIG

The configuration of /etc/httpd/conf.d/ssl.conf does change depending on the Stroom scenario deployed. In the following, we indicate differences with tagged sub-headings. If the configuration applies irrespective of scenario, the tag is All scenarios; otherwise the tag indicates the type of Stroom deployment.

ssl.conf: HTTP to HTTPS Redirection - All scenarios

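Before the <VirtualHost _default_:443> context, we add HTTP to HTTPS redirection with the following directives (the server name in this example is stroomp00.strmdev00.org)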

<VirtualHost *:80>

ServerName stroomp00.strmdev00.org

Redirect permanent "/" "https://stroomp00.strmdev00.org/"

</VirtualHost>

That is, we change

...

## SSL Virtual Host Context

##

<VirtualHost _default_:443>

...

to

...

## SSL Virtual Host Context

##

# Stroom Change: Start - Add http redirection to https

<VirtualHost *:80>

ServerName stroomp00.strmdev00.org

Redirect permanent "/" "https://stroomp00.strmdev00.org/"

</VirtualHost>

# Stroom Change: End

<VirtualHost _default_:443>

ssl.conf: VirtualHost directives - Multi Node ‘Application and Proxy’ deployment

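Within the <VirtualHost _default_:443> context, we set the following directives. In this case the ServerName is stroomp.strmdev00.org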

ServerName stroomp.strmdev00.org

JkMount /stroom* loadbalancer

JkMount /stroom/remoting/cluster* local

JkMount /stroom/datafeed* loadbalancer_proxy

JkMount /stroom/remoting* loadbalancer_proxy

JkMount /stroom/datafeeddirect* loadbalancer

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

That is, we change

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

to

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Stroom Change: Start - Set servername and mod_jk connectivity

ServerName stroomp.strmdev00.org

JkMount /stroom* loadbalancer

JkMount /stroom/remoting/cluster* local

JkMount /stroom/datafeed* loadbalancer_proxy

JkMount /stroom/remoting* loadbalancer_proxy

JkMount /stroom/datafeeddirect* loadbalancer

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

# Stroom Change: End

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

ssl.conf: VirtualHost directives - Standalone or Forwarding Proxy deployment

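Within the <VirtualHost _default_:443> context, we set the following directives. In this case the ServerName is stroomfp0.strmdev00.org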

ServerName stroomfp0.strmdev00.org

JkMount /stroom/datafeed* local_proxy

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

That is, we change

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

to

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Stroom Change: Start - Set servername and mod_jk connectivity

ServerName stroomfp0.strmdev00.org

JkMount /stroom/datafeed* local_proxy

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

# Stroom Change: End

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

ssl.conf: VirtualHost directives - Single Node ‘Application and Proxy’ deployment

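Within the <VirtualHost _default_:443> context, we set the following directives. In this case the ServerName is stroomp00.strmdev00.org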

ServerName stroomp00.strmdev00.org

JkMount /stroom* local

JkMount /stroom/remoting/cluster* local

JkMount /stroom/datafeed* local_proxy

JkMount /stroom/remoting* local_proxy

JkMount /stroom/datafeeddirect* local

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

That is, we change

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

to

...

<VirtualHost _default_:443>

# General setup for the virtual host, inherited from global configuration

#DocumentRoot "/var/www/html"

#ServerName www.example.com:443

# Stroom Change: Start - Set servername and mod_jk connectivity

ServerName stroomp00.strmdev00.org

JkMount /stroom* local

JkMount /stroom/remoting/cluster* local

JkMount /stroom/datafeed* local_proxy

JkMount /stroom/remoting* local_proxy

JkMount /stroom/datafeeddirect* local

JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories

# Stroom Change: End

# Use separate log files for the SSL virtual host; note that LogLevel

# is not inherited from httpd.conf.

...

ssl.conf: Certificate file changes - All scenarios

We replace the standard certificate files with the generated certificates. The example below uses the multi node scenario, in which the certificate and key file names are stroomp.crt and stroomp.key. For other scenarios, use the appropriate generated file names. We replace

SSLCertificateFile /etc/pki/tls/certs/localhost.crt

with

SSLCertificateFile /home/stroomuser/stroom-jks/public/stroomp.crt

and

SSLCertificateKeyFile /etc/pki/tls/private/localhost.key

with

SSLCertificateKeyFile /home/stroomuser/stroom-jks/private/stroomp.key

That is, change

...

# pass phrase. Note that a kill -HUP will prompt again. A new

# certificate can be generated using the genkey(1) command.

SSLCertificateFile /etc/pki/tls/certs/localhost.crt

# Server Private Key:

# If the key is not combined with the certificate, use this

# directive to point at the key file. Keep in mind that if

# you've both a RSA and a DSA private key you can configure

# both in parallel (to also allow the use of DSA ciphers, etc.)

SSLCertificateKeyFile /etc/pki/tls/private/localhost.key

# Server Certificate Chain:

# Point SSLCertificateChainFile at a file containing the

...

to

...

# pass phrase. Note that a kill -HUP will prompt again. A new

# certificate can be generated using the genkey(1) command.

# Stroom Change: Start - Replace with Stroom server certificate

# SSLCertificateFile /etc/pki/tls/certs/localhost.crt

SSLCertificateFile /home/stroomuser/stroom-jks/public/stroomp.crt

# Stroom Change: End

# Server Private Key:

# If the key is not combined with the certificate, use this

# directive to point at the key file. Keep in mind that if

# you've both a RSA and a DSA private key you can configure

# both in parallel (to also allow the use of DSA ciphers, etc.)

# Stroom Change: Start - Replace with Stroom server private key file

# SSLCertificateKeyFile /etc/pki/tls/private/localhost.key

SSLCertificateKeyFile /home/stroomuser/stroom-jks/private/stroomp.key

# Stroom Change: End

# Server Certificate Chain:

# Point SSLCertificateChainFile at a file containing the

...

ssl.conf: Certificate Bundle/NO-CA Verification - All scenarios

If you have signed your Stroom server certificate with a Certificate Authority, then change

SSLCACertificateFile /etc/pki/tls/certs/ca-bundle.crt

to be your own certificate bundle which you should probably store as ~stroomuser/stroom-jks/public/stroomp-ca-bundle.crt.

Now if you are using a self signed certificate, you will need to set the Client Authentication to have a value of

SSLVerifyClient optional_no_ca

noting that this may change if you actually use a CA. That is, changing

...

# Client Authentication (Type):

# Client certificate verification type and depth. Types are

# none, optional, require and optional_no_ca. Depth is a

# number which specifies how deeply to verify the certificate

# issuer chain before deciding the certificate is not valid.

#SSLVerifyClient require

#SSLVerifyDepth 10

# Access Control:

# With SSLRequire you can do per-directory access control based

...

to

...

# Client Authentication (Type):

# Client certificate verification type and depth. Types are

# none, optional, require and optional_no_ca. Depth is a

# number which specifies how deeply to verify the certificate

# issuer chain before deciding the certificate is not valid.

#SSLVerifyClient require

#SSLVerifyDepth 10

# Stroom Change: Start - Set optional_no_ca (given we have a self signed certificate)

SSLVerifyClient optional_no_ca

# Stroom Change: End

# Access Control:

# With SSLRequire you can do per-directory access control based

...

ssl.conf: Servlet Protection - Single or Multi Node scenarios (not for Standalone/Forwarding Proxy)

We now need to secure certain Stroom Application servlets, to ensure they are only accessed under appropriate control.

This set of servlets will be accessible by all nodes in the subnet 192.168.2 (as well as localhost). We achieve this by adding after the example Location directives

<Location ~ "stroom/(status|echo|sessionList|debug)" >

Require all denied

Require ip 127.0.0.1 192.168.2

</Location>

We further restrict the clustercall and export servlets to just localhost and the Stroom processing node(s). If you have multiple Stroom processing nodes, specify each node or, preferably, the subnet they are on. In our multi node case this is 192.168.2.

<Location ~ "stroom/export/|stroom/remoting/clustercall.rpc" >

Require all denied

Require ip 127.0.0.1 192.168.2

</Location>

That is, the following

...

# and %{TIME_WDAY} >= 1 and %{TIME_WDAY} <= 5 \

# and %{TIME_HOUR} >= 8 and %{TIME_HOUR} <= 20 ) \

# or %{REMOTE_ADDR} =~ m/^192\.76\.162\.[0-9]+$/

#</Location>

# SSL Engine Options:

# Set various options for the SSL engine.

# o FakeBasicAuth:

...

changes to

...

# and %{TIME_WDAY} >= 1 and %{TIME_WDAY} <= 5 \

# and %{TIME_HOUR} >= 8 and %{TIME_HOUR} <= 20 ) \

# or %{REMOTE_ADDR} =~ m/^192\.76\.162\.[0-9]+$/

#</Location>

# Stroom Change: Start - Lock access to certain servlets

<Location ~ "stroom/(status|echo|sessionList|debug)" >

Require all denied

Require ip 127.0.0.1 192.168.2

</Location>

# Lock these Servlets more securely - to just localhost and processing node(s)

<Location ~ "stroom/export/|stroom/remoting/clustercall.rpc" >

Require all denied

Require ip 127.0.0.1 192.168.2

</Location>

# Stroom Change: End

# SSL Engine Options:

# Set various options for the SSL engine.

# o FakeBasicAuth:

...
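The two Location patterns are unanchored regular expressions, so they match anywhere in the request path. The sketch below approximates that matching with grep -E to show which paths would fall under the restriction. The function name is_restricted_path is hypothetical, and grep's extended regular expressions are only an approximation of Apache's own regex engine, although these simple patterns use no engine-specific features.

```shell
# Hypothetical helper: succeed when the URL path in $1 matches either of the
# restricted Location patterns used in ssl.conf.
is_restricted_path() {
    printf '%s\n' "$1" |
        grep -Eq 'stroom/(status|echo|sessionList|debug)|stroom/export/|stroom/remoting/clustercall.rpc'
}
```

For example, is_restricted_path /stroom/status succeeds, while is_restricted_path /stroom/datafeed does not. Because the patterns are unanchored, a path such as /stroom/statusfoo is also restricted, and /stroom/export without the trailing slash is not.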

ssl.conf: Log formats - All scenarios

Finally, as we make use of the Black Box Apache log format, we replace the standard format

CustomLog logs/ssl_request_log \

"%t %h %{SSL_PROTOCOL}x %{SSL_CIPHER}x \"%r\" %b"

with

CustomLog logs/ssl_request_log blackboxSSLUser

That is, change

...

# Per-Server Logging:

# The home of a custom SSL log file. Use this when you want a

# compact non-error SSL logfile on a virtual host basis.

CustomLog logs/ssl_request_log \

"%t %h %{SSL_PROTOCOL}x %{SSL_CIPHER}x \"%r\" %b"

</VirtualHost>

to

...

# Per-Server Logging:

# The home of a custom SSL log file. Use this when you want a

# compact non-error SSL logfile on a virtual host basis.

# Stroom Change: Start - Change ssl_request log to use our BlackBox format

# CustomLog logs/ssl_request_log \

# "%t %h %{SSL_PROTOCOL}x %{SSL_CIPHER}x \"%r\" %b"

CustomLog logs/ssl_request_log blackboxSSLUser

# Stroom Change: End

</VirtualHost>

Remember, in the case of Multi node stroom Application servers, deploy this file on all servers.

Apache Mod_JK configuration

Apache Mod_JK has two configuration files

- /etc/httpd/conf.d/mod_jk.conf - for the http server configuration

- /etc/httpd/conf/workers.properties - to configure the Tomcat workers

In multi node scenarios, /etc/httpd/conf.d/mod_jk.conf is the same on all servers, but the /etc/httpd/conf/workers.properties file is different.

The contents of these two configuration files differ depending on the Stroom deployment. The following sections cover each deployment scenario.

Mod_JK Multi Node Application and Proxy Deployment

For a Stroom Multi node Application and Proxy server,

- we configure /etc/httpd/conf.d/mod_jk.conf as per

F=/etc/httpd/conf.d/mod_jk.conf

printf 'LoadModule jk_module modules/mod_jk.so\n' > ${F}

printf 'JkWorkersFile conf/workers.properties\n' >> ${F}

printf 'JkLogFile logs/mod_jk.log\n' >> ${F}

printf 'JkLogLevel info\n' >> ${F}

printf 'JkLogStampFormat "[%%a %%b %%d %%H:%%M:%%S %%Y]"\n' >> ${F}

printf 'JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories\n' >> ${F}

printf 'JkRequestLogFormat "%%w %%V %%T"\n' >> ${F}

printf 'JkMount /stroom* loadbalancer\n' >> ${F}

printf 'JkMount /stroom/remoting/cluster* local\n' >> ${F}

printf 'JkMount /stroom/datafeed* loadbalancer_proxy\n' >> ${F}

printf 'JkMount /stroom/remoting* loadbalancer_proxy\n' >> ${F}

printf 'JkMount /stroom/datafeeddirect* loadbalancer\n' >> ${F}

printf '# Note: Replaced JkShmFile logs/jk.shm due to SELinux issues. Refer to\n' >> ${F}

printf '# https://bugzilla.redhat.com/bugzilla/show_bug.cgi?id=225452\n' >> ${F}

printf '# The following makes use of the existing /run/httpd directory\n' >> ${F}

printf 'JkShmFile run/jk.shm\n' >> ${F}

printf '<Location /jkstatus/>\n' >> ${F}

printf ' JkMount status\n' >> ${F}

printf ' Order deny,allow\n' >> ${F}

printf ' Deny from all\n' >> ${F}

printf ' Allow from 127.0.0.1\n' >> ${F}

printf '</Location>\n' >> ${F}

chmod 640 ${F}

- we configure /etc/httpd/conf/workers.properties as per

Since we are deploying for a cluster with load balancing, we need a workers.properties file per node. Executing the following will result in two files (workers.properties.stroomp00 and workers.properties.stroomp01) which should be deployed to their respective servers.

cd /tmp

# Set the list of nodes

Nodes="stroomp00.strmdev00.org stroomp01.strmdev00.org"

for oN in ${Nodes}; do

_n=`echo ${oN} | cut -f1 -d\.`

(

printf '# Workers.properties for Stroom Cluster member: %s\n' ${oN}

printf 'worker.list=loadbalancer,loadbalancer_proxy,local,local_proxy,status\n'

L_t=""

Lp_t=""

for FQDN in ${Nodes}; do

N=`echo ${FQDN} | cut -f1 -d\.`

printf 'worker.%s.port=8009\n' ${N}

printf 'worker.%s.host=%s\n' ${N} ${FQDN}

printf 'worker.%s.type=ajp13\n' ${N}

printf 'worker.%s.lbfactor=1\n' ${N}

printf 'worker.%s.max_packet_size=65536\n' ${N}

printf 'worker.%s_proxy.port=9009\n' ${N}

printf 'worker.%s_proxy.host=%s\n' ${N} ${FQDN}

printf 'worker.%s_proxy.type=ajp13\n' ${N}

printf 'worker.%s_proxy.lbfactor=1\n' ${N}

printf 'worker.%s_proxy.max_packet_size=65536\n' ${N}

L_t="${L_t}${N},"

Lp_t="${Lp_t}${N}_proxy,"

done

L=`echo $L_t | sed -e 's/.$//'`

Lp=`echo $Lp_t | sed -e 's/.$//'`

printf 'worker.loadbalancer.type=lb\n'

printf 'worker.loadbalancer.balance_workers=%s\n' $L

printf 'worker.loadbalancer.sticky_session=1\n'

printf 'worker.loadbalancer_proxy.type=lb\n'

printf 'worker.loadbalancer_proxy.balance_workers=%s\n' $Lp

printf 'worker.loadbalancer_proxy.sticky_session=1\n'

printf 'worker.local.type=lb\n'

printf 'worker.local.balance_workers=%s\n' ${_n}

printf 'worker.local.sticky_session=1\n'

printf 'worker.local_proxy.type=lb\n'

printf 'worker.local_proxy.balance_workers=%s_proxy\n' ${_n}

printf 'worker.local_proxy.sticky_session=1\n'

printf 'worker.status.type=status\n'

) > workers.properties.${_n}

chmod 640 workers.properties.${_n}

done

Now, depending on the node you are on, copy the relevant workers.properties.nodename file to /etc/httpd/conf/workers.properties. The following command makes this simple.

cp workers.properties.`hostname -s` /etc/httpd/conf/workers.properties

If you were to add an additional node to a multi node cluster, say the node stroomp02.strmdev00.org, then you would re-run the above script with

Nodes="stroomp00.strmdev00.org stroomp01.strmdev00.org stroomp02.strmdev00.org"

then redeploy all three files to the respective servers. Also note that for the newly created workers.properties files to take effect on the existing nodes, you will need to restart the Apache httpd service on both of those nodes.
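Before restarting httpd with a regenerated file, a quick consistency check can catch a load balancer that refers to a worker with no host definition. The following is a sketch only: the function name workers_missing_hosts is hypothetical, and it assumes the simple key=value layout produced by the script above.

```shell
# Hypothetical helper: list every worker named in a balance_workers entry that has
# no matching worker.<name>.host line in the workers.properties file given as $1.
workers_missing_hosts() {
    awk -F'=' '
        # Remember every worker that has a host entry.
        $1 ~ /^worker\..*\.host$/ {
            name = $1
            sub(/^worker\./, "", name)
            sub(/\.host$/, "", name)
            has_host[name] = 1
        }
        # Collect every member named by a load balancer.
        $1 ~ /\.balance_workers$/ {
            n = split($2, member, ",")
            for (i = 1; i <= n; i++) wanted[member[i]] = 1
        }
        END {
            for (w in wanted) if (!(w in has_host)) print w
        }
    ' "$1" | sort
}
```

An empty result from workers_missing_hosts /etc/httpd/conf/workers.properties indicates every balanced worker has a host entry; any names printed point to a worker that was referenced but never defined.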

Remember, in multi node cluster deployments, the following files are the same on every node and hence can be created on one node and copied to the others, not forgetting to back up the other nodes’ original files. That is, the files

- /var/www/html/index.html

- /etc/httpd/conf.d/mod_jk.conf

- /etc/httpd/conf/httpd.conf

are to be the same on all nodes. Only the /etc/httpd/conf.d/ssl.conf and /etc/httpd/conf/workers.properties files change.

Mod_JK Standalone or Forwarding Stroom Proxy Deployment

For a Stroom Standalone or Forwarding proxy,

- we configure /etc/httpd/conf.d/mod_jk.conf as per

F=/etc/httpd/conf.d/mod_jk.conf

printf 'LoadModule jk_module modules/mod_jk.so\n' > ${F}

printf 'JkWorkersFile conf/workers.properties\n' >> ${F}

printf 'JkLogFile logs/mod_jk.log\n' >> ${F}

printf 'JkLogLevel info\n' >> ${F}

printf 'JkLogStampFormat "[%%a %%b %%d %%H:%%M:%%S %%Y]"\n' >> ${F}

printf 'JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories\n' >> ${F}

printf 'JkRequestLogFormat "%%w %%V %%T"\n' >> ${F}

printf 'JkMount /stroom/datafeed* local_proxy\n' >> ${F}

printf '# Note: Replaced JkShmFile logs/jk.shm due to SELinux issues. Refer to\n' >> ${F}

printf '# https://bugzilla.redhat.com/bugzilla/show_bug.cgi?id=225452\n' >> ${F}

printf '# The following makes use of the existing /run/httpd directory\n' >> ${F}

printf 'JkShmFile run/jk.shm\n' >> ${F}

printf '<Location /jkstatus/>\n' >> ${F}

printf ' JkMount status\n' >> ${F}

printf ' Order deny,allow\n' >> ${F}

printf ' Deny from all\n' >> ${F}

printf ' Allow from 127.0.0.1\n' >> ${F}

printf '</Location>\n' >> ${F}

chmod 640 ${F}

- we configure /etc/httpd/conf/workers.properties as per

The variable N in the script below should be set to the node name (not FQDN) of your server (e.g. stroomfp0).

N=stroomfp0

FQDN=`hostname -f`

F=/etc/httpd/conf/workers.properties

printf 'worker.list=local_proxy,status\n' > ${F}

printf 'worker.%s_proxy.port=9009\n' ${N} >> ${F}

printf 'worker.%s_proxy.host=%s\n' ${N} ${FQDN} >> ${F}

printf 'worker.%s_proxy.type=ajp13\n' ${N} >> ${F}

printf 'worker.%s_proxy.lbfactor=1\n' ${N} >> ${F}

printf 'worker.local_proxy.type=lb\n' >> ${F}

printf 'worker.local_proxy.balance_workers=%s_proxy\n' ${N} >> ${F}

printf 'worker.local_proxy.sticky_session=1\n' >> ${F}

printf 'worker.status.type=status\n' >> ${F}

chmod 640 ${F}

Mod_JK Single Node Application and Proxy Deployment

For a Stroom Single node Application and Proxy server,

- we configure /etc/httpd/conf.d/mod_jk.conf as per

F=/etc/httpd/conf.d/mod_jk.conf

printf 'LoadModule jk_module modules/mod_jk.so\n' > ${F}

printf 'JkWorkersFile conf/workers.properties\n' >> ${F}

printf 'JkLogFile logs/mod_jk.log\n' >> ${F}

printf 'JkLogLevel info\n' >> ${F}

printf 'JkLogStampFormat "[%%a %%b %%d %%H:%%M:%%S %%Y]"\n' >> ${F}

printf 'JkOptions +ForwardKeySize +ForwardURICompat +ForwardSSLCertChain -ForwardDirectories\n' >> ${F}

printf 'JkRequestLogFormat "%%w %%V %%T"\n' >> ${F}

printf 'JkMount /stroom* local\n' >> ${F}

printf 'JkMount /stroom/remoting/cluster* local\n' >> ${F}

printf 'JkMount /stroom/datafeed* local_proxy\n' >> ${F}

printf 'JkMount /stroom/remoting* local_proxy\n' >> ${F}

printf 'JkMount /stroom/datafeeddirect* local\n' >> ${F}

printf '# Note: Replaced JkShmFile logs/jk.shm due to SELinux issues. Refer to\n' >> ${F}

printf '# https://bugzilla.redhat.com/bugzilla/show_bug.cgi?id=225452\n' >> ${F}

printf '# The following makes use of the existing /run/httpd directory\n' >> ${F}

printf 'JkShmFile run/jk.shm\n' >> ${F}

printf '<Location /jkstatus/>\n' >> ${F}

printf ' JkMount status\n' >> ${F}

printf ' Order deny,allow\n' >> ${F}

printf ' Deny from all\n' >> ${F}

printf ' Allow from 127.0.0.1\n' >> ${F}

printf '</Location>\n' >> ${F}

chmod 640 ${F}

- we configure /etc/httpd/conf/workers.properties as per

The variable N in the script below should be set to the node name (not FQDN) of your server (e.g. stroomp00).

N=stroomp00

FQDN=`hostname -f`

F=/etc/httpd/conf/workers.properties

printf 'worker.list=local,local_proxy,status\n' > ${F}

printf 'worker.%s.port=8009\n' ${N} >> ${F}

printf 'worker.%s.host=%s\n' ${N} ${FQDN} >> ${F}

printf 'worker.%s.type=ajp13\n' ${N} >> ${F}

printf 'worker.%s.lbfactor=1\n' ${N} >> ${F}

printf 'worker.%s.max_packet_size=65536\n' ${N} >> ${F}

printf 'worker.%s_proxy.port=9009\n' ${N} >> ${F}

printf 'worker.%s_proxy.host=%s\n' ${N} ${FQDN} >> ${F}

printf 'worker.%s_proxy.type=ajp13\n' ${N} >> ${F}

printf 'worker.%s_proxy.lbfactor=1\n' ${N} >> ${F}

printf 'worker.%s_proxy.max_packet_size=65536\n' ${N} >> ${F}

printf 'worker.local.type=lb\n' >> ${F}

printf 'worker.local.balance_workers=%s\n' ${N} >> ${F}

printf 'worker.local.sticky_session=1\n' >> ${F}

printf 'worker.local_proxy.type=lb\n' >> ${F}

printf 'worker.local_proxy.balance_workers=%s_proxy\n' ${N} >> ${F}

printf 'worker.local_proxy.sticky_session=1\n' >> ${F}

printf 'worker.status.type=status\n' >> ${F}

chmod 640 ${F}

Final host configuration and web service enablement

Now tidy up the SELinux context for access on all nodes and files via the commands

setsebool -P httpd_enable_homedirs on

setsebool -P httpd_can_network_connect on

chcon --reference /etc/httpd/conf.d/README /etc/httpd/conf.d/mod_jk.conf

chcon --reference /etc/httpd/conf/magic /etc/httpd/conf/workers.properties

We also enable both http and https services via the firewall on all nodes. If you don’t want to present a http access point, then don’t enable it in the firewall setting. This is done with

firewall-cmd --zone=public --add-service=http --permanent

firewall-cmd --zone=public --add-service=https --permanent

firewall-cmd --reload

firewall-cmd --zone=public --list-all

Finally, enable then start the httpd service, correcting any errors. If errors occur, the suggested systemctl status or journal output is a good starting point, but also review the httpd error logs found in /var/log/httpd/.

systemctl enable httpd.service

systemctl start httpd.service

2 - Database Installation

Following this HOWTO will produce a simple, minimally secured database deployment. In a production environment consideration needs to be made for redundancy, better security, data-store location, increased memory usage, and the like.

Stroom has two databases. The first, stroom, is used for management of Stroom itself and the second, statistics, is used for the Stroom Statistics capability. There are many ways to deploy these two databases. One could

- have a single database instance and serve both databases from it

- have two database instances on the same server and serve one database per instance

- have two separate nodes, each with its own database instance

- the list goes on.

In this HOWTO, we describe the deployment of two database instances on the one node, each serving a single database. We provide example deployments using either the MariaDB or MySQL Community versions of MySQL.

Assumptions

- we are installing the MariaDB or MySQL Community RDBMS software.

- the primary database node is ‘stroomdb0.strmdev00.org’.

- installation is on a fully patched minimal Centos 7.3 instance.

- we are installing BOTH databases (stroom and statistics) on the same node - ‘stroomdb0.strmdev00.org’ but with two distinct database engines. The first database will communicate on port 3307 and the second on 3308.

- we are deploying with SELinux in enforcing mode.

- any scripts or commands that should run are in code blocks and are designed to allow the user to cut then paste the commands onto their systems.

- in this document, when a textual screen capture is documented, data entry is identified by the data surrounded by ‘<’ ‘>’ . This excludes enter/return presses.

Installation of Software

MariaDB Server Installation

As MariaDB is directly supported by Centos 7, we simply install the database server software and SELinux policy files, as per

sudo yum -y install policycoreutils-python mariadb-server

MySQL Community Server Installation

As MySQL is not directly supported by Centos 7, we need to install its repository files prior to installation. We get the current MySQL Community release repository rpm and validate its MD5 checksum against the published value found on the MySQL Yum Repository site.

wget https://repo.mysql.com/mysql57-community-release-el7.rpm

md5sum mysql57-community-release-el7.rpm
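The comparison with the published value can also be scripted. The following is a minimal sketch rather than part of the original procedure: the function name verify_md5 is hypothetical, and the expected checksum must be copied from the MySQL Yum Repository site, as none is given here.

```shell
# Hypothetical helper: compare a file's MD5 checksum with an expected value.
# Usage: verify_md5 <file> <expected-md5>
verify_md5() {
    # md5sum prints "<checksum>  <filename>"; keep only the checksum field
    actual=$(md5sum "$1" | awk '{print $1}')
    if [ "${actual}" = "$2" ]; then
        echo "checksum OK"
    else
        echo "checksum MISMATCH: expected $2, got ${actual}" >&2
        return 1
    fi
}

# Example (the second argument is a placeholder for the published checksum):
# verify_md5 mysql57-community-release-el7.rpm PUBLISHED_MD5_VALUE
```

The function returns a non-zero status on a mismatch, so it can guard a following command with &&.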

On correct validation of the MD5 checksum, we install the repository files via

sudo yum -y localinstall mysql57-community-release-el7.rpm

NOTE: Stroom currently does not support the latest production MySQL version - 5.7. You will need to install MySQL Version 5.6.

Since we must use MySQL Version 5.6, you will need to edit the MySQL repo file /etc/yum.repos.d/mysql-community.repo to disable the mysql57-community channel and enable the mysql56-community channel. We start by backing up the repo file with

sudo cp /etc/yum.repos.d/mysql-community.repo /etc/yum.repos.d/mysql-community.repo.ORIG

Then edit the file to change

...

# Enable to use MySQL 5.6

[mysql56-community]

name=MySQL 5.6 Community Server

baseurl=http://repo.mysql.com/yum/mysql-5.6-community/el/7/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

[mysql57-community]

name=MySQL 5.7 Community Server

baseurl=http://repo.mysql.com/yum/mysql-5.7-community/el/7/$basearch/

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

...

to become

...

# Enable to use MySQL 5.6

[mysql56-community]

name=MySQL 5.6 Community Server

baseurl=http://repo.mysql.com/yum/mysql-5.6-community/el/7/$basearch/

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

[mysql57-community]

name=MySQL 5.7 Community Server

baseurl=http://repo.mysql.com/yum/mysql-5.7-community/el/7/$basearch/

enabled=0

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-mysql

...
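For those who prefer not to edit the file by hand, the same change can be sketched with awk. This is an illustration only: the function name switch_to_mysql56 is hypothetical, and it assumes the section names and enabled= lines appear exactly as shown above.

```shell
# Hypothetical helper: print a copy of the repo file with the 5.6 channel
# enabled and the 5.7 channel disabled. Other sections are left unchanged.
switch_to_mysql56() {
    awk '
        /^\[/ { section = $0 }    # remember which section we are in
        /^enabled=/ && section == "[mysql56-community]" { print "enabled=1"; next }
        /^enabled=/ && section == "[mysql57-community]" { print "enabled=0"; next }
        { print }
    ' "$1"
}

# Example, reading the backup taken earlier and rewriting the live file:
# switch_to_mysql56 /etc/yum.repos.d/mysql-community.repo.ORIG | \
#     sudo tee /etc/yum.repos.d/mysql-community.repo > /dev/null
```

Because the function writes to standard output, the result can be reviewed before it replaces the live file.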

Next we install server software and SELinux policy files, as per

sudo yum -y install policycoreutils-python mysql-community-server

Preparing the Database Deployment

MariaDB Variant

Create and instantiate both database instances

To set up two MariaDB database instances on the one node, we will use mysqld_multi and systemd service templates. The mysqld_multi utility manages multiple MariaDB database instances on the same node, and systemd service templates manage multiple services from one configuration file. A systemd service template is distinguished by the @ character before the .service suffix.

To use this multiple-instance capability, we need to create a data directory for each of the two database instances and also replace the main MariaDB configuration file, /etc/my.cnf, with one that includes configuration of key options for each instance. We will name our instances mysqld0 and mysqld1. We will also create specific log files for each instance.

We will use the directories, /var/lib/mysql-mysqld0 and /var/lib/mysql-mysqld1 for the data directories and /var/log/mariadb/mysql-mysqld0.log and /var/log/mariadb/mysql-mysqld1.log for the log files. Note you should modify /etc/logrotate.d/mariadb to manage these log files. Note also, we need to set the appropriate SELinux file contexts on the created directories and any files.

We create the data directories and log files and set their respective SELinux contexts via

sudo mkdir /var/lib/mysql-mysqld0

sudo chown mysql:mysql /var/lib/mysql-mysqld0

sudo semanage fcontext -a -t mysqld_db_t "/var/lib/mysql-mysqld0(/.*)?"

sudo restorecon -Rv /var/lib/mysql-mysqld0

sudo touch /var/log/mariadb/mysql-mysqld0.log

sudo chown mysql:mysql /var/log/mariadb/mysql-mysqld0.log

sudo chcon --reference=/var/log/mariadb/mariadb.log /var/log/mariadb/mysql-mysqld0.log

sudo mkdir /var/lib/mysql-mysqld1

sudo chown mysql:mysql /var/lib/mysql-mysqld1

sudo semanage fcontext -a -t mysqld_db_t "/var/lib/mysql-mysqld1(/.*)?"

sudo restorecon -Rv /var/lib/mysql-mysqld1

sudo touch /var/log/mariadb/mysql-mysqld1.log

sudo chown mysql:mysql /var/log/mariadb/mysql-mysqld1.log

sudo chcon --reference=/var/log/mariadb/mariadb.log /var/log/mariadb/mysql-mysqld1.log

We now initialise our two database data directories via

sudo mysql_install_db --user=mysql --datadir=/var/lib/mysql-mysqld0

sudo mysql_install_db --user=mysql --datadir=/var/lib/mysql-mysqld1

We now replace the MySQL configuration file to set the options for each instance. Note that we will serve mysqld0 and mysqld1 via TCP ports 3307 and 3308 respectively. First back up the existing configuration file with

sudo cp /etc/my.cnf /etc/my.cnf.ORIG

then setup /etc/my.cnf as per

sudo bash

F=/etc/my.cnf

printf '[mysqld_multi]\n' > ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf '\n' >> ${F}

printf '[mysqld0]\n' >> ${F}

printf 'port=3307\n' >> ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf 'datadir=/var/lib/mysql-mysqld0/\n' >> ${F}

printf 'socket=/var/lib/mysql-mysqld0/mysql.sock\n' >> ${F}

printf 'pid-file=/var/run/mariadb/mysql-mysqld0.pid\n' >> ${F}

printf '\n' >> ${F}

printf 'log-error=/var/log/mariadb/mysql-mysqld0.log\n' >> ${F}

printf '\n' >> ${F}

printf '# Disabling symbolic-links is recommended to prevent assorted security\n' >> ${F}

printf '# risks\n' >> ${F}

printf 'symbolic-links=0\n' >> ${F}

printf '\n' >> ${F}

printf '[mysqld1]\n' >> ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf 'port=3308\n' >> ${F}

printf 'datadir=/var/lib/mysql-mysqld1/\n' >> ${F}

printf 'socket=/var/lib/mysql-mysqld1/mysql.sock\n' >> ${F}

printf 'pid-file=/var/run/mariadb/mysql-mysqld1.pid\n' >> ${F}

printf '\n' >> ${F}

printf 'log-error=/var/log/mariadb/mysql-mysqld1.log\n' >> ${F}

printf '\n' >> ${F}

printf '# Disabling symbolic-links is recommended to prevent assorted security risks\n' >> ${F}

printf 'symbolic-links=0\n' >> ${F}

exit # To exit the root shell
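The two instance sections differ only in the instance number and port, so they can also be produced from one template. The sketch below is illustrative only: the function name instance_stanza is hypothetical, and it prints the sections to standard output so they can be reviewed rather than writing to /etc/my.cnf.

```shell
# Hypothetical helper: print the my.cnf section for instance number $1
# listening on TCP port $2, using the MariaDB paths chosen above.
instance_stanza() {
    cat <<EOF
[mysqld$1]
port=$2
mysqld = /usr/bin/mysqld_safe --basedir=/usr
datadir=/var/lib/mysql-mysqld$1/
socket=/var/lib/mysql-mysqld$1/mysql.sock
pid-file=/var/run/mariadb/mysql-mysqld$1.pid
log-error=/var/log/mariadb/mysql-mysqld$1.log
symbolic-links=0
EOF
}

# Preview both sections without touching /etc/my.cnf
instance_stanza 0 3307
instance_stanza 1 3308
```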

We also need to associate the ports with the mysqld_port_t SELinux context as per

sudo semanage port -a -t mysqld_port_t -p tcp 3307

sudo semanage port -a -t mysqld_port_t -p tcp 3308

We next create the systemd service template as per

sudo bash

F=/etc/systemd/system/mysqld@.service

printf '# Install in /etc/systemd/system\n' > ${F}

printf '# Enable via systemctl enable mysqld@0 or systemctl enable mysqld@1\n' >> ${F}

printf '[Unit]\n' >> ${F}

printf 'Description=MySQL Multi Server for instance %%i\n' >> ${F}

printf 'After=syslog.target\n' >> ${F}

printf 'After=network.target\n' >> ${F}

printf '\n' >> ${F}

printf '[Service]\n' >> ${F}

printf 'User=mysql\n' >> ${F}

printf 'Group=mysql\n' >> ${F}

printf 'Type=forking\n' >> ${F}

printf 'ExecStart=/usr/bin/mysqld_multi start %%i\n' >> ${F}

printf 'ExecStop=/usr/bin/mysqld_multi stop %%i\n' >> ${F}

printf 'Restart=always\n' >> ${F}

printf 'PrivateTmp=true\n' >> ${F}

printf '\n' >> ${F}

printf '[Install]\n' >> ${F}

printf 'WantedBy=multi-user.target\n' >> ${F}

chmod 644 ${F}

exit; # to exit the root shell

We next enable and start both instances via

sudo systemctl enable mysqld@0

sudo systemctl enable mysqld@1

sudo systemctl start mysqld@0

sudo systemctl start mysqld@1

At this point we should have both instances running. One should check each instance’s log file for any errors.
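One way to check the logs is to search them for lines tagged [ERROR]. The following is a minimal sketch: the function name scan_log_errors is hypothetical, and it assumes errors are tagged with [ERROR] in the instance log files.

```shell
# Hypothetical helper: print any [ERROR] lines found in the given log files.
# Returns 0 when none are found, 1 when some are, 2 if grep itself failed.
scan_log_errors() {
    grep -H '\[ERROR\]' "$@"
    case $? in
        0) return 1 ;;   # matching lines were printed
        1) return 0 ;;   # no [ERROR] lines found
        *) return 2 ;;   # grep failed, e.g. an unreadable file
    esac
}

# Example, run as a user able to read the log files:
# systemctl is-active mysqld@0 mysqld@1
# scan_log_errors /var/log/mariadb/mysql-mysqld0.log /var/log/mariadb/mysql-mysqld1.log
```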

Secure each database instance

We secure each database engine by running the mysql_secure_installation script. One should accept all defaults, which means the only entry (aside from pressing returns) is the administrator (root) database password. Make a note of the password you use. In this case we will use Stroom5User@.

The utility mysql_secure_installation expects to find the Linux socket file to access the database it is securing at /var/lib/mysql/mysql.sock. Since we have used other locations, we temporarily link the real socket file to /var/lib/mysql/mysql.sock for each invocation of the utility. Thus we execute

sudo ln /var/lib/mysql-mysqld0/mysql.sock /var/lib/mysql/mysql.sock

sudo mysql_secure_installation

to see

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MariaDB to secure it, we'll need the current

password for the root user. If you've just installed MariaDB, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MariaDB

root user without the proper authorisation.

Set root password? [Y/n]

New password: <__ Stroom5User@ __>

Re-enter new password: <__ Stroom5User@ __>

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MariaDB installation has an anonymous user, allowing anyone

to log into MariaDB without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n]

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n]

... Success!

By default, MariaDB comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n]

- Dropping test database...

... Success!

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n]

... Success!

Cleaning up...

All done! If you've completed all of the above steps, your MariaDB

installation should now be secure.

Thanks for using MariaDB!

then we execute

sudo rm /var/lib/mysql/mysql.sock

sudo ln /var/lib/mysql-mysqld1/mysql.sock /var/lib/mysql/mysql.sock

sudo mysql_secure_installation

sudo rm /var/lib/mysql/mysql.sock

and proceed as before when running mysql_secure_installation. At this point both database instances should be secure.

MySQL Community Variant

Create and instantiate both database instances

To set up two MySQL database instances on the one node, we will use mysqld_multi and systemd service templates. The mysqld_multi utility manages multiple MySQL database instances on the same node, and systemd service templates manage multiple services from one configuration file. A systemd service template is distinguished by the @ character before the .service suffix.

To use this multiple-instance capability, we need to create a data directory for each of the two database instances and also replace the main MySQL configuration file, /etc/my.cnf, with one that includes configuration of key options for each instance. We will name our instances mysqld0 and mysqld1. We will also create specific log files for each instance.

We will use the directories /var/lib/mysql-mysqld0 and /var/lib/mysql-mysqld1 for the data directories and /var/log/mysql-mysqld0.log and /var/log/mysql-mysqld1.log for the log files. Note you should modify /etc/logrotate.d/mysql to manage these log files. Note also, we need to set the appropriate SELinux file context on the created directories and files.

sudo mkdir /var/lib/mysql-mysqld0

sudo chown mysql:mysql /var/lib/mysql-mysqld0

sudo semanage fcontext -a -t mysqld_db_t "/var/lib/mysql-mysqld0(/.*)?"

sudo restorecon -Rv /var/lib/mysql-mysqld0

sudo touch /var/log/mysql-mysqld0.log

sudo chown mysql:mysql /var/log/mysql-mysqld0.log

sudo chcon --reference=/var/log/mysqld.log /var/log/mysql-mysqld0.log

sudo mkdir /var/lib/mysql-mysqld1

sudo chown mysql:mysql /var/lib/mysql-mysqld1

sudo semanage fcontext -a -t mysqld_db_t "/var/lib/mysql-mysqld1(/.*)?"

sudo restorecon -Rv /var/lib/mysql-mysqld1

sudo touch /var/log/mysql-mysqld1.log

sudo chown mysql:mysql /var/log/mysql-mysqld1.log

sudo chcon --reference=/var/log/mysqld.log /var/log/mysql-mysqld1.log

We now initialise our two database data directories via

sudo mysql_install_db --user=mysql --datadir=/var/lib/mysql-mysqld0

sudo mysql_install_db --user=mysql --datadir=/var/lib/mysql-mysqld1

Disable the default database via

sudo systemctl disable mysqld

We now modify the MySQL configuration file to set the options for each instance. Note that we will serve mysqld0 and mysqld1 via TCP ports 3307 and 3308 respectively. First back up the existing configuration file with

sudo cp /etc/my.cnf /etc/my.cnf.ORIG

then setup /etc/my.cnf as per

sudo bash

F=/etc/my.cnf

printf '[mysqld_multi]\n' > ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf '\n' >> ${F}

printf '[mysqld0]\n' >> ${F}

printf 'port=3307\n' >> ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf 'datadir=/var/lib/mysql-mysqld0/\n' >> ${F}

printf 'socket=/var/lib/mysql-mysqld0/mysql.sock\n' >> ${F}

printf 'pid-file=/var/run/mysqld/mysql-mysqld0.pid\n' >> ${F}

printf '\n' >> ${F}

printf 'log-error=/var/log/mysql-mysqld0.log\n' >> ${F}

printf '\n' >> ${F}

printf '# Disabling symbolic-links is recommended to prevent assorted security\n' >> ${F}

printf '# risks\n' >> ${F}

printf 'symbolic-links=0\n' >> ${F}

printf '\n' >> ${F}

printf '[mysqld1]\n' >> ${F}

printf 'mysqld = /usr/bin/mysqld_safe --basedir=/usr\n' >> ${F}

printf 'port=3308\n' >> ${F}

printf 'datadir=/var/lib/mysql-mysqld1/\n' >> ${F}

printf 'socket=/var/lib/mysql-mysqld1/mysql.sock\n' >> ${F}

printf 'pid-file=/var/run/mysqld/mysql-mysqld1.pid\n' >> ${F}

printf '\n' >> ${F}

printf 'log-error=/var/log/mysql-mysqld1.log\n' >> ${F}

printf '\n' >> ${F}

printf '# Disabling symbolic-links is recommended to prevent assorted security risks\n' >> ${F}

printf 'symbolic-links=0\n' >> ${F}

exit # To exit the root shell

We also need to associate the ports with the mysqld_port_t SELinux context as per

sudo semanage port -a -t mysqld_port_t -p tcp 3307

sudo semanage port -a -t mysqld_port_t -p tcp 3308

We next create the systemd service template as per

sudo bash

F=/etc/systemd/system/mysqld@.service

printf '# Install in /etc/systemd/system\n' > ${F}

printf '# Enable via systemctl enable mysqld@0 or systemctl enable mysqld@1\n' >> ${F}

printf '[Unit]\n' >> ${F}

printf 'Description=MySQL Multi Server for instance %%i\n' >> ${F}

printf 'After=syslog.target\n' >> ${F}

printf 'After=network.target\n' >> ${F}

printf '\n' >> ${F}

printf '[Service]\n' >> ${F}

printf 'User=mysql\n' >> ${F}

printf 'Group=mysql\n' >> ${F}

printf 'Type=forking\n' >> ${F}

printf 'ExecStart=/usr/bin/mysqld_multi start %%i\n' >> ${F}

printf 'ExecStop=/usr/bin/mysqld_multi stop %%i\n' >> ${F}

printf 'Restart=always\n' >> ${F}

printf 'PrivateTmp=true\n' >> ${F}

printf '\n' >> ${F}

printf '[Install]\n' >> ${F}

printf 'WantedBy=multi-user.target\n' >> ${F}

chmod 644 ${F}

exit; # to exit the root shell

We next enable and start both instances via

sudo systemctl enable mysqld@0

sudo systemctl enable mysqld@1

sudo systemctl start mysqld@0

sudo systemctl start mysqld@1

At this point we should have both instances running. One should check each instance’s log file for any errors.

Secure each database instance

We secure each database engine by running the mysql_secure_installation script. One should accept all defaults, which means the only entry (aside from pressing returns) is the administrator (root) database password. Make a note of the password you use. In this case we will use Stroom5User@.

The utility mysql_secure_installation expects to find the Linux socket file to access the database it is securing at /var/lib/mysql/mysql.sock. Since we have used other locations, we temporarily link the real socket file to /var/lib/mysql/mysql.sock for each invocation of the utility. Thus we execute

sudo ln /var/lib/mysql-mysqld0/mysql.sock /var/lib/mysql/mysql.sock

sudo mysql_secure_installation

to see

NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MySQL

SERVERS IN PRODUCTION USE! PLEASE READ EACH STEP CAREFULLY!

In order to log into MySQL to secure it, we'll need the current

password for the root user. If you've just installed MySQL, and

you haven't set the root password yet, the password will be blank,

so you should just press enter here.

Enter current password for root (enter for none):

OK, successfully used password, moving on...

Setting the root password ensures that nobody can log into the MySQL

root user without the proper authorisation.

Set root password? [Y/n] y

New password: <__ Stroom5User@ __>

Re-enter new password: <__ Stroom5User@ __>

Password updated successfully!

Reloading privilege tables..

... Success!

By default, a MySQL installation has an anonymous user, allowing anyone

to log into MySQL without having to have a user account created for

them. This is intended only for testing, and to make the installation

go a bit smoother. You should remove them before moving into a

production environment.

Remove anonymous users? [Y/n]

... Success!

Normally, root should only be allowed to connect from 'localhost'. This

ensures that someone cannot guess at the root password from the network.

Disallow root login remotely? [Y/n]

... Success!

By default, MySQL comes with a database named 'test' that anyone can

access. This is also intended only for testing, and should be removed

before moving into a production environment.

Remove test database and access to it? [Y/n]

- Dropping test database...

ERROR 1008 (HY000) at line 1: Can't drop database 'test'; database doesn't exist

... Failed! Not critical, keep moving...

- Removing privileges on test database...

... Success!

Reloading the privilege tables will ensure that all changes made so far

will take effect immediately.

Reload privilege tables now? [Y/n]

... Success!

All done! If you've completed all of the above steps, your MySQL

installation should now be secure.

Thanks for using MySQL!

Cleaning up...

then we execute

sudo rm /var/lib/mysql/mysql.sock

sudo ln /var/lib/mysql-mysqld1/mysql.sock /var/lib/mysql/mysql.sock

sudo mysql_secure_installation

sudo rm /var/lib/mysql/mysql.sock

and proceed as before when running mysql_secure_installation. At this point both database instances should be secure.

Create the Databases and Enable access by the Stroom processing users

We now create the stroom database within the first instance, mysqld0, and the statistics database within the second instance, mysqld1. It does not matter which database variant is used as all commands are the same for both.

As well as creating the databases, we also need to establish the Stroom processing users that the Stroom processing nodes will use to access each database.

For the stroom database, we will use the database user stroomuser with a password of Stroompassword1@ and for the statistics database, we will use the database user stroomstats with a password of Stroompassword2@. One identifies a processing user as <user>@<host> on a grant SQL command.

In the stroom database instance, we will grant access for

- stroomuser@localhost for local access for maintenance etc.

- stroomuser@stroomp00.strmdev00.org for access by processing node stroomp00.strmdev00.org

- stroomuser@stroomp01.strmdev00.org for access by processing node stroomp01.strmdev00.org

and in the statistics database instance, we will grant access for

- stroomstats@localhost for local access for maintenance etc.

- stroomstats@stroomp00.strmdev00.org for access by processing node stroomp00.strmdev00.org

- stroomstats@stroomp01.strmdev00.org for access by processing node stroomp01.strmdev00.org

Thus for the stroom database we execute

mysql --user=root --port=3307 --socket=/var/lib/mysql-mysqld0/mysql.sock --password

and on entering the administrator’s password, we arrive at the MariaDB [(none)]> or mysql> prompt. At this point we create the database with

create database stroom;

and then to establish the users, we execute

grant all privileges on stroom.* to stroomuser@localhost identified by 'Stroompassword1@';

grant all privileges on stroom.* to stroomuser@stroomp00.strmdev00.org identified by 'Stroompassword1@';

grant all privileges on stroom.* to stroomuser@stroomp01.strmdev00.org identified by 'Stroompassword1@';

then

quit;

to exit.

And for the statistics database

mysql --user=root --port=3308 --socket=/var/lib/mysql-mysqld1/mysql.sock --password

with

create database statistics;

and then to establish the users, we execute

grant all privileges on statistics.* to stroomstats@localhost identified by 'Stroompassword2@';

grant all privileges on statistics.* to stroomstats@stroomp00.strmdev00.org identified by 'Stroompassword2@';

grant all privileges on statistics.* to stroomstats@stroomp01.strmdev00.org identified by 'Stroompassword2@';

then

quit;

to exit.

Clearly if we need to add more processing nodes, additional grant commands would be used. Further, if we were installing the databases in a single node Stroom environment, we would just have the first two pairs of grants.
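As the grant commands differ only in the host name, they can be generated for any list of nodes. The following is a sketch only: the function name grant_statements is hypothetical, and it simply prints statements in the same form as those used above so they can be reviewed before being run at the database prompt.

```shell
# Hypothetical helper: print one grant statement per host.
# Usage: grant_statements <database> <user> <password> <host>...
grant_statements() {
    db="$1"; user="$2"; password="$3"
    shift 3
    for host in "$@"; do
        printf "grant all privileges on %s.* to %s@%s identified by '%s';\n" \
            "${db}" "${user}" "${host}" "${password}"
    done
}

# Example: the three grants used above for the stroom database
grant_statements stroom stroomuser 'Stroompassword1@' \
    localhost stroomp00.strmdev00.org stroomp01.strmdev00.org
```

Adding a further processing node then only requires appending its host name to the argument list.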

Configure Firewall

Next we need to modify our firewall to allow remote access to our databases, which listen on ports 3307 and 3308. The simplest way to achieve this is with the commands

sudo firewall-cmd --zone=public --add-port=3307/tcp --permanent

sudo firewall-cmd --zone=public --add-port=3308/tcp --permanent

sudo firewall-cmd --reload

sudo firewall-cmd --zone=public --list-all
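Should access need to be limited to particular nodes, firewalld rich rules are one option that could be used in place of the --add-port commands. The sketch below only prints candidate commands for review: the function name db_rich_rules is hypothetical, and 192.168.2.126 is used purely as an example address for a processing node.

```shell
# Hypothetical helper: print firewall-cmd rich-rule commands that accept
# connections to one TCP port from the listed IPv4 source addresses only.
# Usage: db_rich_rules <port> <address>...
db_rich_rules() {
    port="$1"
    shift
    for addr in "$@"; do
        printf '%s\n' "firewall-cmd --zone=public --permanent --add-rich-rule='rule family=\"ipv4\" source address=\"${addr}\" port port=\"${port}\" protocol=\"tcp\" accept'"
    done
}

# Example: print the rule for the stroom database port
db_rich_rules 3307 192.168.2.126
```

The printed commands would be run with sudo and followed by a firewall-cmd --reload, as for the commands above.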

Note

This allows ANY node to connect to your databases. You should give consideration to restricting this to only allowing processing node access.

Debugging of Mariadb for Stroom

If there is a need to debug the Mariadb database and Stroom interaction, one can turn on auditing for the Mariadb service. To do so, log onto the relevant database as the administrative user as per

mysql --user=root --port=3307 --socket=/var/lib/mysql-mysqld0/mysql.sock --password

or

mysql --user=root --port=3308 --socket=/var/lib/mysql-mysqld1/mysql.sock --password

and at the MariaDB [(none)]> prompt enter

install plugin server_audit SONAME 'server_audit';

set global server_audit_file_path='/var/log/mariadb/mysqld-mysqld0_server_audit.log';

or

set global server_audit_file_path='/var/log/mariadb/mysqld-mysqld1_server_audit.log';

set global server_audit_logging=ON;

set global server_audit_file_rotate_size=10485760;

install plugin SQL_ERROR_LOG soname 'sql_errlog';

quit;

The above will generate two log files,

- /var/log/mariadb/mysqld-mysqld0_server_audit.log or /var/log/mariadb/mysqld-mysqld1_server_audit.log which records all commands the respective databases run. We have configured the log file to rotate at 10MB in size.

- /var/lib/mysql-mysqld0/sql_errors.log or /var/lib/mysql-mysqld1/sql_errors.log which records all erroneous SQL commands. This log file will rotate at 10MB in size. Note we cannot set this filename via the UI, but it will appear in the data directory.

All files will, by default, generate up to 9 rotated files.

If you wish to rotate a log file manually, log into the database as the administrative user and execute either

- set global server_audit_file_rotate_now=1; to rotate the audit log file

- set global sql_error_log_rotate=1; to rotate the sql_errlog log file

Initial Database Access

It should be noted that if you monitor the sql_errors.log log file on a new Stroom deployment, when the Stroom Application first starts, its initial access to the stroom database will result in the following attempted SQL statements.

2017-04-16 16:24:50 stroomuser[stroomuser] @ stroomp00.strmdev00.org [192.168.2.126] ERROR 1146: Table 'stroom.schema_version' doesn't exist : SELECT version FROM schema_version ORDER BY installed_rank DESC

2017-04-16 16:24:50 stroomuser[stroomuser] @ stroomp00.strmdev00.org [192.168.2.126] ERROR 1146: Table 'stroom.STROOM_VER' doesn't exist : SELECT VER_MAJ, VER_MIN, VER_PAT FROM STROOM_VER ORDER BY VER_MAJ DESC, VER_MIN DESC, VER_PAT DESC LIMIT 1

2017-04-16 16:24:50 stroomuser[stroomuser] @ stroomp00.strmdev00.org [192.168.2.126] ERROR 1146: Table 'stroom.FD' doesn't exist : SELECT ID FROM FD LIMIT 1

2017-04-16 16:24:50 stroomuser[stroomuser] @ stroomp00.strmdev00.org [192.168.2.126] ERROR 1146: Table 'stroom.FEED' doesn't exist : SELECT ID FROM FEED LIMIT 1

After this access the application will realise the database does not exist and it will initialise the database.

In the case of the statistics database you may note the following attempted access

2017-04-16 16:25:09 stroomstats[stroomstats] @ stroomp00.strmdev00.org [192.168.2.126] ERROR 1146: Table 'statistics.schema_version' doesn't exist : SELECT version FROM schema_version ORDER BY installed_rank DESC

Again, at this point the application will initialise this database.

3 - Installation

Assumptions

The following assumptions are used in this document.

- the user has reasonable RHEL/Centos System administration skills.

- installations are on Centos 7.3 minimal systems (fully patched).

- the term ’node’ is used to reference the ‘host’ a service is running on.

- the Stroom Proxy and Application software runs as user ‘stroomuser’ and will be deployed in this user’s home directory

- data will reside in a directory tree referenced via ‘/stroomdata’. It is up to the user to provision a filesystem here, noting sub-directories of it will be NFS shared in Multi Node Stroom Deployments

- any scripts or commands that should run are in code blocks and are designed to allow the user to cut then paste the commands onto their systems

- in this document, when a textual screen capture is documented, data entry is identified by the data surrounded by ‘<’ ‘>’ . This excludes enter/return presses.

- better security of password choices, networking, firewalls, data stores, etc. can and should be achieved in various ways, but these HOWTOs are just a quick means of getting a working system, so only limited security is applied

- better configuration of the database (e.g. more memory, redundancy) should be considered in production environments

- the use of self signed certificates is appropriate for test systems, but users should consider appropriate CA infrastructure in production environments

- the user has access to a Chrome web browser as Stroom is optimised for this browser.

Introduction

This HOWTO provides guidance on a variety of simple Stroom deployments:

- for an environment where multiple nodes are required to handle the processing load.

- for extensive networks where one wants to aggregate data through a proxy before sending data to the central Stroom processing systems.

- for disconnected networks where collected data can be manually transferred to a Stroom processing service.

- for when one needs to add an additional node to an existing cluster.

Nodename Nomenclature

For simplicity's sake, the nodenames used in this HOWTO are geared towards the Multi Node Stroom Cluster deployment. That is,

- the database nodename is stroomdb0.strmdev00.org

- the processing nodenames are stroomp00.strmdev00.org, stroomp01.strmdev00.org, and stroomp02.strmdev00.org

- the first node in our cluster, stroomp00.strmdev00.org, also has the CNAME stroomp.strmdev00.org

In the case of the Proxy only deployments,

- the forwarding Stroom proxy nodename is stroomfp0.strmdev00.org

- the standalone nodename will be stroomp00.strmdev00.org

Storage

Both the Stroom Proxy and Application store data. The typical requirement is

- directory for Stroom proxy to store inbound data files

- directory for Stroom application permanent data files (events, etc.)

- directory for Stroom application index data files

- directory for Stroom application working files (temporary files, output, etc.)

Where multiple processing nodes are involved, the application’s permanent data directories need to be accessible by all participating nodes.

Thus a hierarchy for a Stroom Proxy might be

- /stroomdata/stroom-proxy

and for an Application node

- /stroomdata/stroom-data

- /stroomdata/stroom-index

- /stroomdata/stroom-working

In the following examples, the storage hierarchy proposed will be more suited to a multi node Stroom cluster, including the Forwarding or Standalone proxy deployments. This is to simplify the documentation. Thus, the above structure is generalised into

- /stroomdata/stroom-working-pnn/proxy

and

- /stroomdata/stroom-data-pnn

- /stroomdata/stroom-index-pnn

- /stroomdata/stroom-working-pnn

where nn is a two digit node number. The reason for placing the proxy directory within the Application working area will be explained later.

All data should be owned by the Stroom processing user. In this HOWTO, we will use stroomuser.
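The generalised hierarchy can be created for a given node number with a small helper. This is a sketch under stated assumptions: the function name make_stroom_dirs is hypothetical, and the base directory is passed as an argument so the layout can be tried somewhere other than /stroomdata first.

```shell
# Hypothetical helper: create the storage tree for processing node <nn>
# beneath <base> (this HOWTO uses /stroomdata as the base).
# Usage: make_stroom_dirs <base> <nn>
make_stroom_dirs() {
    base="$1"; nn="$2"
    # Creating the proxy directory also creates its parent working directory
    mkdir -p \
        "${base}/stroom-data-p${nn}" \
        "${base}/stroom-index-p${nn}" \
        "${base}/stroom-working-p${nn}/proxy"
}

# Example for node 00, followed by setting ownership to the processing user:
# sudo sh -c 'mkdir -p /stroomdata'        # if not already provisioned
# make_stroom_dirs /stroomdata 00
# sudo chown -R stroomuser /stroomdata/stroom-data-p00 \
#     /stroomdata/stroom-index-p00 /stroomdata/stroom-working-p00
```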

Multi Node Stroom Cluster (Proxy and Application) Deployment

In this deployment we will install the database on a given node then deploy both the Stroom Proxy and Stroom Application software to both our processing nodes. At this point we will then integrate a web service to run ‘in-front’ of our Stroom software and then perform the initial configuration of Stroom via the user interface.

Database Installation

The Stroom capability requires access to two MySQL/MariaDB databases. The first is for persisting application configuration and metadata information, and the second is for the Stroom Statistics capability. Instructions for installation of the Stroom databases can be found here. Although these instructions describe the deployment of the databases to their own node, there is no reason why one can’t just install them both on the first (or only) Stroom node.

Prerequisite Software Installation

Certain software packages are required for either the Stroom Proxy or Stroom Application to run.

The core software list is

- java-1.8.0-openjdk

- java-1.8.0-openjdk-devel

- policycoreutils-python

- unzip

- zip

- mariadb or mysql client

Most of the required software are packages available via standard repositories and hence we can simply execute

sudo yum -y install java-1.8.0-openjdk java-1.8.0-openjdk-devel policycoreutils-python unzip zip

One has a choice of database clients. MariaDB is directly supported by Centos 7 and is simplest to install. This is done via

sudo yum -y install mariadb

One could deploy the MySQL database software as the alternative.

To do this, you need to install the MySQL Community repository files and then install the client. Instructions for installation of the MySQL Community repository files can be found here or on the MySQL Site. Once you have installed the MySQL repository files, install the client via

sudo yum -y install mysql-community-client

Note that additional software will be required for other integration components (e.g. Apache httpd/mod_jk). This is described in the Web Service Integration section of this document.

Note also, that Standalone or Forwarding Stroom Proxy deployments do NOT need a database client deployed.
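As a quick check after the installs above, the following sketch lists any expected commands that are not yet on the PATH. The function name missing_tools is hypothetical, and the command names in the usage note (java, javac, semanage, unzip, zip, mysql) are assumptions about what the listed packages provide.

```shell
#!/bin/sh
# Print each named command that cannot be found on the PATH.
# Prints nothing when every command is available.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}
```

On a node that will run the Stroom Application, one might call missing_tools java javac semanage unzip zip mysql and expect no output. On a Standalone or Forwarding proxy node, mysql can be left out of the list.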

Entropy Issues in Virtual environments

Both the Stroom Application and Stroom Proxy currently run on Tomcat (Version 7), which relies on the Java SecureRandom class to provide random values for any generated session identifiers as well as other components. In some circumstances the Java runtime can be delayed if the entropy source that is used to initialise SecureRandom is short of entropy. The delay is caused by the Java runtime waiting on the blocking entropy source /dev/random to have sufficient entropy. This quite often occurs in virtual environments where there are few sources that can contribute to a system’s entropy.

To view the current available entropy on a Linux system, run the command

cat /proc/sys/kernel/random/entropy_avail

A reasonable value would be over 2000 and a poor value would be below a few hundred.
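The guidance above can be turned into a rough check. This is a minimal sketch: the function name classify_entropy is hypothetical, and the lower threshold of 300 is an assumption standing in for "a few hundred". It can be overridden with a second argument.

```shell
#!/bin/sh
# Classify an entropy_avail reading using the guidance in the text.
# $1: the value read from /proc/sys/kernel/random/entropy_avail
# $2: optional "poor" threshold (assumption: defaults to 300)
classify_entropy() {
    value=$1
    poor_below=${2:-300}
    if [ "$value" -gt 2000 ]; then
        echo reasonable
    elif [ "$value" -lt "$poor_below" ]; then
        echo poor
    else
        echo marginal
    fi
}

# Example use on a Linux node:
#   classify_entropy "$(cat /proc/sys/kernel/random/entropy_avail)"
```

A result of poor suggests that the start-up delays described in this section are likely.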

If you are deploying Stroom onto systems with low available entropy, the start time for the Stroom Proxy can be as high as 5 minutes and for the Application as high as 15 minutes.

One software based solution would be to install the haveged service that attempts to provide an easy-to-use, unpredictable random number generator based upon an adaptation of the HAVEGE algorithm. To install execute

yum -y install haveged

systemctl enable haveged

systemctl start haveged

For background reading on this matter, see this reference or this reference.

Storage Scenario

For the purpose of this Installation HOWTO, the following sets up the storage hierarchy for a two node processing cluster. To share our permanent data we will use NFS. Note that the NFS deployment described here is very simple; a production deployment should apply far more security controls.

Our hierarchy is

- Node: stroomp00.strmdev00.org

  - /stroomdata/stroom-data-p00 - location to store Stroom application data files (events, etc.) for this node

  - /stroomdata/stroom-index-p00 - location to store Stroom application index files

  - /stroomdata/stroom-working-p00 - location to store Stroom application working files (e.g. temporary files, output, etc.) for this node

  - /stroomdata/stroom-working-p00/proxy - location for Stroom proxy to store inbound data files

- Node: stroomp01.strmdev00.org

  - /stroomdata/stroom-data-p01 - location to store Stroom application data files (events, etc.) for this node

  - /stroomdata/stroom-index-p01 - location to store Stroom application index files

  - /stroomdata/stroom-working-p01 - location to store Stroom application working files (e.g. temporary files, output, etc.) for this node

  - /stroomdata/stroom-working-p01/proxy - location for Stroom proxy to store inbound data files

Creation of Storage Hierarchy

First, we create the processing user on all nodes with

sudo useradd --system stroomuser

And the relevant commands to create the above hierarchy would be

- Node: stroomp00.strmdev00.org

sudo mkdir -p /stroomdata/stroom-data-p00 /stroomdata/stroom-index-p00 /stroomdata/stroom-working-p00 /stroomdata/stroom-working-p00/proxy

sudo mkdir -p /stroomdata/stroom-data-p01 # So that this node can mount stroomp01's data directory

sudo chown -R stroomuser:stroomuser /stroomdata

sudo chmod -R 750 /stroomdata

- Node: stroomp01.strmdev00.org

sudo mkdir -p /stroomdata/stroom-data-p01 /stroomdata/stroom-index-p01 /stroomdata/stroom-working-p01 /stroomdata/stroom-working-p01/proxy

sudo mkdir -p /stroomdata/stroom-data-p00 # So that this node can mount stroomp00's data directory

sudo chown -R stroomuser:stroomuser /stroomdata

sudo chmod -R 750 /stroomdata
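The per-node commands above follow one pattern, which the following sketch captures so the layout can be rehearsed in a scratch directory first. The function name make_node_tree and its parameters are hypothetical. It deliberately leaves out the chown step, which needs root and the stroomuser account.

```shell
#!/bin/sh
# Create the storage tree for one node plus the mount point for its peer.
# $1: root directory (/stroomdata in the text; any scratch path for a rehearsal)
# $2: this node's two digit number, e.g. 00
# $3: the peer node's two digit number, e.g. 01
make_node_tree() {
    root=$1
    local_nn=$2
    peer_nn=$3
    mkdir -p \
        "$root/stroom-data-p$local_nn" \
        "$root/stroom-index-p$local_nn" \
        "$root/stroom-working-p$local_nn/proxy" \
        "$root/stroom-data-p$peer_nn" &&
    chmod -R 750 "$root"
}
```

For example, make_node_tree /tmp/stroom-rehearsal 00 01 builds the stroomp00 layout, including the empty stroom-data-p01 directory that will later serve as the NFS mount point.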

Deployment of NFS to share Stroom Storage

We will use NFS to cross mount the permanent data directories. That is

- node stroomp00.strmdev00.org will mount stroomp01.strmdev00.org:/stroomdata/stroom-data-p01 and,

- node stroomp01.strmdev00.org will mount stroomp00.strmdev00.org:/stroomdata/stroom-data-p00.
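To make the cross mount concrete, the sketch below prints the kind of /etc/fstab entry each node would need for its peer's data directory. The function name peer_fstab_line is hypothetical and the mount options (defaults 0 0) are an assumption. The linked NFS HOWTO remains the reference for the exports and options actually used.

```shell
#!/bin/sh
# Print an fstab-style line that mounts a peer node's data directory
# at the same path on the local node.
# $1: peer host name, $2: peer two digit node number
peer_fstab_line() {
    peer_host=$1
    peer_nn=$2
    dir=/stroomdata/stroom-data-p$peer_nn
    # Assumption: plain "defaults" options; tighten these for production.
    printf '%s:%s %s nfs defaults 0 0\n' "$peer_host" "$dir" "$dir"
}
```

On stroomp00 one would run peer_fstab_line stroomp01.strmdev00.org 01, and on stroomp01 the call would name stroomp00.strmdev00.org and 00.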

The HOWTO guide to deploy and configure NFS for our Scenario is here

Stroom Installation

Pre-installation setup

Before installing either the Stroom Proxy or Stroom Application, we need to establish various files and scripts within the Stroom Processing user’s home directory to support the Stroom services and their persistence. This setup is described here.

Stroom Proxy Installation

Instructions for installation of the Stroom Proxy can be found here.

Stroom Application Installation

Instructions for installation of the Stroom application can be found here.

Web Service Integration

One typically ‘fronts’ either a Stroom Proxy or Stroom Application with a secure web service such as Apache’s Httpd or NGINX. In our scenario, we will use SSL to secure the web service and further, we will use Apache’s Httpd.

We first need to create certificates for use by the web service. The following provides instructions for this. The created certificates can then be used when configuring the web service.

This HOWTO is designed to deploy Apache’s httpd web service as a front end (https) (to the user) and Apache’s mod_jk as the interface between Apache and the Stroom tomcat applications. The instructions to configure this can be found here.

Other web service capabilities can be used, for example, NGINX.

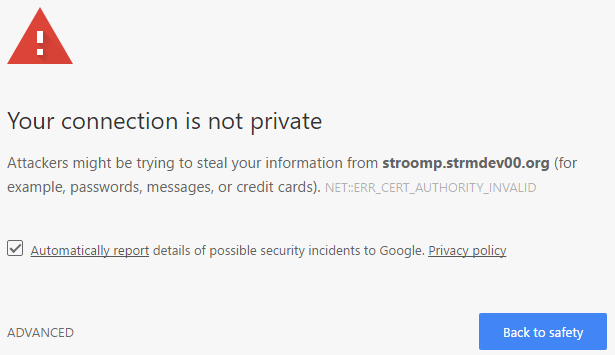

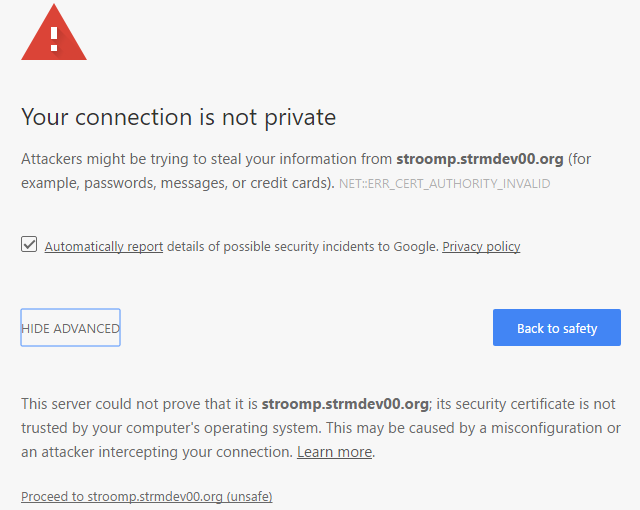

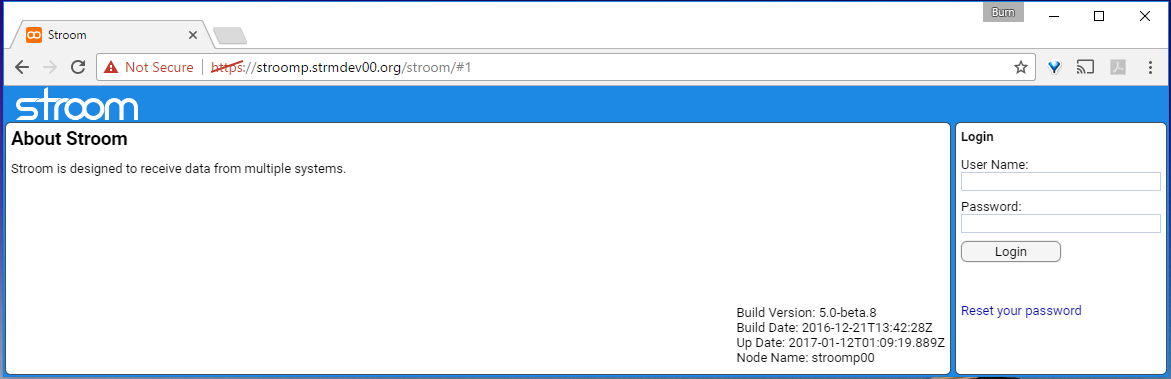

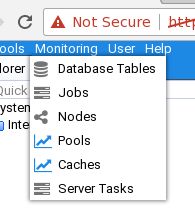

Installation Validation

We will now check that the installation and web services integration has worked.

Sanity firewall check

To ensure you have the firewall correctly set up, the following commands

sudo firewall-cmd --reload

sudo firewall-cmd --zone=public --list-all

should result in

public (active)

target: default

icmp-block-inversion: no

interfaces: enp0s3

sources:

services: dhcpv6-client http https nfs ssh

ports: 8009/tcp 9080/tcp 8080/tcp 9009/tcp

protocols:

masquerade: no

forward-ports:

sourceports:

icmp-blocks:

rich rules:
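Rather than comparing the listing by eye, the output can be checked for the entries this HOWTO depends on. The following is a minimal sketch: the function name check_firewall is hypothetical, and the required ports and services (http, https, nfs) are taken from the listing above on the assumption that these are the ones that matter.

```shell
#!/bin/sh
# Read "firewall-cmd --list-all" output on stdin and report any
# expected port or service that is absent. Returns non-zero if any is missing.
check_firewall() {
    out=$(cat)
    status=0
    # Only lines that begin with "ports:" or "services:" (after indentation)
    # are used, so "forward-ports:" and "sourceports:" are ignored.
    ports=$(printf '%s\n' "$out" | sed -n 's/^ *ports: *//p')
    services=$(printf '%s\n' "$out" | sed -n 's/^ *services: *//p')
    for p in 8009/tcp 9080/tcp 8080/tcp 9009/tcp; do
        case " $ports " in
            *" $p "*) ;;
            *) echo "missing port: $p"; status=1 ;;
        esac
    done
    for s in http https nfs; do
        case " $services " in
            *" $s "*) ;;
            *) echo "missing service: $s"; status=1 ;;
        esac
    done
    return $status
}
```

A typical use would be to pipe the output of sudo firewall-cmd --zone=public --list-all into check_firewall. No output and a zero exit status indicate that all expected entries are present.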

Test Posting of data to the Stroom service

You can test the data posting service with the command

curl -k --data-binary @/etc/group "https://stroomp.strmdev00.org/stroom/datafeed" -H "Feed:TEST-FEED-V1_0" -H "System:EXAMPLE_SYSTEM" -H "Environment:EXAMPLE_ENVIRONMENT"

which WILL result in an error as we have not configured the Stroom Application as yet. The error should look like

<html><head><title>Apache Tomcat/7.0.53 - Error report</title><style><!--H1 {font-family:Tahoma,Arial,sans-serif;color:white;background-color:#525D76;font-size:22px;} H2 {font-family:Tahoma,Arial,sans-serif;color:white;background-color:#525D76;font-size:16px;} H3 {font-family:Tahoma,Arial,sans-serif;color:white;background-color:#525D76;font-size:14px;} BODY {font-family:Tahoma,Arial,sans-serif;color:black;background-color:white;} B {font-family:Tahoma,Arial,sans-serif;color:white;background-color:#525D76;} P {font-family:Tahoma,Arial,sans-serif;background:white;color:black;font-size:12px;}A {color : black;}A.name {color : black;}HR {color : #525D76;}--></style> </head><body><h1>HTTP Status 406 - Stroom Status 110 - Feed is not set to receive data - </h1><HR size="1" noshade="noshade"><p><b>type</b> Status report</p><p><b>message</b> <u>Stroom Status 110 - Feed is not set to receive data - </u></p><p><b>description</b> <u>The resource identified by this request is only capable of generating responses with characteristics not acceptable according to the request "accept" headers.</u></p><HR size="1" noshade="noshade"><h3>Apache Tomcat/7.0.53</h3></body></html>
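The error page is hard to read as raw HTML. As a convenience, the sketch below pulls the HTTP status, the Stroom status and the message out of the h1 element. The function name stroom_status is hypothetical, and the pattern assumes the Tomcat error page layout shown above.

```shell
#!/bin/sh
# Read a Tomcat error page on stdin and print
# "<HTTP status> <Stroom status> <message>" taken from its <h1> element.
# Prints nothing if the page does not match the expected layout.
stroom_status() {
    sed -n 's#.*<h1>HTTP Status \([0-9][0-9]*\) - Stroom Status \([0-9][0-9]*\) - \(.*\) - </h1>.*#\1 \2 \3#p'
}
```

Piping the curl command above into stroom_status (adding -s to curl to silence the progress meter) should, for the response shown, print 406 110 Feed is not set to receive data.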

If you view the Stroom proxy log, ~/stroom-proxy/instance/logs/stroom.log, on both processing nodes, you will see on one node the datafeed.DataFeedRequestHandler events running under, in this case, the ajp-apr-9009-exec-1 thread indicating the failure

...

2017-01-03T03:35:47.366Z WARN [ajp-apr-9009-exec-1] datafeed.DataFeedRequestHandler (DataFeedRequestHandler.java:131) - "handleException()","Environment=EXAMPLE_ENVIRONMENT","Expect=100-continue","Feed=TEST-FEED-V1_0","GUID=39960cf9-e50b-4ae8-a5f2-449ee670d2eb","ReceivedTime=2017-01-03T03:35:46.915Z","RemoteAddress=192.168.2.220","RemoteHost=192.168.2.220","System=EXAMPLE_SYSTEM","accept=*/*","content-length=1051","content-type=application/x-www-form-urlencoded","host=stroomp.strmdev00.org","user-agent=curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2","Stroom Status 110 - Feed is not set to receive data"

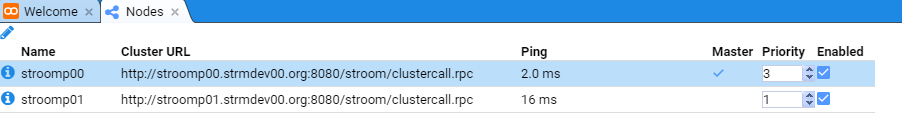

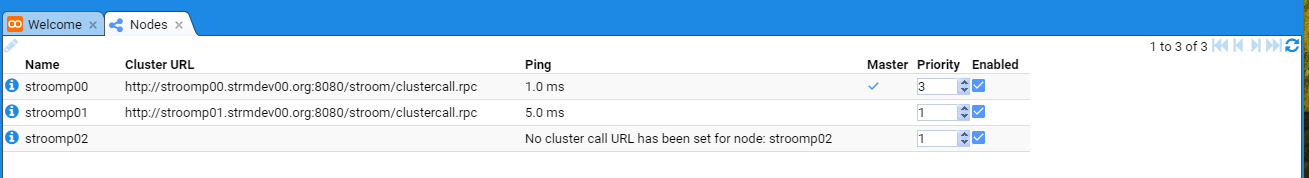

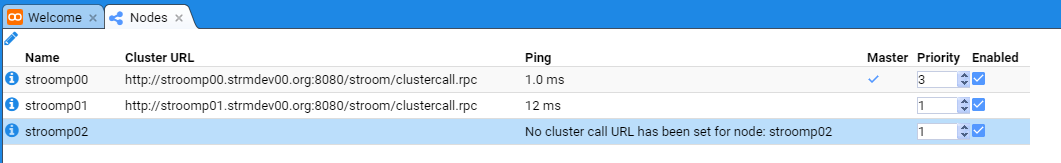

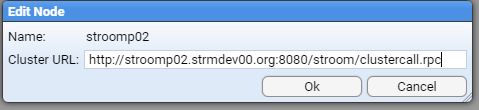

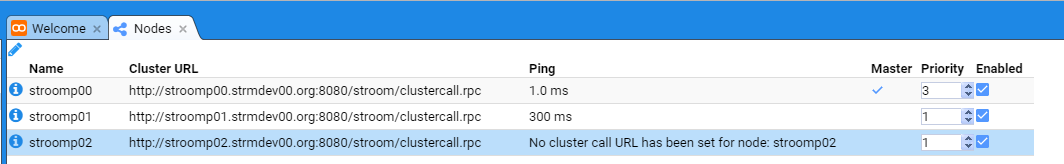

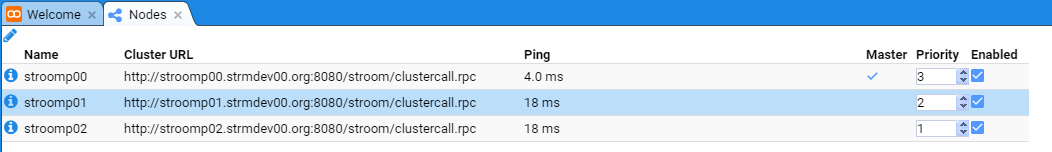

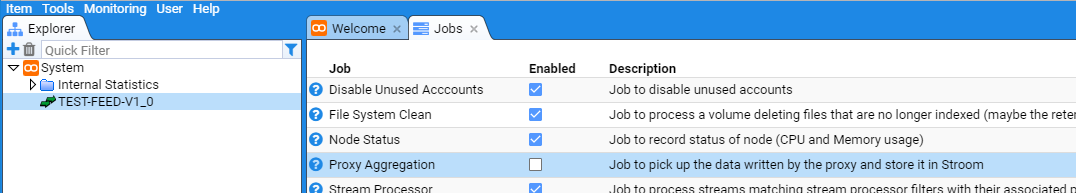

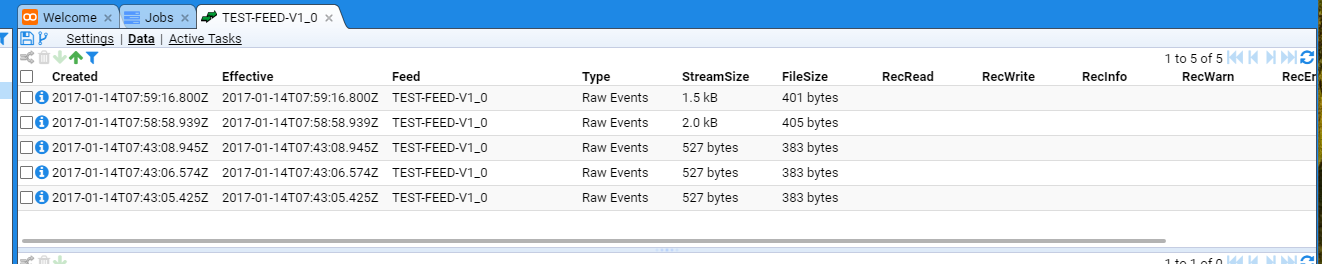

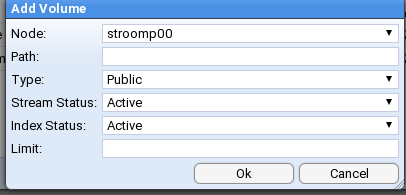

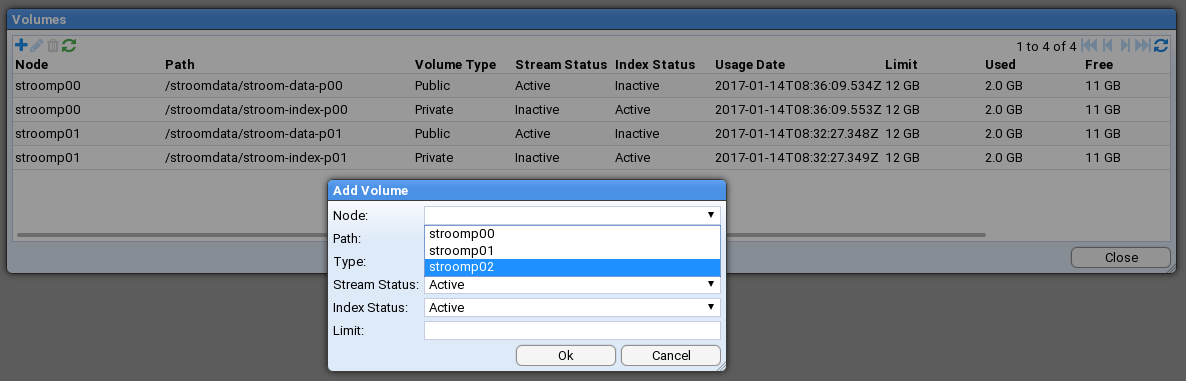

2017-01-03T03:35:47.367Z ERROR [ajp-apr-9009-exec-1] zip.StroomStreamException (StroomStreamException.java:131) - sendErrorResponse() - 406 Stroom Status 110 - Feed is not set to receive data -
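When the proxy log is busy, the rejected posts can be picked out with a small filter. This is a sketch only: the function name feed_rejections is hypothetical, and the pattern assumes the WARN line layout shown in the log extract above, with the Stroom status as the final quoted field.

```shell
#!/bin/sh
# Read a Stroom proxy log on stdin and, for each WARN line written by
# datafeed.DataFeedRequestHandler, print "<timestamp> <feed> <status message>".
feed_rejections() {
    sed -n 's/^\([^ ]*\) WARN .*datafeed\.DataFeedRequestHandler.*"Feed=\([^"]*\)".*"\(Stroom Status [^"]*\)" *$/\1 \2 \3/p'
}
```

For example, feed_rejections < ~/stroom-proxy/instance/logs/stroom.log run on each processing node shows which node handled the test post and which feed was refused.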