
Release Notes

- 1: Unreleased

- 2: Version 7.4

- 2.1: New Features

- 2.2: Preview Features (experimental)

- 2.3: Breaking Changes

- 2.4: Upgrade Notes

- 2.5: Change Log

- 3: Version 7.3

- 3.1: New Features

- 3.2: Preview Features (experimental)

- 3.3: Breaking Changes

- 3.4: Upgrade Notes

- 3.5: Change Log

- 4: Version 7.2

- 4.1: New Features

- 4.2: Preview Features (experimental)

- 4.3: Breaking Changes

- 4.4: Upgrade Notes

- 4.5: Change Log

- 5: Version 7.1

- 6: Version 7.0

- 7: Version 6.1

- 8: Version 6.0

1 - Unreleased

2 - Version 7.4

2.1 - New Features

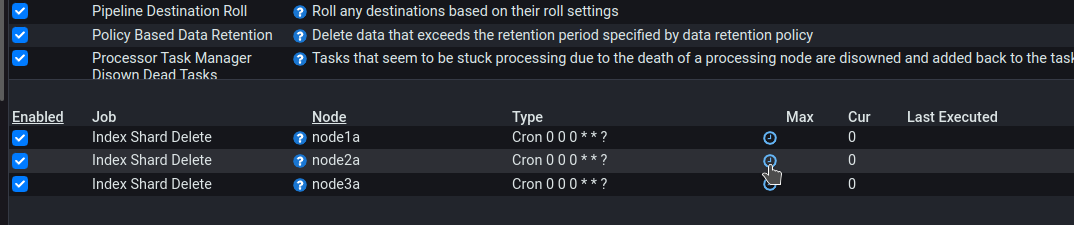

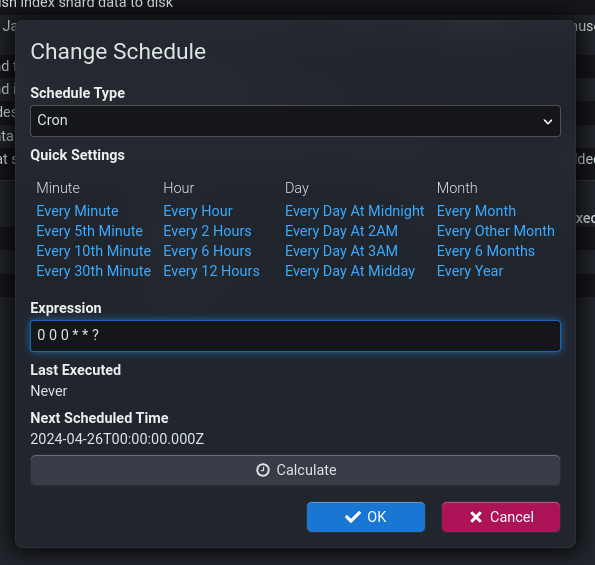

Scheduler

The scheduler in Stroom that is used to schedule all the background jobs and Analytic Rules has been changed. The existing cron/frequency scheduler was quite simplistic and only supported a limited set of the features of a cron schedule.

The cron format has changed from a three value cron expression (e.g. * * * to run every minute) to a six value one.

Existing three value cron expressions will be migrated to the new syntax when deploying Stroom v7.4.
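As a rough illustration of that migration, the following Python sketch mirrors the rule applied by the V07_04_00_005__job_node.sql script listed in the Upgrade Notes: a `0` is prepended and `* ?` is appended to any expression made of exactly three space separated values. The function name `migrate_cron` is hypothetical and is not part of Stroom; the real migration is done in SQL and only touches rows with `job_type = 1`.

```python
import re

# Matches exactly three space separated values, as in the migration
# script's pattern '^[^ ]+ [^ ]+ [^ ]+$'.
THREE_VALUE_CRON = re.compile(r"[^ ]+ [^ ]+ [^ ]+")


def migrate_cron(expression: str) -> str:
    """Convert a legacy three value expression to the six value form.

    Hypothetical helper for illustration only. Expressions that are not
    in the three value form are returned unchanged.
    """
    if THREE_VALUE_CRON.fullmatch(expression):
        # Same effect as concat('0 ', schedule, ' * ?') in the SQL script.
        return f"0 {expression} * ?"
    return expression


if __name__ == "__main__":
    for legacy in ("* * *", "0 2 *", "0 0 2 * * ?"):
        print(f"{legacy!r} -> {migrate_cron(legacy)!r}")
```

An expression that is already in the six value form does not match the pattern, so it passes through untouched.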

For full details of the new scheduler see Scheduler.

General User Interface Changes

Keyboard Shortcuts

Various new keyboard shortcuts have been added for performing actions in Stroom. See Keyboard Shortcuts for details.

- Add the keyboard shortcut Ctrl ^ + Enter ↵ to the code pane of the stepper to perform a step refresh.

- Add the keyboard shortcut Ctrl ^ + Enter ↵ to the Dashboards to execute all queries.

- Add multiple Goto type shortcuts to jump directly to a screen. See Direct Access to Screens for details.

Documentation

The Documentation entity will now default to edit mode if there is no documentation, e.g. on a newly created Documentation entity.

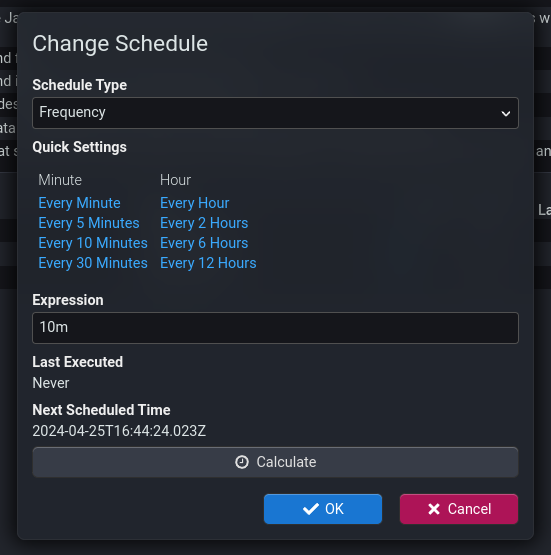

Copy As

A Copy As group has been added to the explorer tree context menu. This replaces, but still includes, the Copy Link to Clipboard menu item.

- Copy Name to Clipboard - Copies the name of the entity to the clipboard.

- Copy UUID to Clipboard - Copies the UUID of the entity to the clipboard.

- Copy Link to Clipboard - Copies a URL to the clipboard that will link directly to the selected entity.

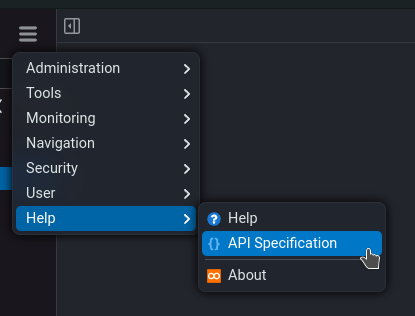

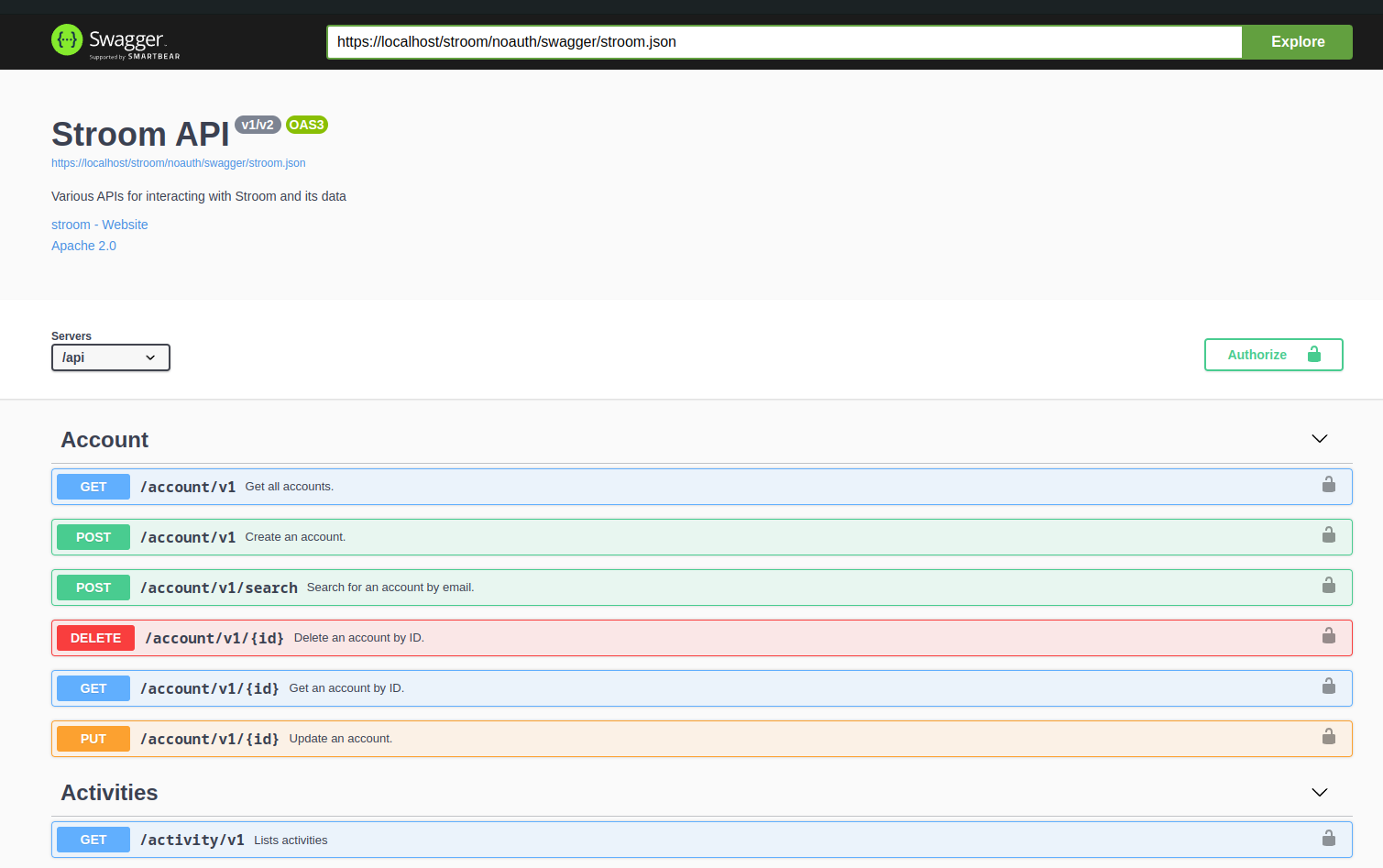

API Specification Link

Previous versions of Stroom have included an interactive user interface for navigating Stroom’s API specification. A link to this has been added to the Help menu.

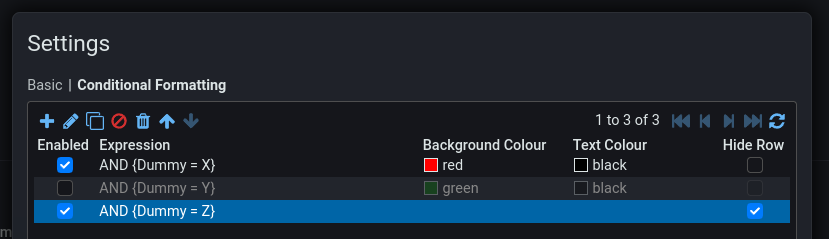

Dashboard Conditional Formatting

- Make the Enabled and Hide Row checkboxes clickable in the table without having to open the rule edit dialog.

- Dim disabled rules in the table.

- Add colour swatches for the background and text colours.

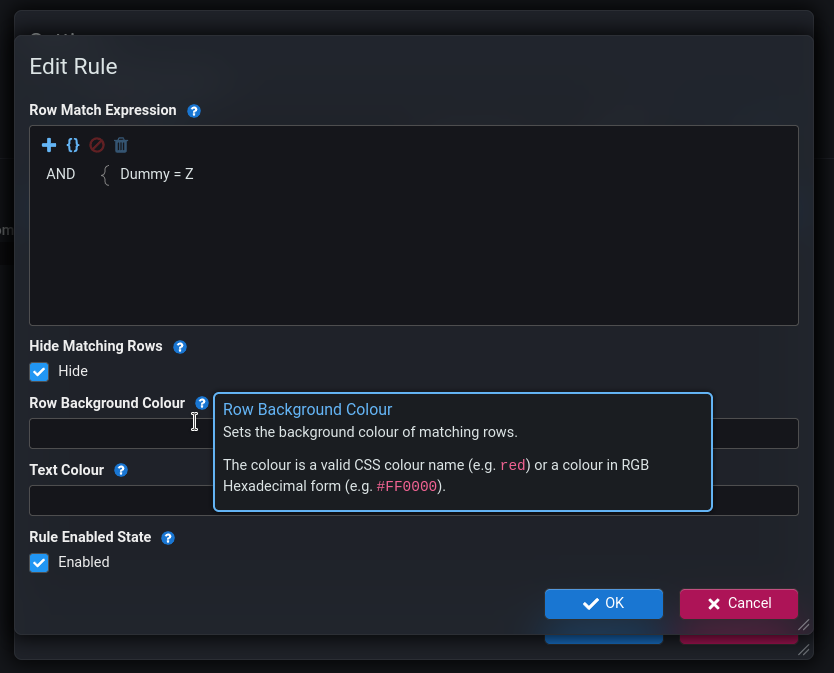

Help Icons on Dialogs

Functionality has been added to include help icons on dialogs. Clicking the icon will display a popup containing help text relating to the item the icon is next to.

Currently help icons have only been added to a few dialogs, but more will follow.

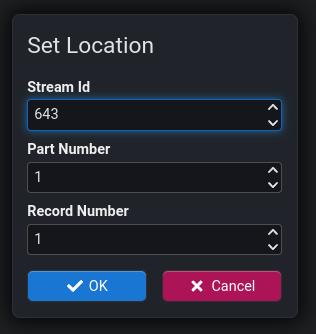

Stepping Location

The way you change the step location in the stepper has changed. Previously you could click and then directly edit each of the three numbers. Now the location label is a clickable link that opens an edit dialog.

2.2 - Preview Features (experimental)

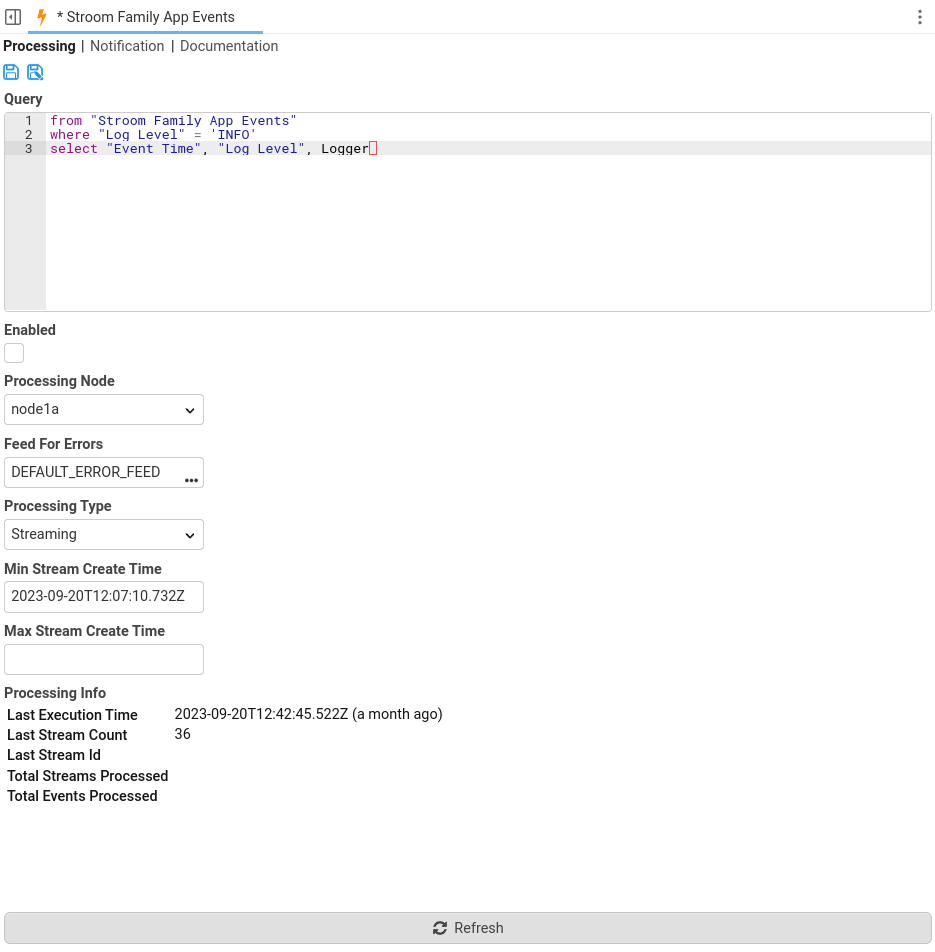

Analytic Rules

Analytic Rules were introduced in Stroom v7.2 as a preview feature. They remain an experimental preview feature but have undergone more changes/improvements.

Analytic rules are a means of writing a query to find matching data.

Processing Types

Analytic rules have three different processing types:

Streaming

A streaming rule uses a processor filter to find streams that match the filter and runs the query against the stream.

Scheduled Query

A scheduled query will run the rule’s query against a time window of data on a scheduled basis. The time window can be absolute or relative to when the scheduled query fires.

Table Builder

TODO

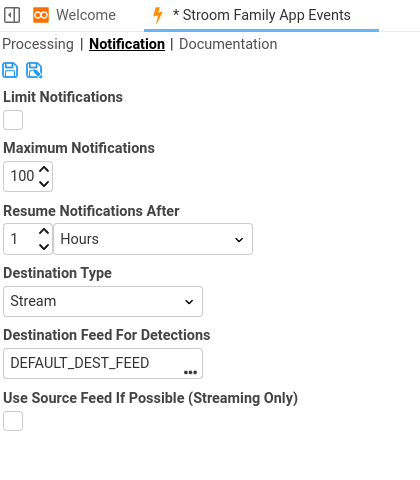

Multiple Notifications

Rules now support having multiple notification types/destinations, for example sending an email as well as a stream. Currently Email and Stream are the only notification types supported.

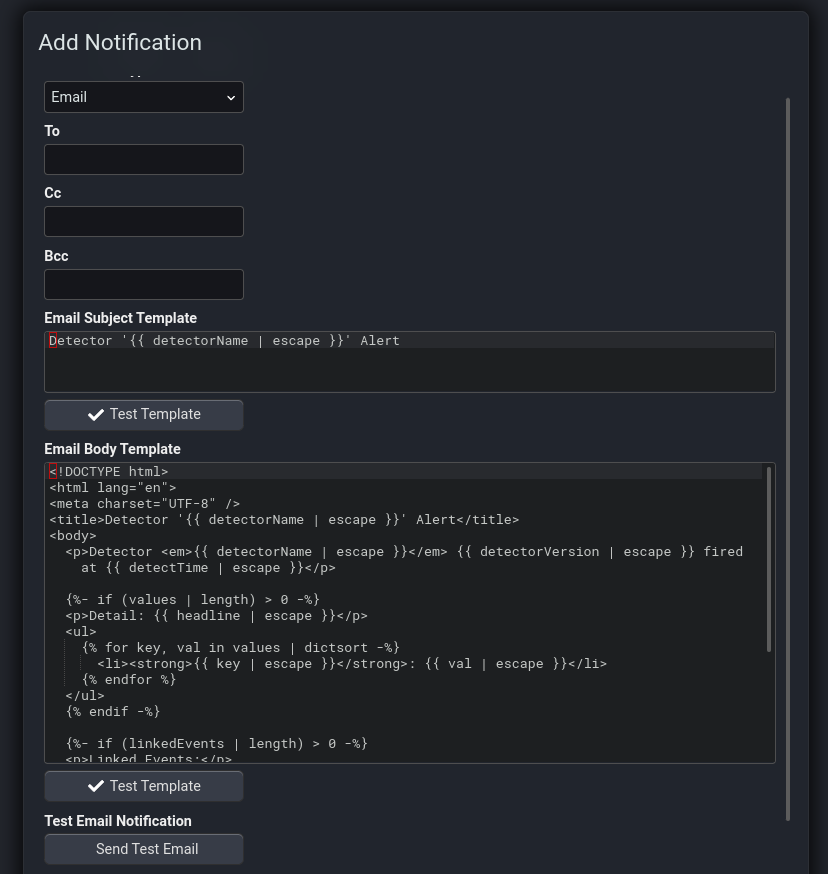

Email Templating

The email notifications have been improved to allow templating of the email subject and body. The template enables static text/HTML to be mixed with values taken from the detection.

The templating uses a template syntax called Jinja, specifically the JinJava library. The templating syntax includes support for variables, filters, condition blocks, loops, etc. For full details of the syntax see Templating, and for details of the templating context available in email subject/body templates see Rule Detections Context.

Example Template

The following is an example of a detection template producing an HTML email body that includes conditions and loops:

<!DOCTYPE html>

<html lang="en">

<meta charset="UTF-8" />

<title>Detector '{{ detectorName | escape }}' Alert</title>

<body>

<p>Detector <em>{{ detectorName | escape }}</em> {{ detectorVersion | escape }} fired at {{ detectTime | escape }}</p>

{%- if (values | length) > 0 -%}

<p>Detail: {{ headline | escape }}</p>

<ul>

{% for key, val in values | dictsort -%}

<li><strong>{{ key | escape }}</strong>: {{ val | escape }}</li>

{% endfor %}

</ul>

{% endif -%}

{%- if (linkedEvents | length) > 0 -%}

<p>Linked Events:</p>

<ul>

{% for linkedEvent in linkedEvents -%}

<li>Environment: {{ linkedEvent.stroom | escape }}, Stream ID: {{ linkedEvent.streamId | escape }}, Event ID: {{ linkedEvent.eventId | escape }}</li>

{% endfor %}

</ul>

{% endif %}

</body>

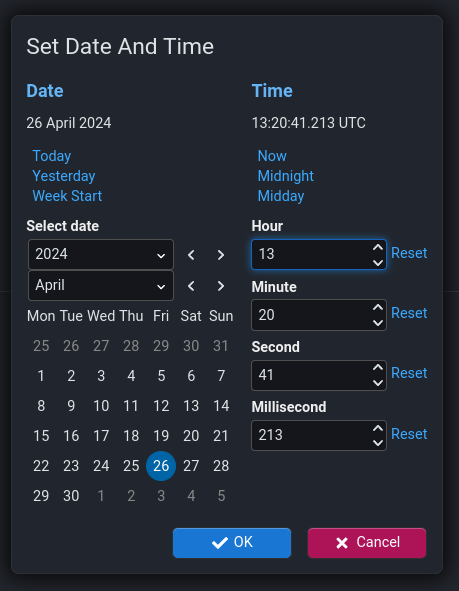

Improved Date Picker

Scheduled queries make use of a new date picker dialog which makes the process of setting a date/time value much easier for the user. This new date picker will be rolled out to other screens in Stroom in a later release.

2.3 - Breaking Changes

Warning

Please read this section carefully in case any of the changes affect you.

Analytic Rules

Any Analytic Rules created in versions 7.2 or 7.3 will need to be deleted and re-created in v7.4. Analytic Rules have been an experimental feature, so there is no migration in place for rules from previous versions of Stroom.

2.4 - Upgrade Notes

Warning

Please read this section carefully in case any of it is relevant to your Stroom instance.

Java Version

Stroom v7.4 requires Java 21. This is the same Java version as Stroom v7.3. Ensure the Stroom and Stroom-Proxy hosts are running the latest patch release of Java v21.

Database Migrations

When Stroom boots for the first time with a new version it will run any required database migrations to bring the database schema up to the correct version.

Warning

It is highly recommended to ensure you have a database backup in place before booting Stroom with a new version. This is to mitigate against any problems with the migration. It is also recommended to test the migration against a copy of your database to ensure that there are no problems when you do it for real.

On boot, Stroom will ensure that the migrations are only run by a single node in the cluster. This will be the node that reaches that point in the boot process first. All other nodes will wait until that is complete before proceeding with the boot process.

It is recommended, however, to use a single node to execute the migration.

To avoid Stroom starting up and beginning processing you can use the migrate command to just migrate the database and not fully boot Stroom.

See migrate command for more details.

Migration Filename Change

If you are deploying onto a v7.4-beta.1 instance you will need to modify the database table used to log migration history.

If the job_schema_history table contains version 07.03.00.001 then you will need to run the following SQL against the database to prevent Stroom from failing the migration on boot.

Migration Scripts

For information purposes only, the following are the database migrations that will be run when upgrading to 7.4.0 from the previous minor version.

Note, the legacy module will run first (if present) then the other modules will run in no particular order.

Module stroom-analytics

Script V07_04_00_001__execution_schedule.sql

Path: stroom-analytics/stroom-analytics-impl-db/src/main/resources/stroom/analytics/impl/db/migration/V07_04_00_001__execution_schedule.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

-- --------------------------------------------------

--

-- Create the table

--

CREATE TABLE IF NOT EXISTS execution_schedule (

id int NOT NULL AUTO_INCREMENT,

name varchar(255) NOT NULL,

enabled tinyint NOT NULL DEFAULT '0',

node_name varchar(255) NOT NULL,

schedule_type varchar(255) NOT NULL,

expression varchar(255) NOT NULL,

contiguous tinyint NOT NULL DEFAULT '0',

start_time_ms bigint DEFAULT NULL,

end_time_ms bigint DEFAULT NULL,

doc_type varchar(255) NOT NULL,

doc_uuid varchar(255) NOT NULL,

PRIMARY KEY (id)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

CREATE INDEX execution_schedule_doc_idx ON execution_schedule (doc_type, doc_uuid);

CREATE INDEX execution_schedule_enabled_idx ON execution_schedule (doc_type, doc_uuid, enabled, node_name);

CREATE TABLE IF NOT EXISTS execution_history (

id bigint(20) NOT NULL AUTO_INCREMENT,

fk_execution_schedule_id int NOT NULL,

execution_time_ms bigint NOT NULL,

effective_execution_time_ms bigint NOT NULL,

status varchar(255) NOT NULL,

message longtext,

PRIMARY KEY (id),

CONSTRAINT execution_history_execution_schedule_id FOREIGN KEY (fk_execution_schedule_id) REFERENCES execution_schedule (id)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

CREATE TABLE IF NOT EXISTS execution_tracker (

fk_execution_schedule_id int NOT NULL,

actual_execution_time_ms bigint NOT NULL,

last_effective_execution_time_ms bigint DEFAULT NULL,

next_effective_execution_time_ms bigint NOT NULL,

PRIMARY KEY (fk_execution_schedule_id),

CONSTRAINT execution_tracker_execution_schedule_id FOREIGN KEY (fk_execution_schedule_id) REFERENCES execution_schedule (id)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

-- --------------------------------------------------

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set shiftwidth=2 tabstop=2 expandtab:

Module stroom-job

Script V07_04_00_005__job_node.sql

Path: stroom-job/stroom-job-impl-db/src/main/resources/stroom/job/impl/db/migration/V07_04_00_005__job_node.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

update job_node set schedule = concat('0 ', schedule, ' * ?') where job_type = 1 and regexp_like(schedule, '^[^ ]+ [^ ]+ [^ ]+$');

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set tabstop=4 shiftwidth=4 expandtab:

2.5 - Change Log

For a detailed list of all the changes in v7.4 see: v7.4 CHANGELOG

3 - Version 7.3

3.1 - New Features

User Interface

-

Add a Copy button to the Processors sub-tab on the Pipeline screen. This will create a duplicate of an existing filter.

-

Add a Line Wrapping toggle button to the Server Tasks screen. This will enable/disable line wrapping on the Name and Info cells.

-

Allow pane resizing in dashboards without needing to be in design mode.

-

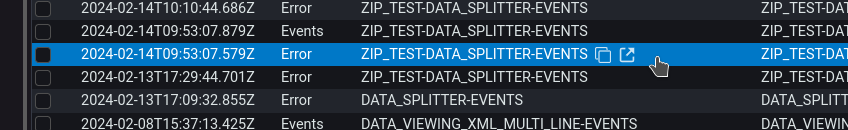

Add Copy and Jump to hover buttons to the Stream Browser screen to copy the value of the cell or (if it is a document) jump to that document.

-

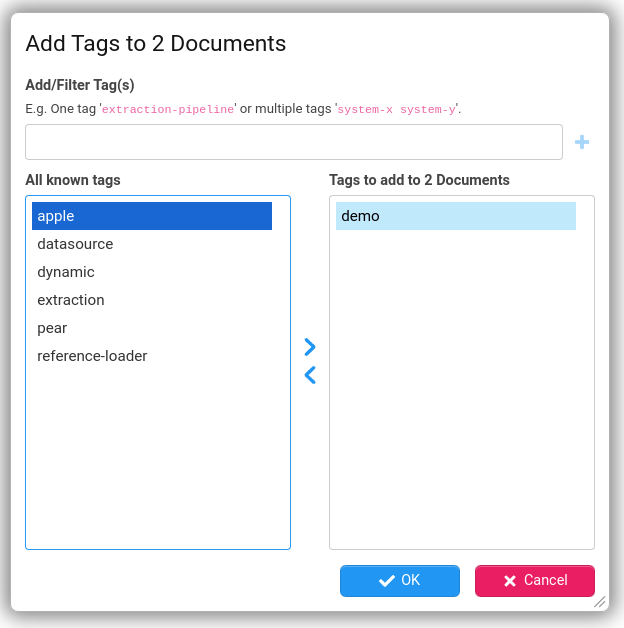

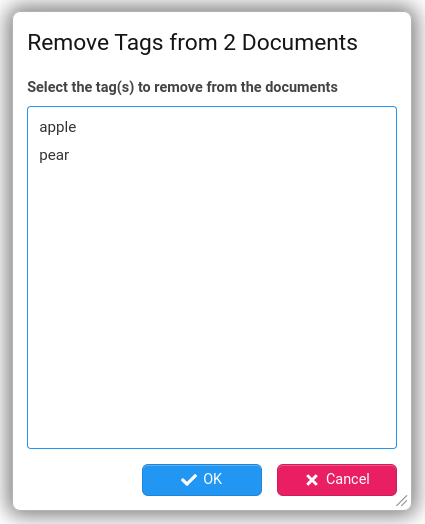

Tagging of individual explorer nodes was introduced in v7.2. v7.3 however adds support for adding/removing tags to/from multiple explorer tree nodes via the explorer context menu.

Explorer Tree

- Additional buttons on the top of the explorer tree pane.

- Add Expand All and Collapse All buttons to the explorer pane to open or close all items in the tree respectively.

- Add a Locate Current Item button to the explorer pane to locate the currently open document in the explorer tree.

Finding Things

Find

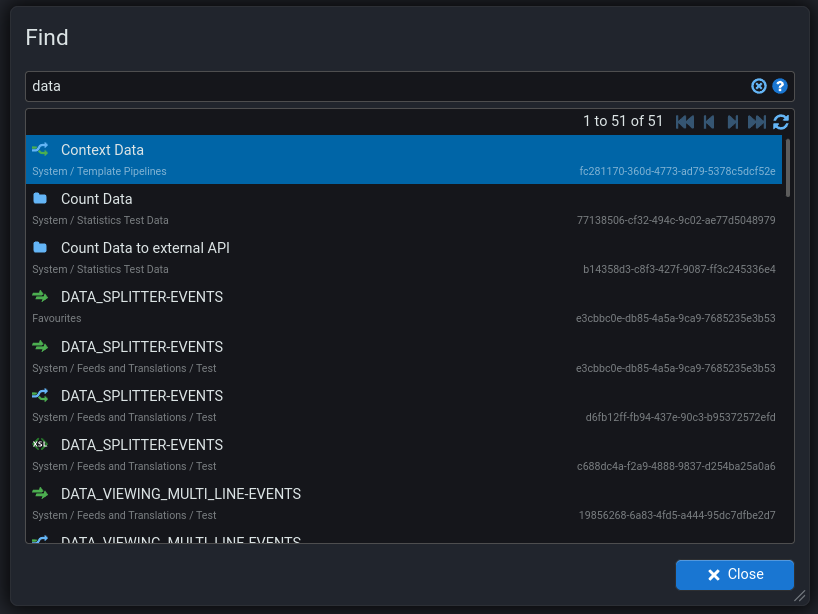

New screen for finding documents by name. Accessed using Shift ⇧ + Alt + f.

Find In Content

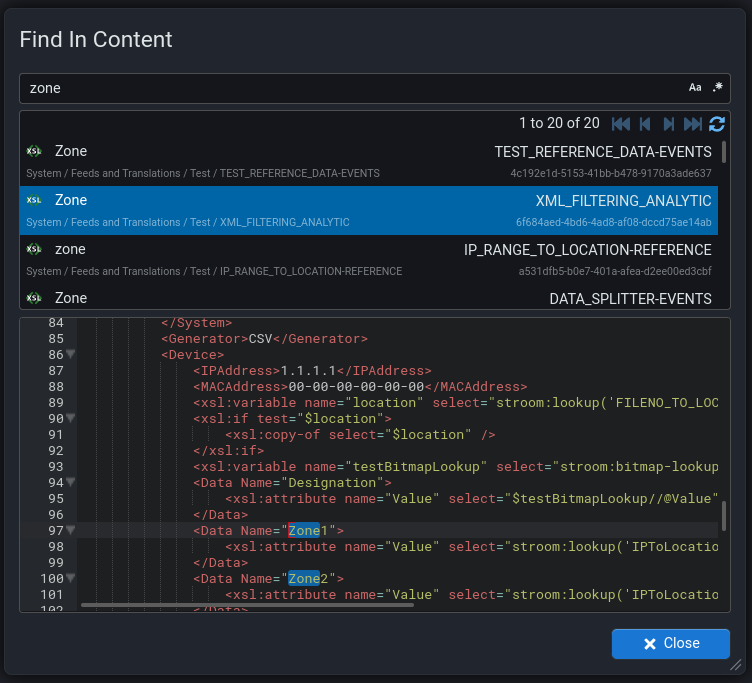

Improvements to the Find In Content screen so that it now shows the content of the document and highlights the matched terms. Now accessible using Ctrl ^ + Shift ⇧ + f.

Recent Items

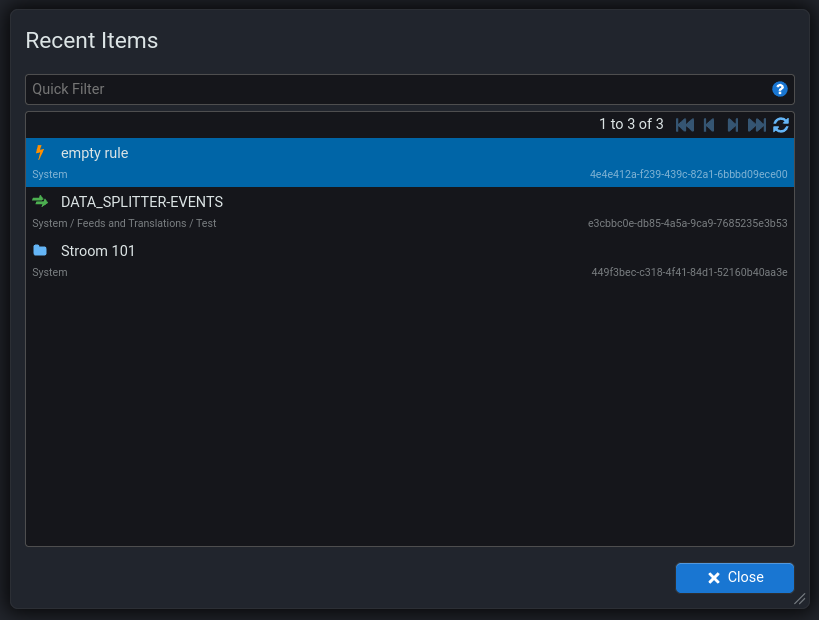

New screen for finding recently used documents. Accessed using Ctrl ^ + e.

Editor Snippets

Snippets are a way of quickly adding pre-defined text into the text editors in Stroom. Snippets have been available in previous versions of Stroom; however, there have been various additions to the library of available snippets, which makes creating/editing content a lot easier.

- Add snippets for Data Splitter. For the list of available snippets see here.

- Add snippets for XMLFragmentParser. For the list of available snippets see here.

- Add new XSLT snippets for `<xsl:element>` and `<xsl:message>`. For the list of available snippets see here.

- Add snippets for StroomQL. For the list of available snippets see here.

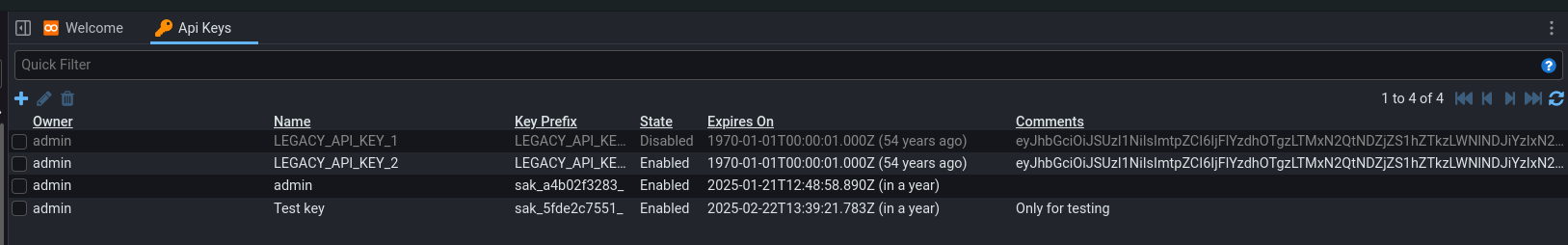

API Keys

API Keys are a means for client systems to authenticate with Stroom. In v7.2 of Stroom, the ability to use API Keys was removed if you were using an external identity provider as client systems could get tokens from the IDP themselves. In v7.3 the ability to use API Keys with an external IDP has returned as we felt it offered client systems a choice and removed the complexity of dealing with the IDP.

The API Keys screen has undergone various improvements:

- Look/feel brought in line with other screens in Stroom.

- Ability to temporarily enable/disable API Keys. A key that is disabled in Stroom cannot be authenticated against.

- Deletion of an API Key prevents any future authentication against that key.

- Named API Keys to indicate purpose, e.g. naming a key with the client system’s name.

- Comments for keys to add additional context/information for a key.

- API Key prefix to aid with identifying an API Key.

- The full API key string is no longer stored in Stroom and cannot be viewed after creation.

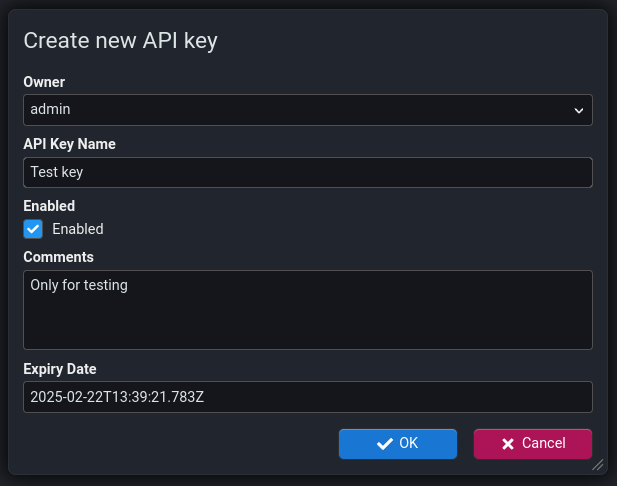

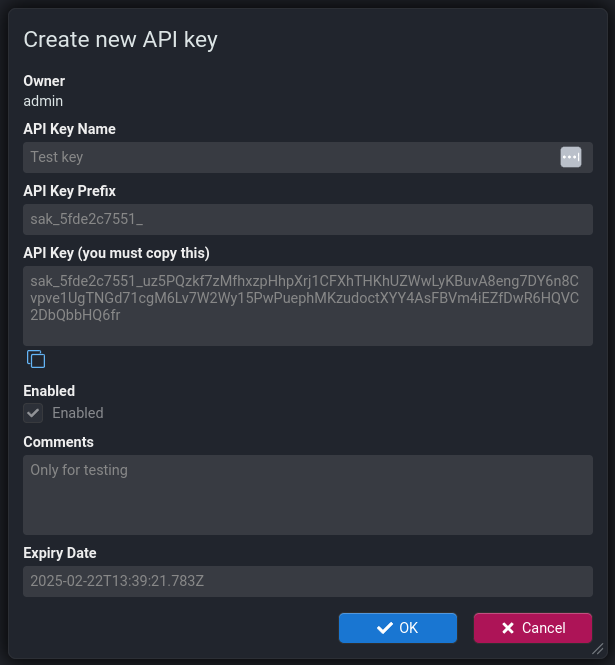

Key Creation

The screens for creating a new API Key are as follows:

Warning

Note that the actual API Key is ONLY visible on the second dialog. Once that dialog is closed Stroom cannot show you the API Key string as it does not store it. This is for security reasons. You must copy the created key and give it to the recipient at this point.

Key Format

We have also made changes to the format of the API Key itself. In v7.2, the API Key was an OAuth token so had data baked into it. In v7.3, the API Key is essentially just a dumb random string, like a very long and secure password. The following is an example of a new API Key:

sak_e1e78f6ee0_6CyT2Mpj2seVJzYtAXsqWwKJzyUqkYqTsammVerbJpvimtn4BpE9M5L2Sx6oeG5prhyzcA7U6fyV5EkwTxoXJPfDWLneQAq16i5P75qdQNbqJ99Wi7xzaDhryMdhVZhs

The structure of the key is as follows:

- `sak` - The key type, Stroom API Key.

- `_` - Separator.

- Truncated SHA2-256 hash (truncated to 10 chars) of the whole API Key.

- `_` - Separator.

- 128 crypto random chars in the Base58 character set. This character set ensures no awkward characters that might need escaping and removes some ambiguous characters (`0OIl`).

Features of the new format are:

- Fixed length of 143 chars with a fixed prefix (`sak_`) that makes it easier to search for API Keys in config, e.g. to stop API Keys being leaked into online public repositories and the like.

- Unique prefix (e.g. `sak_e1e78f6ee0_`) to help link an API Key being used by a client with the API Key record stored in Stroom. This part of the key is stored and displayed in Stroom.

- The hash part acts as a checksum for the key to ensure it is correct. The following CyberChef recipe shows how you can validate the hash part of a key.
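The structure described above lends itself to a simple shape check, for example when scanning configuration for leaked keys. The following Python sketch is illustrative only and is not Stroom code: the names `split_api_key` and `BASE58_CHARS` are hypothetical, the alphabet is assumed to be the alphanumerics minus the ambiguous characters `0OIl`, and the hash part is only checked for length because how the checksum is computed is not specified here.

```python
import re

# Assumption: the Base58 style alphabet is the alphanumerics without
# the ambiguous characters 0, O, I and l.
BASE58_CHARS = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"

# sak + separator + 10 char hash part + separator + 128 random chars.
API_KEY_PATTERN = re.compile(
    r"sak_(?P<hash>[0-9A-Za-z]{10})_(?P<random>[" + BASE58_CHARS + r"]{128})"
)

# The example key shown earlier in this section.
EXAMPLE_KEY = (
    "sak_e1e78f6ee0_6CyT2Mpj2seVJzYtAXsqWwKJzyUqkYqTsammVerbJpvimtn4BpE9M5L2"
    "Sx6oeG5prhyzcA7U6fyV5EkwTxoXJPfDWLneQAq16i5P75qdQNbqJ99Wi7xzaDhryMdhVZhs"
)


def split_api_key(api_key: str):
    """Return (prefix, hash_part, random_part), or None if the shape is wrong.

    This checks the structure only. It does not verify the checksum.
    """
    match = API_KEY_PATTERN.fullmatch(api_key)
    if match is None:
        return None
    hash_part = match.group("hash")
    return f"sak_{hash_part}_", hash_part, match.group("random")


if __name__ == "__main__":
    prefix, hash_part, random_part = split_api_key(EXAMPLE_KEY)
    print(prefix, len(EXAMPLE_KEY), len(random_part))
```

The returned prefix is the part of the key that Stroom stores and displays, so it can be used to find the matching API Key record.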

Analytics

- Add distributed processing for streaming analytics. This means streaming analytics can now run on all nodes in the cluster rather than just one.

- Add multiple recipients to rule notification emails. Previously only one recipient could be added.

Search

- Add support for Lucene 9.8.0 and for multiple versions of Lucene. Stroom now stores the Lucene version used to create an index shard against the shard so that the correct Lucene version is used when reading/writing from that shard. This will allow Stroom to harness new Lucene features in future while maintaining backwards compatibility with older versions.

- Add support for `field in` and `field in dictionary` to StroomQL.

Processing

-

Improve the display of processor filter state. The columns

Tracker MsandTracker %have been removed and theStatuscolumn has been improved better reflect the state of the filter tracker. -

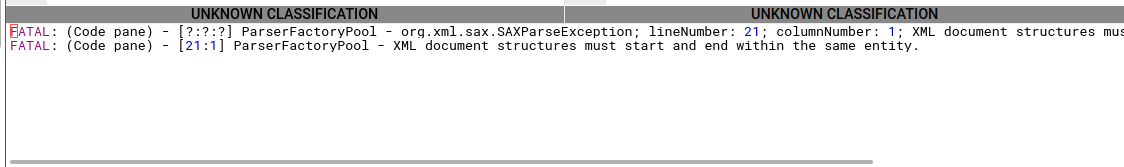

Stroom now supports the XSLT standard element

<xsl:message>. This element will be handled as follows:<!-- Log `some message` at severity `FATAL` and terminate processing of that stream part immediately. Note that `terminate="yes"` will trump any severity that is also set. --> <xsl:message terminate="yes">some message</xsl:message> <!-- Log `some message` at severity `ERROR`. --> <xsl:message>some message</xsl:message> <!-- Log `some message` at severity `FATAL`. --> <xsl:message><fatal>some message</fatal></xsl:message> <!-- Log `some message` at severity `ERROR`. --> <xsl:message><error>some message</error></xsl:message> <!-- Log `some message` at severity `WARNING`. --> <xsl:message><warn>some message</warn></xsl:message> <!-- Log `some message` at severity `INFO`. --> <xsl:message><info>some message</info></xsl:message> <!-- Log $msg at severity `ERROR`. --> <xsl:message><xsl:value-of select="$msg"></xsl:message>The namespace of the severity element (e.g.

<info>is ignored. -

Add the following pipeline element properties to allow control of logged warnings for removal/replacement respectively.

- Add property

warnOnRemovalto InvalidCharFilterReader . - Add property

warnOnReplacementto InvalidXMLCharFilterReader .

- Add property
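The `<xsl:message>` severity rules listed above can be summarised in a few lines of code. The following Python sketch only models the rules as described in these notes and is not how Stroom implements them. The function name `message_severity` is hypothetical, and the input is assumed to be a standalone `<xsl:message>` element with the `xsl` namespace declared on it.

```python
import xml.etree.ElementTree as ET

# Severity element local name -> logged severity, as listed above.
SEVERITIES = {
    "fatal": "FATAL",
    "error": "ERROR",
    "warn": "WARNING",
    "info": "INFO",
}


def message_severity(message_xml: str) -> str:
    """Return the severity at which an <xsl:message> would be logged."""
    message = ET.fromstring(message_xml)
    # terminate="yes" trumps any severity element that is also set.
    if message.get("terminate") == "yes":
        return "FATAL"
    for child in message:
        # ElementTree tags look like '{namespace}local'. The namespace of
        # the severity element is ignored, so keep only the local name.
        local_name = child.tag.rsplit("}", 1)[-1]
        if local_name in SEVERITIES:
            return SEVERITIES[local_name]
    # Plain text or any other content is logged as an error.
    return "ERROR"


if __name__ == "__main__":
    ns = 'xmlns:xsl="http://www.w3.org/1999/XSL/Transform"'
    print(message_severity(f"<xsl:message {ns}><warn>careful</warn></xsl:message>"))
```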

XSLT Functions

- Add XSLT function `stroom:hex-to-string(hex, charsetName)`.

- Add XSLT function `stroom:cidr-to-numeric-ip-range`.

- Add XSLT function `stroom:ip-in-cidr` for testing whether an IP address is within the specified CIDR range.

For details of the new functions, see XSLT Functions.
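For readers unfamiliar with the concepts behind these functions, the following Python sketch shows the underlying ideas using the standard library. It is not Stroom's implementation and the function names are hypothetical. In particular, the exact output format of `stroom:cidr-to-numeric-ip-range` is not described in these notes, so the numeric range here (first and last address of the block as integers) is an assumption.

```python
import ipaddress


def ip_in_cidr(ip: str, cidr: str) -> bool:
    """Test whether an IP address falls within a CIDR range."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr, strict=False)


def cidr_to_numeric_range(cidr: str):
    """Return the first and last address of a CIDR block as integers.

    Assumption: the range is inclusive of the network and broadcast
    addresses.
    """
    network = ipaddress.ip_network(cidr, strict=False)
    return int(network.network_address), int(network.broadcast_address)


def hex_to_string(hex_text: str, charset_name: str) -> str:
    """Decode a string of hex digits into text using the named charset."""
    return bytes.fromhex(hex_text).decode(charset_name)


if __name__ == "__main__":
    print(ip_in_cidr("192.168.1.10", "192.168.1.0/24"))
    print(cidr_to_numeric_range("192.168.1.0/24"))
    print(hex_to_string("74657374", "UTF-8"))
```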

API

- Add the un-authenticated API method `/api/authproxy/v1/noauth/fetchClientCredsToken` to effectively proxy for the IDP's token endpoint to obtain an access token using the client credentials flow. The request contains the client credentials and looks like `{ "clientId": "a-client", "clientSecret": "BR9m.....KNQO" }`. The response media type is `text/plain` and contains the access token.
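A client might call this method along the following lines. This Python sketch rests on several assumptions that are not stated in these notes: the host name is a placeholder, the request is assumed to be a POST with a JSON body, and the function names are hypothetical. Check the API specification of your Stroom instance before relying on any of it.

```python
import json
import urllib.request

# Placeholder host; replace with the address of your own Stroom instance.
STROOM_BASE_URL = "https://stroom.example.com"
TOKEN_PATH = "/api/authproxy/v1/noauth/fetchClientCredsToken"


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) a request for an access token."""
    body = json.dumps({"clientId": client_id, "clientSecret": client_secret})
    return urllib.request.Request(
        STROOM_BASE_URL + TOKEN_PATH,
        data=body.encode("utf-8"),
        # Assumption: the endpoint accepts a JSON body sent with POST.
        headers={"Content-Type": "application/json", "Accept": "text/plain"},
        method="POST",
    )


def fetch_token(request: urllib.request.Request) -> str:
    """Send the request and return the plain text access token."""
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8").strip()


if __name__ == "__main__":
    request = build_token_request("a-client", "not-a-real-secret")
    print(request.get_method(), request.full_url)
```

Building the request separately from sending it keeps the credentials handling easy to inspect without needing a running instance.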

3.2 - Preview Features (experimental)

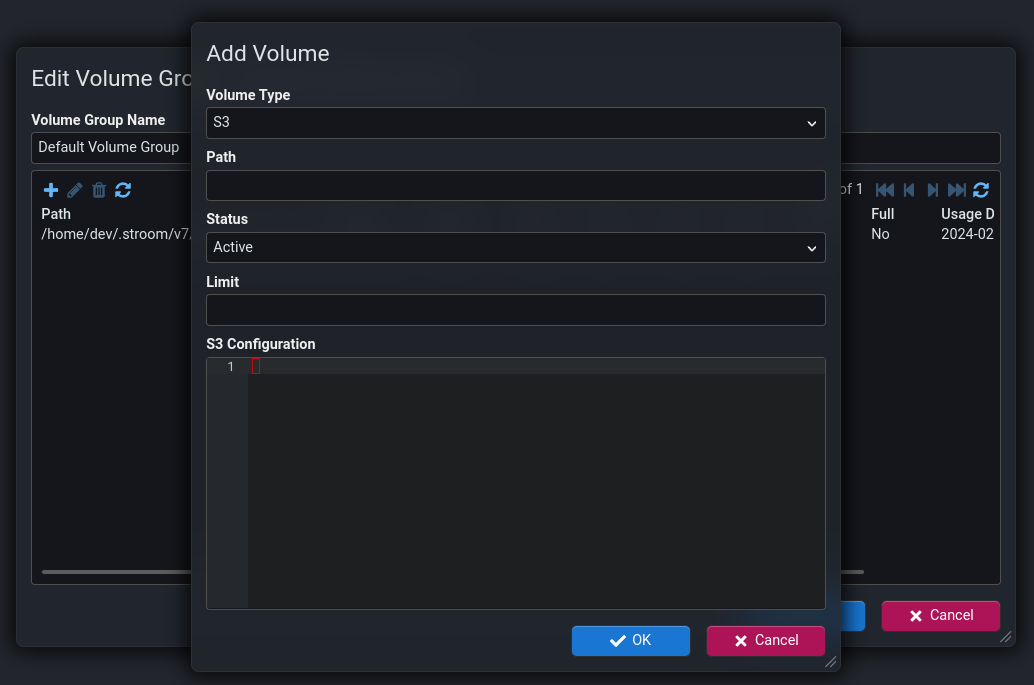

S3 Storage

Integration with S3 storage has been added to allow Stroom to read/write to/from S3 storage, e.g. S3 on AWS.

A data volume can now be created as either Standard or S3.

If configured as S3 you need to supply the S3 configuration data.

This is an experimental feature at this stage and may be subject to change. The way Stroom reads and writes data has not been optimised for S3 so performance at scale is currently unknown.

3.3 - Breaking Changes

Warning

Please read this section carefully in case any of the changes affect you.

- The Hessian based feed status RPC service `/remoting/remotefeedservice.rpc` has been removed as it uses the legacy `javax.servlet` dependency, which is incompatible with the `jakarta.servlet` dependency now in use in Stroom. This was used by Stroom-Proxy up to v5.

- The StroomQL keyword combination `vis as` has been replaced with `show`.

3.4 - Upgrade Notes

Warning

Please read this section carefully in case any of it is relevant to your Stroom instance.

Java Version

Stroom v7.3 requires Java v21. Previous versions of Stroom used Java v17 or lower. You will need to upgrade Java on the Stroom and Stroom-Proxy hosts to the latest patch release of Java v21.

API Keys

With the change to the format of API Keys (see here), it is recommended to migrate legacy API Keys over to the new format. There is no hard requirement to do this as legacy keys will continue to work as is, however the new keys are easier to work with and Stroom has more control over the new format keys, making them more secure. You are encouraged to create new keys for client systems and ask them to change the keys over.

Legacy Key Migration

The new API Keys are now stored in a new table api_key.

Legacy keys will be migrated into this table and given a key name and prefix like LEGACY_API_KEY_N, where N is a unique number.

As the whole API Key was previously visible in v7.2, the API Key string is migrated into the Comments field so that it remains visible in the UI.

Database Migrations

When Stroom boots for the first time with a new version it will run any required database migrations to bring the database schema up to the correct version.

Warning

It is highly recommended to ensure you have a database backup in place before booting Stroom with a new version. This is to mitigate against any problems with the migration. It is also recommended to test the migration against a copy of your database to ensure that there are no problems when you do it for real.

On boot, Stroom will ensure that the migrations are only run by a single node in the cluster. This will be the node that reaches that point in the boot process first. All other nodes will wait until that is complete before proceeding with the boot process.

It is recommended, however, to use a single node to execute the migration.

To avoid Stroom starting up and beginning processing you can use the migrate command to just migrate the database and not fully boot Stroom.

See migrate command for more details.

Migration Scripts

For information purposes only, the following are the database migrations that will be run when upgrading to 7.3.0 from the previous minor version.

Note, the legacy module will run first (if present) then the other modules will run in no particular order.

Module stroom-data

Script V07_03_00_001__fs_volume_s3.sql

Path: stroom-data/stroom-data-store-impl-fs-db/src/main/resources/stroom/data/store/impl/fs/db/migration/V07_03_00_001__fs_volume_s3.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

CREATE TABLE IF NOT EXISTS fs_volume_group (

id int NOT NULL AUTO_INCREMENT,

version int NOT NULL,

create_time_ms bigint NOT NULL,

create_user varchar(255) NOT NULL,

update_time_ms bigint NOT NULL,

update_user varchar(255) NOT NULL,

name varchar(255) NOT NULL,

-- 'name' needs to be unique because it is used as a reference

UNIQUE (name),

PRIMARY KEY (id)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

DROP PROCEDURE IF EXISTS V07_03_00_001;

DELIMITER $$

CREATE PROCEDURE V07_03_00_001 ()

BEGIN

DECLARE object_count integer;

-- Add volume type

SELECT COUNT(1)

INTO object_count

FROM information_schema.columns

WHERE table_schema = database()

AND table_name = 'fs_volume'

AND column_name = 'volume_type';

IF object_count = 0 THEN

ALTER TABLE `fs_volume` ADD COLUMN `volume_type` int NOT NULL;

ALTER TABLE `fs_volume` ADD COLUMN `data` longblob;

UPDATE `fs_volume` set `volume_type` = 0;

END IF;

-- Add default group

SELECT COUNT(*)

INTO object_count

FROM fs_volume_group

WHERE name = "Default";

IF object_count = 0 THEN

INSERT INTO fs_volume_group (

version,

create_time_ms,

create_user,

update_time_ms,

update_user,

name)

VALUES (

1,

UNIX_TIMESTAMP() * 1000,

"Flyway migration",

UNIX_TIMESTAMP() * 1000,

"Flyway migration",

"Default Volume Group");

END IF;

-- Add volume group

SELECT COUNT(1)

INTO object_count

FROM information_schema.columns

WHERE table_schema = database()

AND table_name = 'fs_volume'

AND column_name = 'fk_fs_volume_group_id';

IF object_count = 0 THEN

ALTER TABLE `fs_volume`

ADD COLUMN `fk_fs_volume_group_id` int NOT NULL;

UPDATE `fs_volume` SET `fk_fs_volume_group_id` = (SELECT `id` FROM `fs_volume_group` WHERE `name` = "Default Volume Group");

ALTER TABLE fs_volume

ADD CONSTRAINT fs_volume_group_fk_fs_volume_group_id

FOREIGN KEY (fk_fs_volume_group_id)

REFERENCES fs_volume_group (id);

END IF;

END $$

DELIMITER ;

CALL V07_03_00_001;

DROP PROCEDURE IF EXISTS V07_03_00_001;

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set shiftwidth=4 tabstop=4 expandtab:

Module stroom-index

Script V07_03_00_001__index_field.sql

Path: stroom-index/stroom-index-impl-db/src/main/resources/stroom/index/impl/db/migration/V07_03_00_001__index_field.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

DROP PROCEDURE IF EXISTS drop_field_source;

DELIMITER //

CREATE PROCEDURE drop_field_source ()

BEGIN

IF EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'field_info') THEN

DROP TABLE field_info;

END IF;

IF EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'field_source') THEN

DROP TABLE field_source;

END IF;

IF EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.TABLES

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'field_schema_history') THEN

DROP TABLE field_schema_history;

END IF;

END//

DELIMITER ;

CALL drop_field_source();

DROP PROCEDURE drop_field_source;

--

-- Create the field_source table

--

CREATE TABLE IF NOT EXISTS `index_field_source` (

`id` int NOT NULL AUTO_INCREMENT,

`type` varchar(255) NOT NULL,

`uuid` varchar(255) NOT NULL,

`name` varchar(255) NOT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `index_field_source_type_uuid` (`type`, `uuid`)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

--

-- Create the index_field table

--

CREATE TABLE IF NOT EXISTS `index_field` (

`id` bigint NOT NULL AUTO_INCREMENT,

`fk_index_field_source_id` int NOT NULL,

`type` tinyint NOT NULL,

`name` varchar(255) NOT NULL,

`analyzer` varchar(255) NOT NULL,

`indexed` tinyint NOT NULL DEFAULT '0',

`stored` tinyint NOT NULL DEFAULT '0',

`term_positions` tinyint NOT NULL DEFAULT '0',

`case_sensitive` tinyint NOT NULL DEFAULT '0',

PRIMARY KEY (`id`),

UNIQUE KEY `index_field_source_id_name` (`fk_index_field_source_id`, `name`),

CONSTRAINT `index_field_fk_index_field_source_id` FOREIGN KEY (`fk_index_field_source_id`) REFERENCES `index_field_source` (`id`)

) ENGINE=InnoDB DEFAULT CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci;

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set tabstop=4 shiftwidth=4 expandtab:

Script V07_03_00_005__index_field_change_pk.sql

Path: stroom-index/stroom-index-impl-db/src/main/resources/stroom/index/impl/db/migration/V07_03_00_005__index_field_change_pk.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

DELIMITER $$

DROP PROCEDURE IF EXISTS modify_field_source$$

-- The surrogate PK results in fields from different indexes all being mixed together

-- in the PK index, which causes deadlocks in batch upserts due to gap locks.

-- Change the PK to be (fk_index_field_source_id, name) which should keep the fields

-- together.

CREATE PROCEDURE modify_field_source ()

BEGIN

-- Remove existing PK

IF EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.columns

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'index_field'

AND COLUMN_NAME = 'id') THEN

ALTER TABLE index_field DROP COLUMN id;

END IF;

-- Add the new PK

IF NOT EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.table_constraints

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'index_field'

AND CONSTRAINT_NAME = 'PRIMARY') THEN

ALTER TABLE index_field ADD PRIMARY KEY (fk_index_field_source_id, name);

END IF;

-- Remove existing index that is now served by PK

IF EXISTS (

SELECT NULL

FROM INFORMATION_SCHEMA.table_constraints

WHERE TABLE_SCHEMA = database()

AND TABLE_NAME = 'index_field'

AND CONSTRAINT_NAME = 'index_field_source_id_name') THEN

ALTER TABLE index_field DROP INDEX index_field_source_id_name;

END IF;

END $$

DELIMITER ;

CALL modify_field_source();

DROP PROCEDURE IF EXISTS modify_field_source;

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set tabstop=4 shiftwidth=4 expandtab:

Module stroom-processor

Script V07_03_00_001__processor.sql

Path: stroom-processor/stroom-processor-impl-db/src/main/resources/stroom/processor/impl/db/migration/V07_03_00_001__processor.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

DROP PROCEDURE IF EXISTS modify_processor;

DELIMITER //

CREATE PROCEDURE modify_processor ()

BEGIN

DECLARE object_count integer;

SELECT COUNT(1)

INTO object_count

FROM information_schema.table_constraints

WHERE table_schema = database()

AND table_name = 'processor'

AND constraint_name = 'processor_pipeline_uuid';

IF object_count = 1 THEN

ALTER TABLE processor DROP INDEX processor_pipeline_uuid;

END IF;

SELECT COUNT(1)

INTO object_count

FROM information_schema.table_constraints

WHERE table_schema = database()

AND table_name = 'processor'

AND constraint_name = 'processor_task_type_pipeline_uuid';

IF object_count = 0 THEN

CREATE UNIQUE INDEX processor_task_type_pipeline_uuid ON processor (task_type, pipeline_uuid);

END IF;

END//

DELIMITER ;

CALL modify_processor();

DROP PROCEDURE modify_processor;

SET SQL_NOTES=@OLD_SQL_NOTES;

-- vim: set shiftwidth=4 tabstop=4 expandtab:

Script V07_03_00_005__processor_filter.sql

Path: stroom-processor/stroom-processor-impl-db/src/main/resources/stroom/processor/impl/db/migration/V07_03_00_005__processor_filter.sql

-- ------------------------------------------------------------------------

-- Copyright 2020 Crown Copyright

--

-- Licensed under the Apache License, Version 2.0 (the "License");

-- you may not use this file except in compliance with the License.

-- You may obtain a copy of the License at

--

-- http://www.apache.org/licenses/LICENSE-2.0

--

-- Unless required by applicable law or agreed to in writing, software

-- distributed under the License is distributed on an "AS IS" BASIS,

-- WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

-- See the License for the specific language governing permissions and

-- limitations under the License.

-- ------------------------------------------------------------------------

-- Stop NOTE level warnings about objects (not)? existing

SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0;

-- --------------------------------------------------

DELIMITER $$

-- --------------------------------------------------

DROP PROCEDURE IF EXISTS processor_run_sql_v1 $$

-- DO NOT change this without reading the header!

CREATE PROCEDURE processor_run_sql_v1 (

p_sql_stmt varchar(1000)

)

BEGIN

SET @sqlstmt = p_sql_stmt;

SELECT CONCAT('Running sql: ', @sqlstmt);

PREPARE stmt FROM @sqlstmt;

EXECUTE stmt;

DEALLOCATE PREPARE stmt;

END $$

-- --------------------------------------------------

DROP PROCEDURE IF EXISTS processor_add_column_v1$$

-- DO NOT change this without reading the header!

CREATE PROCEDURE processor_add_column_v1 (

p_table_name varchar(64),

p_column_name varchar(64),

p_column_type_info varchar(64) -- e.g. 'varchar(255) default NULL'

)

BEGIN

DECLARE object_count integer;

SELECT COUNT(1)

INTO object_count

FROM information_schema.columns

WHERE table_schema = database()

AND table_name = p_table_name

AND column_name = p_column_name;

IF object_count = 0 THEN

CALL processor_run_sql_v1(CONCAT(

'alter table ', database(), '.', p_table_name,

' add column ', p_column_name, ' ', p_column_type_info));

ELSE

SELECT CONCAT(

'Column ',

p_column_name,

' already exists on table ',

database(),

'.',

p_table_name);

END IF;

END $$

-- idempotent

CALL processor_add_column_v1(

'processor_filter',

'max_processing_tasks',

'int NOT NULL DEFAULT 0');

-- vim: set shiftwidth=4 tabstop=4 expandtab:
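The processor_add_column_v1 procedure above uses a check-then-alter pattern so that the migration can be re-run safely. As a rough illustration only (this is not Stroom code), the same idea can be sketched in Python against an in-memory SQLite database. The function name add_column_if_missing and the use of SQLite instead of MySQL are assumptions made purely for the example.

```python
import sqlite3


def add_column_if_missing(conn, table, column, type_info):
    """Add a column only if it is absent, so the call is safe to repeat.

    Hypothetical helper: identifiers are interpolated directly, so it must
    only be used with trusted, hard-coded table and column names.
    """
    # PRAGMA table_info returns one row per column; index 1 is the column name.
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    if column in existing:
        return False  # nothing to do, the column is already there
    conn.execute(f"ALTER TABLE {table} ADD COLUMN {column} {type_info}")
    return True


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE processor_filter (id INTEGER PRIMARY KEY)")
first = add_column_if_missing(
    conn, "processor_filter", "max_processing_tasks", "int NOT NULL DEFAULT 0")
second = add_column_if_missing(
    conn, "processor_filter", "max_processing_tasks", "int NOT NULL DEFAULT 0")
```

The first call alters the table and the second call is a no-op, which is the property the migration script relies on.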

3.5 - Change Log

For a detailed list of all the changes in v7.3 see: v7.3 CHANGELOG

4 - Version 7.2

Stroom v7.1 was not widely adopted so this section may describe features or changes that were part of the v7.1 release.

4.1 - New Features

Look and Feel

New User Interface Design

The user interface has had a bit of a re-design to give it a more modern look and to make it conform to accessibility standards.

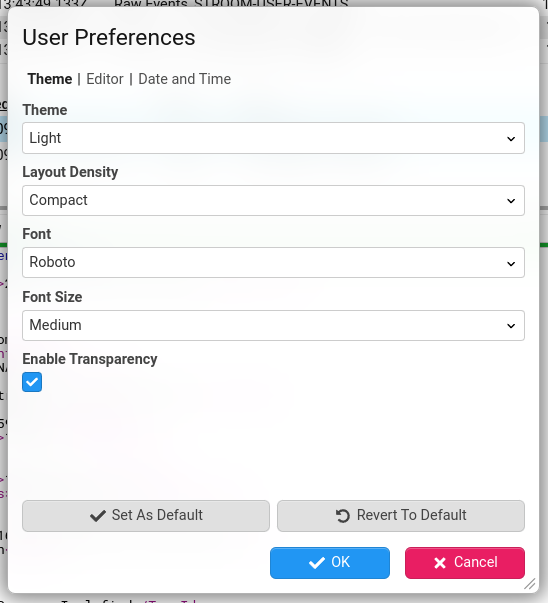

User Preferences

Now you can customise Stroom with your own personal preferences. From the main menu, select:

You can also change the following:

- Layout Density - This controls the layout spacing to fit more or less user interface elements in the available space.

- Font - Change the font used in Stroom.

- Font Size - Change the font size used in Stroom.

- Transparency - Enables partial transparency of dialog windows. Entirely cosmetic.

Theme

Choose between the traditional light theme and a new dark theme with light text on a dark background.

Editor Preferences

The Ace text editor used within Stroom is used for editing things like XSLTs and viewing stream data. It can now be personalised with the following options:

- Theme - The colour theme for the editor. The theme options will be applicable to the main user interface theme, i.e. light/dark editor themes. The theme affects the colours used for the syntax highlight content.

- Key Bindings - Allows you to set the editor to use Vim key bindings for more powerful text editing. If you don’t know what Vim is then it is best to stick to Standard. If you would like to learn how to use Vim, install vimtutor. Note: The Ace editor does not fully emulate Vim; not all Vim key bindings work and there is limited command mode support.

- Live Auto Completion - Set this to On if you want the editor code completion to make suggestions as you type. When set to Off you need to press Ctrl ^ + Space ␣ to show the suggestion dialog.

Date and Time

You can now change the format used for displaying the date and time in the user interface. You can also set the time zone used for displaying the date and time in the user interface.

Note

Stroom works in UTC time internally. Changing the time zone only affects display of dates/times, not how data is stored or the dates/times in events.
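To illustrate the note above, here is a minimal Python sketch (not Stroom code) showing that a display time zone changes only the rendered text, not the underlying instant. The display helper and the fixed-offset zones are assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone

# A single stored instant, held in UTC.
stored = datetime(2024, 1, 15, 12, 30, tzinfo=timezone.utc)


def display(instant, offset_hours):
    """Render an instant in a display zone with a fixed UTC offset.

    Hypothetical helper for illustration; real zones also have DST rules.
    """
    zone = timezone(timedelta(hours=offset_hours))
    return instant.astimezone(zone).strftime("%Y-%m-%dT%H:%M:%S%z")


utc_text = display(stored, 0)
other_text = display(stored, 10)
# The rendered strings differ, but both describe the same stored instant.
same_instant = stored.astimezone(timezone(timedelta(hours=10))) == stored
```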

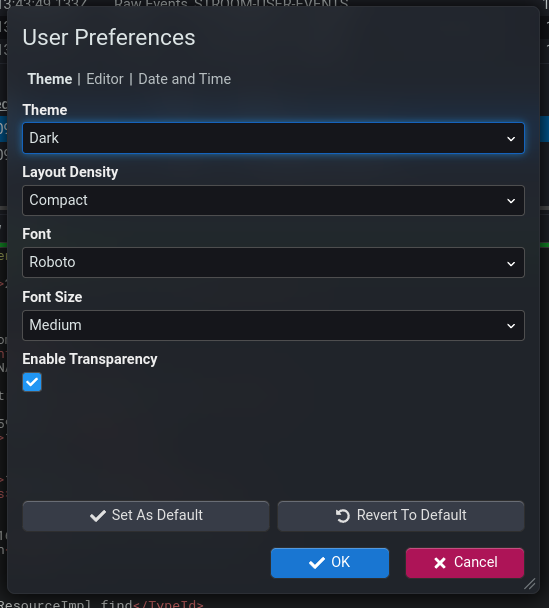

Dashboard Changes

Design Mode

A Design Mode has been introduced to Dashboards and is toggled using the button. When a Dashboard is in design mode, the following functionality is enabled:

- Adding components to the Dashboard.

- Removing components from the Dashboard.

- Moving Dashboard components within panes, to new panes or to existing panes.

- Changing the constraints of the Dashboard.

On creation of a new Dashboard, Design Mode will be on so the user has full functionality. On opening an existing Dashboard, Design Mode will be off. This is because typically, Dashboards are viewed more than they are modified.

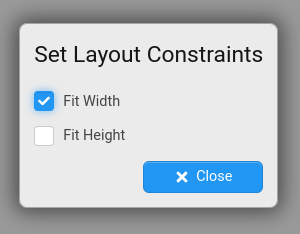

Visual Constraints

Now it is possible to control the horizontal and vertical constraints of a Dashboard. In Stroom 7.0, a dashboard would always scale to fit the user’s screen. Sometimes it is desirable for the dashboard canvas area to be larger than the screen so that you have to scroll to see it all. For example you may have a dashboard with a large number of component panes and rather than squashing them all into the available space you want to be able to scroll vertically in order to see them all.

It is now possible to change the horizontal and/or vertical constraints to fit the available width/height or to be fixed by clicking the button.

The edges of the canvas can be moved to shrink/grow it.

Explorer Filter Matches

Filtering in the explorer has been changed to highlight the filter matches and to search in folders that themselves are a match. In Stroom v7.0 folders that matched were not expanded. Match highlighting makes it clearer what items have matched.

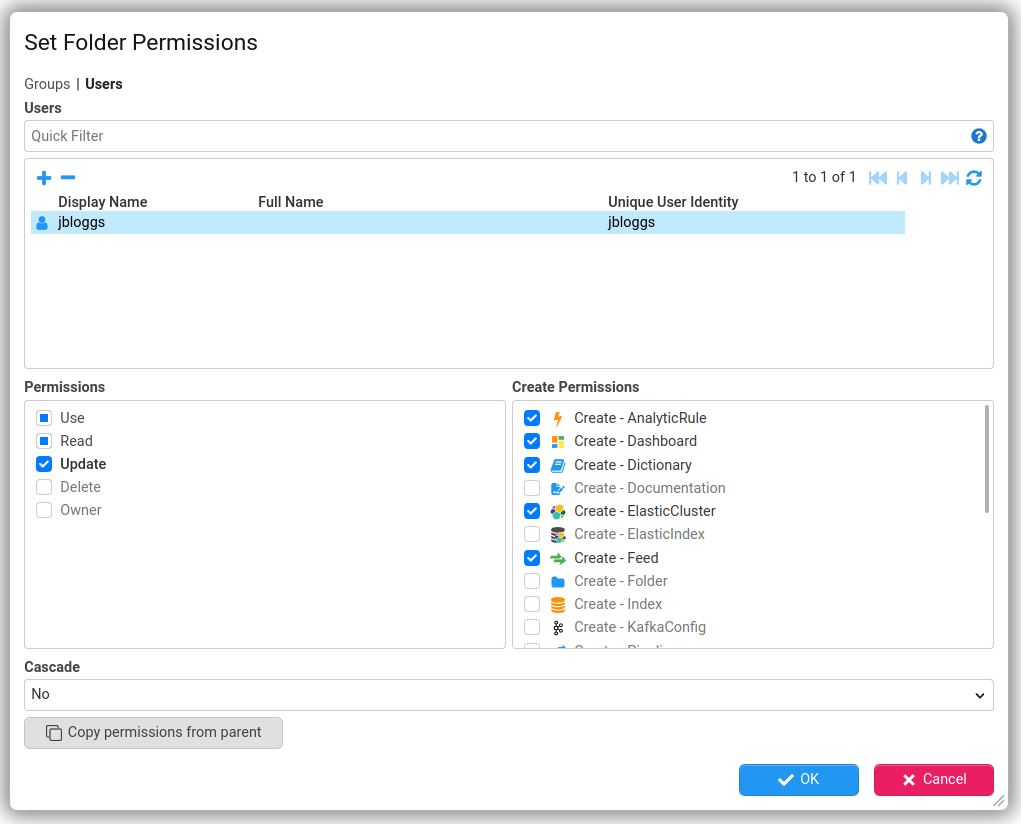

Document Permissions Screen

The document and folder permissions screens have been re-designed with a better layout and item highlighting to make it clearer which permissions have been selected.

Editor Completion snippets

The number of available editor completion snippets has increased. For a list of the available completion snippets see the Completion Snippet Reference.

Note

Completion snippets are an evolving feature so if you have any requests for generic completion snippets then raise an issue on GitHub and we will consider adding them.

Partitioned Reference Data Stores

In Stroom v7.0 reference data is loaded using a reference loader pipeline and the key/value pairs are stored in a single disk backed reference data store on each Stroom node for fast lookup. This single store approach has led to high contention and performance problems when purge operations are running against it at the same time or multiple reference Feeds are being loaded at the same time.

In Stroom v7.2 the reference data key/value pairs are now stored in multiple reference data stores on each node, with one store per Feed. This reduces contention as reference data for one Feed can be loading while a purge operation is running on the store for another Feed or reference data for multiple Feeds can be loaded concurrently. Performance is still limited by the file system that the stores are hosted on.

All reference data stores are stored in the directory defined by stroom.pipeline.referenceData.lmdb.localDir.

See Also

See the upgrade notes for the reference data stores.

Improved OAuth2.0/OpenID Connect Support

The support for OpenID Connect (OIDC) authentication has been improved in v7.2. Stroom can be integrated with AWS Cognito, MS Azure AD, KeyCloak and other OIDC Identity Providers (IDPs).

Data receipt in Stroom and Stroom-Proxy can now enforce OIDC token authentication as well as certificate authentication. The data receipt authentication is configured via the properties:

- stroom.receive.authenticationRequired

- stroom.receive.certificateAuthenticationEnabled

- stroom.receive.tokenAuthenticationEnabled

Stroom and Stroom-Proxy have also been changed to use OIDC tokens for API endpoints and inter-node communications. This currently requires the OIDC IDP to support the client credentials flow.

Stroom can still be used with its own internal IDP if you do not have an external IDP available.

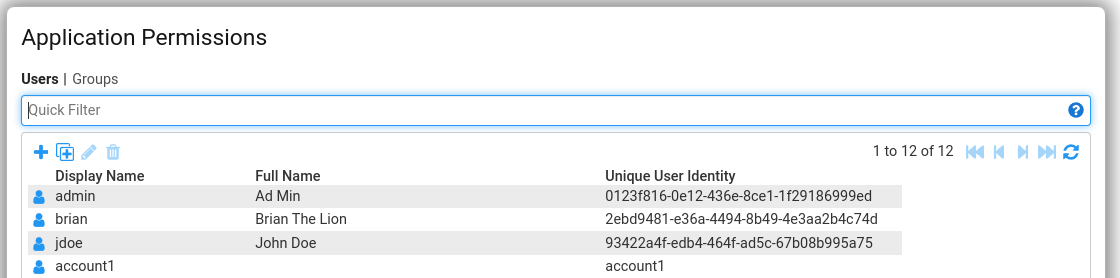

User Naming Changes

The changes to add integration with external OAuth 2.0/OpenID Connect identity providers have required some changes to the way users in Stroom are identified.

Previously in Stroom a user would have a unique username that would be set when creating the account in Stroom.

This would typically be a human friendly name like jbloggs or similar.

It would be used in all the user/permission management screens to identify the user, for functions like current-user(), for simple audit columns in the database (create_user and update_user) and for the audit events stroom produces.

With the integration to external identity providers this has had to change a little.

Typically in OpenID Connect IDPs the unique identity of a principal (user) is a fairly unfriendly UUID.

The user will likely also have a more human friendly identity (sometimes called the preferred_username) that may be something like jblogs or jblogs@somedomain.x.

As per the OpenID Connect specification, this friendly identity may not be unique within the IDP, so Stroom has to assume this also.

In reality this identity is typically unique on the IDP though.

The IDP will often also have a full name for the user, e.g. Joe Bloggs.

Stroom now stores and can display all of these identities.

- Display Name - This is the (potentially non-unique) preferred user name held by the IDP, e.g. jbloggs or jblogs@somedomain.x.

- Full Name - The user’s full name, e.g. Joe Bloggs, if known by the IDP.

- Unique User Identity - The unique identity of the user on the IDP, which may look like ca650638-b52c-45af-948c-3f34aeeb6f86.

In most screens, Stroom will display the Display Name. This will also be used for any audit purposes. The permissions screen shows all three identities so an admin can be sure which user they are dealing with and be able to correlate it with one on the IDP.
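The distinction between the three identities can be sketched as a small data model. This is an illustrative Python sketch only; the UserIdentity class, its field names and the sample users are hypothetical and do not reflect Stroom's internal types. It shows why only the unique identity is safe to use as a key, since a display name may match more than one user.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UserIdentity:
    unique_id: str                   # unique on the IDP, e.g. a UUID
    display_name: str                # preferred user name, may not be unique
    full_name: Optional[str] = None  # only present if known by the IDP


def find_by_display_name(users, display_name):
    """Return every user with the given display name (possibly several)."""
    return [u for u in users if u.display_name == display_name]


users = [
    UserIdentity("ca650638-b52c-45af-948c-3f34aeeb6f86", "jbloggs", "Joe Bloggs"),
    UserIdentity("11111111-2222-3333-4444-555555555555", "jbloggs", "Jane Bloggs"),
    UserIdentity("99999999-8888-7777-6666-555555555555", "asmith"),
]

# Keying on the unique identity never collides; the display name can.
by_unique_id = {u.unique_id: u for u in users}
matches = find_by_display_name(users, "jbloggs")
```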

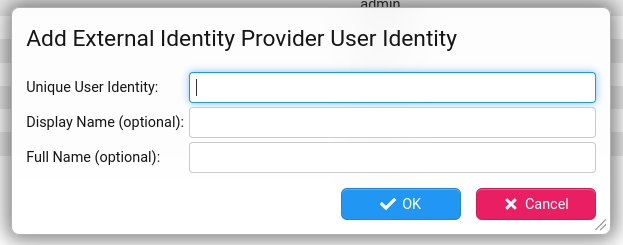

User Creation

When using an external IDP, a user visiting Stroom for the first time will result in the creation of a Stroom User record for them. This Stroom User will have no permissions associated with it. To improve the experience for a new user it is preferable for the Stroom administrator to pre-create the Stroom User account in Stroom with the necessary permissions.

This can be done from the Application Permissions screen accessed from the Main menu.

You can create a single Stroom User by clicking the button.

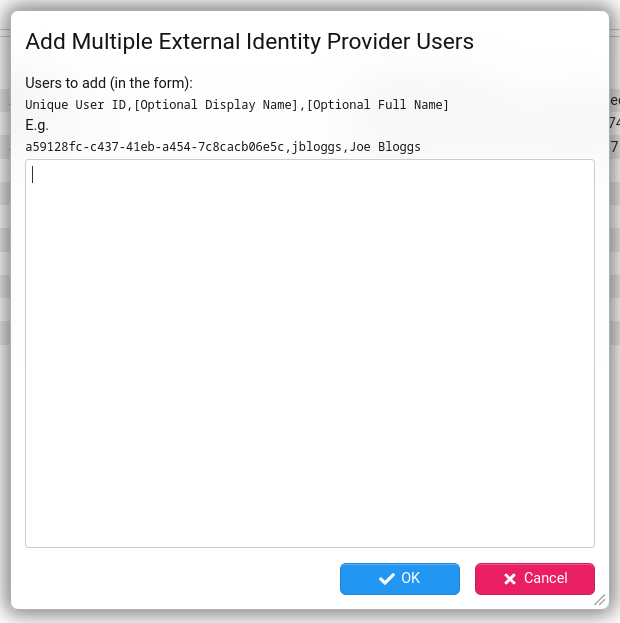

Or you can create multiple Stroom Users by clicking the button.

In both cases the Unique User ID is mandatory, and this must be obtained from the IDP. The Display Name and Full Name are optional, as these will be obtained automatically from the IDP by Stroom on login. It can be useful to populate them initially to make it easier for the administrator to see who is who in the list of users.

Once the user(s) are created, the appropriate permissions/groups can be assigned to them so that when they log in for the first time they will be able to see the required content and be able to use Stroom.

New Document types

The following new types of document can be created and managed in the explorer tree.

Documentation

It is now possible to create a Documentation entity in the explorer tree. This is designed to hold any text or documentation that the user chooses to write in Markdown format. These can be useful for providing documentation within a folder in the tree to collectively describe all the items in that folder, or to provide a useful README type document. It is not possible to add documentation to a folder entity itself, so this is a useful substitute.

See Also

See Documenting Content for details on the Markdown syntax.

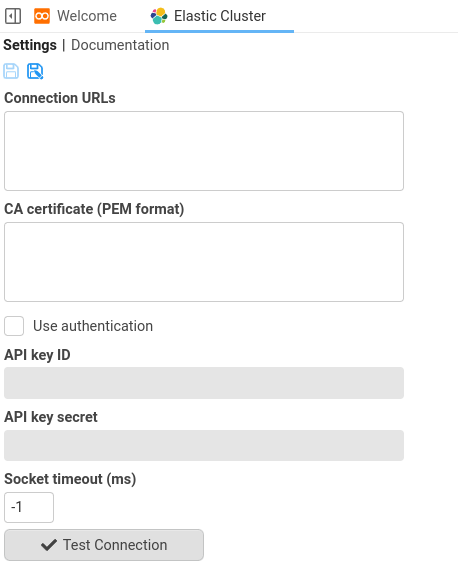

Elastic Cluster

Elastic Cluster provides a means to define a connection to an Elasticsearch Cluster. You would create one of these documents for each Elasticsearch cluster that you want to connect to. It defines the location and authentication details for connecting to an elastic cluster.

Thanks to Pete K for his help adding the new Elasticsearch integration features.

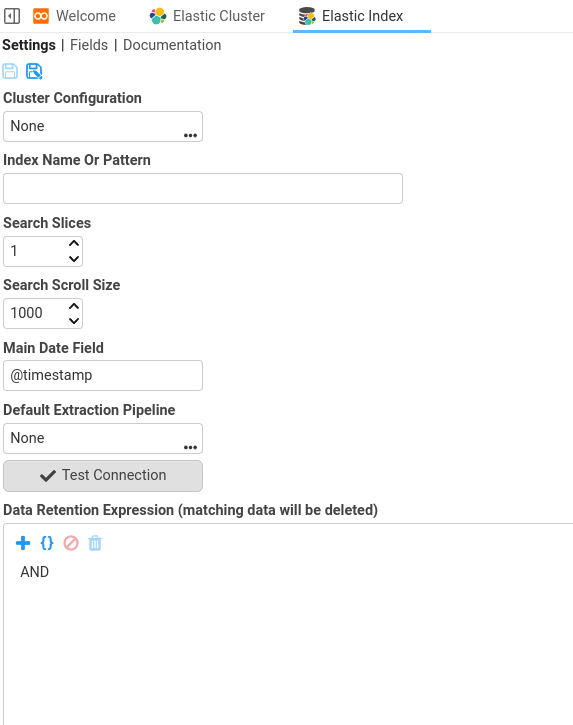

Elastic Index

An Elastic Index document is a data source for searching one or more indexes on Elasticsearch.


New Searchables

A Searchable is one of the data sources that appear at the top level of the tree pickers but not in the explorer tree.

Analytics

Adds the ability to query data from Table Builder type Analytic Rules.

New Pipeline Elements

DynamicIndexingFilter

This filter element is used by Views and Analytic Rules. Unlike IndexingFilter, where you have to specify all the fields in the index up front for them to be visible to the user in a Dashboard, DynamicIndexingFilter allows fields to be dynamically created in the XSLT based on the event being indexed. These dynamic fields are then ‘discovered’ after the event has been added to the index.

DynamicSearchResultOutputFilter

This filter element is used by Views and Analytic Rules. Unlike SearchResultOutputFilter, this element can discover the fields found in the extracted event when the extraction pipeline creates fields that are not present in the index. These discovered fields are then available for the user to pick from in the Dashboard/Query.

ElasticIndexingFilter

ElasticIndexingFilter is used to pass fields from an event to an Elasticsearch cluster to index.


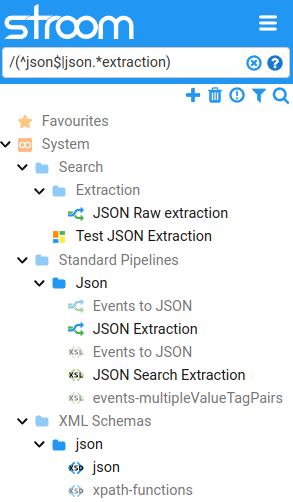

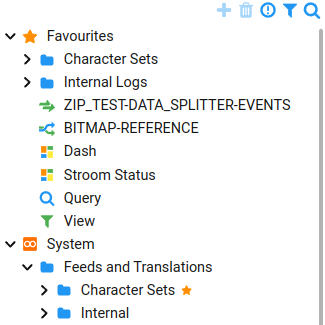

Explorer Tree

Various enhancements have been made to the explorer tree.

Favourites

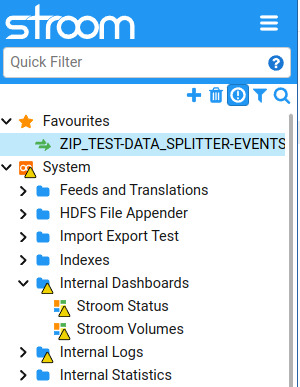

Users now have the ability to mark explorer tree entities as favourites. Favourites are user specific so each user can define their own favourites. This feature is useful for quick access to commonly used entities. Any entity or Folder at any level in the explorer tree can be set as a favourite. Favourites are also visible in the various entity pickers used in Stroom, e.g. Feed pickers.

An entity/folder can be added or removed from the favourites section using the context menu items:

An entity that is a favourite is marked with an icon in the main tree.

A change to a child item of a folder marked as a favourite will be reflected in both the main tree and the favourites section. All items marked as a favourite will appear as a top level item underneath the Favourites root, even if they have an ancestor folder that is also a favourite.

Thanks to Pete K for adding this new feature.

Document Tagging

You can now add tags to entities or folders in the explorer tree. Tags provide an additional means of searching for entities or folders. It allows entities/folders that reside in different folders to be associated together in one or more ways.

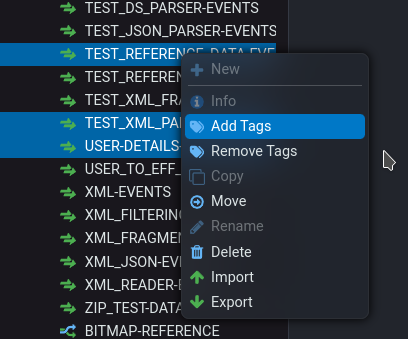

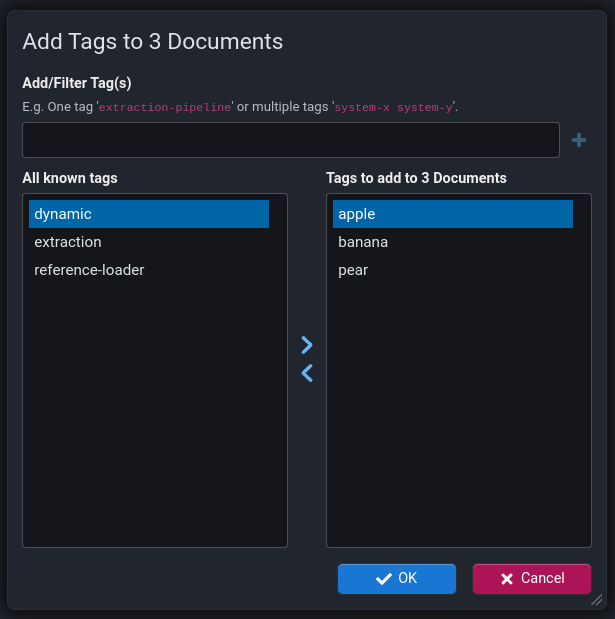

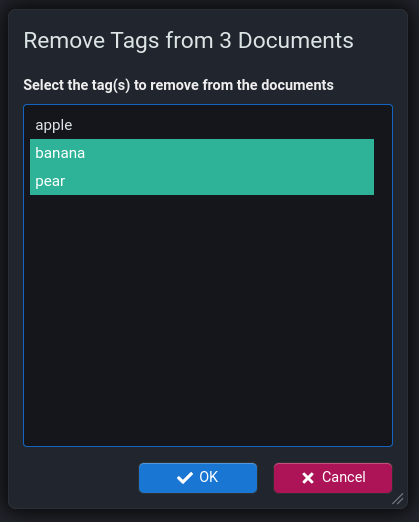

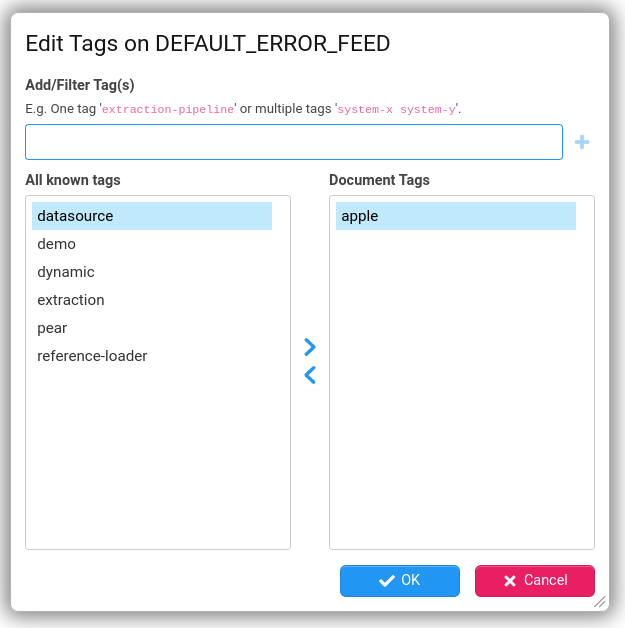

The tags on an entity/folder can be managed from the explorer tree context menu item:

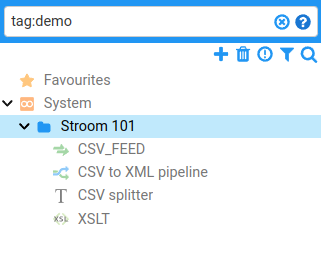

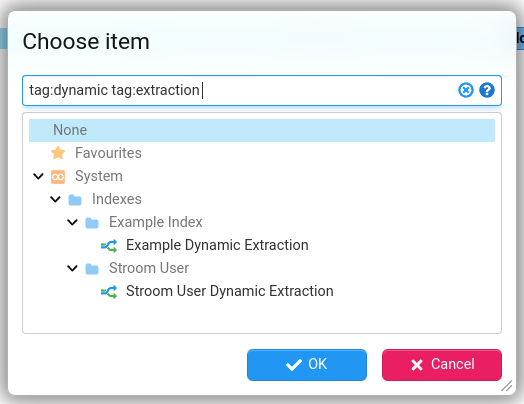

The explorer tree can be filtered by tag using the field prefix tag:, e.g. tag:extraction.

If multiple entities/folders are selected in the explorer tree then the following menu items are available:

Pre-populated Tag Filters

Stroom comes pre-configured with some default tags.

The property that sets these is stroom.explorer.suggestedTags.

The defaults for this property are dynamic, extraction and reference-loader.

These pre-configured tags are also used in some of the tree pickers in stroom to provide extra filtering of entities in the picker.

For example when selecting a Pipeline on an XSLT Filter the filter on the tree picker to select the pipeline will be pre-populated with tag:reference-loader so only reference loader pipelines are included.

The following properties control the tags used to pre-populate tree picker filters:

- stroom.ui.query.dashboardPipelineSelectorIncludedTags

- stroom.ui.query.viewPipelineSelectorIncludedTags

- stroom.ui.referencePipelineSelectorIncludedTags

See Also

See the migration task Tagging Entities for details on how to set up these pre-configured tags.

Copy Link to Clipboard

It is now possible to easily copy a direct link to a Document from the explorer tree. Direct links are useful if, for example, you want to share a link to a particular Stroom dashboard.

To create a direct link, right click on the document you want a link for in the explorer tree and select:

You can then paste the link into a browser to jump directly to that document (authenticating as required).

Dependencies

It is now possible to jump to the Dependencies screen to see the dependencies or dependants of a particular document. In the explorer tree right click on a document and select one of:

This will open the Dependencies screen with a filter pre-populated to show all documents that are dependencies of the selected document.

This will open the Dependencies screen with a filter pre-populated to show all documents that depend on the selected document.

Broken Dependency Alerts

It is now possible to see alert icons in the explorer tree to highlight documents that have broken dependencies. The user can hover over these icons to display more information about the broken dependency. The explorer tree will show the alert icon against every document with a broken dependency and against all of its ancestor folders.

A broken dependency means a document (e.g. an XSLT) has a dependency on another document (e.g. a reference loader Pipeline) but that document does not exist. Broken dependencies can occur when a user deletes a document that other documents depend on, or by a partial import of content.

This feature is disabled by default as it can have a significant effect on performance of the explorer tree with large trees.

To enable this feature, set the property stroom.explorer.dependencyWarningsEnabled to true.

Once enabled at the system level by the property, the display of alerts in the tree can be enabled/disabled by the user using the Toggle Alerts button.
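The rule for where alert icons appear (the document itself plus every ancestor folder) can be sketched as follows. This is a minimal Python illustration under assumed data structures, not Stroom's implementation: the docs dictionary, its "path" and "depends_on" keys and the sample identifiers are all hypothetical.

```python
def alert_paths(docs):
    """Return the tree paths that should carry an alert icon.

    docs maps a document id to a dict with:
      "path"       - tuple of names from the root down to the document
      "depends_on" - set of document ids this document refers to
    A dependency is treated as broken when its id is not present in docs.
    """
    alerts = set()
    for doc in docs.values():
        if any(dep not in docs for dep in doc["depends_on"]):
            path = doc["path"]
            # Mark each ancestor folder and finally the document itself.
            for depth in range(1, len(path) + 1):
                alerts.add(path[:depth])
    return alerts


docs = {
    "xslt-1": {"path": ("Content", "Web", "My XSLT"),
               "depends_on": {"pipe-missing"}},
    "pipe-1": {"path": ("Content", "Ref", "Loader"),
               "depends_on": set()},
}
alerts = alert_paths(docs)
```

Walking every document and its dependencies like this grows with the size of the tree, which is consistent with the feature being disabled by default for performance reasons.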

Entity Documentation

It is now possible to add documentation to all entities / documents in the explorer tree, e.g. adding documentation on a Feed. Each entity now has a Documentation sub-tab where the user can enter any documentation they choose about that entity. The documentation is written in Markdown syntax. It is not possible to add documentation to a Folder but you can create one or more Documentation entities as child items of that folder, see Documentation.

See Also

See Documenting Content for details on the Markdown syntax.

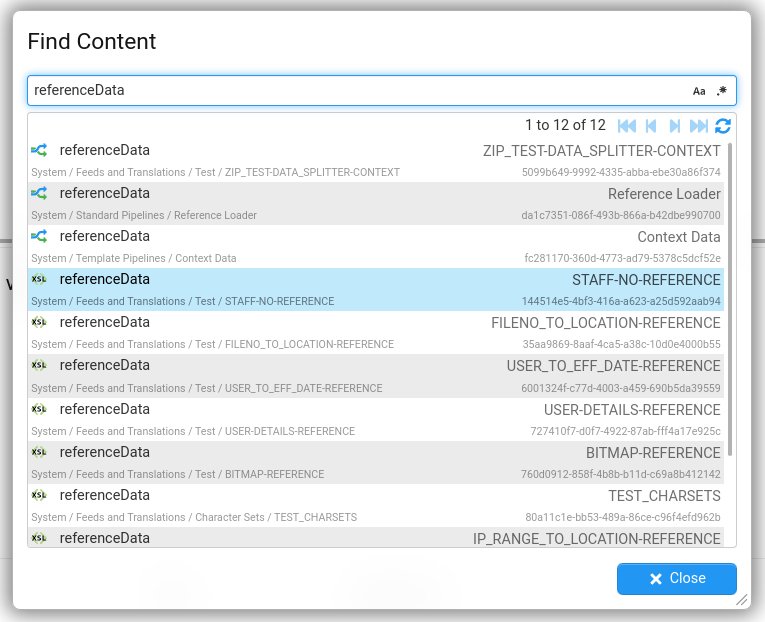

Find Content

You can now search the content of entities in the explorer tree, e.g. searching within XSLTs, Dictionaries, Pipeline structure, etc. This feature is available from the main menu:

It can also be accessed by hitting Ctrl ^ + Shift ⇧ + f (unless an editor pane has focus).

It is useful for finding which pipelines are using a certain element, or what XSLTs are using a certain stroom: function.

This is an early evolution of this feature and it is likely to be improved with time.

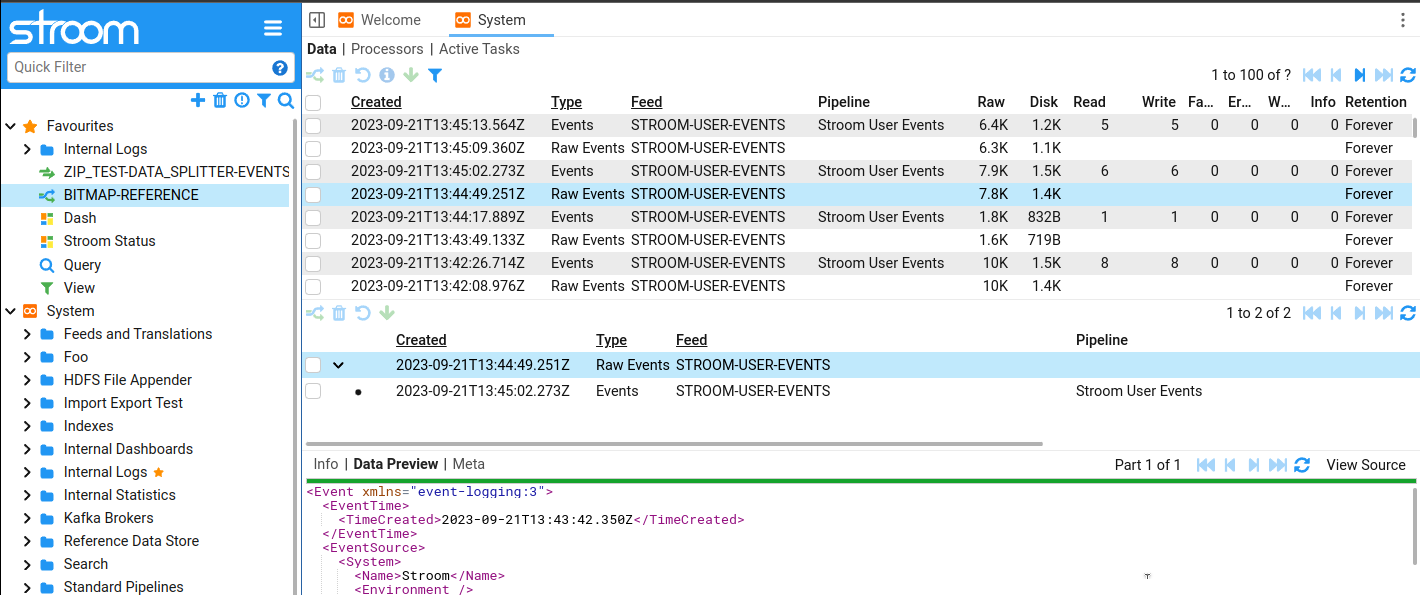

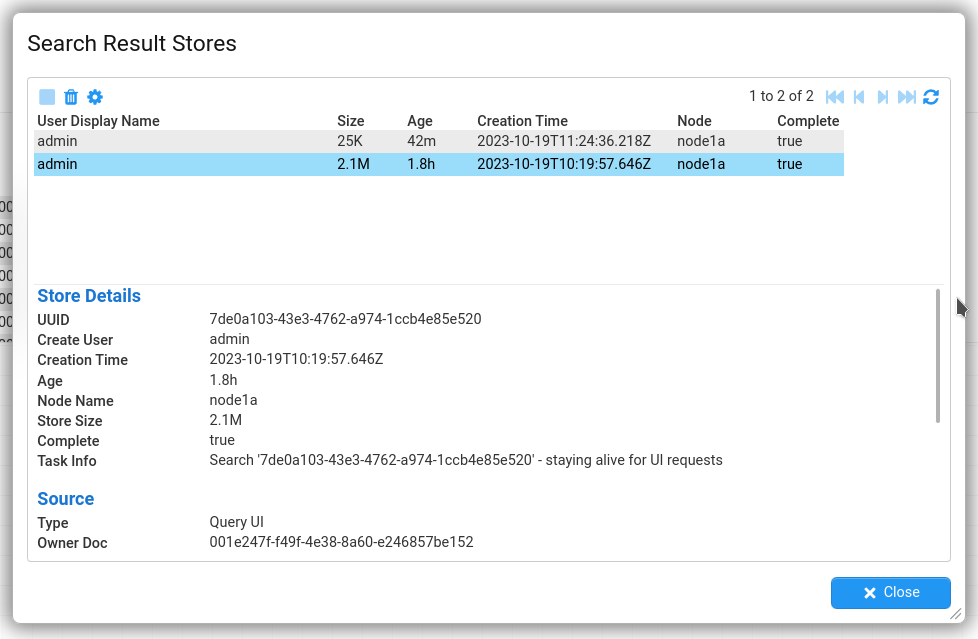

Search Result Stores

When a Dashboard/Query search is run, the results are written to a Search Result Store for that query. These stores reside on disk to reduce the memory used by queries. The Search Result Stores are stored on a single Stroom node and get created when a query is executed in a Dashboard, Query or Analytic Rule.

This screen provides an administrator with an overview of all the stores currently in existence in the Stroom cluster, showing details on their state and size. It can also be used to stop queries that are currently running or to delete the store entirely. Stores get deleted when the user closes the Dashboard or Query that created them.

Pipeline Stepper Improvements

The pipeline stepper has had a few user interface tweaks to make it easier to use.

Log Pane

When there are errors, warnings or info messages on a pipeline element they will now also be displayed in a pane at the bottom. This makes it easier to see all messages in one place.

The editor still displays icons with hover tips in the gutter on the appropriate line where the message has an associated line number.

The log pane can be hidden by clicking the icon.

Highlighting

The pipeline displayed at the top of the stepper now highlights elements that have log messages against them. This makes it easier to see when there is a problem with an element as you step through the data. The elements are given a coloured border according to the highest severity message on that element:

- Info - Blue

- Warning - Yellow

- Error - Red

- Fatal Error - Red (pulsating)
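The "highest severity wins" rule in the list above can be sketched in a few lines of Python. This is illustrative only; the severity names, the ordering list and the border_for helper are assumptions for the example and not Stroom identifiers.

```python
# Severities from lowest to highest (assumed ordering for illustration).
SEVERITY_ORDER = ["INFO", "WARNING", "ERROR", "FATAL_ERROR"]

BORDER_COLOUR = {
    "INFO": "blue",
    "WARNING": "yellow",
    "ERROR": "red",
    "FATAL_ERROR": "red (pulsating)",
}


def border_for(message_severities):
    """Return the border colour for an element, or None if it has no messages."""
    if not message_severities:
        return None
    highest = max(message_severities, key=SEVERITY_ORDER.index)
    return BORDER_COLOUR[highest]


mixed = border_for(["INFO", "ERROR", "WARNING"])
clean = border_for([])
```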

Filtering

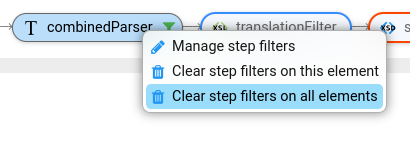

Stroom has always had the ability to filter the data being stepped, however the feature was a little hidden (the Manage Step Filters icon).

Now you can right click on a pipeline element to manage the filters on that element. You can also clear its filters or the filters on all elements.

The pipeline now shows which elements have an active filter by displaying a filter icon.

Server Tasks

Auto Refresh

You can now enable/disable the auto-refreshing of the Server Tasks table using the button. Auto refresh is enabled by default. Disabling it is useful when you want to delete a task, as it will stop the table being refreshed just before you hit delete.

Line Wrapping

You can now enable/disable line wrapping in the Name and Info cells using the button. Line wrapping is disabled by default. Enabling this is useful to see long Info cell values.

Info Popup

The Info popup has been changed to include the value from the Info column.

Proxy

Stroom-Proxy v7.2 has undergone a significant re-write in an attempt to address certain performance issues, make it more flexible and to allow data to be forked to many destinations.

Warning

There are some known performance issues with Stroom-Proxy v7.2 so it is not yet production ready, therefore until these are addressed you are advised to continue using Stroom-Proxy v7.0.

4.2 - Preview Features (experimental)

New Document types

Warning

The following features are usable but should be considered experimental at this point. The functionality may be subject to future changes that may break any content created with this version.

View

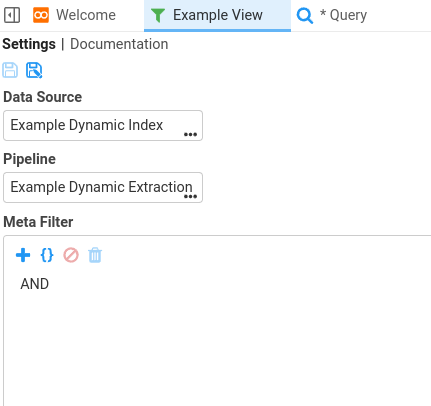

A View is a document type that has been added to make using Dashboards and Queries easier. It encapsulates the data source and an optional extraction pipeline.

Previously a user wanting to create a Dashboard to query Stroom’s indexes would need to first select the Index to use as the data source then select an extraction pipeline. The indexes do not typically store the full event, so extraction pipelines retrieve the full event from the stream store for each matching event returned by the index. Users should not need to understand the distinction between what is held in the index and what has to be extracted, nor should they need to know how to do that extraction.

A View abstracts the user from this process. They can be configured by an admin or more senior user so that a standard user can just select an appropriate View as the data source in a Dashboard or Query and the View will silently handle the retrieval/extraction of data.

Views are also used by Analytic Rules so need to define a Meta filter that controls the streams that will be processed by the analytic. This filter should mirror the processor filter expression used to control data processed by the Index that the View is using. These two filters may be amalgamated in a future version of Stroom.

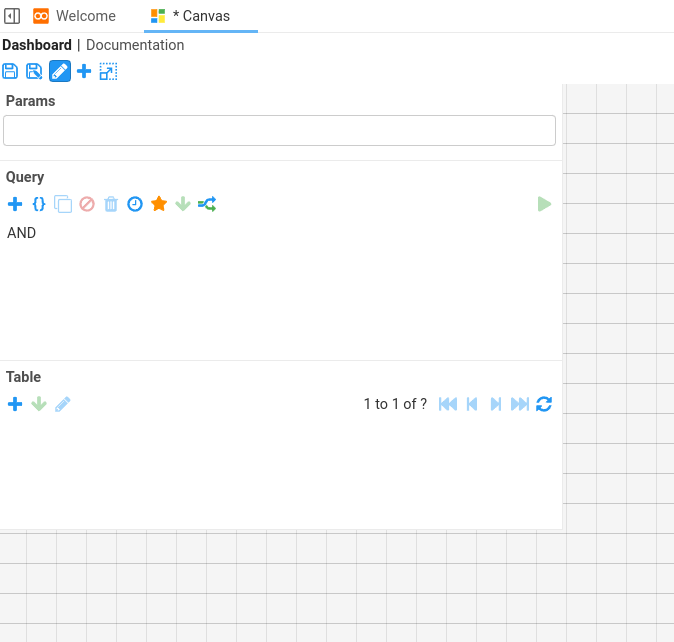

Query

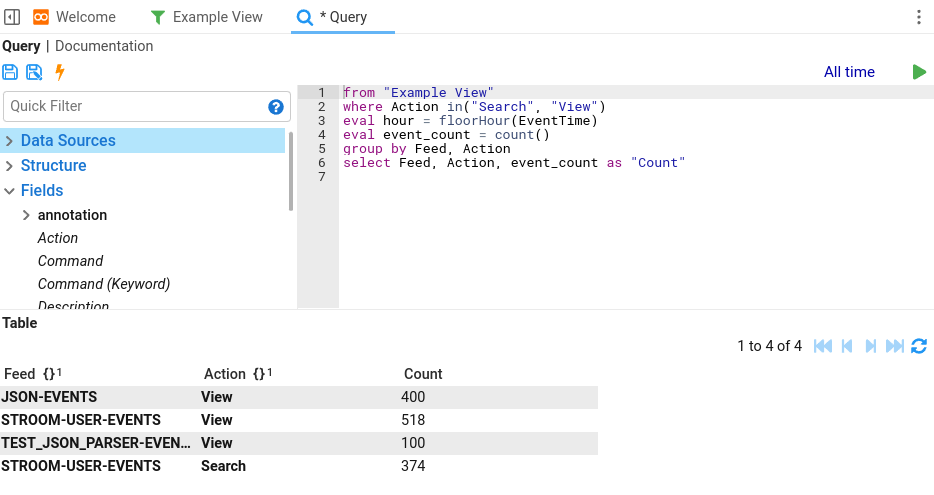

The Query feature provides a new way to query data in Stroom. It is a functional but evolving feature that is likely to be enhanced further in future versions.

Rather than using the query expression builder and table column expressions as used in Dashboards, it uses the new text based Stroom Query Language to define the query.

Stroom Query Language (StroomQL)

This is an example of a StroomQL query. It replaces the old dashboard expression ’tree’ and table column expressions. StroomQL has the advantage of being quicker to construct and is easier to copy from one query to another (whole or in part) as it is just plain text.

FROM "Example View" // Define the View to use as the data source

WHERE Action IN("Search", "View") // Equivalent to the Dashboard expression tree

EVAL hour = floorHour(EventTime) // Define named fields based on function expressions

EVAL event_count = count()

GROUP BY Feed, Action // Equivalent to Dashboard table column grouping

SELECT Feed, Action, event_count AS "Count" // Equivalent to adding columns to a Dashboard table

Editing StroomQL queries in the editor is also made easier by the code completion (using ctrl+space) to suggest data sources, fields, functions and StroomQL language terms.

StroomQL queries can be executed easily with ctrl+enter or shift+enter.

Analytic Rule

Analytic Rules allow the user to create scheduled or streaming rules that will fire alerts when events matching the rule are seen.

Analytic rules rely on the new Stroom Query Language to define what events will match the rule. An Analytic Rule can be created directly from a Query by clicking the Create Analytic Rule icon.

4.3 - Breaking Changes

Warning

Please read this section carefully in case any of the changes affect you.

Quoted Strings in Dashboard Table Expressions

Quoted strings in dashboard table expressions can now be expressed with single and double quotes.

As part of this change apostrophes in text are no longer escaped with an additional apostrophe (''), but instead require a leading \ before them if they are in a single quoted string, i.e:

'O''Neill' must be changed to 'O\'Neill'

In many cases it is preferable to use double quotes if the string in question has an apostrophe.

Note that the use of \ as an escape character also means that any existing \ characters will need to be escaped with a preceding \ so \ must now become \\, i.e:

c:\Windows\System32 must be changed to c:\\Windows\\System32

The new Find Content feature can be used to find affected Dashboards.
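The two escaping changes above can be applied mechanically to an existing single quoted literal. The following Python sketch shows one way to do that; the function name migrate_single_quoted is hypothetical and the sketch assumes the input is exactly one old-style single quoted string, including its surrounding quotes. It is not a Stroom tool and does not parse whole table expressions.

```python
def migrate_single_quoted(old_literal):
    """Convert an old-style single quoted literal to the v7.2 escaping rules.

    Old style: an apostrophe is written as '' and \\ has no special meaning.
    New style: an apostrophe is written as \\' and a backslash as \\\\.
    """
    if len(old_literal) < 2 or old_literal[0] != "'" or old_literal[-1] != "'":
        raise ValueError("expected a single quoted literal")
    inner = old_literal[1:-1]
    # Escape existing backslashes first so the escapes added next stay intact.
    inner = inner.replace("\\", "\\\\")
    # Then turn each doubled apostrophe into a backslash-escaped apostrophe.
    inner = inner.replace("''", "\\'")
    return "'" + inner + "'"


name = migrate_single_quoted("'O''Neill'")
path = migrate_single_quoted("'c:\\Windows\\System32'")
```

The order of the two replacements matters: doubling backslashes after adding the apostrophe escapes would corrupt the newly added escapes.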

Search API Change

The APIs for running searches against Stroom data sources have changed in a breaking way. This is due to a change in the way running queries are identified.

Previously the client calling the API would generate a unique key for the query and include it in the searchRequest object.

This key would then be used again if the client wanted to make further requests for results for the same running query.

"key" : {

"uuid": "e244d45c-4086-463b-b1a8-10c8c7d7d6c7"

},

In v7.2 the query key is now generated by Stroom rather than the client.

In the first search request for a query, the client should now omit the key field from the request.

Stroom will generate a unique key for the running query and return it in the response.

However, in any subsequent requests for that running query, the client should include the key field, using the value from the previous response.
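The request flow described above can be sketched from the client's side in Python. This is a minimal sketch under assumptions: the helper names first_request and follow_up_request are hypothetical, the "query" field is a placeholder for the rest of the request body, and how the key is read out of the response is left to the caller because the response layout is not described here.

```python
import copy


def first_request(search_request):
    """Build the initial request for a query by omitting any client-side key."""
    request = copy.deepcopy(search_request)
    request.pop("key", None)
    return request


def follow_up_request(search_request, key_from_response):
    """Build a later request for the same running query using Stroom's key."""
    request = copy.deepcopy(search_request)
    request["key"] = key_from_response
    return request


# A static request template that still carries an old client-generated key.
template = {
    "key": {"uuid": "e244d45c-4086-463b-b1a8-10c8c7d7d6c7"},
    "query": {},  # placeholder for the remainder of the request
}

initial = first_request(template)
# The key value below stands in for whatever Stroom returned in its response.
later = follow_up_request(initial, {"uuid": "key-returned-by-stroom"})
```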

If you have static request JSON files then you can easily remove the key field using jq as follows:

4.4 - Upgrade Notes

Warning

Please read this section carefully in case any of it is relevant to your Stroom instance.

Java Version

Stroom v7.2 requires Java v17. Previous versions of Stroom used Java v15 or lower. You will need to upgrade Java on the Stroom and Stroom-Proxy hosts to the latest patch release of Java v17.

Regex Performance Issue in XSLT Processing

v7.2 of Stroom uses a newer version of the Saxon XML processing library. Saxon is used for all pipeline processing. There is a bug in this version of Saxon which means that case insensitive regular expression matching performs very badly, i.e. it can be orders of magnitude slower than a case sensitive regex. This bug has been reported to Saxon and has been fixed but not yet released. It is likely a future release of Stroom will include a new version of Saxon that addresses this issue.

The performance issue will show itself when multiple pipelines with affected XSLTs are being processed concurrently.

This impacts XSLT/XPath functions like matches() that use the i flag for case insensitive matching.

If you do not use any case-insensitive regular expressions in your XSLTs then you do not need to do anything.

Until Stroom is changed to use a new version of Saxon with a fix, you will have to change the XSLTs that use the i flag in one of the following ways:

- Re-write the regular expression to use case sensitive matching, e.g.:

  matches('CATHODE', '^cat.*', 'i') => matches('CATHODE', '^[cC][aA][tT].*')

  This is the preferred option, but may not be possible for all regular expressions.

- Add the flag ;j to force Saxon to use the Java regular expression engine instead of the Saxon one, e.g.:

  matches('CATHODE', '^cat.*', 'i') => matches('CATHODE', '^cat.*', 'i;j')

  As this involves changing the regular expression engine it is possible that there will be subtle differences in the behaviour between the Saxon and Java engines. Regular expression engines are notorious for having subtle differences as there is no one standard for regular expressions.
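For the first option, expanding each letter into a two-character class can be done mechanically for literal text. The sketch below is a hypothetical helper (`ci_literal` is not part of Stroom or Saxon). It only handles literal text, not arbitrary regular expressions, and it uses Python's `re` module purely to demonstrate the equivalence, so the escaping it produces may need adjusting for XPath regex syntax.

```python
import re


def ci_literal(text):
    """Expand literal text into a case sensitive pattern that matches
    either case of each letter, e.g. "cat" -> "[cC][aA][tT]"."""
    parts = []
    for ch in text:
        lower, upper = ch.lower(), ch.upper()
        if lower != upper and len(lower) == 1 and len(upper) == 1:
            parts.append(f"[{lower}{upper}]")
        else:
            # Digits, punctuation etc. have no case; escape them as literals.
            parts.append(re.escape(ch))
    return "".join(parts)


# Rebuild the pattern from the example above without the i flag.
pattern = "^" + ci_literal("cat") + ".*"
```

The resulting pattern can then be pasted into the XSLT in place of the original pattern and its i flag.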

Tagging Entities

As described in Document Tagging, Stroom now pre-populates the filter of some of the tree pickers with pre-configured tags to limit the entities returned. If you do nothing then, after the upgrade, these tree pickers will show no matching entities to the user.

You have two options:

- Tag entities with the pre-configured tags so they are visible in the tree pickers.

  To do this you need to find and tag the following entities:

  - Tag all reference loader pipelines (those using the ReferenceDataFilter pipeline element) with reference-loader (or whatever value(s) is/are set in stroom.ui.referencePipelineSelectorIncludedTags).

  - Tag all extraction pipelines (those using the SearchResultOutputFilter pipeline element) with extraction (or whatever value(s) is/are set in stroom.ui.query.dashboardPipelineSelectorIncludedTags).

  Any new entities matching the above criteria also need to be tagged in this way to ensure users see the correct entities. The new Find Content feature is useful for tracking down Pipelines that contain a certain element.

  The property stroom.ui.query.viewPipelineSelectorIncludedTags is not an issue for an upgrade to v7.2 as Views did not exist prior to this version. All new dynamic extraction pipeline entities (those using the DynamicSearchResultOutputFilter pipeline element) need to be tagged with dynamic and extraction (or whatever value(s) is/are set in stroom.ui.query.viewPipelineSelectorIncludedTags).

- Change the system properties to not pre-populate the filters. If you do not want to use this feature then you can just clear the values of the following properties:

  - stroom.ui.query.dashboardPipelineSelectorIncludedTags

  - stroom.ui.query.viewPipelineSelectorIncludedTags

  - stroom.ui.referencePipelineSelectorIncludedTags

Reference Data Store

See Partitioned Reference Data Stores for details of the changes to reference data stores.

No intervention is required on upgrade for this change; this section is for information purposes only. However, it is recommended that you take a backup copy of the existing reference data store files before booting the new version of Stroom.

To do this, make a copy of the files in the directory specified by stroom.pipeline.referenceData.lmdb.localDir.

If there is a problem then you can replace the store with the copy and try again.
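The backup and restore steps can be sketched as below. This is a minimal illustration under stated assumptions: Stroom is stopped while the files are copied, and the function names and any paths passed in are hypothetical. The source directory is whatever stroom.pipeline.referenceData.lmdb.localDir resolves to on the node.

```python
import shutil
from pathlib import Path


def backup_ref_data_store(local_dir, backup_dir):
    """Copy the whole reference data store directory to backup_dir."""
    source = Path(local_dir)
    target = Path(backup_dir)
    if not source.is_dir():
        raise FileNotFoundError(f"Reference data directory not found: {source}")
    # copytree refuses to overwrite an existing target,
    # so an earlier backup is never clobbered by accident.
    shutil.copytree(source, target)
    return target


def restore_ref_data_store(backup_dir, local_dir):
    """Replace the store with the backup copy so the upgrade can be retried."""
    target = Path(local_dir)
    if target.exists():
        shutil.rmtree(target)
    shutil.copytree(Path(backup_dir), target)
    return target
```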

Stroom will automatically migrate reference data from the legacy single data store into multiple Feed specific stores.

The legacy store exists in the directory configured by stroom.pipeline.referenceData.lmdb.localDir.

Each feed specific store will be in a sub-directory with a name like USER-DETAILS-REFERENCE___309e1ca0-7a5f-4f05-847b-b706805d758c (i.e. a file system safe version of the Feed name and the Feed’s UUID).
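When inspecting the store directory it can help to split a sub-directory name back into its two parts. The sketch below assumes the separator is three underscores, which is inferred from the single example above and is not a documented format; `parse_store_dir_name` is a hypothetical helper.

```python
import uuid


def parse_store_dir_name(name):
    """Split a feed specific store directory name into
    (file system safe feed name, feed UUID)."""
    # Split on the last "___" in case the feed name itself contains underscores.
    feed_part, separator, uuid_part = name.rpartition("___")
    if not separator:
        raise ValueError(f"Not a feed specific store directory name: {name}")
    return feed_part, uuid.UUID(uuid_part)
```

Note that the first part is the file system safe form of the Feed name, so it may not be identical to the name shown in Stroom.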

The migration happens on an as-needed basis. When a lookup is called from an XSLT, if the required reference stream is found to exist in the legacy store then it will be copied into the appropriate Feed specific store (creating the store if required). After being copied, the stream in the legacy store will be marked as available for purge so will get purged on the next run of the job Ref Data Off-heap Store Purge.

When Stroom boots it will delete a legacy store if it is found to be empty, so eventually the legacy store will cease to exist.

Depending on the speed of the local storage used for the reference data stores, the migration of streams and the subsequent purge from the legacy store may slow down processing until all the required migrations have happened. The migration is a trade-off between the additional time it would take to re-load all the reference streams (rather than just copying them from the legacy store) and the dedicated lock on the legacy store that all migrations need to acquire.

If you experience performance problems with reference data migrations or would prefer not to migrate the data then you can simply delete the legacy store prior to running Stroom v7.2 for the first time.

The legacy store can be found in the directory configured by stroom.pipeline.referenceData.lmdb.localDir.

Simply delete the files data.mdb and lock.mdb (if present).

With the store deleted, stroom will simply load all reference streams as required with no migration.
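A minimal sketch of that deletion, assuming Stroom is stopped and that `local_dir` is the directory configured by stroom.pipeline.referenceData.lmdb.localDir (the function name is hypothetical). Only the two files named above are removed, and only from the top level of the directory, so any sub-directories are left alone.

```python
from pathlib import Path

LEGACY_STORE_FILES = ("data.mdb", "lock.mdb")


def delete_legacy_store(local_dir):
    """Delete the legacy store files, if present, and return their names."""
    removed = []
    for name in LEGACY_STORE_FILES:
        candidate = Path(local_dir) / name
        if candidate.is_file():
            candidate.unlink()
            removed.append(name)
    return removed
```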

Database Migrations

When Stroom boots for the first time with a new version it will run any required database migrations to bring the database schema up to the correct version.

Warning

It is highly recommended to ensure you have a database backup in place before booting stroom with a new version. This is to mitigate against any problems with the migration. It is also recommended to test the migration against a copy of your database to ensure that there are no problems when you do it for real.

On boot, Stroom will ensure that the migrations are only run by a single node in the cluster. This will be the node that reaches that point in the boot process first. All other nodes will wait until that is complete before proceeding with the boot process.

It is recommended however to use a single node to execute the migration.

To avoid Stroom starting up and beginning processing you can use the migrate command to just migrate the database and not fully boot Stroom.

See migrate command for more details.

Migration Scripts

For information purposes only, the following is a list of all the database migrations that will be run when upgrading from v7.0 to v7.2.0. The migration script files can be viewed at github.com/gchq/stroom.

7.1.0

stroom-config

V07_01_00_001__preferences.sql - stroom-config/stroom-config-global-impl-db/src/main/resources/stroom/config/global/impl/db/migration/V07_01_00_001__preferences.sql

stroom-explorer

V07_01_00_005__explorer_favourite.sql - stroom-explorer/stroom-explorer-impl-db/src/main/resources/stroom/explorer/impl/db/migration/V07_01_00_005__explorer_favourite.sql

stroom-security

V07_01_00_001__add_stroom_user_cols.sql - stroom-security/stroom-security-impl-db/src/main/resources/stroom/security/impl/db/migration/V07_01_00_001__add_stroom_user_cols.sql

V07_01_00_002__rename_preferred_username_col.sql - stroom-security/stroom-security-impl-db/src/main/resources/stroom/security/impl/db/migration/V07_01_00_002__rename_preferred_username_col.sql

7.2.0

stroom-analytics

V07_02_00_001__analytics.sql - stroom-analytics/stroom-analytics-impl-db/src/main/resources/stroom/analytics/impl/db/migration/V07_02_00_001__analytics.sql

stroom-annotation

V07_02_00_005__annotation_assigned_migration_to_uuid.sql - stroom-annotation/stroom-annotation-impl-db/src/main/resources/stroom/annotation/impl/db/migration/V07_02_00_005__annotation_assigned_migration_to_uuid.sql

V07_02_00_010__annotation_entry_assigned_migration_to_uuid.sql - stroom-annotation/stroom-annotation-impl-db/src/main/resources/stroom/annotation/impl/db/migration/V07_02_00_010__annotation_entry_assigned_migration_to_uuid.sql

stroom-config

V07_02_00_005__preferences_column_rename.sql - stroom-config/stroom-config-global-impl-db/src/main/resources/stroom/config/global/impl/db/migration/V07_02_00_005__preferences_column_rename.sql

stroom-dashboard

V07_02_00_005__query_add_owner_uuid.sql - stroom-dashboard/stroom-storedquery-impl-db/src/main/resources/stroom/storedquery/impl/db/migration/V07_02_00_005__query_add_owner_uuid.sql

V07_02_00_006__query_add_uuid.sql - stroom-dashboard/stroom-storedquery-impl-db/src/main/resources/stroom/storedquery/impl/db/migration/V07_02_00_006__query_add_uuid.sql

stroom-explorer

V07_02_00_005__remove_datasource_tag.sql - stroom-explorer/stroom-explorer-impl-db/src/main/resources/stroom/explorer/impl/db/migration/V07_02_00_005__remove_datasource_tag.sql

stroom-security

V07_02_00_100__query_add_owners.sql - stroom-security/stroom-security-impl-db/src/main/resources/stroom/security/impl/db/migration/V07_02_00_100__query_add_owners.sql

V07_02_00_101__processor_filter_add_owners.sql - stroom-security/stroom-security-impl-db/src/main/resources/stroom/security/impl/db/migration/V07_02_00_101__processor_filter_add_owners.sql
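The script names listed above share a common shape: a V prefix, four underscore-separated numeric parts, a double underscore, then a description. The sketch below parses that shape so scripts can be compared or sorted by version. The pattern is inferred from the names in this list only, and `parse_migration_name` is a hypothetical helper, not part of Stroom.

```python
import re

# V<major>_<minor>_<patch>_<sequence>__<description>.sql
MIGRATION_NAME = re.compile(r"^V(\d+)_(\d+)_(\d+)_(\d+)__(.+)\.sql$")


def parse_migration_name(file_name):
    """Return ((major, minor, patch, sequence), description) for a script name."""
    match = MIGRATION_NAME.match(file_name)
    if match is None:
        raise ValueError(f"Unrecognised migration script name: {file_name}")
    *version, description = match.groups()
    return tuple(int(part) for part in version), description
```

For example, sorting a module's script names with `key=lambda n: parse_migration_name(n)[0]` orders them by version.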

4.5 - Change Log

For a detailed list of all the changes in v7.2 see: v7.2 CHANGELOG

5 - Version 7.1

For a detailed list of the changes in v7.1 see the changelog.

TODO

Complete this section

6 - Version 7.0

For a detailed list of the changes in v7.0 see the changelog.

Integrated Authentication

The previously standalone (in v6) stroom-auth-service and stroom-auth-ui services have been integrated into the core stroom application. This simplifies the installation and configuration of stroom.

Configuration Properties Improvements

Configuration is now provided by YAML files on boot

Previously stroom used a flat .conf file to manage the application configuration.

Application logging was configured either via a .yml file (in v6) or in an .xml file (in v5).

Now stroom uses a single .yml file to configure the application and logging.

This file is different to the .yml file(s) used in the docker compose configuration.

The YAML file provides a more logical hierarchical structure and support for typed values (longs, doubles, maps, lists, etc.).

The YAML configuration is intended for configuration items that are either needed to bootstrap stroom or have values that are specific to a node. Cluster wide configuration properties are still stored in the database and managed via the UI.

There has been a change to the precedence of the configuration properties held in different locations (YAML, database, default) and this is described in Properties.

Stroom Home and relative paths

The concept of Stroom Home has been introduced.

Stroom Home allows for one path to be configured and for all other configurable paths to default to being a child of this path.

This keeps all configured directories in one place by default.

Each configured directory can be set to an absolute path if a location outside Stroom Home is required.

If a relative path is used it will be relative to Stroom Home.

Stroom Home can be configured with the property stroom.path.home.
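The resolution rule described above (absolute paths are used as-is, relative paths are taken relative to Stroom Home) can be illustrated as follows. This is a sketch of the rule only, not Stroom's implementation, and the example paths in the usage note are hypothetical.

```python
from pathlib import PurePosixPath


def resolve_configured_path(stroom_home, configured_path):
    """Resolve a configured directory against Stroom Home."""
    path = PurePosixPath(configured_path)
    if path.is_absolute():
        # An absolute path may point outside Stroom Home.
        return path
    return PurePosixPath(stroom_home) / path
```

With a Stroom Home of `/opt/stroom`, a configured value of `lmdb/ref` resolves to `/opt/stroom/lmdb/ref`, while `/data/ref` is left unchanged.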

Improved Properties UI screens that tell you the values over the cluster

Previously the Properties UI screens could only tell you the values held within the database and not the value that a node was actually using. The Properties screens have been improved to tell you the source of a property value and where multiple values exist across the cluster, which nodes have what values. See Properties.

Validation of Configuration Property Values

Validation of configuration property values is now possible. The validation rules are defined in the application code and allow for things like:

- Ensuring that a regex pattern is a valid pattern.

- Setting maximum or minimum values for numeric properties.

- Ensuring a property has a value.

Validation will be enforced on application boot or when a value is edited via the UI.
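To make the three kinds of rule concrete, here is a small sketch of what such checks do. It is written in Python for illustration only: Stroom defines its validation rules in its own application code, and every name below is hypothetical. Each check returns an error message, or None if the value is valid.

```python
import re


def check_regex(value):
    """The value must compile as a regular expression."""
    try:
        re.compile(value)
    except re.error as error:
        return f"not a valid regex pattern: {error}"
    return None


def check_range(value, minimum=None, maximum=None):
    """A numeric value must lie within the optional bounds (inclusive)."""
    if minimum is not None and value < minimum:
        return f"must be at least {minimum}"
    if maximum is not None and value > maximum:
        return f"must be at most {maximum}"
    return None


def check_required(value):
    """The property must have a value."""
    if value is None or value == "":
        return "a value is required"
    return None
```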

Hot Loading of Node Configuration

Now that node specific configuration is managed via the YAML configuration file, stroom will detect changes to this file and update the configuration properties accordingly. Some properties, however, do not support being changed at runtime, so will still require either the whole system or the UI nodes to be restarted.

Data retention impact summary