
User Guide

- 1: Application Programming Interfaces (API)

- 1.1: Query API

- 2: Concepts

- 2.1: Streams

- 3: Dashboards

- 3.1: Dashboard Expressions

- 3.1.1: Aggregate Functions

- 3.1.2: Cast Functions

- 3.1.3: Date Functions

- 3.1.4: Link Functions

- 3.1.5: Logic Functions

- 3.1.6: Mathematics Functions

- 3.1.7: Rounding Functions

- 3.1.8: Selection Functions

- 3.1.9: String Functions

- 3.1.10: Type Checking Functions

- 3.1.11: URI Functions

- 3.1.12: Value Functions

- 3.2: Dictionaries

- 3.3: Direct URLs

- 3.4: Queries

- 4: Data Retention

- 5: Data Splitter

- 5.1: Simple CSV Example

- 5.2: Simple CSV example with heading

- 5.3: Complex example with regex and user defined names

- 5.4: Multi Line Example

- 5.5: Element Reference

- 5.5.1: Content Providers

- 5.5.2: Expressions

- 5.5.3: Output

- 5.5.4: Variables

- 5.6: Match References, Variables and Fixed Strings

- 5.6.1: Concatenation of references

- 5.6.2: Expression match references

- 5.6.3: Use of fixed strings

- 5.6.4: Variable reference

- 6: Editing and Viewing Data

- 7: Event Feeds

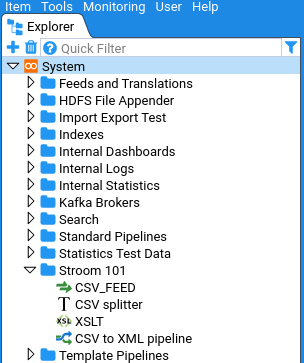

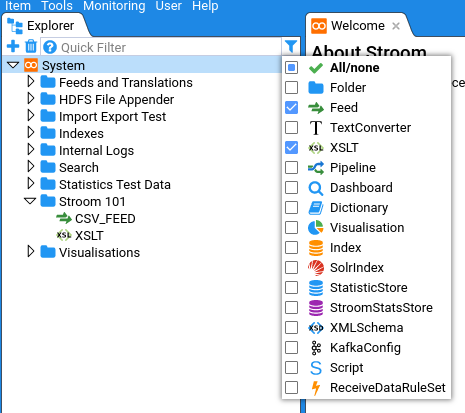

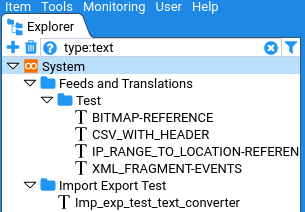

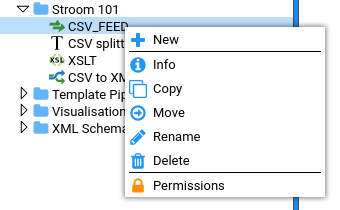

- 8: Finding Things

- 9: Nodes

- 10: Pipelines

- 10.1: Reference Data

- 10.2: File Output

- 10.3: Parser

- 10.3.1: Context Data

- 10.3.2: XML Fragments

- 10.4: XSLT Conversion

- 10.4.1: XSLT Functions

- 10.4.2: XSLT Includes

- 11: Properties

- 12: Roles

- 13: Security

- 14: Stroom Jobs

- 15: Tools

- 15.1: Command Line Tools

- 15.2: Stream Dump Tool

- 16: Volumes

1 - Application Programming Interfaces (API)

Stroom has a number of public APIs to allow other systems to interact with Stroom. The APIs are defined by a Swagger spec that can be viewed as json (external link) or yaml (external link). A dynamic user interface (external link) is also available for viewing the APIs.

1.1 - Query API

The Query API uses common request/response models and end points for querying each type of data source held in Stroom. The request/response models are defined in stroom-query (external link).

Currently Stroom exposes a set of query endpoints for the following data source types. Each data source type has its own endpoint because of differences in the way the data is queried and the restrictions imposed on the query terms. However, they all share the same API definition.

- stroom-index (external link) (the Lucene based event index)

- sqlstatistics (external link) (Stroom’s own statistics store)

The detailed documentation for the request/responses is contained in the Swagger definition linked to above.

Common endpoints

The standard query endpoints are

datasource

The data source endpoint is used to query Stroom for the details of a data source with a given docRef. The details will include such things as the fields available and any restrictions on querying the data.

search

The search endpoint is used to initiate a search against a data source or to request more data for an active search. A search request can be made using iterative mode, where it will perform the search and then only return the data it has immediately available. Subsequent requests for the same queryKey will also return the data immediately available, expecting that more results will have been found by the query. Requesting a search in non-iterative mode will result in the response being returned when the query has completed and all known results have been found.

The SearchRequest model is fairly complicated and contains not only the query terms but also a definition of how the data should be returned. A single SearchRequest can include multiple ResultRequest sections to return the queried data in multiple ways, e.g. as flat data and in an alternative aggregated form.
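The iterative mode described above amounts to re-sending the same request until the search reports that it has finished. The following is a minimal sketch of that loop, not Stroom client code: `send` is a caller-supplied function standing in for the call to the search endpoint, and the `complete` and `results` response keys are assumptions made for illustration. Consult the Swagger definition for the real model.

```python
import time
from typing import Callable, List


def poll_search(send: Callable[[dict], dict], request: dict,
                interval_s: float = 0.0, max_polls: int = 100) -> List[dict]:
    """Re-send the same request until a response reports completion.

    `send` posts the request and returns the decoded response. The
    `complete` key is an assumption made for this sketch.
    """
    responses = []
    for _ in range(max_polls):
        response = send(request)
        responses.append(response)
        if response.get("complete"):
            break
        time.sleep(interval_s)
    return responses


def make_fake_sender() -> Callable[[dict], dict]:
    """Stand-in for the real endpoint: completes on the third call."""
    calls = {"n": 0}

    def send(request: dict) -> dict:
        calls["n"] += 1
        return {"queryKey": request["queryKey"],
                "results": list(range(calls["n"])),
                "complete": calls["n"] >= 3}

    return send


responses = poll_search(make_fake_sender(), {"queryKey": "abc"})
```

Each intermediate response carries whatever data was available at that moment, so a caller can render partial results while waiting.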

Stroom as a query builder

Stroom is able to export the JSON form of a SearchRequest model from its dashboards. This makes the dashboard a useful tool for building a query and the table settings to go with it. You can use the dashboard to define the data source, define the query terms tree and build a table definition (or definitions) to describe how the data should be returned. Then, clicking the download icon on the query pane of the dashboard will generate the SearchRequest JSON, which can be used immediately with the /search API or modified to suit.

destroy

This endpoint is used to kill an active query by supplying the queryKey for the query in question.

2 - Concepts

2.1 - Streams

Streams can be created either when data is POSTed directly to Stroom or during the proxy aggregation process. When data is POSTed directly to Stroom, the content of the POST is stored as one Stream. With proxy aggregation, multiple files in the proxy repository can be aggregated together into a single Stream.

Anatomy of a Stream

A Stream is made up of a number of parts of which the raw or cooked data is just one. In addition to the data the Stream can contain a number of other child stream types, e.g. Context and Meta Data.

The hierarchy of a stream is as follows:

- Stream nnn

  - Part [1 to *]

    - Data [1-1]

    - Context [0-1]

    - Meta Data [0-1]

  - Part [1 to *]
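The hierarchy above can be modelled as a small data structure. This is an illustrative sketch only: the class and field names are hypothetical and do not correspond to Stroom's internal types.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Part:
    data: bytes                      # Data [1-1]: always present
    context: Optional[bytes] = None  # Context [0-1]
    meta: Optional[dict] = None      # Meta Data [0-1]


@dataclass
class Stream:
    stream_id: int
    parts: List[Part] = field(default_factory=list)  # Part [1 to *]

    def __post_init__(self) -> None:
        # The hierarchy requires at least one part per stream.
        if not self.parts:
            raise ValueError("a stream needs at least one part")


# A two-part stream; only the first part carries meta data.
stream = Stream(1, [Part(b"a,b,c\n", meta={"Feed": "TEST_FEED"}),
                    Part(b"d,e,f\n")])
```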

Although all streams conform to the above hierarchy there are three main types of Stream that are used in Stroom:

- Non-segmented Stream - Raw events, Raw Reference

- Segmented Stream - Events, Reference

- Segmented Error Stream - Error

Segmented means that the data has been demarcated into segments or records.

Child Stream Types

Data

This is the actual data of the stream, e.g. the XML events, raw CSV, JSON, etc.

Context

This is additional contextual data that can be sent with the data. Context data can be used for reference data lookups.

Meta Data

This is the data about the Stream (e.g. the feed name, receipt time, user agent, etc.). This meta data either comes from the HTTP headers when the data was POSTed to Stroom or is added by Stroom or Stroom-Proxy on receipt/processing.

Non-Segmented Stream

The following is a representation of a non-segmented stream with three parts, each with Meta Data and Context child streams.

Raw Events and Raw Reference streams contain non-segmented data, e.g. a large batch of CSV, JSON, XML, etc. data. There is no notion of a record/event/segment in the data, it is simply data in any form (including malformed data) that is yet to be processed and demarcated into records/events, for example using a Data Splitter or an XML parser.

The Stream may be single-part or multi-part depending on how it is received. If it is the product of proxy aggregation then it is likely to be multi-part. Each part will have its own context and meta data child streams, if applicable.

Segmented Stream

The following is a representation of a segmented stream that contains three records (i.e. events) and the Meta Data.

Cooked Events and Reference data are forms of segmented data. The raw data has been parsed and split into records/events and the resulting data is stored in a way that allows Stroom to know where each record/event starts/ends. These streams only have a single part.

Error Stream

Error streams are similar to segmented Event/Reference streams in that they are single-part and have demarcated records (where each error/warning/info message is a record). Error streams do not have any Meta Data or Context child streams.

3 - Dashboards

3.1 - Dashboard Expressions

Expressions can be used to manipulate data on Stroom Dashboards.

Each function has a name, and some have additional aliases.

In some cases functions can be nested, with the return value of one function used as an argument to another.

The arguments to functions can either be other functions, literal values, or they can refer to fields on the input data using the field reference ${val} syntax.

3.1.1 - Aggregate Functions

Average

Takes an average value of the arguments

average(arg)

mean(arg)

Examples

average(${val})

${val} = [10, 20, 30, 40]

> 25

mean(${val})

${val} = [10, 20, 30, 40]

> 25

Count

Counts the number of records that are passed through it. It ignores the values of any fields.

count()

Examples

Supplying 3 values...

count()

> 3

Count Groups

This is used to count the number of unique values where there are multiple group levels. For example, given a data set grouped as follows

- Group by Name

- Group by Type

a groupCount could be used to count the number of distinct values of 'type' for each value of 'name'.

Count Unique

This is used to count the number of unique values passed to the function where grouping is used to aggregate values in other columns. For example, given a data set grouped as follows

- Group by Name

- Group by Type

countUnique() could be used to count the number of distinct values of 'type' for each value of 'name'.

Examples

countUnique(${val})

${val} = ['bill', 'bob', 'fred', 'bill']

> 3
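To make the grouped case concrete, the sketch below counts the distinct 'type' values seen for each 'name'. The rows are made-up sample data and the code only illustrates the idea; it is not how Stroom evaluates the function.

```python
from collections import defaultdict

# Hypothetical (name, type) pairs from a result set.
rows = [
    ("bill", "login"), ("bill", "logout"), ("bill", "login"),
    ("bob", "login"),
]

# Collect the distinct types seen for each name.
types_by_name = defaultdict(set)
for name, event_type in rows:
    types_by_name[name].add(event_type)

unique_counts = {name: len(types) for name, types in types_by_name.items()}
```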

Joining

Concatenates all values together into a single string. If a delimiter is supplied then the delimiter is placed between each concatenated string. If a limit is supplied then it will only concatenate up to limit values.

joining(values)

joining(values, delimiter)

joining(values, delimiter, limit)

Examples

joining(${val}, ', ')

${val} = ['bill', 'bob', 'fred', 'bill']

> 'bill, bob, fred, bill'

Max

Determines the maximum value given in the args

max(arg)

Examples

max(${val})

${val} = [100, 30, 45, 109]

> 109

# They can be nested

max(max(${val}), 40, 67, 89)

${val} = [20, 1002]

> 1002

Min

Determines the minimum value given in the args

min(arg)

Examples

min(${val})

${val} = [100, 30, 45, 109]

> 30

They can be nested

min(max(${val}), 40, 67, 89)

${val} = [20, 1002]

> 40

Standard Deviation

Calculate the standard deviation for a set of input values.

stDev(arg)

Examples

round(stDev(${val}))

${val} = [600, 470, 170, 430, 300]

> 147

Sum

Sums all the arguments together

sum(arg)

Examples

sum(${val})

${val} = [89, 12, 3, 45]

> 149

Variance

Calculate the variance of a set of input values.

variance(arg)

Examples

variance(${val})

${val} = [600, 470, 170, 430, 300]

> 21704
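The stDev and variance example outputs above are consistent with the population (rather than sample) forms of these statistics. The following check uses Python's standard library to reproduce them; treating Stroom's functions as the population form is an inference from the example values, not a statement about the implementation.

```python
import statistics

values = [600, 470, 170, 430, 300]

variance = statistics.pvariance(values)  # population variance
std_dev = statistics.pstdev(values)      # population standard deviation
```

Here the mean is 394, the squared deviations sum to 108520, and dividing by the 5 values gives 21704.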

3.1.2 - Cast Functions

To Boolean

Attempts to convert the passed value to a boolean data type.

toBoolean(arg1)

Examples:

toBoolean(1)

> true

toBoolean(0)

> false

toBoolean('true')

> true

toBoolean('false')

> false

To Double

Attempts to convert the passed value to a double data type.

toDouble(arg1)

Examples:

toDouble('1.2')

> 1.2

To Integer

Attempts to convert the passed value to an integer data type.

toInteger(arg1)

Examples:

toInteger('1')

> 1

To Long

Attempts to convert the passed value to a long data type.

toLong(arg1)

Examples:

toLong('1')

> 1

To String

Attempts to convert the passed value to a string data type.

toString(arg1)

Examples:

toString(1.2)

> '1.2'

3.1.3 - Date Functions

Parse Date

Parse a date and return a long number of milliseconds since the epoch.

parseDate(aString)

parseDate(aString, pattern)

parseDate(aString, pattern, timeZone)

Example

parseDate('2014 02 22', 'yyyy MM dd', '+0400')

> 1393012800000

Format Date

Format a date supplied as milliseconds since the epoch.

formatDate(aLong)

formatDate(aLong, pattern)

formatDate(aLong, pattern, timeZone)

Example

formatDate(1393071132888, 'yyyy MM dd', '+1200')

> '2014 02 23'
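The arithmetic behind the two examples above can be reproduced with Python's datetime module. This is a sketch of the calculation only: the helper names are hypothetical, the patterns use Python's strptime syntax rather than the pattern syntax shown above, and the time zone is passed as a whole number of hours.

```python
from datetime import datetime, timedelta, timezone


def parse_date_ms(text: str, pattern: str, offset_hours: int) -> int:
    """Parse `text` as local time at a fixed UTC offset; return epoch millis."""
    tz = timezone(timedelta(hours=offset_hours))
    local = datetime.strptime(text, pattern).replace(tzinfo=tz)
    return int(local.timestamp() * 1000)


def format_date_ms(millis: int, pattern: str, offset_hours: int) -> str:
    """Format epoch millis as seen from a fixed UTC offset."""
    tz = timezone(timedelta(hours=offset_hours))
    return datetime.fromtimestamp(millis / 1000, tz).strftime(pattern)


parsed = parse_date_ms("2014 02 22", "%Y %m %d", 4)
formatted = format_date_ms(1393071132888, "%Y %m %d", 12)
```

Midnight on 22 February at +0400 is 20:00 UTC on the 21st, which is why the parsed value is four hours earlier than midnight UTC. Likewise 12:12 UTC on the 22nd falls on the 23rd when viewed at +1200.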

Ceiling Year/Month/Day/Hour/Minute/Second

ceilingYear(args...)

ceilingMonth(args...)

ceilingDay(args...)

ceilingHour(args...)

ceilingMinute(args...)

ceilingSecond(args...)

Examples

ceilingSecond("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:12:13.000Z"

ceilingMinute("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:13:00.000Z"

ceilingHour("2014-02-22T12:12:12.888Z")

> "2014-02-22T13:00:00.000Z"

ceilingDay("2014-02-22T12:12:12.888Z")

> "2014-02-23T00:00:00.000Z"

ceilingMonth("2014-02-22T12:12:12.888Z")

> "2014-03-01T00:00:00.000Z"

ceilingYear("2014-02-22T12:12:12.888Z")

> "2015-01-01T00:00:00.000Z"

Floor Year/Month/Day/Hour/Minute/Second

floorYear(args...)

floorMonth(args...)

floorDay(args...)

floorHour(args...)

floorMinute(args...)

floorSecond(args...)

Examples

floorSecond("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:12:12.000Z"

floorMinute("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:12:00.000Z"

floorHour("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:00:00.000Z"

floorDay("2014-02-22T12:12:12.888Z")

> "2014-02-22T00:00:00.000Z"

floorMonth("2014-02-22T12:12:12.888Z")

> "2014-02-01T00:00:00.000Z"

floorYear("2014-02-22T12:12:12.888Z")

> "2014-01-01T00:00:00.000Z"

Round Year/Month/Day/Hour/Minute/Second

roundYear(args...)

roundMonth(args...)

roundDay(args...)

roundHour(args...)

roundMinute(args...)

roundSecond(args...)

Examples

roundSecond("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:12:13.000Z"

roundMinute("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:12:00.000Z"

roundHour("2014-02-22T12:12:12.888Z")

> "2014-02-22T12:00:00.000Z"

roundDay("2014-02-22T12:12:12.888Z")

> "2014-02-23T00:00:00.000Z"

roundMonth("2014-02-22T12:12:12.888Z")

> "2014-03-01T00:00:00.000Z"

roundYear("2014-02-22T12:12:12.888Z")

> "2014-01-01T00:00:00.000Z"
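For the fixed-length units (second, minute, hour, day) the ceiling, floor and round behaviour shown above is integer arithmetic on milliseconds since the epoch. The sketch below reproduces the example outputs under that assumption, working in UTC. The helper names are hypothetical, and month and year are left out because their lengths vary.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
UNIT_MS = {"second": 1_000, "minute": 60_000,
           "hour": 3_600_000, "day": 86_400_000}


def to_millis(iso: str) -> int:
    """Convert an ISO 8601 UTC timestamp with milliseconds to epoch millis."""
    dt = datetime.strptime(iso, "%Y-%m-%dT%H:%M:%S.%fZ").replace(tzinfo=timezone.utc)
    return (dt - EPOCH) // timedelta(milliseconds=1)


def to_iso(millis: int) -> str:
    """Convert epoch millis back to the timestamp format used above."""
    dt = EPOCH + timedelta(milliseconds=millis)
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def floor_to(iso: str, unit: str) -> str:
    size = UNIT_MS[unit]
    return to_iso(to_millis(iso) // size * size)


def ceiling_to(iso: str, unit: str) -> str:
    size = UNIT_MS[unit]
    return to_iso(-(-to_millis(iso) // size) * size)  # round up via negated floor


def round_to(iso: str, unit: str) -> str:
    size = UNIT_MS[unit]
    return to_iso((to_millis(iso) + size // 2) // size * size)  # half rounds up
```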

3.1.4 - Link Functions

Annotation

A helper function to make forming links to annotations easier than using Link. The Annotation function allows you to create a link to open the Annotation editor, either to view an existing annotation or to begin creating one with pre-populated values.

annotation(text, annotationId)

annotation(text, annotationId, [streamId, eventId, title, subject, status, assignedTo, comment])

If you provide just the text and an annotationId then it will produce a link that opens an existing annotation with the supplied ID in the Annotation Edit dialog.

Example

annotation('Open annotation', ${annotation:Id})

> [Open annotation](?annotationId=1234){annotation}

annotation('Create annotation', '', ${StreamId}, ${EventId})

> [Create annotation](?annotationId=&streamId=1234&eventId=45){annotation}

annotation('Escalate', '', ${StreamId}, ${EventId}, 'Escalation', 'Triage required')

> [Escalate](?annotationId=&streamId=1234&eventId=45&title=Escalation&subject=Triage%20required){annotation}

If you don’t supply an annotationId then the link will open the Annotation Edit dialog pre-populated with the optional arguments so that an annotation can be created.

If the annotationId is not provided then you must provide a streamId and an eventId.

If you don’t need to pre-populate a value then you can use '' or null() instead.

Example

annotation('Create suspect event annotation', null(), 123, 456, 'Suspect Event', null(), 'assigned', 'jbloggs')

> [Create suspect event annotation](?streamId=123&eventId=456&title=Suspect%20Event&status=assigned&assignedTo=jbloggs){annotation}

Dashboard

A helper function to make forming links to dashboards easier than using Link.

dashboard(text, uuid)

dashboard(text, uuid, params)

Example

dashboard('Click Here','e177cf16-da6c-4c7d-a19c-09a201f5a2da')

> [Click Here](?uuid=e177cf16-da6c-4c7d-a19c-09a201f5a2da){dashboard}

dashboard('Click Here','e177cf16-da6c-4c7d-a19c-09a201f5a2da', 'userId=user1')

> [Click Here](?uuid=e177cf16-da6c-4c7d-a19c-09a201f5a2da&params=userId%3Duser1){dashboard}

Data

Creates a link to open a source for data for viewing.

data(text, id, partNo, [recordNo, lineFrom, colFrom, lineTo, colTo, viewType, displayType])

viewType can be one of:

- preview: Display the data as a formatted preview of a limited portion of the data.

- source: Display the un-formatted data in its original form with the ability to navigate around all of the data source.

displayType can be one of:

- dialog: Open as a modal popup dialog.

- tab: Open as a top level tab within the Stroom browser tab.

Example

data('Quick View', ${StreamId}, 1)

> [Quick View](?id=1234&partNo=1)

Link

Create a string that represents a hyperlink for display in a dashboard table.

link(url)

link(text, url)

link(text, url, type)

Example

link('http://www.somehost.com/somepath')

> [http://www.somehost.com/somepath](http://www.somehost.com/somepath)

link('Click Here','http://www.somehost.com/somepath')

> [Click Here](http://www.somehost.com/somepath)

link('Click Here','http://www.somehost.com/somepath', 'dialog')

> [Click Here](http://www.somehost.com/somepath){dialog}

link('Click Here','http://www.somehost.com/somepath', 'dialog|Dialog Title')

> [Click Here](http://www.somehost.com/somepath){dialog|Dialog Title}

Type can be one of:

- dialog: Display the content of the link URL within a stroom popup dialog.

- tab: Display the content of the link URL within a stroom tab.

- browser: Display the content of the link URL within a new browser tab.

- dashboard: Used to launch a stroom dashboard internally with parameters in the URL.

If you wish to override the default title or URL of the target link in either a tab or dialog you can. Both dialog and tab types allow titles to be specified after a |, e.g. dialog|My Title.
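The strings produced by the link functions follow a `[text](url){type}` shape. The sketch below rebuilds two of the example outputs to show how the pieces fit together. The Python function names are hypothetical, and the sketch assumes the dashboard parameters are carried in a `params` query key and percent-encoded, as the example output suggests.

```python
from urllib.parse import quote


def link(text: str, url: str, link_type: str = "") -> str:
    """Build the `[text](url){type}` string shown in the examples."""
    suffix = f"{{{link_type}}}" if link_type else ""
    return f"[{text}]({url}){suffix}"


def dashboard(text: str, uuid: str, params: str = "") -> str:
    """Mirror the dashboard() examples: params are percent-encoded."""
    query = f"?uuid={uuid}"
    if params:
        query += f"&params={quote(params, safe='')}"
    return link(text, query, "dashboard")
```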

Stepping

Open the Stepping tab for the requested data source.

stepping(text, id)

stepping(text, id, partNo)

stepping(text, id, partNo, recordNo)

Example

stepping('Click here to step',${StreamId})

> [Click here to step](?id=1)

3.1.5 - Logic Functions

Equals

Evaluates if arg1 is equal to arg2

arg1 = arg2

equals(arg1, arg2)

Examples

'foo' = 'bar'

> false

'foo' = 'foo'

> true

51 = 50

> false

50 = 50

> true

equals('foo', 'bar')

> false

equals('foo', 'foo')

> true

equals(51, 50)

> false

equals(50, 50)

> true

Note that equals cannot be applied to null and error values, e.g. x=null() or x=err(). The isNull() and isError() functions must be used instead.

Greater Than

Evaluates if arg1 is greater than arg2

arg1 > arg2

greaterThan(arg1, arg2)

Examples

51 > 50

> true

50 > 50

> false

49 > 50

> false

greaterThan(51, 50)

> true

greaterThan(50, 50)

> false

greaterThan(49, 50)

> false

Greater Than or Equal To

Evaluates if arg1 is greater than or equal to arg2

arg1 >= arg2

greaterThanOrEqualTo(arg1, arg2)

Examples

51 >= 50

> true

50 >= 50

> true

49 >= 50

> false

greaterThanOrEqualTo(51, 50)

> true

greaterThanOrEqualTo(50, 50)

> true

greaterThanOrEqualTo(49, 50)

> false

If

Evaluates the supplied boolean condition and returns one value if true or another if false

if(expression, trueReturnValue, falseReturnValue)

Examples

if(5 < 10, 'foo', 'bar')

> 'foo'

if(5 > 10, 'foo', 'bar')

> 'bar'

if(isNull(null()), 'foo', 'bar')

> 'foo'

Less Than

Evaluates if arg1 is less than arg2

arg1 < arg2

lessThan(arg1, arg2)

Examples

51 < 50

> false

50 < 50

> false

49 < 50

> true

lessThan(51, 50)

> false

lessThan(50, 50)

> false

lessThan(49, 50)

> true

Less Than or Equal To

Evaluates if arg1 is less than or equal to arg2

arg1 <= arg2

lessThanOrEqualTo(arg1, arg2)

Examples

51 <= 50

> false

50 <= 50

> true

49 <= 50

> true

lessThanOrEqualTo(51, 50)

> false

lessThanOrEqualTo(50, 50)

> true

lessThanOrEqualTo(49, 50)

> true

Not

Inverts a boolean value, so that true becomes false and false becomes true.

not(booleanValue)

Examples

not(5 > 10)

> true

not(5 = 5)

> false

not(false())

> true

3.1.6 - Mathematics Functions

Add

arg1 + arg2

Or reduce the args by successive addition

add(args...)

Examples

34 + 9

> 43

add(45, 6, 72)

> 123

Average

Takes an average value of the arguments

average(args...)

mean(args...)

Examples

average(10, 20, 30, 40)

> 25

mean(8.9, 24, 1.2, 1008)

> 260.525

Divide

Divides arg1 by arg2

arg1 / arg2

Or reduce the args by successive division

divide(args...)

Examples

42 / 7

> 6

divide(1000, 10, 5, 2)

> 10

divide(100, 4, 3)

> 8.33

Max

Determines the maximum value given in the args

max(args...)

Examples

max(100, 30, 45, 109)

> 109

# They can be nested

max(max(${val}), 40, 67, 89)

${val} = [20, 1002]

> 1002

Min

Determines the minimum value given in the args

min(args...)

Examples

min(100, 30, 45, 109)

> 30

They can be nested

min(max(${val}), 40, 67, 89)

${val} = [20, 1002]

> 40

Modulo

Determines the modulus of the dividend divided by the divisor.

modulo(dividend, divisor)

Examples

modulo(100, 30)

> 10

Multiply

Multiplies arg1 by arg2

arg1 * arg2

Or reduce the args by successive multiplication

multiply(args...)

Examples

4 * 5

> 20

multiply(4, 5, 2, 6)

> 240

Negate

Multiplies arg1 by -1

negate(arg1)

Examples

negate(80)

> -80

negate(23.33)

> -23.33

negate(-9.5)

> 9.5

Power

Raises arg1 to the power arg2

arg1 ^ arg2

Or reduce the args by successive raising to the power

power(args...)

Examples

4 ^ 3

> 64

power(2, 4, 3)

> 4096

Random

Generates a random number between 0.0 and 1.0

random()

Examples

random()

> 0.78

random()

> 0.89

...you get the idea

Subtract

arg1 - arg2

Or reduce the args by successive subtraction

subtract(args...)

Examples

29 - 8

> 21

subtract(100, 20, 34, 2)

> 44
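"Successive" reduction means the operator is applied left to right, carrying the running result forward. The sketch below expresses this with a left fold and reproduces the divide, power and subtract examples above. It illustrates the evaluation order only and is not Stroom's implementation.

```python
from functools import reduce
import operator


def divide(*args):
    # ((a / b) / c) / ...
    return reduce(operator.truediv, args)


def subtract(*args):
    # ((a - b) - c) - ...
    return reduce(operator.sub, args)


def power(*args):
    # ((a ^ b) ^ c) ^ ...
    return reduce(operator.pow, args)
```

Note that the order matters for power: folding from the left gives (2^4)^3 = 4096, whereas a right-to-left evaluation would give 2^(4^3).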

Sum

Sums all the arguments together

sum(args...)

Examples

sum(89, 12, 3, 45)

> 149

3.1.7 - Rounding Functions

These functions require a value and take an optional number of decimal places. If the number of decimal places is not given, the result is a whole number.

Ceiling

ceiling(value, decimalPlaces<optional>)

Examples

ceiling(8.4234)

> 9

ceiling(4.56, 1)

> 4.6

ceiling(1.22345, 3)

> 1.224

Floor

floor(value, decimalPlaces<optional>)

Examples

floor(8.4234)

> 8

floor(4.56, 1)

> 4.5

floor(1.2237, 3)

> 1.223

Round

round(value, decimalPlaces<optional>)

Examples

round(8.4234)

> 8

round(4.56, 1)

> 4.6

round(1.2237, 3)

> 1.224
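The effect of the decimal places argument can be sketched with Python's decimal module, which avoids binary floating point surprises. The function names are hypothetical, and rounding halves upwards is an assumption: the examples above do not include a tie, so they do not show which tie-breaking rule Stroom uses.

```python
from decimal import Decimal, ROUND_CEILING, ROUND_FLOOR, ROUND_HALF_UP


def _quantize(value, places, mode):
    step = Decimal(1).scaleb(-places)  # e.g. places=2 -> 0.01
    return Decimal(str(value)).quantize(step, rounding=mode)


def ceiling(value, places=0):
    return _quantize(value, places, ROUND_CEILING)


def floor(value, places=0):
    return _quantize(value, places, ROUND_FLOOR)


def round_half_up(value, places=0):
    return _quantize(value, places, ROUND_HALF_UP)
```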

3.1.8 - Selection Functions

Selection functions are a form of aggregate function operating on grouped data.

Any

Selects the first value found in the group that is not null() or err().

If no explicit ordering is set then the value selected is indeterminate.

any(${val})

Examples

any(${val})

${val} = [10, 20, 30, 40]

> 10

Bottom

Selects the bottom N values and returns them as a delimited string in the order they are read.

bottom(${val}, delimiter, limit)

Examples

bottom(${val}, ', ', 2)

${val} = [10, 20, 30, 40]

> '30, 40'

First

Selects the first value found in the group even if it is null() or err().

If no explicit ordering is set then the value selected is indeterminate.

first(${val})

Examples

first(${val})

${val} = [10, 20, 30, 40]

> 10

Last

Selects the last value found in the group even if it is null() or err().

If no explicit ordering is set then the value selected is indeterminate.

last(${val})

Examples

last(${val})

${val} = [10, 20, 30, 40]

> 40

Nth

Selects the Nth value in a set of grouped values. If there is no explicit ordering on the field selected then the value returned is indeterminate.

nth(${val}, position)

Examples

nth(${val}, 2)

${val} = [20, 40, 30, 10]

> 40

Top

Selects the top N values and returns them as a delimited string in the order they are read.

top(${val}, delimiter, limit)

Examples

top(${val}, ', ', 2)

${val} = [10, 20, 30, 40]

> '10, 20'
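Going by the examples above, top and bottom take the first and last N values in the order they are read and join them with the delimiter. A minimal sketch under that reading follows; the Python functions are illustrative only.

```python
def top(values, delimiter, limit):
    """Join the first `limit` values in read order."""
    return delimiter.join(str(v) for v in values[:limit])


def bottom(values, delimiter, limit):
    """Join the last `limit` values in read order."""
    start = max(len(values) - limit, 0)
    return delimiter.join(str(v) for v in values[start:])
```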

3.1.9 - String Functions

Concat

Appends all the arguments end to end in a single string

concat(args...)

Example

concat('this ', 'is ', 'how ', 'it ', 'works')

> 'this is how it works'

Current User

Returns the username of the user running the query.

currentUser()

Example

currentUser()

> 'jbloggs'

Decode

The arguments are split into 3 parts

- The input value to test

- Pairs of regex matchers with their respective output value

- A default result, if the input doesn’t match any of the regexes

decode(input, test1, result1, test2, result2, ... testN, resultN, otherwise)

It works much like a Java Switch/Case statement

Example

decode(${val}, 'red', 'rgb(255, 0, 0)', 'green', 'rgb(0, 255, 0)', 'blue', 'rgb(0, 0, 255)', 'rgb(255, 255, 255)')

${val}='blue'

> rgb(0, 0, 255)

${val}='green'

> rgb(0, 255, 0)

${val}='brown'

> rgb(255, 255, 255) // falls back to the 'otherwise' value

In Java, this would be equivalent to

// Maps a colour name to its RGB string, with white as the fallback.
String decode(String value) {
    switch (value) {
        case "red":
            return "rgb(255, 0, 0)";
        case "green":
            return "rgb(0, 255, 0)";
        case "blue":
            return "rgb(0, 0, 255)";
        default:
            return "rgb(255, 255, 255)";
    }
}

decode('red')

> 'rgb(255, 0, 0)'
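Because the tests are regular expressions rather than fixed strings, a closer analogy than a switch statement is a loop over pattern/result pairs. The sketch below shows that shape. It assumes each pattern must match the whole input, which the examples above do not confirm, so treat the matching rule as an assumption.

```python
import re


def decode(value, *args):
    """args = test1, result1, ..., testN, resultN, otherwise."""
    if len(args) % 2 == 0:
        raise ValueError("expected test/result pairs followed by a default")
    *pairs, otherwise = args
    for pattern, result in zip(pairs[0::2], pairs[1::2]):
        if re.fullmatch(pattern, value):  # assumption: whole-input match
            return result
    return otherwise


COLOURS = ("red", "rgb(255, 0, 0)",
           "green", "rgb(0, 255, 0)",
           "blue", "rgb(0, 0, 255)",
           "rgb(255, 255, 255)")
```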

DecodeUrl

Decodes a URL

decodeUrl('userId%3Duser1')

> userId=user1

EncodeUrl

Encodes a URL

encodeUrl('userId=user1')

> userId%3Duser1

Exclude

If the supplied string matches one of the supplied match strings then return null, otherwise return the supplied string

exclude(aString, match...)

Example

exclude('hello', 'hello', 'hi')

> null

exclude('hi', 'hello', 'hi')

> null

exclude('bye', 'hello', 'hi')

> 'bye'

Hash

Cryptographically hashes a string

hash(value)

hash(value, algorithm)

hash(value, algorithm, salt)

Example

hash(${val}, 'SHA-512', 'mysalt')

> A hashed result...

If no algorithm is specified, the hash() function will use the SHA-256 algorithm. Supported algorithms are determined by the Java runtime environment.

Include

If the supplied string matches one of the supplied match strings then return it, otherwise return null

include(aString, match...)

Example

include('hello', 'hello', 'hi')

> 'hello'

include('hi', 'hello', 'hi')

> 'hi'

include('bye', 'hello', 'hi')

> null

Index Of

Finds the first position of the second string within the first

indexOf(firstString, secondString)

Example

indexOf('aa-bb-cc', '-')

> 2

Last Index Of

Finds the last position of the second string within the first

lastIndexOf(firstString, secondString)

Example

lastIndexOf('aa-bb-cc', '-')

> 5

Lower Case

Converts the string to lower case

lowerCase(aString)

Example

lowerCase('Hello DeVeLoPER')

> 'hello developer'

Match

Test an input string using a regular expression to see if it matches

match(input, regex)

Example

match('this', 'this')

> true

match('this', 'that')

> false

Query Param

Returns the value of the requested query parameter.

queryParam(paramKey)

Examples

queryParam('user')

> 'jbloggs'

Query Params

Returns all query parameters as a space delimited string.

queryParams()

Examples

queryParams()

> 'user=jbloggs site=HQ'

Replace

Perform text replacement on an input string using a regular expression to match part (or all) of the input string and a replacement string to insert in place of the matched part

replace(input, regex, replacement)

Example

replace('this', 'is', 'at')

> 'that'

String Length

Takes the length of a string

stringLength(aString)

Example

stringLength('hello')

> 5

Substring

Take a substring based on start/end index of letters

substring(aString, startIndex, endIndex)

Example

substring('this', 1, 2)

> 'h'

Substring After

Get the substring from the first string that occurs after the presence of the second string

substringAfter(firstString, secondString)

Example

substringAfter('aa-bb', '-')

> 'bb'

Substring Before

Get the substring from the first string that occurs before the presence of the second string

substringBefore(firstString, secondString)

Example

substringBefore('aa-bb', '-')

> 'aa'
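The index-based examples above behave like zero-based indexing with an exclusive end index. The Python equivalents below reproduce the documented outputs. What Stroom returns when the second string is absent is not stated here, so the behaviour of this sketch in that case (an empty string for "after", the whole string for "before") is only an artefact of the Python code.

```python
def substring(s, start, end):
    return s[start:end]            # zero-based start, exclusive end


def substring_after(s, marker):
    return s.partition(marker)[2]  # text after the first occurrence


def substring_before(s, marker):
    return s.partition(marker)[0]  # text before the first occurrence


first_dash = "aa-bb-cc".find("-")   # like indexOf
last_dash = "aa-bb-cc".rfind("-")   # like lastIndexOf
```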

Upper Case

Converts the string to upper case

upperCase(aString)

Example

upperCase('Hello DeVeLoPER')

> 'HELLO DEVELOPER'

3.1.10 - Type Checking Functions

Is Boolean

Checks if the passed value is a boolean data type.

isBoolean(arg1)

Examples:

isBoolean(toBoolean('true'))

> true

Is Double

Checks if the passed value is a double data type.

isDouble(arg1)

Examples:

isDouble(toDouble('1.2'))

> true

Is Error

Checks if the passed value is an error caused by an invalid evaluation of an expression on passed values, e.g. some values passed to an expression could result in a divide by 0 error.

Note that this method must be used to check for error as error equality using x=err() is not supported.

isError(arg1)

Examples:

isError(toLong('1'))

> false

isError(err())

> true

Is Integer

Checks if the passed value is an integer data type.

isInteger(arg1)

Examples:

isInteger(toInteger('1'))

> true

Is Long

Checks if the passed value is a long data type.

isLong(arg1)

Examples:

isLong(toLong('1'))

> true

Is Null

Checks if the passed value is null.

Note that this method must be used to check for null as null equality using x=null() is not supported.

isNull(arg1)

Examples:

isNull(toLong('1'))

> false

isNull(null())

> true

Is Number

Checks if the passed value is a numeric data type.

isNumber(arg1)

Examples:

isNumber(toLong('1'))

> true

Is String

Checks if the passed value is a string data type.

isString(arg1)

Examples:

isString(toString(1.2))

> true

Is Value

Checks if the passed value is a value data type, i.e. not null or error.

isValue(arg1)

Examples:

isValue(toLong('1'))

> true

isValue(null())

> false

Type Of

Returns the data type of the passed value as a string.

typeOf(arg1)

Examples:

typeOf('abc')

> string

typeOf(toInteger(123))

> integer

typeOf(err())

> error

typeOf(null())

> null

typeOf(toBoolean('false'))

> boolean

3.1.11 - URI Functions

Fields containing a Uniform Resource Identifier (URI) in string form can be queried to extract the URI's individual components: authority, fragment, host, path, port, query, scheme, schemeSpecificPart and userInfo. See either RFC 2396: Uniform Resource Identifiers (URI): Generic Syntax or Java's java.net.URI class for details regarding the components. If any component is not present within the passed URI, then an empty string is returned.

The extraction functions are

- extractAuthorityFromUri() - extract the Authority component

- extractFragmentFromUri() - extract the Fragment component

- extractHostFromUri() - extract the Host component

- extractPathFromUri() - extract the Path component

- extractPortFromUri() - extract the Port component

- extractQueryFromUri() - extract the Query component

- extractSchemeFromUri() - extract the Scheme component

- extractSchemeSpecificPartFromUri() - extract the Scheme specific part component

- extractUserInfoFromUri() - extract the UserInfo component

If the URI is http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details the table below displays the extracted components

| Expression | Extraction |

|---|---|

| extractAuthorityFromUri(${URI}) | foo:bar@w1.superman.com:8080 |

| extractFragmentFromUri(${URI}) | more-details |

| extractHostFromUri(${URI}) | w1.superman.com |

| extractPathFromUri(${URI}) | /very/long/path.html |

| extractPortFromUri(${URI}) | 8080 |

| extractQueryFromUri(${URI}) | p1=v1&p2=v2 |

| extractSchemeFromUri(${URI}) | http |

| extractSchemeSpecificPartFromUri(${URI}) | //foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2 |

| extractUserInfoFromUri(${URI}) | foo:bar |

extractAuthorityFromUri

Extracts the Authority component from a URI

extractAuthorityFromUri(uri)

Example

extractAuthorityFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'foo:bar@w1.superman.com:8080'

extractFragmentFromUri

Extracts the Fragment component from a URI

extractFragmentFromUri(uri)

Example

extractFragmentFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'more-details'

extractHostFromUri

Extracts the Host component from a URI

extractHostFromUri(uri)

Example

extractHostFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'w1.superman.com'

extractPathFromUri

Extracts the Path component from a URI

extractPathFromUri(uri)

Example

extractPathFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> '/very/long/path.html'

extractPortFromUri

Extracts the Port component from a URI

extractPortFromUri(uri)

Example

extractPortFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> '8080'

extractQueryFromUri

Extracts the Query component from a URI

extractQueryFromUri(uri)

Example

extractQueryFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'p1=v1&p2=v2'

extractSchemeFromUri

Extracts the Scheme component from a URI

extractSchemeFromUri(uri)

Example

extractSchemeFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'http'

extractSchemeSpecificPartFromUri

Extracts the SchemeSpecificPart component from a URI

extractSchemeSpecificPartFromUri(uri)

Example

extractSchemeSpecificPartFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> '//foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2'

extractUserInfoFromUri

Extracts the UserInfo component from a URI

extractUserInfoFromUri(uri)

Example

extractUserInfoFromUri('http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details')

> 'foo:bar'
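For readers who want to check the expected components outside Stroom, the following is a minimal sketch using Python's standard `urllib.parse` module. It is not how Stroom implements these functions; the helper name `uri_components` and the dictionary keys are assumptions made for illustration. Note that Python returns the port as an integer rather than a string.

```python
from urllib.parse import urlsplit


def uri_components(uri):
    """Split a URI into the components named in the table above (illustrative only)."""
    parts = urlsplit(uri)
    # User info is whatever precedes the last '@' in the authority, if present.
    user_info = parts.netloc.rpartition("@")[0] if "@" in parts.netloc else None
    # Scheme specific part: everything after 'scheme:' and before the fragment.
    scheme_specific = uri.split(":", 1)[1].split("#", 1)[0]
    return {
        "authority": parts.netloc,
        "fragment": parts.fragment,
        "host": parts.hostname,
        "path": parts.path,
        "port": parts.port,
        "query": parts.query,
        "scheme": parts.scheme,
        "schemeSpecificPart": scheme_specific,
        "userInfo": user_info,
    }


components = uri_components(
    "http://foo:bar@w1.superman.com:8080/very/long/path.html?p1=v1&p2=v2#more-details"
)
for name, value in components.items():
    print(name, "=", value)
```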

3.1.12 - Value Functions

Err

Returns err

err()

False

Returns boolean false

false()

Null

Returns null

null()

True

Returns boolean true

true()

3.2 - Dictionaries

Creating

Right click on a folder in the explorer tree that you want to create a dictionary in. Choose ‘New/Dictionary’ from the popup menu:

TODO: Fix image

Call the dictionary something like ‘My Dictionary’ and click OK.

TODO: Fix image

Now just add any search terms you want to the newly created dictionary and click save.

TODO: Fix image

You can add multiple terms.

- Terms on separate lines act as if they are part of an ‘OR’ expression when used in a search.

- Terms on a single line separated by spaces act as if they are part of an ‘AND’ expression when used in a search.
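The OR/AND behaviour described above can be sketched as follows. This is an illustration of the logic only, not Stroom's search implementation: the function name `dictionary_matches` is hypothetical, and plain substring containment is assumed as the meaning of a term "matching".

```python
def dictionary_matches(dictionary_text, candidate):
    """Return True if any line of the dictionary matches the candidate text.

    Lines are OR'ed together; space separated terms on one line are AND'ed.
    Matching is assumed to be simple substring containment for this sketch.
    """
    for line in dictionary_text.splitlines():
        terms = line.split()
        if terms and all(term in candidate for term in terms):
            return True
    return False


dictionary = "error timeout\nfailure"
print(dictionary_matches(dictionary, "connection timeout error"))  # both terms of line 1
print(dictionary_matches(dictionary, "disk failure"))              # the term on line 2
print(dictionary_matches(dictionary, "timeout only"))              # no complete line
```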

Using

To perform a search using your dictionary, just choose the newly created dictionary as part of your search expression:

TODO: Fix image

3.3 - Direct URLs

It is possible to navigate directly to a specific Stroom dashboard using a direct URL. This can be useful when you have a dashboard that needs to be viewed by users that would otherwise not be using the Stroom user interface.

URL format

The format for the URL is as follows:

https://<HOST>/stroom/dashboard?type=Dashboard&uuid=<DASHBOARD UUID>[&title=<DASHBOARD TITLE>][&params=<DASHBOARD PARAMETERS>]

Example:

https://localhost/stroom/dashboard?type=Dashboard&uuid=c7c6b03c-5d47-4b8b-b84e-e4dfc6c84a09&title=My%20Dash&params=userId%3DFred%20Bloggs

Host and path

The host and path are typically https://<HOST>/stroom/dashboard where <HOST> is the hostname/IP for Stroom.

type

type is a required parameter and must always be Dashboard since we are opening a dashboard.

uuid

uuid is a required parameter where <DASHBOARD UUID> is the UUID for the dashboard you want a direct URL to, e.g. uuid=c7c6b03c-5d47-4b8b-b84e-e4dfc6c84a09

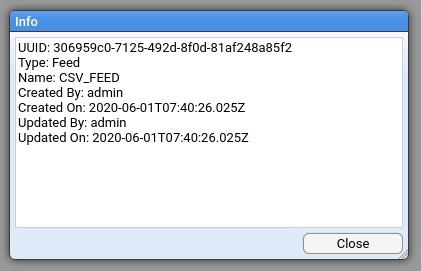

The UUID for the dashboard that you want to link to can be found by right clicking on the dashboard icon in the explorer tree and selecting Info.

The Info dialog will display something like this and the UUID can be copied from it:

DB ID: 4

UUID: c7c6b03c-5d47-4b8b-b84e-e4dfc6c84a09

Type: Dashboard

Name: Stroom Family App Events Dashboard

Created By: INTERNAL

Created On: 2018-12-10T06:33:03.275Z

Updated By: admin

Updated On: 2018-12-10T07:47:06.841Z

title (Optional)

title is an optional URL parameter where <DASHBOARD TITLE> allows the specification of a specific title for the opened dashboard instead of the default dashboard name.

The inclusion of ${name} in the title allows the default dashboard name to be used and appended with other values, e.g. 'title=${name}%20-%20' + param.name

params (Optional)

params is an optional URL parameter where <DASHBOARD PARAMETERS> includes any parameters that have been defined for the dashboard in any of the expressions, e.g. params=userId%3DFred%20Bloggs
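Because the title and params values must be URL encoded, it can be convenient to build the URL programmatically. The following is a minimal sketch using Python's standard library; the helper name `dashboard_url` is an assumption for illustration, and the params value is passed as a single pre-composed string because this guide does not describe how multiple parameters are combined.

```python
from urllib.parse import quote, urlencode


def dashboard_url(host, uuid, title=None, params=None):
    """Build a direct dashboard URL in the format described above (illustrative)."""
    query = {"type": "Dashboard", "uuid": uuid}
    if title is not None:
        query["title"] = title
    if params is not None:
        query["params"] = params
    # quote_via=quote encodes spaces as %20 rather than '+'.
    return f"https://{host}/stroom/dashboard?" + urlencode(query, quote_via=quote)


print(
    dashboard_url(
        "localhost",
        "c7c6b03c-5d47-4b8b-b84e-e4dfc6c84a09",
        title="My Dash",
        params="userId=Fred Bloggs",
    )
)
```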

Permissions

In order for a user to view a dashboard they will need the necessary permissions on the various entities that make up the dashboard.

For a Lucene index query and associated table the following permissions will be required:

- Read permission on the Dashboard entity.

- Use permission on any Index entities being queried in the dashboard.

- Use permission on any Pipeline entities set as search extraction Pipelines in any of the dashboard’s tables.

- Use permission on any XSLT entities used by the above search extraction Pipeline entities.

- Use permission on any ancestor pipelines of any of the above search extraction Pipeline entities (if applicable).

- Use permission on any Feed entities that you want the user to be able to see data for.

For a SQL Statistics query and associated table the following permissions will be required:

- Read permission on the Dashboard entity.

- Use permission on the StatisticStore entity being queried.

For a visualisation the following permissions will be required:

- Read permission on any Visualisation entities used in the dashboard.

- Read permission on any Script entities used by the above Visualisation entities.

- Read permission on any Script entities used by the above Script entities.

3.4 - Queries

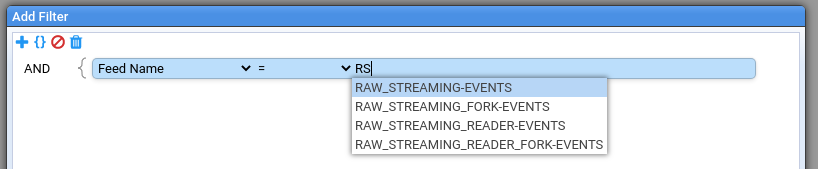

Dashboard queries are created with the query expression builder. The expression builder allows for complex boolean logic to be created across multiple index fields. The way in which different index fields may be queried depends on the type of data that the index field contains.

Date Time Fields

Time fields can be queried for times that are equal to, greater than, greater than or equal to, less than, or less than or equal to a given time, or that fall between two times.

Times can be specified in two ways:

- Absolute times

- Relative times

Absolute Times

An absolute time is specified in ISO 8601 date time format, e.g. 2016-01-23T12:34:11.844Z

Relative Times

In addition to absolute times it is possible to specify times using expressions. Relative time expressions create a date time that is relative to the execution time of the query. Supported expressions are as follows:

- now() - The current execution time of the query.

- second() - The current execution time of the query rounded down to the nearest second.

- minute() - The current execution time of the query rounded down to the nearest minute.

- hour() - The current execution time of the query rounded down to the nearest hour.

- day() - The current execution time of the query rounded down to the nearest day.

- week() - The current execution time of the query rounded down to the first day of the week (Monday).

- month() - The current execution time of the query rounded down to the start of the current month.

- year() - The current execution time of the query rounded down to the start of the current year.

Adding/Subtracting Durations

With relative times it is possible to add or subtract durations so that queries can be constructed to provide, for example, the last week of data or the last hour of data.

To add/subtract a duration from a query term the duration is simply appended after the relative time, e.g.

now() + 2d

Multiple durations can be combined in the expression, e.g.

now() + 2d - 10h

now() + 2w - 1d10h

Durations consist of a number and duration unit. Supported duration units are:

- s - Seconds

- m - Minutes

- h - Hours

- d - Days

- w - Weeks

- M - Months

- y - Years

Using these durations a query to get the last week’s data could be as follows:

between now() - 1w and now()

Or midnight a week ago to midnight today:

between day() - 1w and day()

Or if you just wanted data for the week so far:

greater than week()

Or all data for the previous year:

between year() - 1y and year()

Or this year so far:

greater than year()
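To make the rounding and duration arithmetic concrete, the following is a minimal Python sketch that evaluates relative time expressions against a fixed execution time. It is not Stroom's parser: the function names `floor_time` and `evaluate` are hypothetical, only the s, m, h, d and w units are handled (months and years need calendar arithmetic and are rejected here), and it assumes that the sign in front of a compound duration such as 1d10h applies to every part of it.

```python
import re
from datetime import datetime, timedelta, timezone

# Duration units handled by this sketch, mapped to timedelta keyword names.
UNITS = {"s": "seconds", "m": "minutes", "h": "hours", "d": "days", "w": "weeks"}


def floor_time(name, now):
    """Round the execution time down as described for each relative time function."""
    if name == "now":
        return now
    if name == "second":
        return now.replace(microsecond=0)
    if name == "minute":
        return now.replace(second=0, microsecond=0)
    if name == "hour":
        return now.replace(minute=0, second=0, microsecond=0)
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    if name == "day":
        return midnight
    if name == "week":
        return midnight - timedelta(days=now.weekday())  # back to Monday
    if name == "month":
        return midnight.replace(day=1)
    if name == "year":
        return midnight.replace(month=1, day=1)
    raise ValueError(f"Unknown function: {name}")


def evaluate(expression, now):
    """Evaluate e.g. 'day() - 1w' or 'now() + 2w - 1d10h' relative to 'now'."""
    match = re.fullmatch(r"\s*(\w+)\(\)(.*)", expression)
    if not match:
        raise ValueError(f"Not a relative time expression: {expression}")
    result = floor_time(match.group(1), now)
    rest = match.group(2)
    if not re.fullmatch(r"(?:\s*[+-]\s*(?:\d+[smhdw])+)*\s*", rest):
        raise ValueError(f"Unsupported duration in: {expression}")
    for sign, durations in re.findall(r"([+-])\s*((?:\d+[smhdw])+)", rest):
        for amount, unit in re.findall(r"(\d+)([smhdw])", durations):
            delta = timedelta(**{UNITS[unit]: int(amount)})
            result = result + delta if sign == "+" else result - delta
    return result


execution_time = datetime(2016, 1, 23, 12, 34, 11, 844000, tzinfo=timezone.utc)
for expr in ("now() + 2d - 10h", "now() + 2w - 1d10h", "day() - 1w", "week()"):
    print(expr, "->", evaluate(expr, execution_time).isoformat())
```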

4 - Data Retention

By default Stroom will retain all the data it ingests and creates forever. It is likely that storage constraints/costs will mean that data needs to be deleted after a certain time. It is also likely that certain types of data may need to be kept for longer than other types.

Rules

Stroom allows for a set of data retention policy rules to be created to control at a fine grained level what data is deleted and what is retained.

The data retention rules are accessible by selecting Data Retention from the Tools menu. On first use the Rules tab of the Data Retention screen will show a single rule named Default Retain All Forever Rule. This is the implicit rule in Stroom that retains all data and is always in play unless another rule overrides it. This rule cannot be edited, moved or removed.

Rule Precedence

Rules have a precedence, with a lower rule number being a higher priority.

When running the data retention job, Stroom will look at each stream held on the system and the retention policy of the first rule (starting from the lowest numbered rule) that matches that stream will apply.

Once a matching rule is found, all other rules with higher rule numbers (lower priority) are ignored.

For example if rule 1 says to retain streams from feed X-EVENTS for 10 years and rule 2 says to retain streams from feeds *-EVENTS for 1 year then rule 1 would apply to streams from feed X-EVENTS and they would be kept for 10 years, but rule 2 would apply to feed Y-EVENTS and they would only be kept for 1 year.

Rules are re-numbered as new rules are added/deleted/moved.
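The precedence example above can be sketched in a few lines of Python. This only illustrates first-match-wins ordering; it is not Stroom's rule engine. The rule tuples, the function name `retention_for` and the use of shell style wildcard matching on feed names are assumptions made for the sketch.

```python
from fnmatch import fnmatchcase

# (rule number, feed name pattern, retention period); lower number = higher priority.
RULES = [
    (1, "X-EVENTS", "10 years"),
    (2, "*-EVENTS", "1 year"),
]


def retention_for(feed_name, rules=RULES):
    """Return (rule number, retention) of the first rule matching the feed name."""
    for number, feed_pattern, retention in sorted(rules):
        if fnmatchcase(feed_name, feed_pattern):
            return number, retention
    # No explicit rule matched, so the default retain-all-forever rule applies.
    return None, "Forever"


print(retention_for("X-EVENTS"))    # matched by rule 1 before rule 2 is considered
print(retention_for("Y-EVENTS"))    # falls through to rule 2
print(retention_for("OTHER-FEED"))  # only the default rule applies
```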

Creating a Rule

To create a rule do the following:

- Click the icon to add a new rule.

- Edit the expression to define the data that the rule will match on.

- Provide a name for the rule to help describe what its purpose is.

- Set the retention period for data matching this rule, i.e. Forever or a set time period.

The new rule will be added at the top of the list of rules, i.e. with the highest priority.

The

Rules can be enabled/disabled by clicking the checkbox next to the rule.

Changes to rules will not take effect until the

Rules can also be deleted (

Impact Summary

When you have a number of complex rules it can be difficult to determine what data will actually be deleted next time the Policy Based Data Retention job runs. To help with this, Stroom has the Impact Summary tab that acts as a dry run for the active rules. The impact summary provides a count of the number of streams that will be deleted broken down by rule, stream type and feed name. On large systems with lots of data or complex rules, this query may take a long time to run.

The impact summary operates on the current state of the rules on the Rules tab whether saved or un-saved. This allows you to make a change to the rules and test its impact before saving it.

5 - Data Splitter

Data Splitter was created to transform text into XML. The XML produced is basic but can be processed further with XSLT to form any desired XML output.

Data Splitter works by using regular expressions to match a region of content or tokenizers to split content. The whole match or match group can then be output or passed to other expressions to further divide the matched data.

The root <dataSplitter> element controls the way content is read and buffered from the source. It then passes this content on to one or more child expressions that attempt to match the content. The child expressions attempt to match content one at a time in the order they are specified until one matches. The matching expression then passes the content that it has matched to other elements that either emit XML or apply other expressions to the content matched by the parent.

This process of content supply, match, (supply, match)*, emit is best illustrated in a simple CSV example. Note that the elements and attributes used in all examples are explained in detail in the element reference.

5.1 - Simple CSV Example

The following CSV data will be split up into separate fields using Data Splitter.

01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,

01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt

01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,

The first thing we need to do is match each record. Each record in a CSV file is delimited by a new line character. The following configuration will split the data into records using ‘\n’ as a delimiter:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match each line using a new line character as the delimiter -->

<split delimiter="\n"/>

</dataSplitter>

In the above example the ‘split’ tokenizer matches all of the supplied content up to the end of each line ready to pass each line of content on for further treatment.

We can now add a <group> element within <split> to take content matched by the tokenizer.

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match each line using a new line character as the delimiter -->

<split delimiter="\n">

<!-- Take the matched line (using group 1 ignores the delimiters,

without this each match would include the new line character) -->

<group value="$1">

</group>

</split>

</dataSplitter>

The <group> within the <split> chooses to take the content from the <split> without including the new line ‘\n’ delimiter by using match group 1, see expression match references for details.

01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,

The content selected by the <group> from its parent match can then be passed onto sub expressions for further matching:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match each line using a new line character as the delimiter -->

<split delimiter="\n">

<!-- Take the matched line (using group 1 ignores the delimiters,

without this each match would include the new line character) -->

<group value="$1">

<!-- Match each value separated by a comma as the delimiter -->

<split delimiter=",">

</split>

</group>

</split>

</dataSplitter>

In the above example the additional <split> element within the <group> will match the content provided by the group repeatedly until it has used all of the group content.

The content matched by the inner <split> element can be passed to a <data> element to emit XML content.

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match each line using a new line character as the delimiter -->

<split delimiter="\n">

<!-- Take the matched line (using group 1 ignores the delimiters,

without this each match would include the new line character) -->

<group value="$1">

<!-- Match each value separated by a comma as the delimiter -->

<split delimiter=",">

<!-- Output the value from group 1 (as above using group 1

ignores the delimiters, without this each value would include

the comma) -->

<data value="$1" />

</split>

</group>

</split>

</dataSplitter>

In the above example each match from the inner <split> is made available to the inner <data> element that chooses to output content from match group 1, see expression match references for details.

The above configuration results in the following XML output for the whole input:

<?xml version="1.0" encoding="UTF-8"?>

<records xmlns="records:2" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="records:2 file://records-v2.0.xsd" version="3.0">

<record>

<data value="01/01/2010" />

<data value="00:00:00" />

<data value="192.168.1.100" />

<data value="SOMEHOST.SOMEWHERE.COM" />

<data value="user1" />

<data value="logon" />

</record>

<record>

<data value="01/01/2010" />

<data value="00:01:00" />

<data value="192.168.1.100" />

<data value="SOMEHOST.SOMEWHERE.COM" />

<data value="user1" />

<data value="create" />

<data value="c:\test.txt" />

</record>

<record>

<data value="01/01/2010" />

<data value="00:02:00" />

<data value="192.168.1.100" />

<data value="SOMEHOST.SOMEWHERE.COM" />

<data value="user1" />

<data value="logoff" />

</record>

</records>
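As a cross-check on the expected output, the same two-level split (lines first, then comma separated values) can be sketched in Python. This mirrors the nesting of the configuration rather than Data Splitter's implementation; the function name `split_csv` is hypothetical, and dropping the empty value after a trailing comma is an assumption made so that the result lines up with the output shown above.

```python
def split_csv(text):
    """Split text into records (one per line) and values (comma separated)."""
    records = []
    for line in text.split("\n"):      # outer <split delimiter="\n">
        if not line:
            continue
        values = line.split(",")       # inner <split delimiter=",">
        if values and values[-1] == "":
            values.pop()               # nothing follows the trailing comma
        records.append(values)         # one <record> of <data value="..."/> items
    return records


CSV_TEXT = "\n".join(
    [
        r"01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,",
        r"01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt",
        r"01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,",
    ]
)

for record in split_csv(CSV_TEXT):
    print(record)
```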

5.2 - Simple CSV example with heading

In addition to referencing content produced by a parent element it is often desirable to store content and reference it later. The following example of a CSV with a heading demonstrates how content can be stored in a variable and then referenced later on.

Input

This example will use a similar input to the one in the previous CSV example but also adds a heading line.

Date,Time,IPAddress,HostName,User,EventType,Detail

01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,

01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt

01/01/2010,00:02:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logoff,

Configuration

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match heading line (note that maxMatch="1" means that only the

first line will be matched by this splitter) -->

<split delimiter="\n" maxMatch="1">

<!-- Store each heading in a named list -->

<group>

<split delimiter=",">

<var id="heading" />

</split>

</group>

</split>

<!-- Match each record -->

<split delimiter="\n">

<!-- Take the matched line -->

<group value="$1">

<!-- Split the line up -->

<split delimiter=",">

<!-- Output the stored heading for each iteration and the value

from group 1 -->

<data name="$heading$1" value="$1" />

</split>

</group>

</split>

</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>

<records xmlns="records:2" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="records:2 file://records-v2.0.xsd" version="3.0">

<record>

<data name="Date" value="01/01/2010" />

<data name="Time" value="00:00:00" />

<data name="IPAddress" value="192.168.1.100" />

<data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />

<data name="User" value="user1" />

<data name="EventType" value="logon" />

</record>

<record>

<data name="Date" value="01/01/2010" />

<data name="Time" value="00:01:00" />

<data name="IPAddress" value="192.168.1.100" />

<data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />

<data name="User" value="user1" />

<data name="EventType" value="create" />

<data name="Detail" value="c:\test.txt" />

</record>

<record>

<data name="Date" value="01/01/2010" />

<data name="Time" value="00:02:00" />

<data name="IPAddress" value="192.168.1.100" />

<data name="HostName" value="SOMEHOST.SOMEWHERE.COM" />

<data name="User" value="user1" />

<data name="EventType" value="logoff" />

</record>

</records>
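The way `$heading$1` pairs each value with the heading stored at the same position can be sketched in Python as follows. This is an illustration of the idea only: the function name `split_with_headings` is hypothetical, and skipping empty values is an assumption made to mirror the output above, where the empty Detail field is not emitted.

```python
def split_with_headings(text):
    """Pair each value with the heading stored at the same position."""
    lines = [line for line in text.split("\n") if line]
    headings = lines[0].split(",")     # first line only, like maxMatch="1"
    records = []
    for line in lines[1:]:
        values = line.split(",")
        # The n-th value on a line is named by the n-th stored heading.
        records.append([(name, value) for name, value in zip(headings, values) if value != ""])
    return records


CSV_WITH_HEADING = "\n".join(
    [
        "Date,Time,IPAddress,HostName,User,EventType,Detail",
        r"01/01/2010,00:00:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,logon,",
        r"01/01/2010,00:01:00,192.168.1.100,SOMEHOST.SOMEWHERE.COM,user1,create,c:\test.txt",
    ]
)

for record in split_with_headings(CSV_WITH_HEADING):
    print(record)
```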

5.3 - Complex example with regex and user defined names

The following example uses a real world Apache log and demonstrates the use of regular expressions rather than simple ‘split’ tokenizers. The usage and structure of regular expressions is outside of the scope of this document, but Data Splitter uses Java’s standard regular expression library (java.util.regex), which also supports POSIX character classes and is documented in numerous places.

This example also demonstrates that the names and values that are output can be hard coded in the absence of field name information to make XSLT conversion easier later on. Also shown is that any match can be divided into further fields with additional expressions and the ability to nest data elements to provide structure if needed.

Input

192.168.1.100 - "-" [12/Jul/2012:11:57:07 +0000] "GET /doc.htm HTTP/1.1" 200 4235 "-" "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)"

192.168.1.100 - "-" [12/Jul/2012:11:57:07 +0000] "GET /default.css HTTP/1.1" 200 3494 "http://some.server:8080/doc.htm" "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)"

Configuration

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!--

Standard Apache Format

%h - host name should be ok without quotes

%l - Remote logname (from identd, if supplied). This will return a dash unless IdentityCheck is set On.

\"%u\" - user name should be quoted to deal with DNs

%t - time is added in square brackets so is contained for parsing purposes

\"%r\" - URL is quoted

%>s - Response code doesn't need to be quoted as it is a single number

%b - The size in bytes of the response sent to the client

\"%{Referer}i\" - Referrer is quoted so that’s ok

\"%{User-Agent}i\" - User agent is quoted so also ok

LogFormat "%h %l \"%u\" %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

-->

<!-- Match line -->

<split delimiter="\n">

<group value="$1">

<!-- Provide a regular expression for the whole line with match

groups for each field we want to split out -->

<regex pattern="^([^ ]+) ([^ ]+) &quot;([^&quot;]+)&quot; \[([^\]]+)] &quot;([^&quot;]+)&quot; ([^ ]+) ([^ ]+) &quot;([^&quot;]+)&quot; &quot;([^&quot;]+)&quot;">

<data name="host" value="$1" />

<data name="log" value="$2" />

<data name="user" value="$3" />

<data name="time" value="$4" />

<data name="url" value="$5">

<!-- Take the 5th regular expression group and pass it to

another expression to divide into smaller components -->

<group value="$5">

<regex pattern="^([^ ]+) ([^ ]+) ([^ /]*)/([^ ]*)">

<data name="httpMethod" value="$1" />

<data name="url" value="$2" />

<data name="protocol" value="$3" />

<data name="version" value="$4" />

</regex>

</group>

</data>

<data name="response" value="$6" />

<data name="size" value="$7" />

<data name="referrer" value="$8" />

<data name="userAgent" value="$9" />

</regex>

</group>

</split>

</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>

<records xmlns="records:2" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="records:2 file://records-v2.0.xsd" version="3.0">

<record>

<data name="host" value="192.168.1.100" />

<data name="log" value="-" />

<data name="user" value="-" />

<data name="time" value="12/Jul/2012:11:57:07 +0000" />

<data name="url" value="GET /doc.htm HTTP/1.1">

<data name="httpMethod" value="GET" />

<data name="url" value="/doc.htm" />

<data name="protocol" value="HTTP" />

<data name="version" value="1.1" />

</data>

<data name="response" value="200" />

<data name="size" value="4235" />

<data name="referrer" value="-" />

<data name="userAgent" value="Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)" />

</record>

<record>

<data name="host" value="192.168.1.100" />

<data name="log" value="-" />

<data name="user" value="-" />

<data name="time" value="12/Jul/2012:11:57:07 +0000" />

<data name="url" value="GET /default.css HTTP/1.1">

<data name="httpMethod" value="GET" />

<data name="url" value="/default.css" />

<data name="protocol" value="HTTP" />

<data name="version" value="1.1" />

</data>

<data name="response" value="200" />

<data name="size" value="3494" />

<data name="referrer" value="http://some.server:8080/doc.htm" />

<data name="userAgent" value="Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Trident/4.0; .NET CLR 1.1.4322; .NET CLR 2.0.50727; .NET4.0C; .NET4.0E; .NET CLR 3.0.4506.2152; .NET CLR 3.5.30729)" />

</record>

</records>
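The two regular expressions in this configuration can be tried outside Data Splitter to see which match group captures which field. The sketch below uses Python's `re` module, which accepts these particular patterns unchanged even though Data Splitter itself uses Java's regular expression library. The function name `parse_apache_line` is hypothetical and the user agent in the sample line is shortened for readability.

```python
import re

# Whole-line pattern from the configuration above (quotes written literally).
LINE_PATTERN = re.compile(
    r'^([^ ]+) ([^ ]+) "([^"]+)" \[([^\]]+)] "([^"]+)" ([^ ]+) ([^ ]+) "([^"]+)" "([^"]+)"'
)
# Pattern applied to match group 5 to divide the request into smaller parts.
REQUEST_PATTERN = re.compile(r"^([^ ]+) ([^ ]+) ([^ /]*)/([^ ]*)")

FIELD_NAMES = ["host", "log", "user", "time", "url", "response", "size", "referrer", "userAgent"]


def parse_apache_line(line):
    """Return the named fields for one log line, or None if the line does not match."""
    match = LINE_PATTERN.match(line)
    if match is None:
        return None
    fields = dict(zip(FIELD_NAMES, match.groups()))
    request = REQUEST_PATTERN.match(fields["url"])
    if request is not None:
        method, url, protocol, version = request.groups()
        fields["request"] = {
            "httpMethod": method,
            "url": url,
            "protocol": protocol,
            "version": version,
        }
    return fields


SAMPLE = (
    '192.168.1.100 - "-" [12/Jul/2012:11:57:07 +0000] '
    '"GET /doc.htm HTTP/1.1" 200 4235 "-" "Mozilla/4.0 (compatible; MSIE 8.0)"'
)
print(parse_apache_line(SAMPLE))
```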

5.4 - Multi Line Example

This example uses a multi-line file where records are split over many lines. There are various ways this data could be treated but this example forms a record from data created when some fictitious query starts plus the subsequent query results.

Input

09/07/2016 14:49:36 User = user1

09/07/2016 14:49:36 Query = some query

09/07/2016 16:34:40 Results:

09/07/2016 16:34:40 Line 1: result1

09/07/2016 16:34:40 Line 2: result2

09/07/2016 16:34:40 Line 3: result3

09/07/2016 16:34:40 Line 4: result4

09/07/2009 16:35:21 User = user2

09/07/2009 16:35:21 Query = some other query

09/07/2009 16:45:36 Results:

09/07/2009 16:45:36 Line 1: result1

09/07/2009 16:45:36 Line 2: result2

09/07/2009 16:45:36 Line 3: result3

09/07/2009 16:45:36 Line 4: result4

Configuration

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<!-- Match each record. We want to treat the query and results as a single event so match the two sets of data separated by a double new line -->

<regex pattern="\n*((.*\n)+?\n(.*\n)+?\n)|\n*(.*\n?)+">

<group>

<!-- Split the record into query and results -->

<regex pattern="(.*?)\n\n(.*)" dotAll="true">

<!-- Create a data element to output query data -->

<data name="query">

<group value="$1">

<!-- We only want to output the date and time from the first line. -->

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">

<data name="date" value="$1" />

<data name="time" value="$2" />

<data name="$3" value="$4" />

</regex>

<!-- Output all other values -->

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">

<data name="$3" value="$4" />

</regex>

</group>

</data>

<!-- Create a data element to output result data -->

<data name="results">

<group value="$2">

<!-- We only want to output the date and time from the first line. -->

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">

<data name="date" value="$1" />

<data name="time" value="$2" />

<data name="$3" value="$4" />

</regex>

<!-- Output all other values -->

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">

<data name="$3" value="$4" />

</regex>

</group>

</data>

</regex>

</group>

</regex>

</dataSplitter>

Output

<?xml version="1.0" encoding="UTF-8"?>

<records xmlns="records:2" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="records:2 file://records-v2.0.xsd" version="2.0">

<record>

<data name="query">

<data name="date" value="09/07/2016" />

<data name="time" value="14:49:36" />

<data name="User" value="user1" />

<data name="Query" value="some query" />

</data>

<data name="results">

<data name="date" value="09/07/2016" />

<data name="time" value="16:34:40" />

<data name="Results" />

<data name="Line 1" value="result1" />

<data name="Line 2" value="result2" />

<data name="Line 3" value="result3" />

<data name="Line 4" value="result4" />

</data>

</record>

<record>

<data name="query">

<data name="date" value="09/07/2009" />

<data name="time" value="16:35:21" />

<data name="User" value="user2" />

<data name="Query" value="some other query" />

</data>

<data name="results">

<data name="date" value="09/07/2009" />

<data name="time" value="16:45:36" />

<data name="Results" />

<data name="Line 1" value="result1" />

<data name="Line 2" value="result2" />

<data name="Line 3" value="result3" />

<data name="Line 4" value="result4" />

</data>

</record>

</records>

5.5 - Element Reference

There are various elements used in a Data Splitter configuration to control behaviour. Each of these elements can be categorised as one of the following:

5.5.1 - Content Providers

Content providers take some content from the input source or elsewhere (see fixed strings) and provide it to one or more expressions. Both the root element <dataSplitter> and <group> elements are content providers.

Root element <dataSplitter>

The root element of a Data Splitter configuration is <dataSplitter>. It supplies content from the input source to one or more expressions defined within it. The root element controls how content is buffered and how errors are handled when child expressions do not match all of the content it supplies.

Attributes

The following attributes can be added to the <dataSplitter> root element:

ignoreErrors

Data Splitter generates errors if not all of the content is matched by the regular expressions beneath the <dataSplitter> or within <group> elements. The error messages are intended to aid the user in writing good Data Splitter configurations. The intent is to indicate when the input data is not being matched fully and therefore possibly skipping some important data. Despite this, in some cases it is laborious to have to write expressions to match all content. In these cases it is preferable to add this attribute to ignore these errors. However it is often better to write expressions that capture all of the supplied content and discard unwanted characters. This attribute also affects errors generated by the use of the minMatch attribute on <regex> which is described later on.

Take the following example input:

Name1,Name2,Name3

value1,value2,value3 # a useless comment

value1,value2,value3 # a useless comment

This could be matched with the following configuration:

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<regex id="heading" pattern=".+" maxMatch="1">

…

</regex>

<regex id="body" pattern="\n[^#]+">

…

</regex>

</dataSplitter>

The above configuration would only match up to a comment for each record line, e.g.

Name1,Name2,Name3

value1,value2,value3 # a useless comment

value1,value2,value3 # a useless comment

This may well be the desired functionality but if there was useful content within the comment it would be lost. Because of this Data Splitter warns you when expressions are failing to match all of the content presented so that you can make sure that you aren’t missing anything important. In the above example it is obvious that this is the required behaviour but in more complex cases you might be otherwise unaware that your expressions were losing data.

To maintain this assurance that you are handling all content it is usually best to write expressions to explicitly match all content even though you may do nothing with some matches, e.g.

<?xml version="1.0" encoding="UTF-8"?>

<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.xsd" version="3.0">

<regex id="heading" pattern=".+" maxMatch="1">

…

</regex>

<regex id="body" pattern="\n([^#]+)#.+">

…

</regex>

</dataSplitter>

The above example would match all of the content and would therefore not generate warnings. Sub-expressions of ‘body’ could use match group 1 and ignore the comment.

However as previously stated it might often be difficult to write expressions that will just match content that is to be discarded. In these cases ignoreErrors can be used to suppress errors caused by unmatched content.
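The difference between the two ‘body’ patterns can be seen by listing the content that each one leaves unmatched. The following Python sketch illustrates the idea only; it is not how Data Splitter detects unmatched content internally, and the function name `unmatched` is hypothetical.

```python
import re


def unmatched(pattern, text):
    """Return the pieces of text that are not covered by any match of pattern."""
    leftovers = []
    position = 0
    for match in re.finditer(pattern, text):
        if match.start() > position:
            leftovers.append(text[position:match.start()])
        position = match.end()
    if position < len(text):
        leftovers.append(text[position:])
    return leftovers


# The content that remains once the heading line has been matched.
BODY = (
    "\nvalue1,value2,value3 # a useless comment"
    "\nvalue1,value2,value3 # a useless comment"
)

# First configuration: the comments are never matched.
print(unmatched(r"\n[^#]+", BODY))
# Second configuration: all content is matched, so nothing is left over.
print(unmatched(r"\n([^#]+)#.+", BODY))
```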

bufferSize (Advanced)

This is an optional attribute used to tune the size of the character buffer used by Data Splitter. The default size is 20000 characters and should be fine for most translations. The minimum value that this can be set to is 20000 characters and the maximum is 1000000000. The only reason to specify this attribute is when individual records are bigger than 10000 characters which is rarely the case.

Group element <group>

Groups behave in a similar way to the root element in that they provide content for one or more inner expressions to deal with, e.g.

<group value="$1">

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)" maxMatch="1">

...

<regex pattern="([^\t]*)\t([^\t]*)[\t]*([^=:]*)[=:]*(.*)">

...

Attributes

As the <group> element is a content provider it also includes the same ‘ignoreErrors’ attribute which behaves in the same way. The complete list of attributes for the <group> element is as follows:

id

When Data Splitter reports errors it outputs an XPath to describe the part of the configuration that generated the error, e.g.

DSParser [2:1] ERROR: Expressions failed to match all of the content provided by group: regex[0]/group[0]/regex[3]/group[1] : <group>

It is often a little difficult to identify the configuration element that generated the error by looking at the path and the element description, particularly when multiple elements are the same, e.g. many <group> elements without attributes. To make identification easier you can add an ‘id’ attribute to any element in the configuration resulting in error descriptions as follows:

DSParser [2:1] ERROR: Expressions failed to match all of the content provided by group: regex[0]/group[0]/regex[3]/group[1] : <group id="myGroupId">
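A short sketch of how ids might be assigned is shown below. The id values and patterns are hypothetical and are chosen only so that each element in a nested configuration can be told apart in an error description.

```xml
<!-- Each element carries its own id so that an error description names it directly -->
<regex id="line" pattern="([^\n]+)\n?">
  <group id="lineContent" value="$1">
    <regex id="nameValue" pattern="([^=]+)=([^ ]+) ?">
      <data name="$1" value="$2" />
    </regex>
  </group>
</regex>
```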

value

This attribute determines what content to present to child expressions. By default the entire content matched by a group’s parent expression is passed on by the group to child expressions. If required, content from a specific match group in the parent expression can be passed to child expressions using the value attribute, e.g. value="$1". In addition to this, content can be composed in the same way as it is for data names and values. See match references for a full description of match references.

ignoreErrors

This behaves in the same way as for the root element.

matchOrder

This is an optional attribute used to control how content is consumed by expression matches. Content can be consumed in sequence or in any order using matchOrder="sequence" or matchOrder="any". If the attribute is not specified, Data Splitter will default to matching in sequence.

When matching in sequence, each match consumes some content and the content position is moved beyond the match, ready for the subsequent match. However, in some cases the order in which fields appear in the content is not predictable, e.g. we may sometimes be presented with:

Value1=1 Value2=2

… or sometimes with:

Value2=2 Value1=1

Using a sequential match order, the following example would find both values in Value1=1 Value2=2:

<group>

<regex pattern="Value1=([^ ]*)">

...

<regex pattern="Value2=([^ ]*)">

...

… but this example would skip over Value2 and only find the value of Value1 if the input was Value2=2 Value1=1.

To deal with content that contains these name/value pairs in either order, we need to change the match order to any.

When matching in any order, each match removes the matched section from the content rather than moving the position past the match so that all remaining content can be matched by subsequent expressions. In the following example the first expression would match and remove Value1=1 from the supplied content and the second expression would be presented with Value2=2 which it could also match.

<group matchOrder="any">

<regex pattern="Value1=([^ ]*)">

...

<regex pattern="Value2=([^ ]*)">

...

If the attribute is omitted, the match order will be sequential. This is the default because tokens are most often in sequence, and consuming content in this way is more efficient: the parser does not need to copy content to chop out matched sections, as is required when matching in any order. It is only necessary to use this feature when fields that are identifiable with a specific match can occur in any order.
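The abbreviated matchOrder="any" example above could be completed along the following lines. This is a sketch rather than a definitive configuration: the data names are illustrative, and the optional trailing space in each pattern is an addition made here so that the space separating the two pairs is also consumed and not left behind as unmatched content.

```xml
<group matchOrder="any">
  <!-- Matches and removes Value1=... wherever it occurs in the content -->
  <regex pattern="Value1=([^ ]*) ?">
    <data name="Value1" value="$1" />
  </regex>
  <!-- Presented with whatever remains, so Value2=... is found in either input order -->
  <regex pattern="Value2=([^ ]*) ?">
    <data name="Value2" value="$1" />
  </regex>
</group>
```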

reverse

Occasionally it is desirable to reverse the content presented by a group to child expressions. This is because it is sometimes easier to form a pattern by matching content in reverse.

Take the following example content of name/value pairs delimited by =, with no spaces within names, multiple spaces within values and only a single space between subsequent pairs:

ipAddress=123.123.123.123 zones=Zone 1, Zone 2, Zone 3 location=loc1 A user=An end user serverName=bigserver

We could write a pattern that matches each name value pair by matching up to the start of the next name, e.g.

<regex pattern="([^=]+)=(.+?)( [^=]+=)">

This would match the following:

ipAddress=123.123.123.123 zones=

Here we are capturing the name and value for each pair in separate groups but the pattern has to also match the name from the next name value pair to find the end of the value. By default Data Splitter will move the content buffer to the end of the match ready for subsequent matches so the next name will not be available for matching.

In addition to matching too much content, the above example also uses a reluctant quantifier, .+?. Reluctant quantifiers almost always impact performance, so they are to be avoided if at all possible.

A better way to match the example content is to match the input in reverse, reading characters from right to left.

The following example demonstrates this:

<group reverse="true">

<regex pattern="([^=]+)=([^ ]+)">

<data name="$2" value="$1" />

</regex>

</group>

Using the reverse attribute on the parent group causes content to be supplied to all child expressions in reverse order. In the above example this allows the pattern to match values followed by names which enables us to cope with the fact that values have multiple spaces but names have no spaces.

Content is only presented to child regular expressions in reverse. When referencing values from match groups the content is returned in the correct order, e.g. the above example would return:

<data name="ipAddress" value="123.123.123.123" />

<data name="zones" value="Zone 1, Zone 2, Zone 3" />

<data name="location" value="loc1" />

<data name="user" value="An end user" />

<data name="serverName" value="bigserver" />

The reverse feature isn’t needed very often but there are a few cases where it really helps produce the desired output without the complexity and performance overhead of a reluctant match.

An alternative to using the reverse attribute is to use the original reluctant expression example but tell Data Splitter to make the subsequent name available for the next match by not advancing the content beyond the end of the previous value. This is done by using the advance attribute on the <regex>. However, the reverse attribute represents a better way to solve this particular problem and allows a simpler and more efficient regular expression to be used.

5.5.2 - Expressions

Expressions match some data supplied by a parent content provider. The content matched by an expression depends on the type of expression and how it is configured.

The <split>, <regex> and <all> elements are all expressions and match content as described below.

The <split> element

The <split> element directs Data Splitter to break up content using a specified character sequence as a delimiter. In addition to this it is possible to specify characters that are used to escape the delimiter as well as characters that contain or “quote” a value that may include the delimiter sequence but allow it to be ignored.

Attributes

The <split> element has the following attributes:

id

Optional attribute used to debug the location of expressions causing errors, see id.

delimiter

A required attribute that specifies the character string used as a delimiter to split the supplied content. A delimiter is ignored if it is preceded by an escape character or appears within a container, where these have been specified. Several of the previous examples use this attribute.
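As a reminder of the typical shape, the following sketch splits content into lines and then into comma separated values. It assumes, as in the earlier CSV examples, that match group 1 of a split holds the value without its delimiter.

```xml
<!-- Split the content into lines using a new line as the delimiter -->
<split delimiter="\n">
  <!-- Pass the line to child expressions (assumed: $1 excludes the delimiter) -->
  <group value="$1">
    <!-- Split each line into values using a comma as the delimiter -->
    <split delimiter=",">
      <data value="$1" />
    </split>
  </group>
</split>
```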

escape