
Indexing and Search

- 1: Elasticsearch

- 2: Apache Solr

- 3: Stroom Search API

1 - Elasticsearch

2 - Apache Solr

Assumptions

- You are familiar with Lucene indexing within Stroom

- You have some data to index

Points to note

- A Solr core is the home for exactly one Stroom index.

- Cores must initially be created in Solr.

- It is good practice to name your Solr core the same as your Stroom Index.

Method

- Start a Docker container for a single Solr node.

- Check your Solr node by pointing your browser at http://yourSolrHost:8983

- Create a core in Solr using the CLI.

-

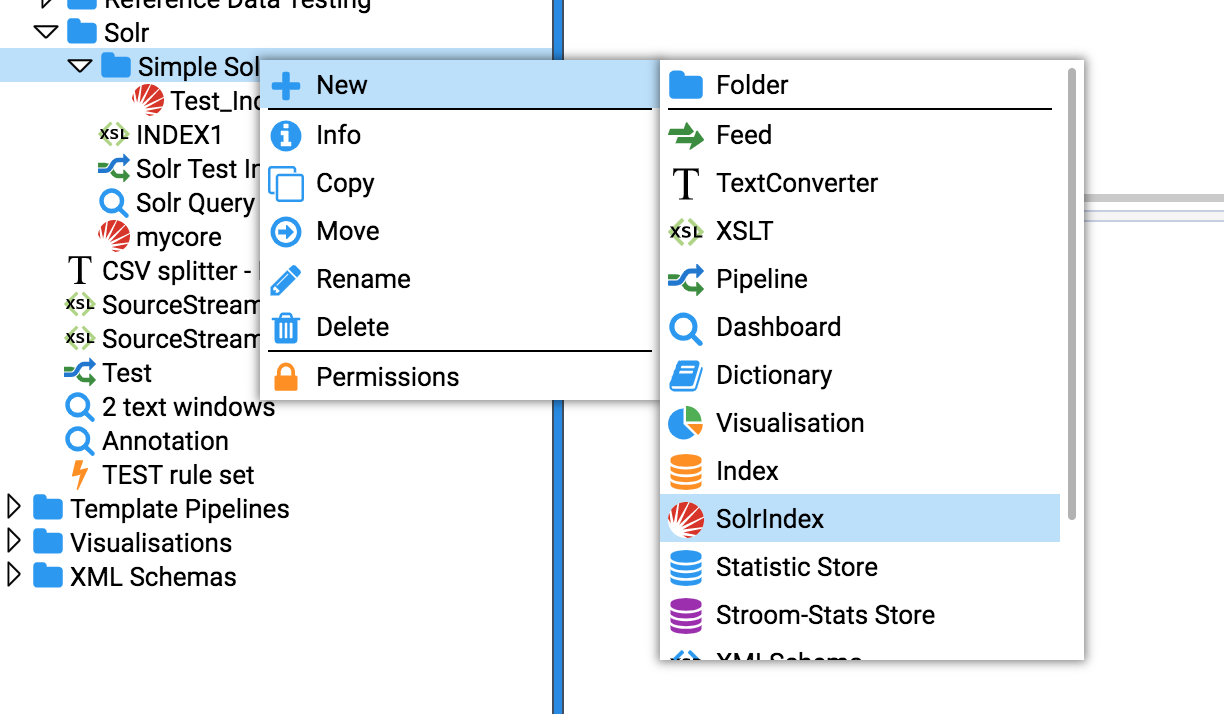

Create a SolrIndex in Stroom

- Update the settings for your new Solr Index in Stroom, then press “Test Connection”. If the test succeeds, press Save. Note that the “Solr URL” field refers to the newly created Solr core.

- Add some Index fields, e.g. EventTime and UserId.

-

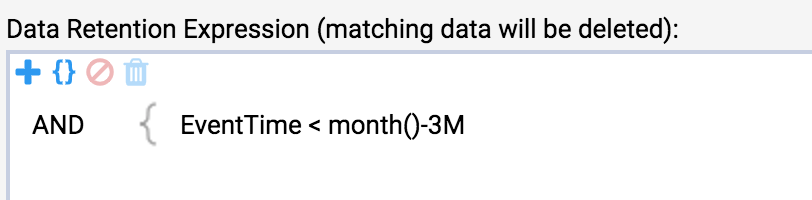

Retention is different in Solr, you must specify an expression that matches data that can be deleted.

- Your Solr Index can now be used in the same way as a Stroom Lucene Index. However, your indexing pipeline must use a SolrIndexingFilter instead of an IndexingFilter.
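The node and core checks above can also be scripted before you configure Stroom. The sketch below is a minimal, standard-library Python example and is not part of Stroom. It assumes a standalone (non-SolrCloud) Solr node and uses Solr's CoreAdmin `STATUS` action at `/solr/admin/cores`, assuming that Solr reports an unknown core as an empty object under `status`. The host `http://yourSolrHost:8983` is the placeholder used above, and the core name `test_index` and the helper names are hypothetical; following the advice above, use the same name as your Stroom index.

```python
import json
import urllib.parse
import urllib.request


def core_status_url(solr_base: str, core: str) -> str:
    """Build a CoreAdmin STATUS URL for one core on a standalone Solr node."""
    params = urllib.parse.urlencode({"action": "STATUS", "core": core, "wt": "json"})
    return f"{solr_base.rstrip('/')}/solr/admin/cores?{params}"


def core_exists(status: dict, core: str) -> bool:
    """Assumption: Solr returns an empty object under "status" for an unknown core."""
    return bool(status.get("status", {}).get(core))


def check_core(solr_base: str, core: str, timeout: float = 5.0) -> bool:
    """Query a live Solr node; raises urllib.error.URLError if it is unreachable."""
    with urllib.request.urlopen(core_status_url(solr_base, core), timeout=timeout) as resp:
        return core_exists(json.load(resp), core)
```

For example, `check_core("http://yourSolrHost:8983", "test_index")` should return `True` once the core has been created, while an unreachable node raises an exception rather than returning `False`, so a connectivity problem is not mistaken for a missing core.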

3 - Stroom Search API

- Create an API Key for yourself. This allows the API to authenticate as you and run the query with your privileges.

- Create a Dashboard that extracts the data you are interested in. It should contain a Query and a Table.

- Download the JSON for your Query. Press the download icon in the Query Pane to generate a file containing the JSON, and save it to a file named query.json.

- Use curl to send the query to Stroom.

- The query response should be in a file named response.out.

- Optional step: reformat the response to CSV using jq.
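The curl step can also be sketched in Python using only the standard library. This is a minimal sketch under stated assumptions, not Stroom's own client: the search endpoint URL is left as a parameter because it depends on your Stroom deployment and version, and the API key is assumed to be sent as a Bearer token in the `Authorization` header. `build_search_request` and `send_query` are hypothetical helper names.

```python
import urllib.request
from pathlib import Path


def build_search_request(search_url: str, api_key: str, body: bytes) -> urllib.request.Request:
    """Build a POST request carrying the query JSON, authenticated with the API key."""
    return urllib.request.Request(
        search_url,
        data=body,
        method="POST",
        headers={
            # Assumption: Stroom accepts the API key as a Bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )


def send_query(search_url: str, api_key: str,
               query_file: str = "query.json",
               out_file: str = "response.out",
               timeout: float = 60.0) -> None:
    """Send the downloaded dashboard query and save the raw response body."""
    body = Path(query_file).read_bytes()
    request = build_search_request(search_url, api_key, body)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        Path(out_file).write_bytes(resp.read())
```

For example, `send_query(search_url, os.environ["STROOM_API_KEY"])` would read query.json and write the raw response to response.out, where `STROOM_API_KEY` is a hypothetical environment variable holding your key. Reading the key from the environment keeps it out of shell history and out of the script itself.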
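If jq is not available, the CSV conversion can be sketched in Python instead. This assumes the response JSON contains a `results` array whose table result carries `rows`, each with a `values` array; inspect your own response.out to confirm that shape before relying on it. The helper names are hypothetical, and because the output is written with Python's `csv` module, quoting follows its minimal-quoting rules rather than exactly matching jq's `@csv`.

```python
import csv
import io
import json


def response_to_csv(response: dict, result_index: int = 0) -> str:
    """Render the row values of one table result as CSV text."""
    # Assumed shape: {"results": [{"rows": [{"values": [...]}, ...]}, ...]}
    rows = response["results"][result_index].get("rows", [])
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in rows:
        writer.writerow(row["values"])
    return buffer.getvalue()


def convert_file(in_file: str = "response.out", out_file: str = "response.csv") -> None:
    """Read the saved search response and write its first table result as CSV."""
    with open(in_file, encoding="utf-8") as f:
        response = json.load(f)
    with open(out_file, "w", encoding="utf-8", newline="") as f:
        f.write(response_to_csv(response))
```

If your Dashboard has more than one Table, pass a different `result_index` to select which result to export.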