1 - Use a Reference Feed

Introduction

Reference feeds are temporal stores of reference data that a translation can look up to enhance an Event with additional data.

For example, rather than storing a person’s full name and phone number in every event, we can just store their user id and, based on this value, look up the associated user data and decorate the event.
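
The decoration idea can be sketched in a few lines of Python. This only illustrates the concept and is not how Stroom stores or applies reference data; the user directory, the `UserId`, `FullName` and `Phone` field names and the `decorate` helper are all hypothetical.

```python
from typing import Any, Dict

# Hypothetical reference data keyed by user id. In Stroom this would be
# held in a reference feed rather than in an in-memory dictionary.
USERS: Dict[str, Dict[str, str]] = {
    "jdoe": {"FullName": "John Doe", "Phone": "555-0100"},
}


def decorate(event: Dict[str, Any], users: Dict[str, Dict[str, str]]) -> Dict[str, Any]:
    """Return a copy of the event enriched with the user's details, if known."""
    decorated = dict(event)  # never mutate the caller's event
    details = users.get(event.get("UserId", ""))
    if details is not None:
        decorated.update(details)
    return decorated


if __name__ == "__main__":
    print(decorate({"UserId": "jdoe", "Action": "Login"}, USERS))
```

An unknown user id simply leaves the event as it was, which mirrors the idea that decoration is optional extra information.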

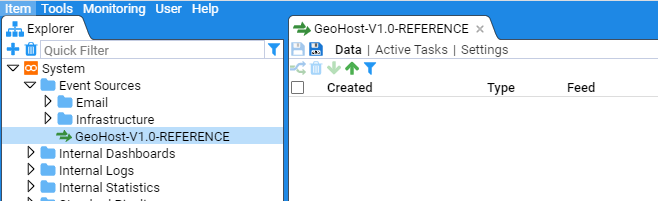

In the description below, we will make use of the GeoHost-V1.0-REFERENCE reference feed defined in a separate HOWTO document.

Using a Reference Feed

To use a Reference Feed, one uses the Stroom XSLT function stroom:lookup(). This function is found within the XML namespace xmlns:stroom="stroom".

The lookup function has two mandatory arguments and three optional ones:

- lookup(String map, String key) Look up a reference data map using the period start time

- lookup(String map, String key, String time) Look up a reference data map using a specified time, e.g. the event time

- lookup(String map, String key, String time, Boolean ignoreWarnings) Look up a reference data map using a specified time, e.g. the event time, and ignore any warnings generated by a failed lookup

- lookup(String map, String key, String time, Boolean ignoreWarnings, Boolean trace) Look up a reference data map using a specified time, e.g. the event time, ignore any warnings generated by a failed lookup and get trace information for the path taken to resolve the lookup.
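
As a rough mental model of how the optional arguments change behaviour, the following Python sketch mimics the call forms listed above. It is not Stroom's implementation: the `LookupModel` class, its single-batch-per-map storage and its warning and trace messages are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional, Tuple


@dataclass
class LookupModel:
    """Toy model of stroom:lookup() argument handling (not Stroom's code).

    Each map holds a single (effective time, {key: value}) batch to keep the
    sketch short.
    """

    period_start: datetime
    maps: Dict[str, Tuple[datetime, Dict[str, str]]] = field(default_factory=dict)
    warnings: List[str] = field(default_factory=list)
    trace_log: List[str] = field(default_factory=list)

    def lookup(
        self,
        map_name: str,
        key: str,
        time: Optional[datetime] = None,
        ignore_warnings: bool = False,
        trace: bool = False,
    ) -> Optional[str]:
        # Two-argument form: fall back to the period start time.
        when = time if time is not None else self.period_start
        if trace:
            self.trace_log.append(f"lookup {map_name}[{key}] at {when.isoformat()}")
        batch = self.maps.get(map_name)
        if batch is not None:
            effective, entries = batch
            # Reference data only applies from its effective time onwards.
            if effective <= when and key in entries:
                if trace:
                    self.trace_log.append(f"found in batch effective {effective.isoformat()}")
                return entries[key]
        # A failed lookup records a warning unless told to ignore it.
        if not ignore_warnings:
            self.warnings.append(f"WARN: no value for {map_name}[{key}]")
        return None


if __name__ == "__main__":
    utc = timezone.utc
    model = LookupModel(period_start=datetime(2020, 1, 1, tzinfo=utc))
    model.maps["IP_TO_FQDN"] = (
        datetime(2019, 12, 1, tzinfo=utc),
        {"192.168.2.245": "stroomnode00.strmdev00.org"},
    )
    print(model.lookup("IP_TO_FQDN", "192.168.2.245"))                   # two-argument form
    print(model.lookup("IP_TO_FQDN", "10.0.0.1", ignore_warnings=True))  # quiet miss
    print(model.lookup("IP_TO_FQDN", "10.0.0.1"))                        # miss with a warning
    print(model.warnings)
```

With only two arguments the model falls back to the period start time, which is why passing the event time is usually preferable in practice.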

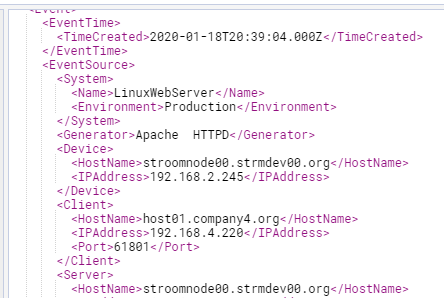

Suppose we have the following Event fragment:

```xml
<Event>
  <EventTime>
    <TimeCreated>2020-01-18T20:39:04.000Z</TimeCreated>
  </EventTime>
  <EventSource>
    <System>
      <Name>LinuxWebServer</Name>
      <Environment>Production</Environment>
    </System>
    <Generator>Apache HTTPD</Generator>
    <Device>
      <HostName>stroomnode00.strmdev00.org</HostName>
      <IPAddress>192.168.2.245</IPAddress>
    </Device>
    <Client>
      <IPAddress>192.168.4.220</IPAddress>
      <Port>61801</Port>
    </Client>
    <Server>
      <HostName>stroomnode00.strmdev00.org</HostName>
      <Port>443</Port>
    </Server>
    ...
  </EventSource>
```

then the following XSLT would look up the IP_TO_FQDN map of our GeoHost-V1.0-REFERENCE reference feed to find the FQDN of our client:

```xml
<xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value)" />
```

And the XSLT to find the IP Address for our Server would be

```xml
<xsl:variable name="sipaddr" select="stroom:lookup('FQDN_TO_IP', data[@name = 'vserver']/@value)" />
```

In practice, one would also pass the event time and set ignoreWarnings to true(), i.e.

```xml
<xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value, $formattedDate, true())" />
...
<xsl:variable name="sipaddr" select="stroom:lookup('FQDN_TO_IP', data[@name = 'vserver']/@value, $formattedDate, true())" />
```
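
The `$formattedDate` variable holds the event time; the completed translation later in this document derives it with `stroom:format-date(data[@name = 'time']/@value, 'dd/MMM/yyyy:HH:mm:ss XX')`. Assuming the raw Apache `time` field looks like `18/Jan/2020:20:39:04 +0000` (an assumed sample value), a roughly equivalent conversion in Python is sketched below; `to_stroom_time` is a hypothetical helper, and the target format matches the `TimeCreated` value in the Event fragment above.

```python
from datetime import datetime, timezone

# Assumed Python equivalent of the 'dd/MMM/yyyy:HH:mm:ss XX' pattern.
# %b expects English month abbreviations (the default C locale).
APACHE_TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"


def to_stroom_time(apache_time: str) -> str:
    """Convert an Apache log timestamp to a UTC ISO 8601 string with milliseconds."""
    parsed = datetime.strptime(apache_time, APACHE_TIME_FORMAT)
    as_utc = parsed.astimezone(timezone.utc)
    return as_utc.isoformat(timespec="milliseconds").replace("+00:00", "Z")


if __name__ == "__main__":
    print(to_stroom_time("18/Jan/2020:20:39:04 +0000"))  # 2020-01-18T20:39:04.000Z
```

Normalising to UTC matters because the reference data's effective times are also recorded in Zulu time.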

Modifying an Event Feed to use a Reference Feed

We will now modify an Event feed so that it looks up our GeoHost-V1.0-REFERENCE reference maps to add additional information to the event.

The feed for this exercise is the Apache-SSL-BlackBox-V2.0-EVENTS event feed, which processes Apache HTTPD SSL logs that use a variation of the BlackBox log format.

We will step through a Raw Events stream and modify the translation directly, so that we can see the effect of each change as we make it.

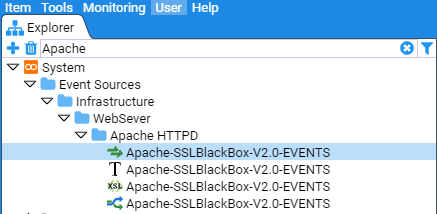

Using the Explorer pane’s Quick Filter entry box, we will find the Apache feed.

First, select the Quick Filter text entry box and type Apache (the Quick Filter is case-insensitive).

The Explorer pane’s system group structure then reduces to just the Event Sources group.

The Explorer pane will display any resources that match our Apache string.

Double-clicking on the Apache-SSL-BlackBox-V2.0-EVENTS feed entry opens the feed’s tab in the main window.

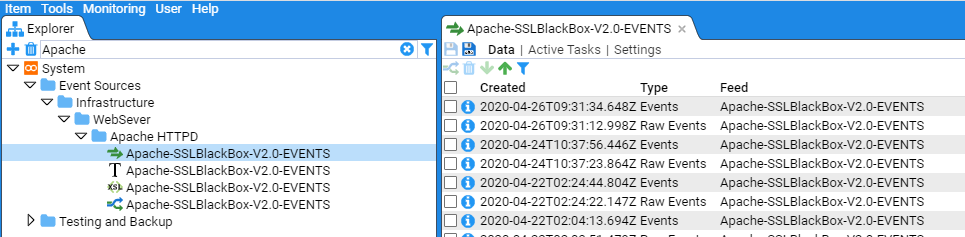

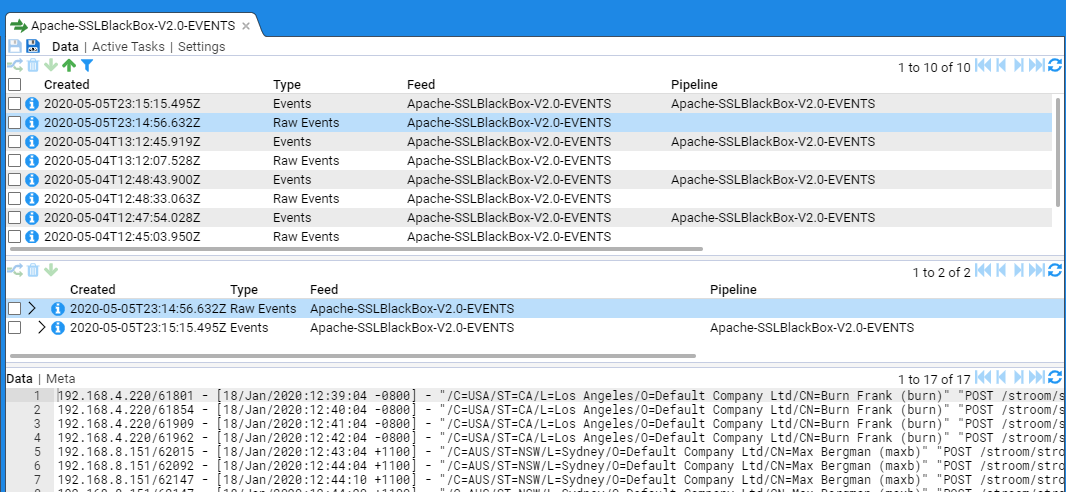

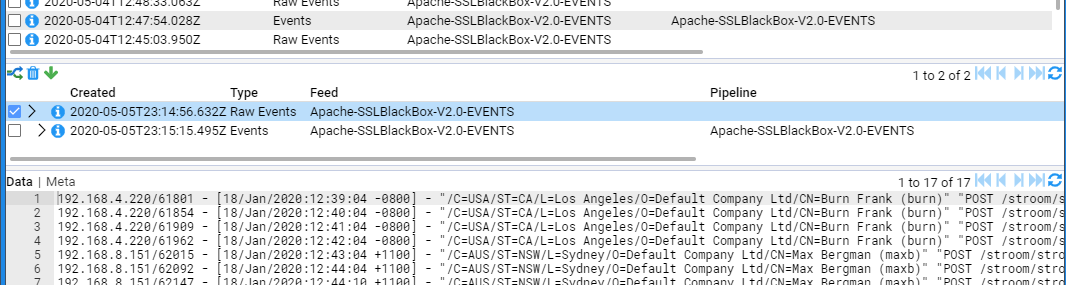

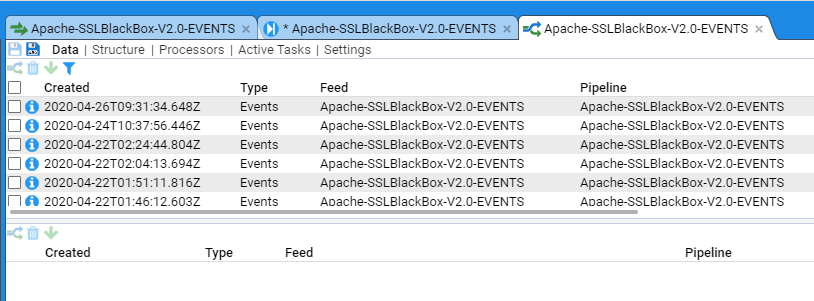

We click on the tab’s Data sub-item and then select the most recent Raw Events stream.

Now, select the check box on the Raw Events stream in the Specific Stream (middle) pane.

Note that, when we check the box, the Process, Delete and Download icons become enabled.

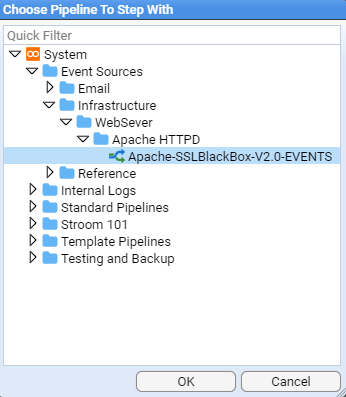

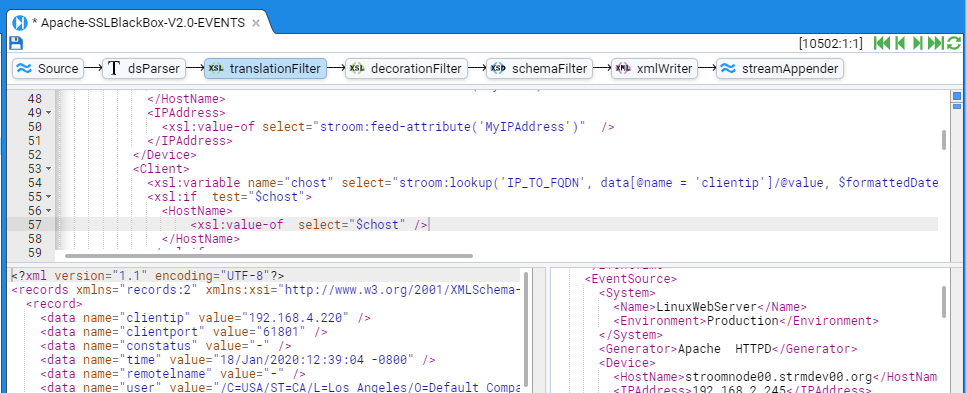

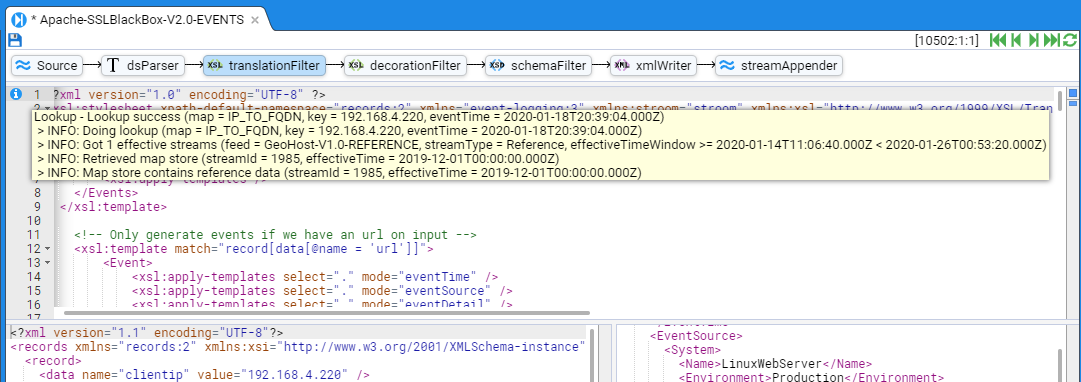

We enter Stepping Mode by pressing the stepping button found at the bottom right corner of the Data/Meta-data pane. You will then be asked to choose a pipeline to step with, with a pipeline already pre-selected.

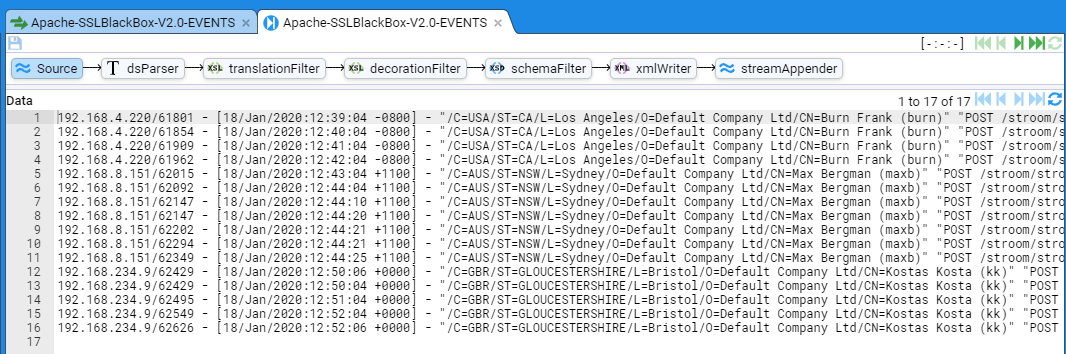

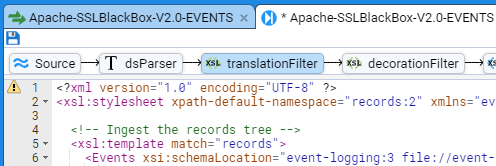

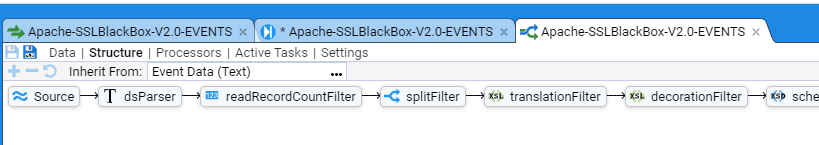

This automatic pre-selection is a simple pattern-matching action by Stroom. Press OK to start stepping, which displays the pipeline stepping tab.

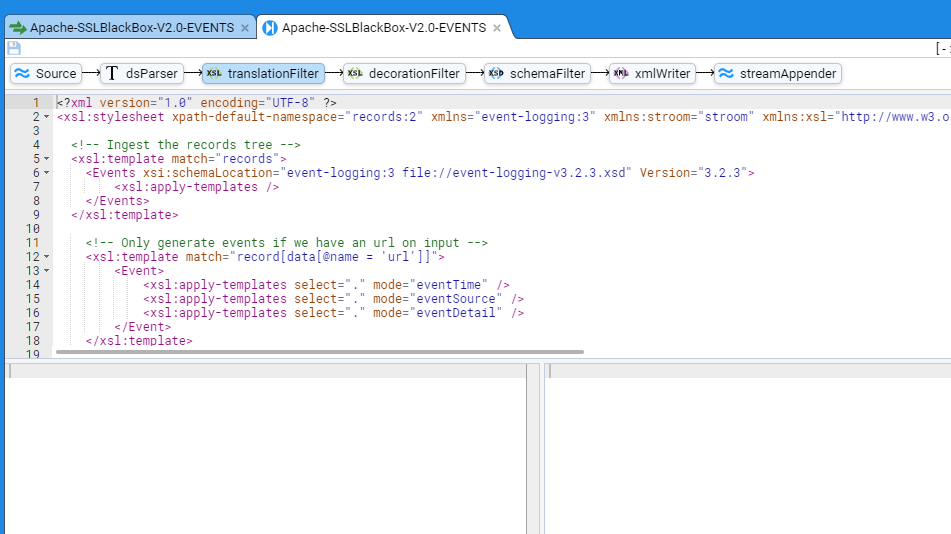

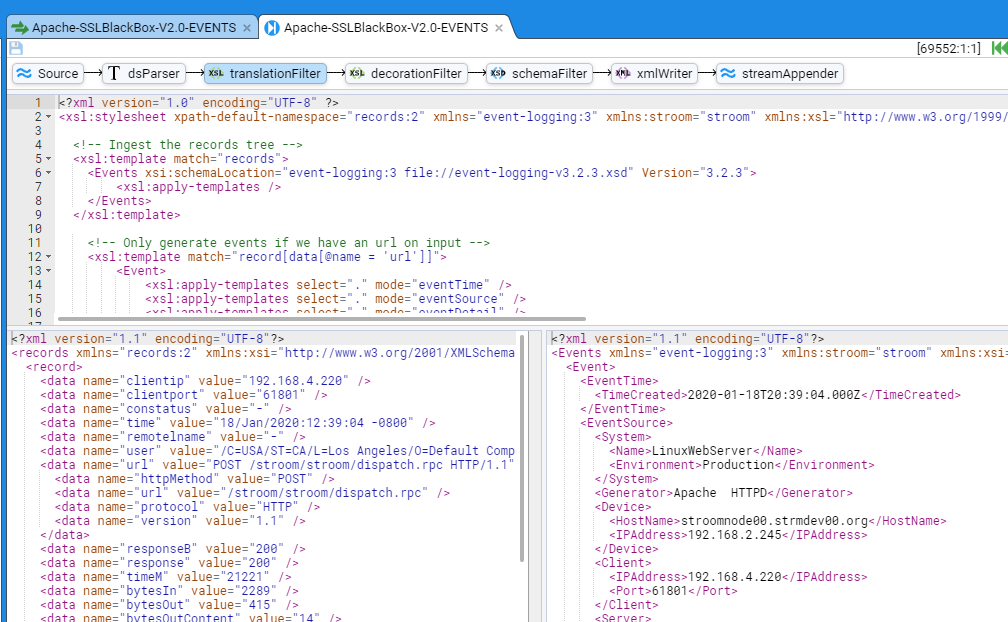

Select the translationFilter element to reveal the translation we plan to modify.

To bring up the first event from the stream, press the Step Forward button

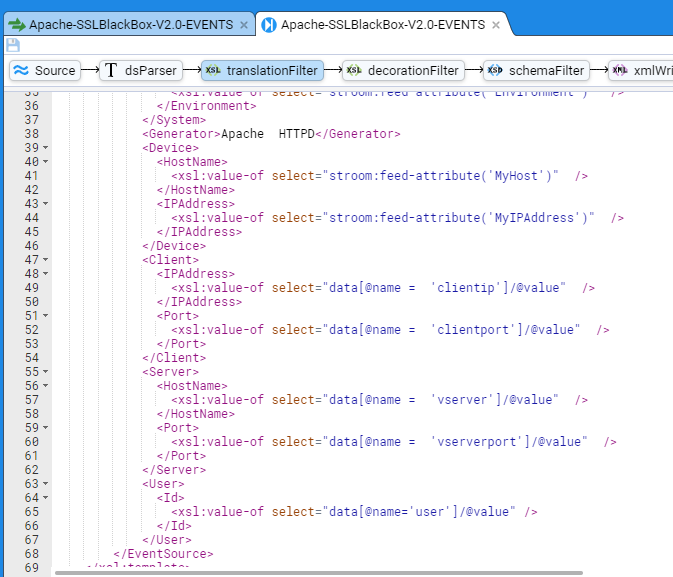

We scroll the translation pane to show the XSLT segment that deals with the <Client> element

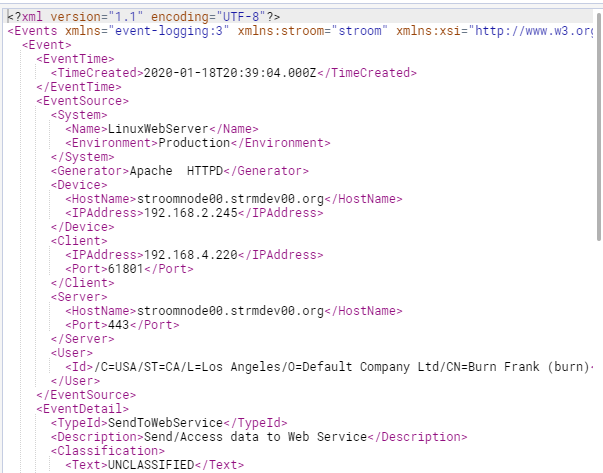

and also scroll the translation output pane to display the resulting <Client> element.

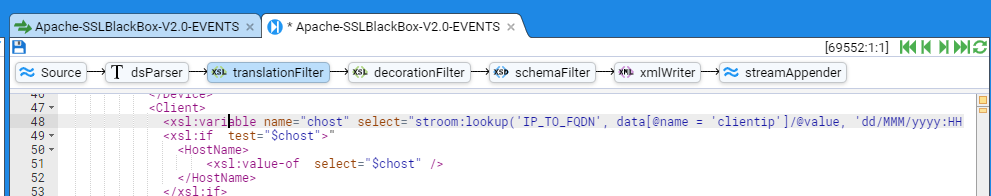

We modify the Client XSLT segment, changing

```xml
<Client>
  <IPAddress>
    <xsl:value-of select="data[@name = 'clientip']/@value" />
  </IPAddress>
  <Port>
    <xsl:value-of select="data[@name = 'clientport']/@value" />
  </Port>
</Client>
```

to

```xml
<Client>
  <xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value)" />
  <xsl:if test="$chost">
    <HostName>
      <xsl:value-of select="$chost" />
    </HostName>
  </xsl:if>
  <IPAddress>
    <xsl:value-of select="data[@name = 'clientip']/@value" />
  </IPAddress>
  <xsl:if test="data[@name = 'clientport']/@value !='-'">
    <Port>
      <xsl:value-of select="data[@name = 'clientport']/@value" />
    </Port>
  </xsl:if>
</Client>
```

and then we press the Refresh Current Step icon

BUT NOTHING CHANGES !!!

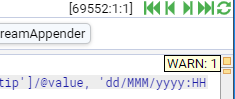

Not quite: you will notice some yellow boxes at the top right of the translation pane.

If you click on the top square box, you will see the WARN: 1 selection window.

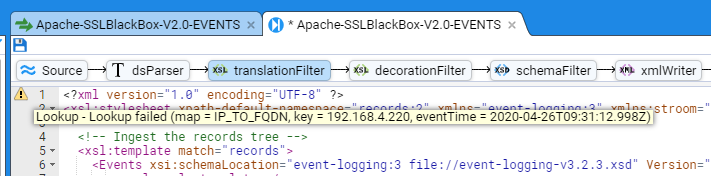

If you click on the yellow rectangular box below the yellow square box, the translation pane automatically scrolls back to the top of the translation and shows the warning.

Clicking on the

The problem is that the pipeline cannot find the reference feed.

To allow a pipeline to find reference feeds, we need to modify the translation parameters within the pipeline.

The pipeline for this Event feed is called APACHE-SSLBlack-Box-V2.0-EVENTS.

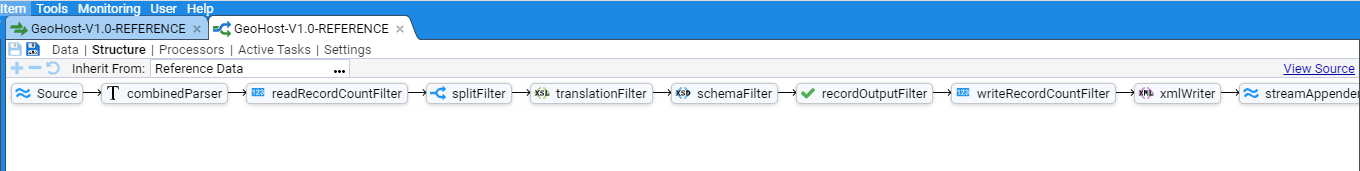

Open this pipeline by double clicking on its entry in the Explorer window

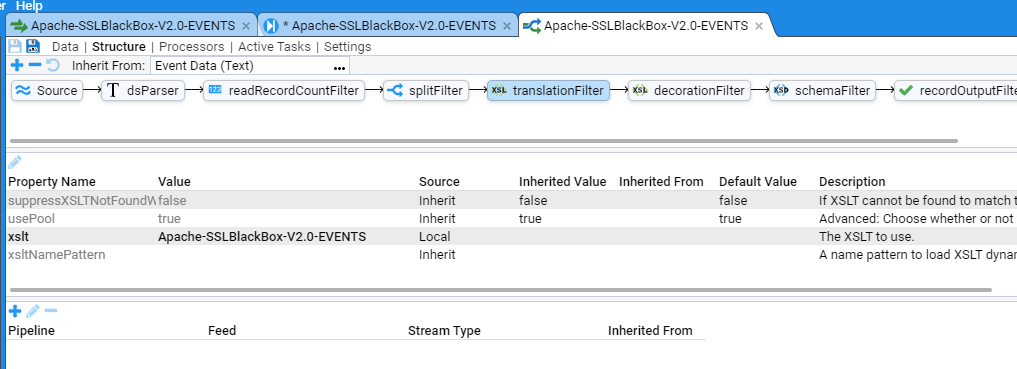

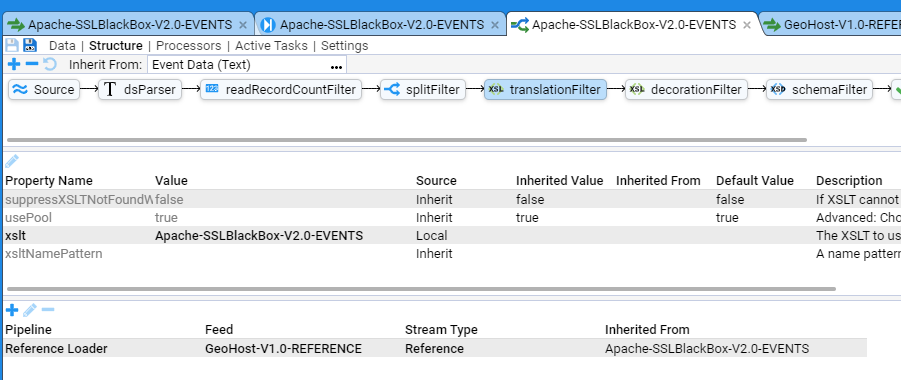

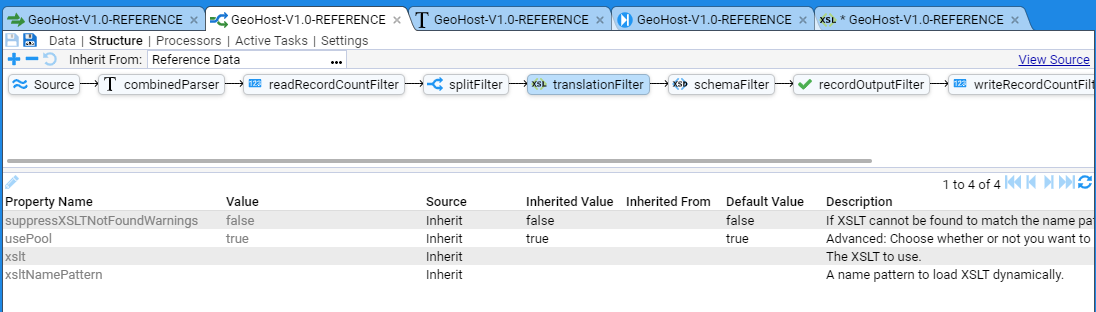

then switch to the Structure sub-item

and then select the translationFilter element to reveal

The top pane shows the pipeline; in this case, the selected translation filter uses the APACHE-BlackBoxV2.0-EVENTS translation.

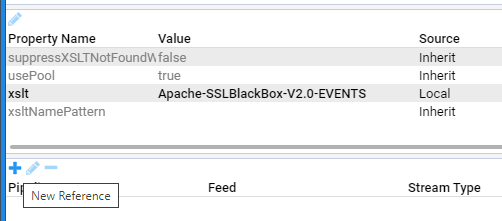

The bottom pane is the one we are interested in.

In the case of translation filters, this pane allows one to associate reference streams with the translation filter.

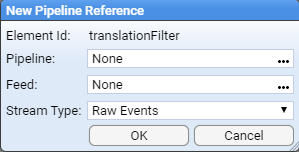

So, to associate our GeoHost-V1.0-REFERENCE reference feed with this translation filter, click on the

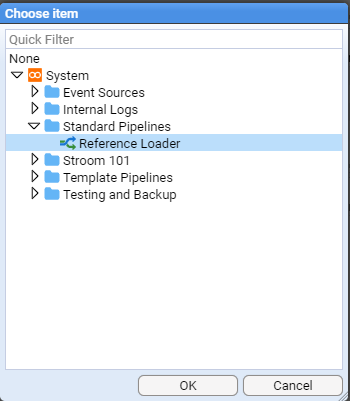

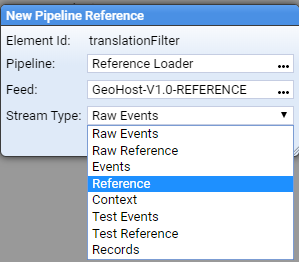

For Pipeline:, use the menu selector to choose the Reference Loader pipeline, then press OK.

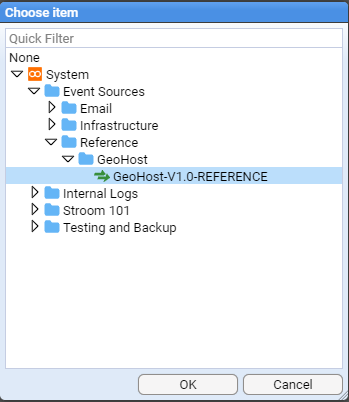

For Feed:, navigate to the reference feed we want, the GeoHost-V1.0-REFERENCE feed, and press OK.

Finally, for Stream Type:, choose Reference from the drop-down menu,

then press OK to save the new reference. We now see

Save these pipeline changes by pressing the Save icon.

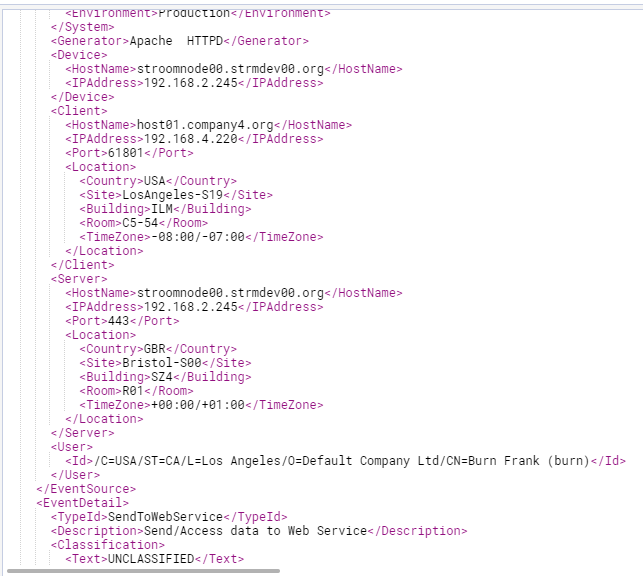

Pressing the Refresh Current Step icon now shows a <Client/HostName> element in the translation output.

To complete the translation, we will add a reference lookup for the <Server/IPAddress> element, and we will also add <Location> elements to both the <Client> and <Server> elements.

The completed code segment looks like this:

```xml
...
<!-- Set some variables to enable lookup functionality -->
<xsl:variable name="formattedDate" select="stroom:format-date(data[@name = 'time']/@value, 'dd/MMM/yyyy:HH:mm:ss XX')" />
<!-- For Version 2.0 of Apache audit we have the virtual server, so this will be our server -->
<xsl:variable name="vServer" select="data[@name = 'vserver']/@value" />
<xsl:variable name="vServerPort" select="data[@name = 'vserverport']/@value" />
...
<!-- -->
<Client>
  <!-- See if we can get the client HostName from the given IP address -->
  <xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value, $formattedDate, true())" />
  <xsl:if test="$chost">
    <HostName>
      <xsl:value-of select="$chost" />
    </HostName>
  </xsl:if>
  <IPAddress>
    <xsl:value-of select="data[@name = 'clientip']/@value" />
  </IPAddress>
  <xsl:if test="data[@name = 'clientport']/@value !='-'">
    <Port>
      <xsl:value-of select="data[@name = 'clientport']/@value" />
    </Port>
  </xsl:if>
  <!-- See if we can get the client Location for the client FQDN if we have it -->
  <xsl:variable name="cloc" select="stroom:lookup('FQDN_TO_LOC', $chost, $formattedDate, true())" />
  <xsl:if test="$chost != '' and $cloc">
    <xsl:copy-of select="$cloc" />
  </xsl:if>
</Client>
<!-- -->
<Server>
  <HostName>
    <xsl:value-of select="$vServer" />
  </HostName>
  <!-- See if we can get the service IPAddress -->
  <xsl:variable name="sipaddr" select="stroom:lookup('FQDN_TO_IP', $vServer, $formattedDate, true())" />
  <xsl:if test="$sipaddr">
    <IPAddress>
      <xsl:value-of select="$sipaddr" />
    </IPAddress>
  </xsl:if>
  <!-- Server Port Number -->
  <xsl:if test="$vServerPort !='-'">
    <Port>
      <xsl:value-of select="$vServerPort" />
    </Port>
  </xsl:if>
  <!-- See if we can get the Server location -->
  <xsl:variable name="sloc" select="stroom:lookup('FQDN_TO_LOC', $vServer, $formattedDate, true())" />
  <xsl:if test="$sloc">
    <xsl:copy-of select="$sloc" />
  </xsl:if>
</Server>
```

Once the above modifications have been made to the XSLT, save them by pressing the Save icon.

Note the use of the fourth Boolean argument, ignoreWarnings, in the lookups. We set this to true() because the reference map may not always contain the item we want, and warnings consume space in the Stroom store file system.
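
The logic of the completed segment can be restated in Python to make the chaining of lookups explicit: the client IP address yields an FQDN, that FQDN yields a location, and the server FQDN yields an IP address and a location. This is an illustrative sketch only; `decorate_endpoints` and the dictionary-based maps are hypothetical stand-ins for the reference maps, and every miss is silently ignored, as with ignoreWarnings set to true(). The sample values come from the Event fragment shown earlier.

```python
from typing import Any, Dict, Optional

ReferenceMaps = Dict[str, Dict[str, Any]]


def decorate_endpoints(record: Dict[str, str], maps: ReferenceMaps) -> Dict[str, Dict[str, Any]]:
    """Build Client and Server details following the same rules as the XSLT."""

    def lookup(map_name: str, key: Optional[str]) -> Optional[Any]:
        # Like ignoreWarnings=true(): a miss (or missing key) quietly yields None.
        if not key:
            return None
        return maps.get(map_name, {}).get(key)

    client: Dict[str, Any] = {}
    chost = lookup("IP_TO_FQDN", record["clientip"])
    if chost:
        client["HostName"] = chost
    client["IPAddress"] = record["clientip"]
    if record["clientport"] != "-":
        client["Port"] = record["clientport"]
    # The client location is only found when a client FQDN was found first.
    cloc = lookup("FQDN_TO_LOC", chost)
    if cloc:
        client["Location"] = cloc

    server: Dict[str, Any] = {"HostName": record["vserver"]}
    sipaddr = lookup("FQDN_TO_IP", record["vserver"])
    if sipaddr:
        server["IPAddress"] = sipaddr
    if record["vserverport"] != "-":
        server["Port"] = record["vserverport"]
    sloc = lookup("FQDN_TO_LOC", record["vserver"])
    if sloc:
        server["Location"] = sloc
    return {"Client": client, "Server": server}


if __name__ == "__main__":
    maps = {
        "IP_TO_FQDN": {"192.168.4.220": "host01.company4.org"},
        "FQDN_TO_IP": {"stroomnode00.strmdev00.org": "192.168.2.245"},
        "FQDN_TO_LOC": {"host01.company4.org": {"Country": "USA", "Site": "LosAngeles-S19"}},
    }
    record = {"clientip": "192.168.4.220", "clientport": "61801",
              "vserver": "stroomnode00.strmdev00.org", "vserverport": "443"}
    print(decorate_endpoints(record, maps))
```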

Thus, the fragment from the output pane for our first event shows

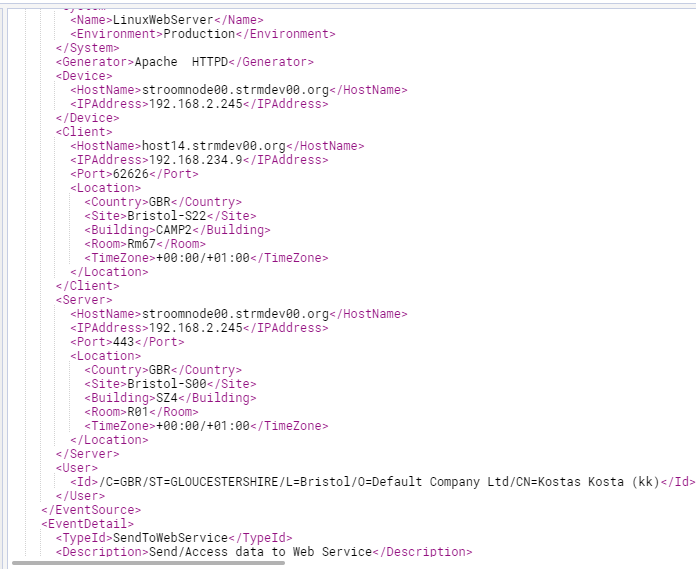

and the fragment from the output pane for our last event of this stream shows

The complete XSLT translation is:

```xml
<?xml version="1.0" encoding="UTF-8" ?>
<xsl:stylesheet xpath-default-namespace="records:2" xmlns="event-logging:3" xmlns:stroom="stroom" xmlns:xsl="http://www.w3.org/1999/XSL/Transform" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xs="http://www.w3.org/2001/XMLSchema" version="3.0">

  <!-- Ingest the records tree -->
  <xsl:template match="records">
    <Events xsi:schemaLocation="event-logging:3 file://event-logging-v3.2.3.xsd" Version="3.2.3">
      <xsl:apply-templates />
    </Events>
  </xsl:template>

  <!-- Only generate events if we have an url on input -->
  <xsl:template match="record[data[@name = 'url']]">
    <Event>
      <xsl:apply-templates select="." mode="eventTime" />
      <xsl:apply-templates select="." mode="eventSource" />
      <xsl:apply-templates select="." mode="eventDetail" />
    </Event>
  </xsl:template>

  <xsl:template match="node()" mode="eventTime">
    <EventTime>
      <TimeCreated>
        <xsl:value-of select="stroom:format-date(data[@name = 'time']/@value, 'dd/MMM/yyyy:HH:mm:ss XX')" />
      </TimeCreated>
    </EventTime>
  </xsl:template>

  <xsl:template match="node()" mode="eventSource">
    <!-- Set some variables to enable lookup functionality -->
    <xsl:variable name="formattedDate" select="stroom:format-date(data[@name = 'time']/@value, 'dd/MMM/yyyy:HH:mm:ss XX')" />
    <!-- For Version 2.0 of Apache audit we have the virtual server, so this will be our server -->
    <xsl:variable name="vServer" select="data[@name = 'vserver']/@value" />
    <xsl:variable name="vServerPort" select="data[@name = 'vserverport']/@value" />
    <EventSource>
      <System>
        <Name>
          <xsl:value-of select="stroom:feed-attribute('System')" />
        </Name>
        <Environment>
          <xsl:value-of select="stroom:feed-attribute('Environment')" />
        </Environment>
      </System>
      <Generator>Apache HTTPD</Generator>
      <Device>
        <HostName>
          <xsl:value-of select="stroom:feed-attribute('MyHost')" />
        </HostName>
        <IPAddress>
          <xsl:value-of select="stroom:feed-attribute('MyIPAddress')" />
        </IPAddress>
      </Device>
      <Client>
        <xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value, $formattedDate, true())" />
        <xsl:if test="$chost">
          <HostName>
            <xsl:value-of select="$chost" />
          </HostName>
        </xsl:if>
        <IPAddress>
          <xsl:value-of select="data[@name = 'clientip']/@value" />
        </IPAddress>
        <xsl:if test="data[@name = 'clientport']/@value !='-'">
          <Port>
            <xsl:value-of select="data[@name = 'clientport']/@value" />
          </Port>
        </xsl:if>
        <xsl:variable name="cloc" select="stroom:lookup('FQDN_TO_LOC', $chost, $formattedDate, true())" />
        <xsl:if test="$chost != '' and $cloc">
          <xsl:copy-of select="$cloc" />
        </xsl:if>
      </Client>
      <Server>
        <HostName>
          <xsl:value-of select="$vServer" />
        </HostName>
        <!-- See if we can get the service IPAddress -->
        <xsl:variable name="sipaddr" select="stroom:lookup('FQDN_TO_IP', $vServer, $formattedDate, true())" />
        <xsl:if test="$sipaddr">
          <IPAddress>
            <xsl:value-of select="$sipaddr" />
          </IPAddress>
        </xsl:if>
        <!-- Server Port Number -->
        <xsl:if test="$vServerPort !='-'">
          <Port>
            <xsl:value-of select="$vServerPort" />
          </Port>
        </xsl:if>
        <!-- See if we can get the Server location -->
        <xsl:variable name="sloc" select="stroom:lookup('FQDN_TO_LOC', $vServer, $formattedDate, true())" />
        <xsl:if test="$sloc">
          <xsl:copy-of select="$sloc" />
        </xsl:if>
      </Server>
      <User>
        <Id>
          <xsl:value-of select="data[@name='user']/@value" />
        </Id>
      </User>
    </EventSource>
  </xsl:template>

  <xsl:template match="node()" mode="eventDetail">
    <EventDetail>
      <TypeId>SendToWebService</TypeId>
      <Description>Send/Access data to Web Service</Description>
      <Classification>
        <Text>UNCLASSIFIED</Text>
      </Classification>
      <Send>
        <Source>
          <Device>
            <IPAddress>
              <xsl:value-of select="data[@name = 'clientip']/@value"/>
            </IPAddress>
            <Port>
              <xsl:value-of select="data[@name = 'vserverport']/@value"/>
            </Port>
          </Device>
        </Source>
        <Destination>
          <Device>
            <HostName>
              <xsl:value-of select="data[@name = 'vserver']/@value"/>
            </HostName>
            <Port>
              <xsl:value-of select="data[@name = 'vserverport']/@value"/>
            </Port>
          </Device>
        </Destination>
        <Payload>
          <Resource>
            <URL>
              <xsl:value-of select="data[@name = 'url']/@value"/>
            </URL>
            <Referrer>
              <xsl:value-of select="data[@name = 'referer']/@value"/>
            </Referrer>
            <HTTPMethod>
              <xsl:value-of select="data[@name = 'url']/data[@name = 'httpMethod']/@value"/>
            </HTTPMethod>
            <HTTPVersion>
              <xsl:value-of select="data[@name = 'url']/data[@name = 'version']/@value"/>
            </HTTPVersion>
            <UserAgent>
              <xsl:value-of select="data[@name = 'userAgent']/@value"/>
            </UserAgent>
            <InboundSize>
              <xsl:value-of select="data[@name = 'bytesIn']/@value"/>
            </InboundSize>
            <OutboundSize>
              <xsl:value-of select="data[@name = 'bytesOut']/@value"/>
            </OutboundSize>
            <OutboundContentSize>
              <xsl:value-of select="data[@name = 'bytesOutContent']/@value"/>
            </OutboundContentSize>
            <RequestTime>
              <xsl:value-of select="data[@name = 'timeM']/@value"/>
            </RequestTime>
            <ConnectionStatus>
              <xsl:value-of select="data[@name = 'constatus']/@value"/>
            </ConnectionStatus>
            <InitialResponseCode>
              <xsl:value-of select="data[@name = 'responseB']/@value"/>
            </InitialResponseCode>
            <ResponseCode>
              <xsl:value-of select="data[@name = 'response']/@value"/>
            </ResponseCode>
            <Data Name="Protocol">
              <xsl:attribute select="data[@name = 'url']/data[@name = 'protocol']/@value" name="Value"/>
            </Data>
          </Resource>
        </Payload>
        <!-- Normally our translation at this point would contain an <Outcome> element.
             Since all our sample data includes only successful outcomes, we have omitted the <Outcome> element
             in the translation to minimise complexity -->
      </Send>
    </EventDetail>
  </xsl:template>

</xsl:stylesheet>
```

Troubleshooting lookup issues

If your lookup is not working as expected, you can use the fifth (trace) argument of the lookup function to help investigate the issue.

If we return to the <Client> segment of our translation and change

```xml
<Client>
  <xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value, $formattedDate, true())" />
```

to

```xml
<Client>
  <xsl:variable name="chost" select="stroom:lookup('IP_TO_FQDN', data[@name = 'clientip']/@value, $formattedDate, true(), true())" />
```

and then press the Refresh Current Step icon, you will notice two blue squares at the top right of the code pane.

If you click on the lower blue square, the code pane scrolls back to the beginning of the XSLT.

Note the

Once you have completed your troubleshooting, you can either remove the fifth argument from the lookup function or set it to false().

2 - Create a Simple Reference Feed

Introduction

A Reference Feed is a temporal set of data that a pipeline’s translation can look up to gain additional information with which to decorate the subject data of the translation, for example an XML Event.

A Reference Feed is temporal in that, each time a new set of reference data is loaded into Stroom, the effective date for that data is also recorded. Thus, by using a timestamp field from the subject data, the appropriate batch of reference data can be accessed.
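
One way to picture this temporal behaviour is a list of reference batches sorted by effective time, where a lookup uses the most recent batch whose effective time is not later than the event time. The Python sketch below models that rule under this assumption; `TemporalReference` and the `renamed.strmdev00.org` host name are hypothetical, and Stroom's actual storage and edge-case handling may differ.

```python
from bisect import bisect_right
from datetime import datetime, timezone
from typing import Dict, List, Optional


class TemporalReference:
    """Reference batches keyed by effective time (a model, not Stroom's storage)."""

    def __init__(self) -> None:
        self._times: List[datetime] = []          # sorted effective times
        self._batches: List[Dict[str, str]] = []  # batch i applies from _times[i]

    def load(self, effective: datetime, batch: Dict[str, str]) -> None:
        # Insert the batch so both lists stay ordered by effective time.
        i = bisect_right(self._times, effective)
        self._times.insert(i, effective)
        self._batches.insert(i, dict(batch))

    def get(self, key: str, at: datetime) -> Optional[str]:
        # Index of the latest batch whose effective time is <= `at`.
        i = bisect_right(self._times, at) - 1
        if i < 0:
            return None  # the event predates all loaded reference data
        return self._batches[i].get(key)


if __name__ == "__main__":
    utc = timezone.utc
    ref = TemporalReference()
    ref.load(datetime(2019, 12, 1, tzinfo=utc), {"192.168.2.245": "stroomnode00.strmdev00.org"})
    ref.load(datetime(2020, 2, 1, tzinfo=utc), {"192.168.2.245": "renamed.strmdev00.org"})
    print(ref.get("192.168.2.245", datetime(2020, 1, 18, 20, 39, 4, tzinfo=utc)))
```

An event from January 2020 resolves against the December 2019 batch, while an event after 1 February 2020 would see the newer batch.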

A typical reference data set to support the Stroom XML Event schema might be one that relates to devices. Such a data set can contain the device logical identifiers, such as fully qualified domain name and IP address, and their geographical location information, such as country, site, building, room and timezone.

The following example will describe how to create a reference feed for such device data.

We will call the reference feed GeoHost-V1.0-REFERENCE.

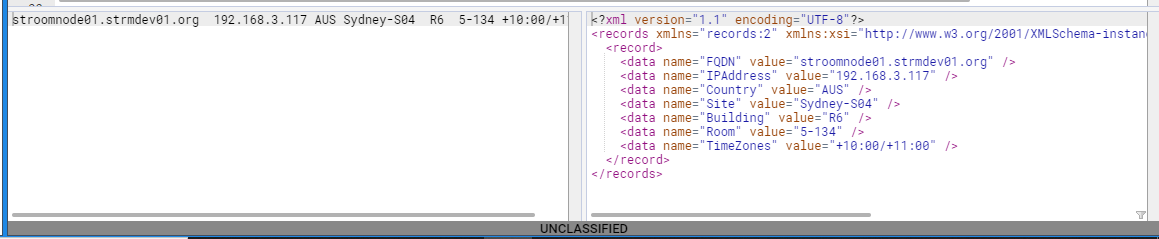

Reference Data

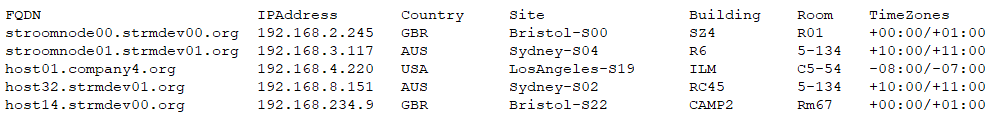

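Our reference data will be supplied in a tab-delimited file whose first line holds the column headings, followed by one line per device giving: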

- the device Fully Qualified Domain Name

- the device IP Address

- the device Country location (using ISO 3166-1 alpha-3 codes)

- the device Site location

- the device Building location

- the device Room location

- the device TimeZone location (both standard then daylight timezone offsets from UTC)
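
A single row of this data could be modelled as below, including splitting the TimeZones value into its standard and daylight offsets. The `DeviceRef` and `parse_offset` names and the Python field names are illustrative assumptions; the example row is the `host01.company4.org` entry from the sample data shown in the Data Splitter comment later in this document.

```python
from dataclasses import dataclass
from datetime import timedelta
from typing import Tuple


def parse_offset(offset: str) -> timedelta:
    """Parse a UTC offset such as '+10:00' or '-08:00'."""
    sign = -1 if offset.startswith("-") else 1
    hours, minutes = offset.lstrip("+-").split(":")
    return sign * timedelta(hours=int(hours), minutes=int(minutes))


@dataclass(frozen=True)
class DeviceRef:
    """One row of the device reference data (field names are illustrative)."""

    fqdn: str
    ip_address: str
    country: str    # ISO 3166-1 alpha-3 code, e.g. "GBR"
    site: str
    building: str
    room: str
    timezones: str  # "<standard>/<daylight>", e.g. "+00:00/+01:00"

    def utc_offsets(self) -> Tuple[timedelta, timedelta]:
        """Return the (standard, daylight) offsets from UTC."""
        standard, daylight = self.timezones.split("/")
        return parse_offset(standard), parse_offset(daylight)


if __name__ == "__main__":
    row = DeviceRef("host01.company4.org", "192.168.4.220", "USA",
                    "LosAngeles-S19", "ILM", "C5-54-2", "-08:00/-07:00")
    print(row.utc_offsets())
```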

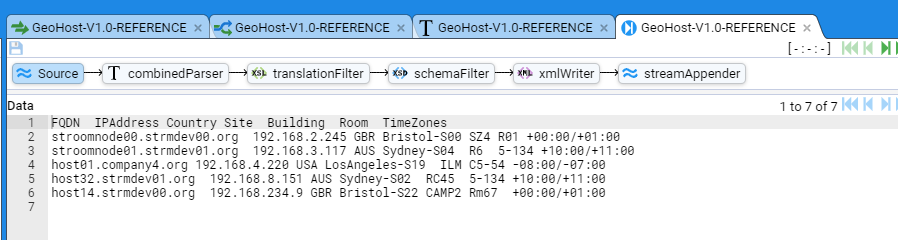

For simplicity, our example will use a file with just five entries.

A copy of this sample data source can be found here. Save a copy of this data to your local environment for use later in this HOWTO. Save this file as a text document with ANSI encoding.

Creation

To create our Reference Event stream we need to create:

- the Feed

- a Pipeline to automatically process and store the Reference data

- a Text Parser to convert the text file into simple XML record format, and

- a Translation to create reference data maps

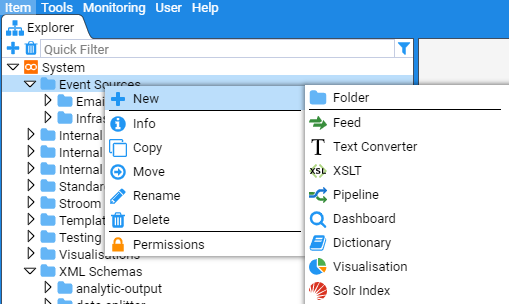

Create Feed

First, within the Explorer pane, select the Event Sources group and right-click to bring up the object context menu.

If you hover over the

Now hover the mouse over the

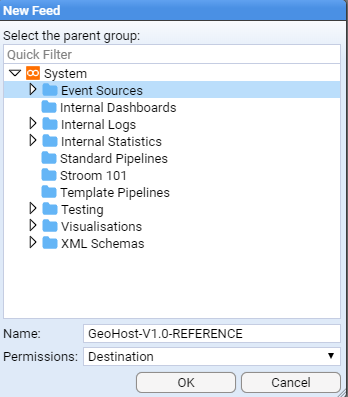

When the New Feed selection window comes up, navigate to the Event Sources system group.

Then enter the name of the reference feed, GeoHost-V1.0-REFERENCE, into the Name: text entry box.

On pressing the OK button, we will see the following Feed configuration tab appear.

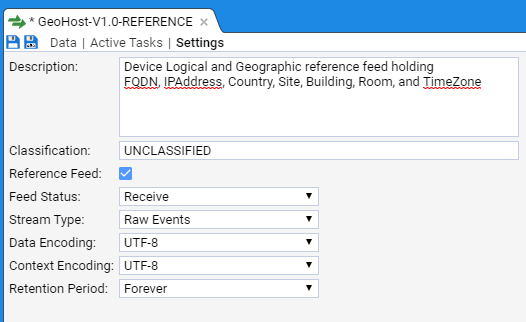

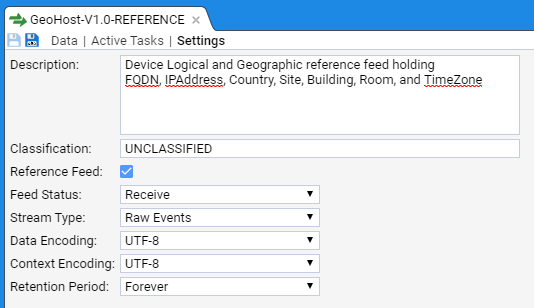

Click on the Settings sub-item in the GeoHost-V1.0-REFERENCE Feed tab to populate the initial Settings configuration.

Enter an appropriate description and classification, click on the Reference Feed check box, and then use the Stream Type drop-down menu to set the stream type to Raw Reference.

At this point, save the configuration so far by clicking on the Save icon.

Load sample Reference data

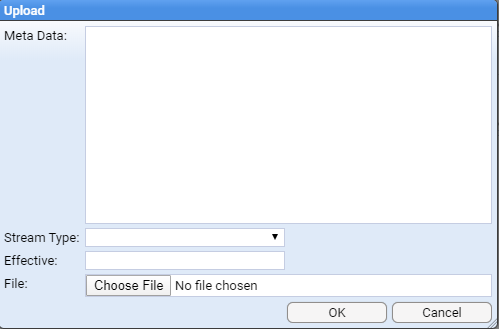

At this point we want to load our sample reference data in order to develop our reference feed. We can do this in two ways: by posting the file to our Stroom web server, or by uploading the data directly through the user interface. For this example we will use Stroom’s user interface upload facility.

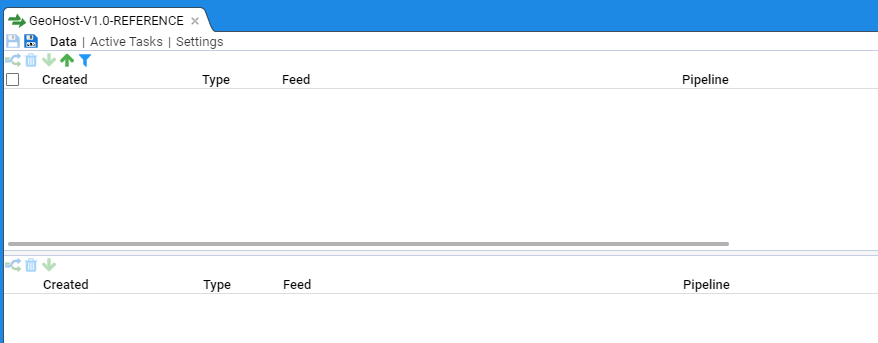

First, open the Data sub-item in the GeoHost-V1.0-REFERENCE feed configuration tab to reveal

Note the

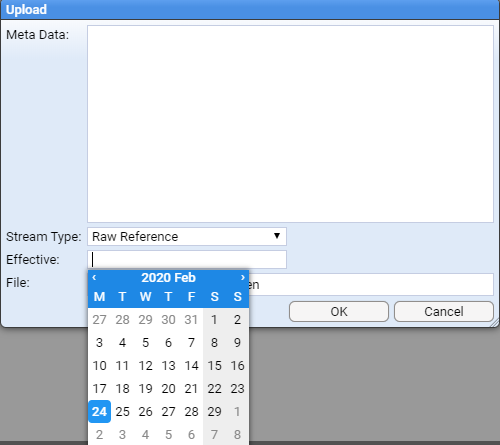

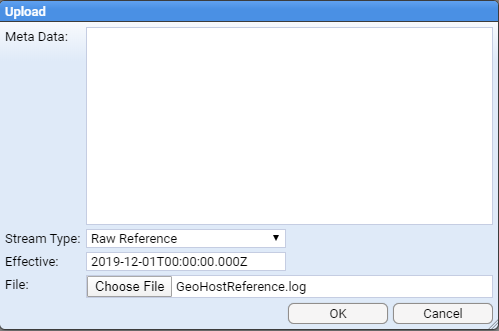

Naturally, as this is a reference feed we are creating and this is raw data we are uploading, we select a Stream Type: of Raw Reference. We need to set the Effective: date (really a timestamp) for this specific stream of reference data. Clicking in the Effective: entry box will cause a calendar selection window to be displayed (initially set to the current date).

We are going to set the effective date to be late in 2019.

Normally, you would choose a time stamp that matches the generation of the reference data.

Click on the blue Previous Month icon (a less than symbol <) on the Year/Month line to move back to December 2019.

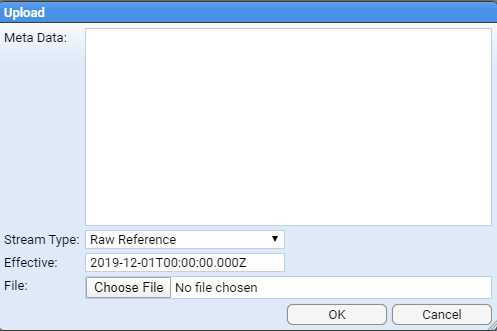

Select the 1st (clicking on 1) at which point the calendar selection window will disappear and a time of 2019-12-01T00:00:00.000Z is displayed. This is the default whenever using the calendar selection window in Stroom - the resultant timestamp is that of the day selected at 00:00:00 (Zulu time). To get the calendar selection window to disappear, click anywhere outside of the timestamp entry box.

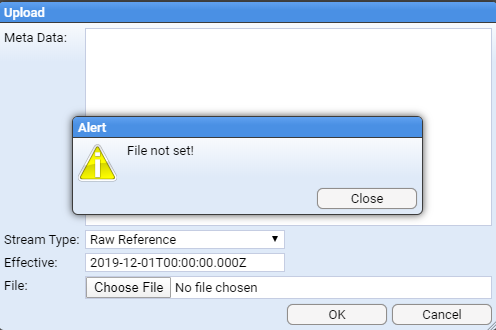

Note, if you happen to click on the button before selecting the File (or Stream Type for that matter), an appropriate Alert dialog box will be displayed

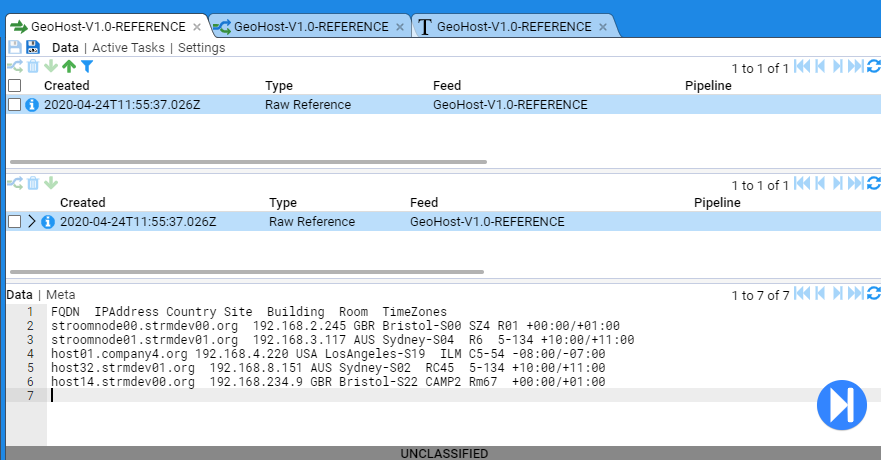

We don’t need to set Meta Data for this stream of reference data, but we (obviously) need to select the file. For the purposes of this example, we will utilise the file GeoHostReference.log you downloaded earlier in the Reference Data section of this document. This file contains a header and five lines of reference data as per

When we construct the pipeline for this reference feed, we will see how to make use of the header line.

So, click on the Choose File button to bring up a file selector window. Navigate within the selector window to the location on your local machine where you saved the GeoHostReference.log file. On clicking Open, we return to the Upload window with the file selected.

On clicking, we get an Alert dialog window advising that the file has been uploaded, at which point we press Close.

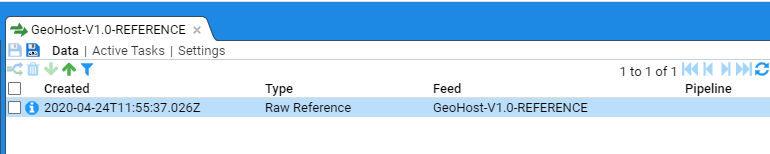

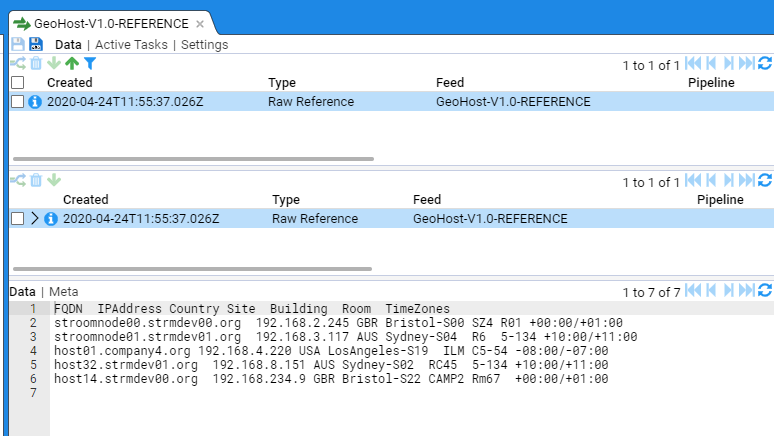

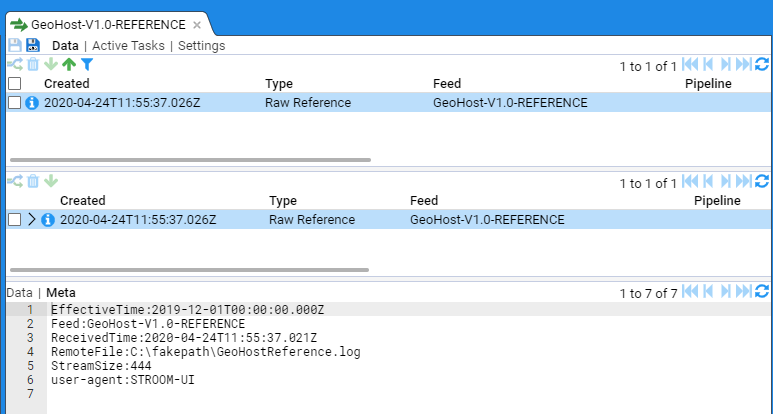

At this point, the Upload selection window closes, and we see our file displayed in the GeoHost-V1.0-REFERENCE Data stream table.

When we click on the newly up-loaded stream in the Stream Table pane we see the other two panes fill with information.

The middle pane shows the selected or Specific feed and any linked streams. A linked stream could be the resultant Reference data set generated from a Raw Reference stream. If errors occur during processing of the stream, then a linked stream could be an Error stream.

The bottom pane displays the selected stream’s data or metadata. If we click on the Meta link at the top of this pane, we will see the metadata associated with this stream. We also note that the Meta link at the bottom of the pane is now emboldened.

We can see the metadata we set - the EffectiveTime, and implicitly, the Feed but we also see additional fields that Stroom has added that provide more detail about the data and its delivery to Stroom such as how and when it was received. We now need to switch back to the Data display as we need to author our reference feed translation.

Create Pipeline

We now need to create the pipeline for our reference feed so that we can create our translation and hence create reference data for our feed.

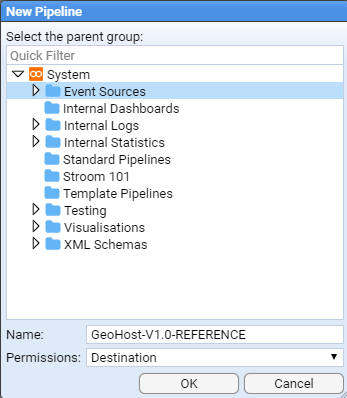

Within the Explorer pane, and having selected the Event Sources system group, right click to bring up the object context menu, then the New sub-context menu.

Move to the

Feeds and Translations system group then enter the name of the reference feed, GeoHost-V1.0-REFERENCE in the Name: text entry box.

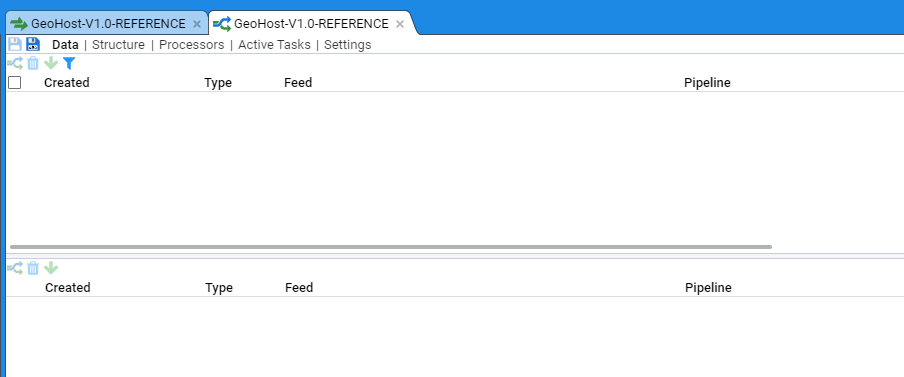

On pressing the OK button, you will be presented with the new pipeline’s configuration tab.

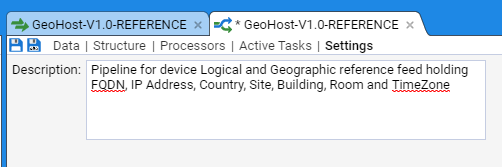

Within Settings, enter an appropriate description as per

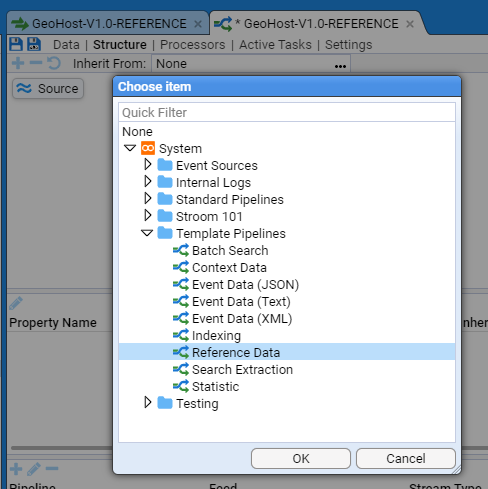

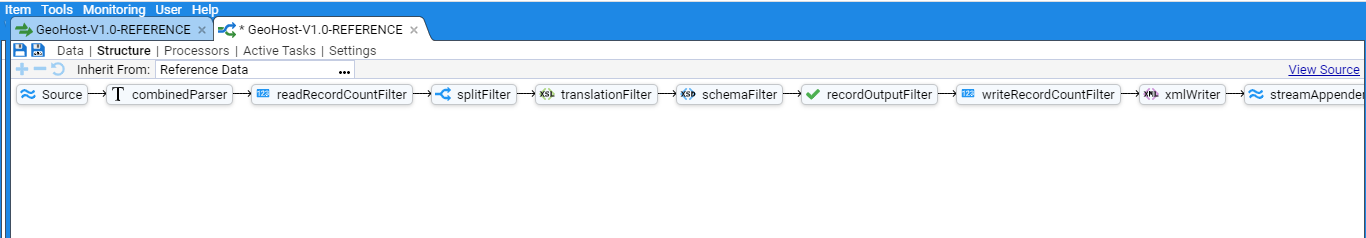

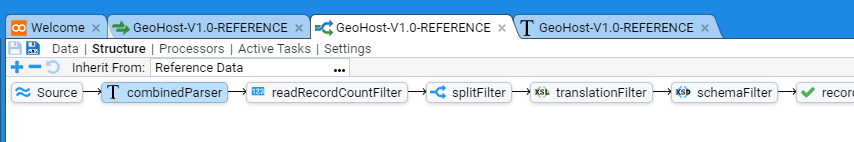

We now need to select the structure this pipeline will use. We need to move from the Settings sub-item on the pipeline configuration tab to the Structure sub-item. This is done by clicking on the Structure link, at which we will see

As this pipeline will be processing reference data, we would use a Reference Data pipeline.

This is done by inheriting it from a defined set of Standard Pipelines.

To do this, click on the menu selection icon to the right of the Inherit From: text display box.

When the Choose item selection window appears, navigate to the Template Pipelines system group (if not already displayed), and select (left click) the Reference Data pipeline.

You can find further information about the Template Pipelines here.

Then press OK. At this point we will see the inherited pipeline structure.

Note that this pipeline has not yet been saved, as indicated by the * in the tab label and the highlighted Save icon.

This ends the first stage of the pipeline creation. We need to author the feed’s translation.

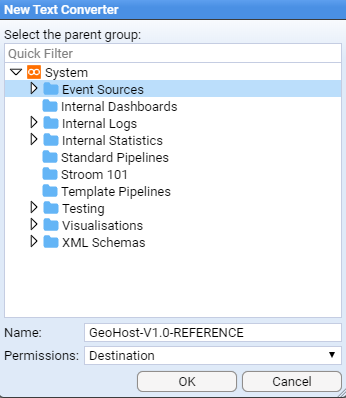

Create Text Converter

To turn our tab-delimited data into Stroom reference data, we first need to convert the text into simple XML. We do this using a Text Converter. Text Converters use a Stroom Data Splitter to convert text into simple XML.

Within the Explorer pane, and having selected the Event Sources system group, right click to bring up the object context menu.

Navigate to the

When the New Text Converter selection window comes up, navigate to and select Event Sources system group, then enter the name of the feed, GeoHost-V1.0-REFERENCE into the Name: text entry box as per

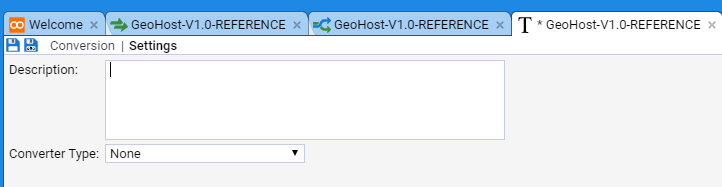

On pressing the OK button, we see the new text converter’s configuration tab displayed.

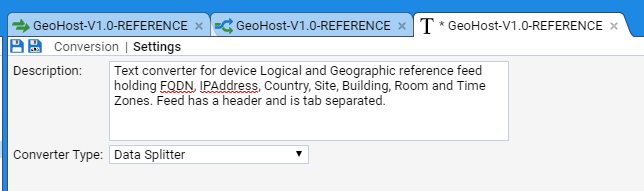

Enter an appropriate description into the Description: text entry box, for instance

Text converter for device Logical and Geographic reference feed holding FQDN, IPAddress, Country, Site, Building, Room and Time Zones.

Feed has a header and is tab separated.

Set the Converter Type: to be Data Splitter from the drop-down menu.

We next press the Conversion sub-item on the TextConverter tab to bring up the Data Splitter editing window.

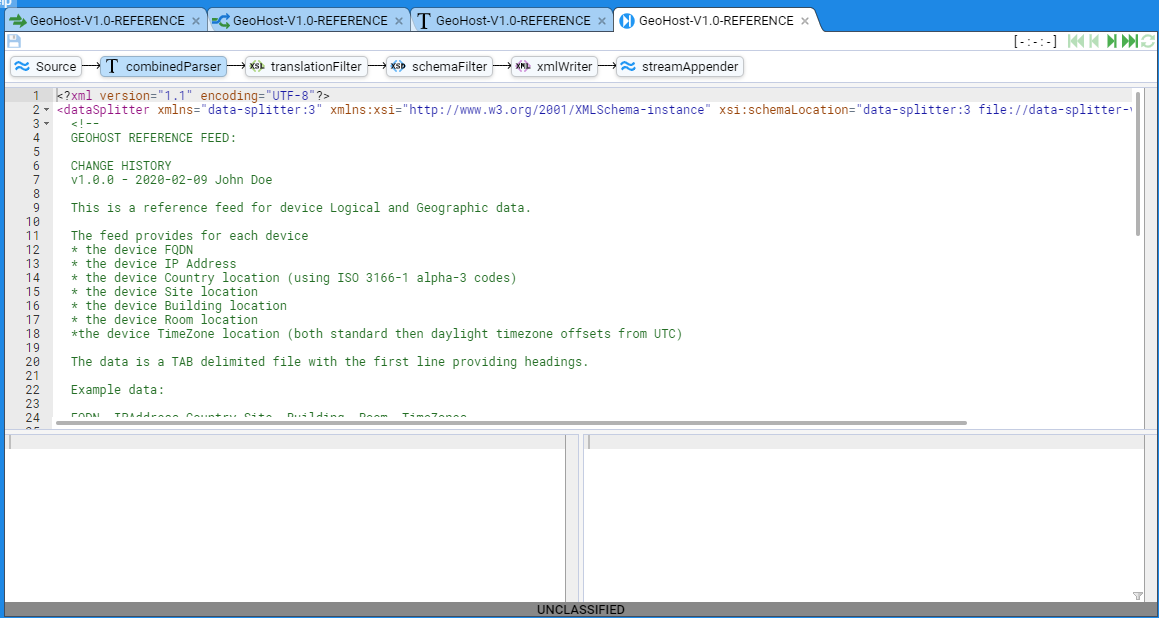

The following is our Data Splitter code (see the Data Splitter documentation for more complete details):

```xml
<?xml version="1.1" encoding="UTF-8"?>
<dataSplitter xmlns="data-splitter:3" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="data-splitter:3 file://data-splitter-v3.0.1.xsd" version="3.0">
  <!--
  GEOHOST REFERENCE FEED:

  CHANGE HISTORY
  v1.0.0 - 2020-02-09 John Doe

  This is a reference feed for device Logical and Geographic data.

  The feed provides for each device
  * the device FQDN
  * the device IP Address
  * the device Country location (using ISO 3166-1 alpha-3 codes)
  * the device Site location
  * the device Building location
  * the device Room location
  * the device TimeZone location (both standard then daylight timezone offsets from UTC)

  The data is a TAB delimited file with the first line providing headings.

  Example data:

  FQDN IPAddress Country Site Building Room TimeZones
  stroomnode00.strmdev00.org 192.168.2.245 GBR Bristol-S00 GZero R00 +00:00/+01:00
  stroomnode01.strmdev01.org 192.168.3.117 AUS Sydney-S04 R6 5-134 +10:00/+11:00
  host01.company4.org 192.168.4.220 USA LosAngeles-S19 ILM C5-54-2 -08:00/-07:00
  -->

  <!-- Match the heading line - split on newline and match a maximum of one line -->
  <split delimiter="\n" maxMatch="1">
    <!-- Store each heading and note we split fields on the TAB (	) character -->
    <group>
      <split delimiter="	">
        <var id="heading"/>
      </split>
    </group>
  </split>

  <!-- Match all other data lines - splitting on newline -->
  <split delimiter="\n">
    <group>
      <!-- Store each field using the column heading number for each column ($heading$1) and note we split fields on the TAB (	) character -->
      <split delimiter="	">
        <data name="$heading$1" value="$1"/>
      </split>
    </group>
  </split>
</dataSplitter>
```

At this point we want to save our Text Converter, so click on the Save icon.

A copy of this data splitter can be found here.
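
To see what the Data Splitter above does with the sample data, here is a rough Python equivalent: the first line supplies the headings, and each later line becomes a `record` whose `data` elements are named after the heading in the same column. This is a sketch for understanding only; `split_reference` is hypothetical and omits any namespace on the output XML.

```python
import xml.etree.ElementTree as ET


def split_reference(text: str) -> ET.Element:
    """Mimic the splitter: first line gives headings, later lines give records."""
    lines = text.splitlines()  # also copes with CRLF line endings
    headings = lines[0].split("\t")
    records = ET.Element("records")
    for line in lines[1:]:
        if not line.strip():
            continue  # skip blank lines, e.g. a trailing newline
        record = ET.SubElement(records, "record")
        # Name each field after the heading in the same column position.
        for heading, value in zip(headings, line.split("\t")):
            ET.SubElement(record, "data", name=heading, value=value)
    return records


if __name__ == "__main__":
    sample = (
        "FQDN\tIPAddress\tCountry\tSite\tBuilding\tRoom\tTimeZones\n"
        "stroomnode00.strmdev00.org\t192.168.2.245\tGBR\tBristol-S00\tGZero\tR00\t+00:00/+01:00\n"
    )
    print(ET.tostring(split_reference(sample), encoding="unicode"))
```

Driving the field names from the header line, as the Data Splitter does with its `heading` variable, means a new column only needs a new heading rather than a change to the converter.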

Assign Text Converter to Pipeline

To test our Text Converter, we need to modify our GeoHost-V1.0-REFERENCE pipeline to use it.

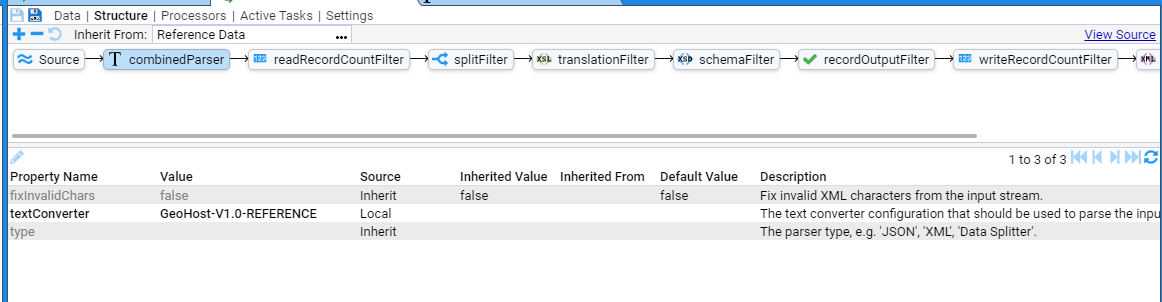

Select the GeoHost-V1.0-REFERENCE pipeline tab and then select the Structure sub-item

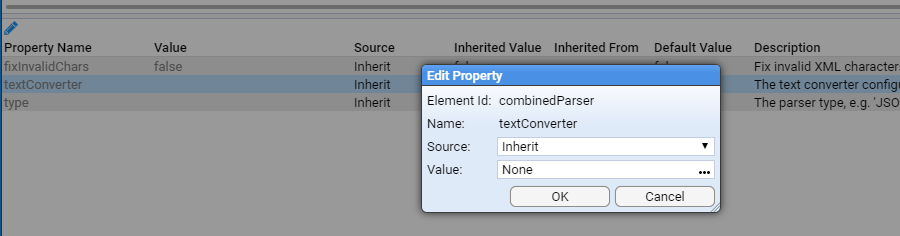

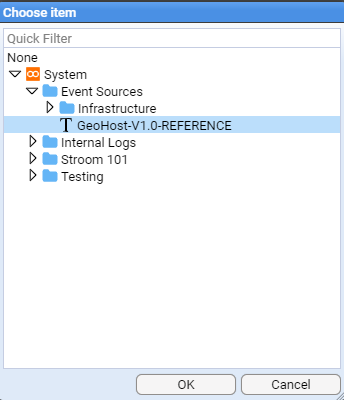

To associate our new Text Converter with the pipeline, click on the CombinedParser pipeline element, move the cursor to the Property (middle) pane, and then double-click on the textConverter Property Name to edit the property.

We leave the Property Source: as Inherit but we need to change the Property Value: from None to be our newly created GeoHost-V1.0-REFERENCE text Converter

then press OK. At this point we will see the Property Value set.

Again press OK to finish editing this property, and we then see that the textConverter property has been set to GeoHost-V1.0-REFERENCE. Similarly, set the type property Value to “Data Splitter”.

At this point, we should save our changes by clicking on the highlighted Save icon.

Test Text Converter

To test our Text Converter, we select the

We now want to step our data through the Text Converter.

We enter Stepping Mode by pressing the stepping button

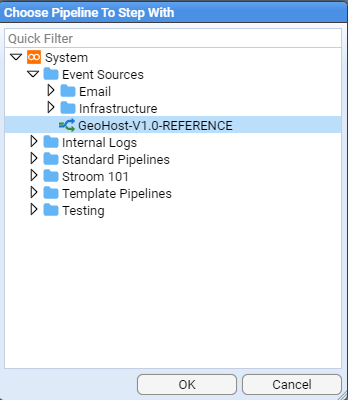

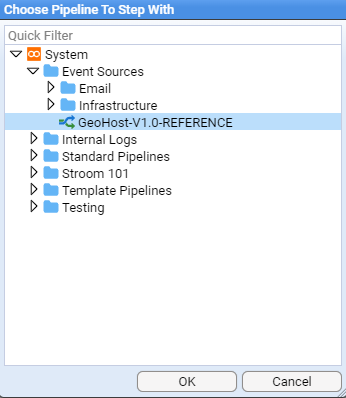

You will then be asked to choose a pipeline to step with. Navigate to the GeoHost-V1.0-REFERENCE pipeline and then press OK.

At this point we enter the pipeline Stepping tab, which initially displays the Raw Reference data from our stream.

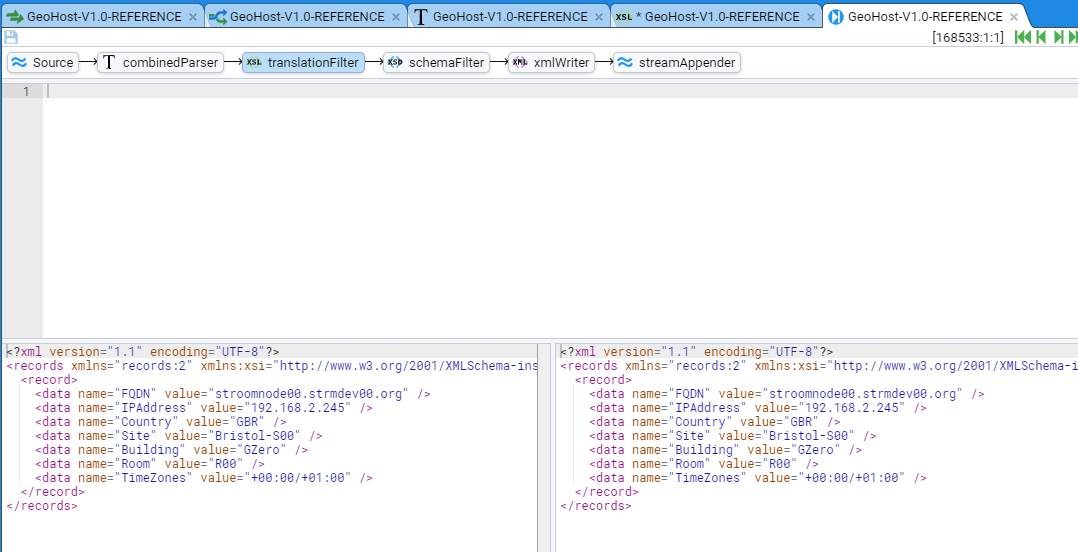

We click on the CombinedParser icon to display the Text Converter stepping window.

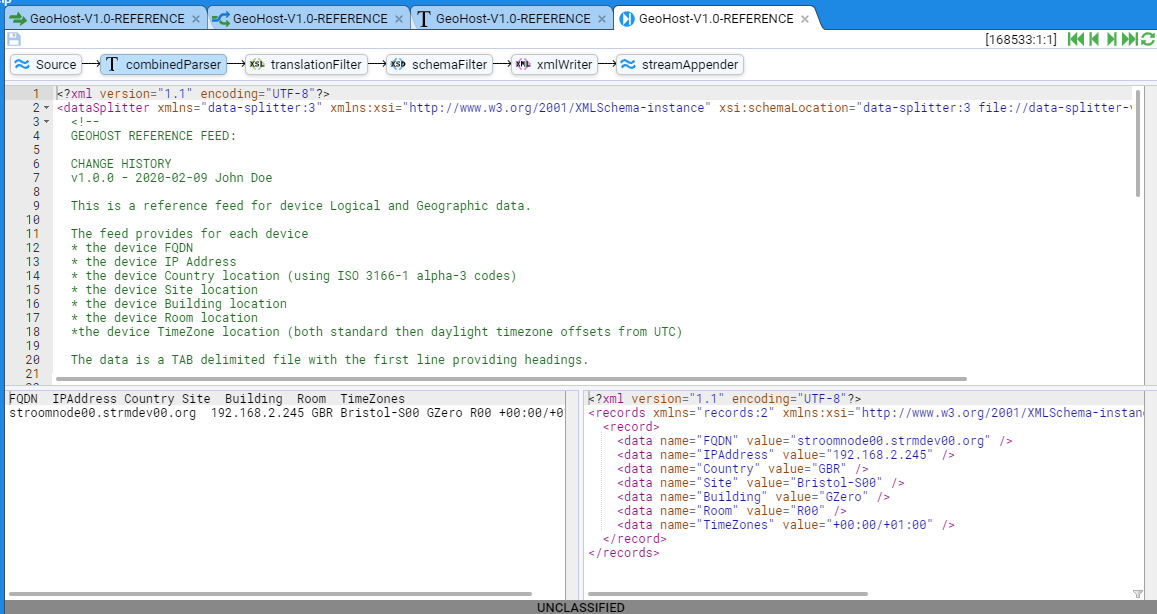

This stepping window is divided into three sub-panes. The top one is the Text Converter editor, which allows you to adjust the text conversion should you wish to. The bottom left window displays the input to the Text Converter. The bottom right window displays the output from the Text Converter for the given input.

We now click on the pipeline Step Forward button

If we again press the

Pressing the Step Forward button

We have now successfully tested the Text Converter for our reference feed. Our next step is to author our translation to generate reference data records that Stroom can use.

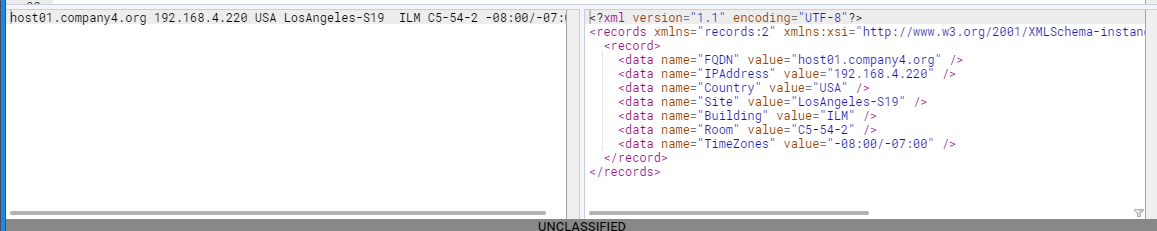

Create XSLT Translation

We now need to create our translation. This XSLT translation will convert simple records XML data into ReferenceData records; see the Stroom reference-data v2.0.1 Schema for details. More information can be found here.
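
Conceptually, the translation turns each device record into entries for the IP_TO_FQDN, FQDN_TO_IP and FQDN_TO_LOC maps used in the first part of this document. The Python sketch below models each entry as a (map, key, value) triple and then groups them into maps; the reference-data schema defines the real element names, and the value layout chosen here for locations is an assumption.

```python
from typing import Dict, Iterable, List, Tuple

# (map name, key, value); the location value layout is an assumption.
Entry = Tuple[str, str, object]

LOCATION_FIELDS = ("Country", "Site", "Building", "Room", "TimeZones")


def reference_entries(rows: Iterable[Dict[str, str]]) -> List[Entry]:
    """Emit one entry per map for every device row."""
    entries: List[Entry] = []
    for row in rows:
        fqdn, ip = row["FQDN"], row["IPAddress"]
        entries.append(("IP_TO_FQDN", ip, fqdn))
        entries.append(("FQDN_TO_IP", fqdn, ip))
        location = {name: row[name] for name in LOCATION_FIELDS}
        entries.append(("FQDN_TO_LOC", fqdn, location))
    return entries


def group_maps(entries: Iterable[Entry]) -> Dict[str, Dict[str, object]]:
    """Group entries by map name, as a lookup would see them."""
    maps: Dict[str, Dict[str, object]] = {}
    for map_name, key, value in entries:
        maps.setdefault(map_name, {})[key] = value
    return maps


if __name__ == "__main__":
    rows = [{"FQDN": "stroomnode00.strmdev00.org", "IPAddress": "192.168.2.245",
             "Country": "GBR", "Site": "Bristol-S00", "Building": "GZero",
             "Room": "R00", "TimeZones": "+00:00/+01:00"}]
    print(group_maps(reference_entries(rows)))
```

Keying the same row three ways lets the event translation go from IP to FQDN, FQDN to IP, or FQDN to location without needing a second reference feed.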

We first need to create an XSLT translation for our feed.

Move back to the Explorer tree, right click on

When the New XSLT selection window comes up, navigate to the Event Sources system group and enter the name of the reference feed - GeoHost-V1.0-REFERENCE into the Name: text entry box as per

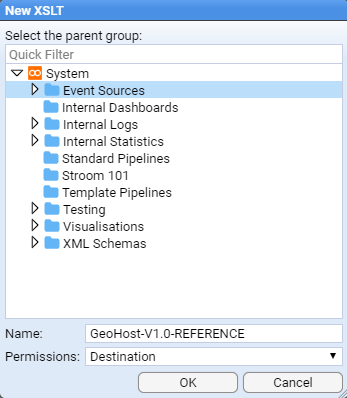

On pressing the OK button, we see the XSL tab for our translation and, as before, we enter an appropriate description before selecting the XSLT sub-item.

On selection of the XSLT sub-item, we are presented with the XSLT editor window

At this point, rather than edit the translation in this editor and then assign this translation to the GeoHost-V1.0-REFERENCE pipeline, we will first make the assignment in the pipeline and then develop the translation whilst stepping through the raw data. This is to demonstrate there are a number of ways to develop a translation.

So, to start, save the XSLT by clicking on the Save icon.

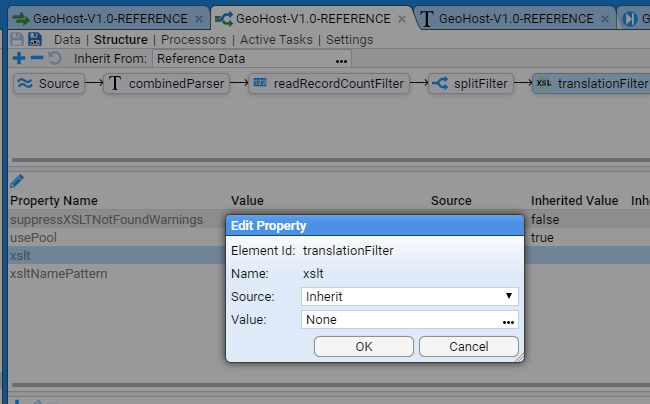

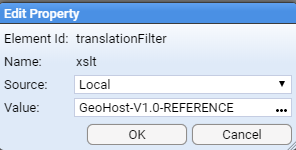

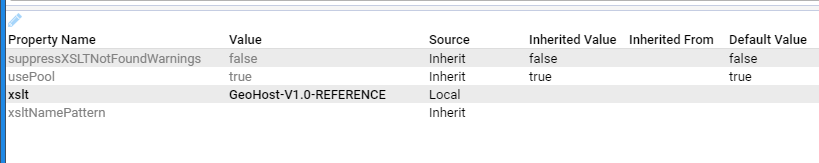

To associate our new translation with the pipeline, move the cursor to the Property Table, click on the grayed out xslt Property Name and then click on the Edit Property

We leave the Property Source: as Inherit, but change the Property Value: from None to our newly created GeoHost-V1.0-REFERENCE XSL translation.

To do this, position the cursor over the menu selection icon

of the Value: chooser and right click, at which the

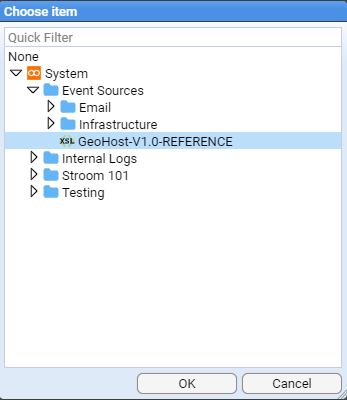

of the Value: chooser and right click, at which the Choose item selection window appears.

Navigate to the Event Sources system group, select the GeoHost-V1.0-REFERENCE XSL translation and confirm the selection. At this point we will see the property Value: set.

Confirm again to finish editing this property, and we see that the xslt property has been set to GeoHost-V1.0-REFERENCE.

At this point, we should save our changes, by clicking on the highlighted

Test XSLT Translation

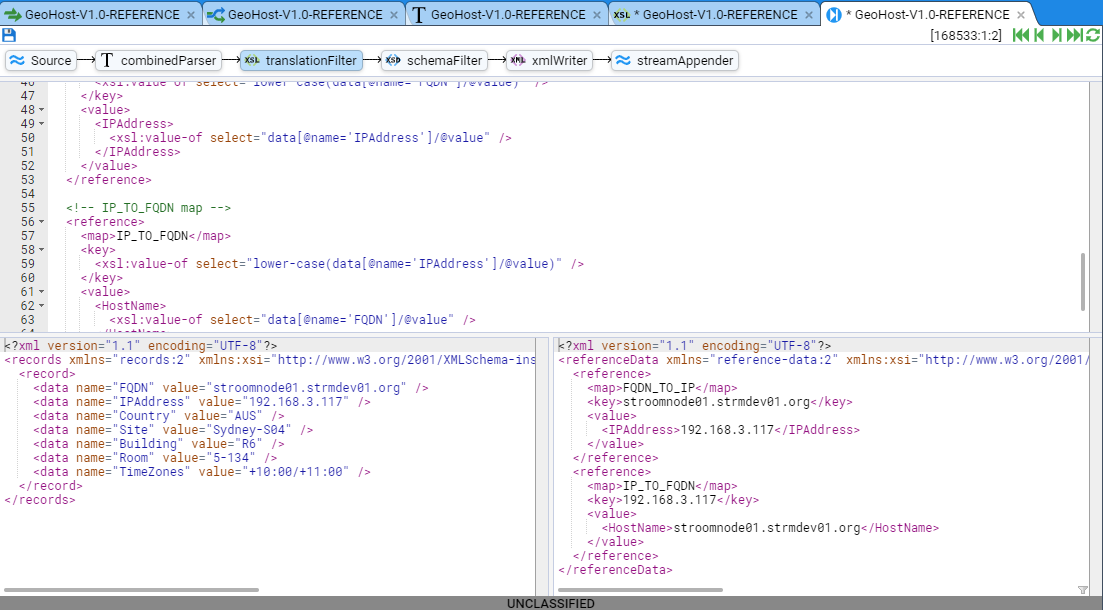

We now go back to the

We now want to step our data through the xslt Translation.

We enter Stepping Mode by pressing the stepping button

You will then be asked to choose a pipeline to step with. Navigate to the GeoHost-V1.0-REFERENCE pipeline and confirm the selection.

At this point we enter the pipeline through the Stepping tab, which initially displays the Raw Reference data from our stream.

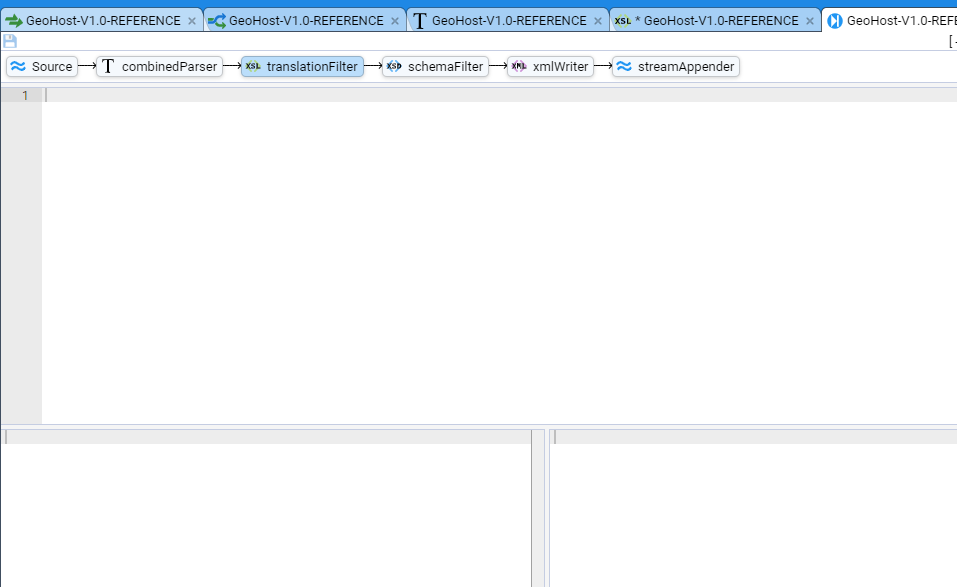

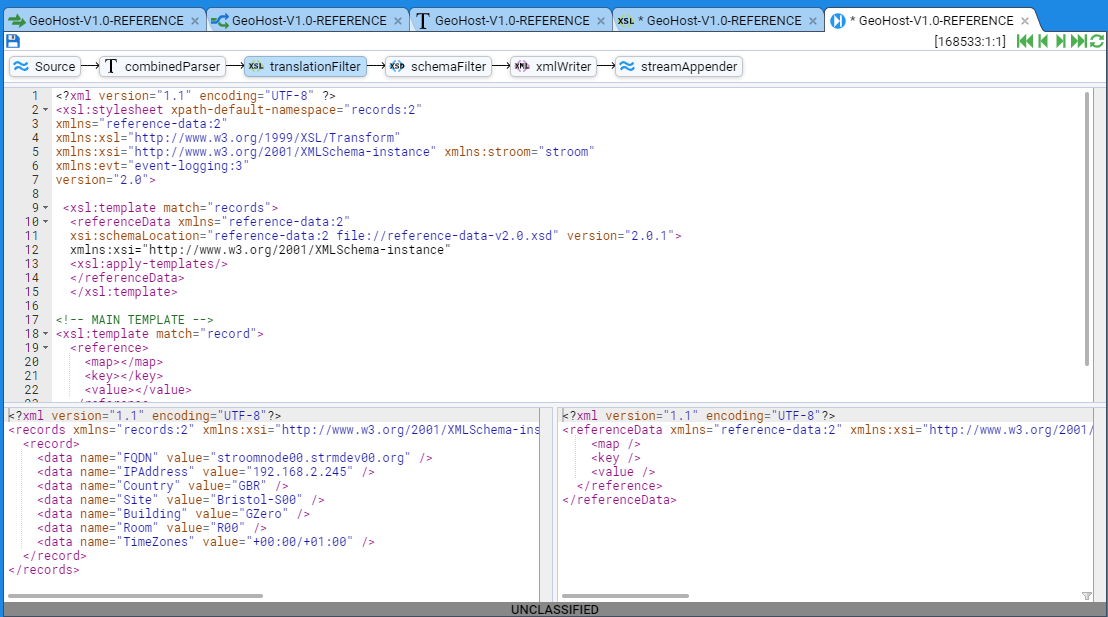

We click on the translationFilter element to enter the XSLT translation stepping window; at this point all panes are empty.

As for the Text Converter, this translation stepping window is divided into three sub-panes. The top one is the XSLT translation editor, the bottom left pane displays the input to the XSLT translation and the bottom right pane displays the output from the XSLT translation for the given input.

We now click on the pipeline Step Forward button

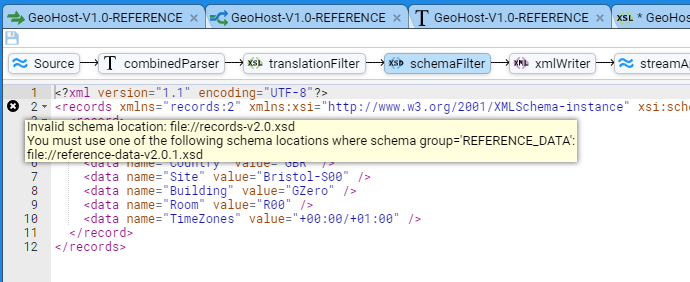

But we also note if we move along the pipeline structure to the

In essence, since the translation has done nothing and the data is still simple records XML, the system is indicating that it expects the output data to be in the reference-data v2.0.1 format.

We can correct this by adding the skeleton xslt translation for reference data into our translationFilter. Move back to the translationFilter element on the pipeline structure and add the following to the xsl window.

    <?xml version="1.1" encoding="UTF-8" ?>
    <xsl:stylesheet xpath-default-namespace="records:2"
        xmlns="reference-data:2"
        xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:stroom="stroom"
        xmlns:evt="event-logging:3"
        version="2.0">

      <xsl:template match="records">
        <referenceData xmlns="reference-data:2"
            xsi:schemaLocation="reference-data:2 file://reference-data-v2.0.xsd"
            version="2.0.1"
            xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance">
          <xsl:apply-templates/>
        </referenceData>
      </xsl:template>

      <!-- MAIN TEMPLATE -->
      <xsl:template match="record">
        <reference>
          <map></map>
          <key></key>
          <value></value>
        </reference>
      </xsl:template>

    </xsl:stylesheet>

And on pressing the refresh button

Also note that if we move to the SchemaFilter element on the pipeline structure, we no longer have an “Invalid Schema Location” error.
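What changed between the failing and passing runs is the root of the output document: a `records` element in the `records:2` namespace before, and a `referenceData` element in the `reference-data:2` namespace (version 2.0.1) after. The Python sketch below checks only that root element. It illustrates the distinction and is not a stand-in for the XSD validation the SchemaFilter performs; the helper name `looks_like_reference_data` is hypothetical.

```python
import xml.etree.ElementTree as ET

REF_NS = "reference-data:2"


def looks_like_reference_data(xml_text: str) -> bool:
    """Hypothetical crude check: is the root referenceData in reference-data:2?

    Only the root element and its version attribute are inspected; this is
    NOT schema validation.
    """
    root = ET.fromstring(xml_text)
    return (root.tag == f"{{{REF_NS}}}referenceData"
            and root.get("version") == "2.0.1")


# Output with no translation applied versus output of the skeleton XSLT.
untranslated = '<records xmlns="records:2"><record/></records>'
skeleton = ('<referenceData xmlns="reference-data:2" version="2.0.1">'
            '<reference><map/><key/><value/></reference></referenceData>')

print(looks_like_reference_data(untranslated))  # False
print(looks_like_reference_data(skeleton))      # True
```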

We next extend the translation to actually generate reference data. The translation will now look like

    <?xml version="1.1" encoding="UTF-8" ?>
    <xsl:stylesheet xpath-default-namespace="records:2"
        xmlns="reference-data:2"
        xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:stroom="stroom"
        xmlns:evt="event-logging:3"
        version="2.0">

      <!--
        GEOHOST REFERENCE FEED:

        CHANGE HISTORY
        v1.0.0 - 2020-02-09 John Doe

        This is a reference feed for device Logical and Geographic data.

        The feed provides for each device
        * the device FQDN
        * the device IP Address
        * the device Country location (using ISO 3166-1 alpha-3 codes)
        * the device Site location
        * the device Building location
        * the device Room location
        * the device TimeZone location (both standard then daylight timezone offsets from UTC)

        The reference maps are
        FQDN_TO_IP - Fully Qualified Domain Name to IP Address
        IP_TO_FQDN - IP Address to FQDN (HostName)
        FQDN_TO_LOC - Fully Qualified Domain Name to Location element
      -->

      <xsl:template match="records">
        <referenceData xmlns="reference-data:2"
            xsi:schemaLocation="reference-data:2 file://reference-data-v2.0.xsd"
            version="2.0.1">
          <xsl:apply-templates/>
        </referenceData>
      </xsl:template>

      <!-- MAIN TEMPLATE -->
      <xsl:template match="record">

        <!-- FQDN_TO_IP map -->
        <reference>
          <map>FQDN_TO_IP</map>
          <key>
            <xsl:value-of select="lower-case(data[@name='FQDN']/@value)" />
          </key>
          <value>
            <IPAddress>
              <xsl:value-of select="data[@name='IPAddress']/@value" />
            </IPAddress>
          </value>
        </reference>

        <!-- IP_TO_FQDN map -->
        <reference>
          <map>IP_TO_FQDN</map>
          <key>
            <xsl:value-of select="lower-case(data[@name='IPAddress']/@value)" />
          </key>
          <value>
            <HostName>
              <xsl:value-of select="data[@name='FQDN']/@value" />
            </HostName>
          </value>
        </reference>

      </xsl:template>

    </xsl:stylesheet>
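The two `reference` templates can be read as a function from one record to two (map, key, value) triples. The Python sketch below mirrors them, representing a record as a hypothetical dict of `data/@name` to `data/@value`. Python's `str.lower()` stands in for XPath `lower-case()`; the two agree for the ASCII host names and addresses used here, but this is an illustration, not how Stroom evaluates the XSLT.

```python
def reference_entries(record):
    """Hypothetical mirror of the FQDN_TO_IP and IP_TO_FQDN templates.

    `record` maps data/@name to data/@value for one <record>. A missing
    field yields "", as xsl:value-of does for an empty selection.
    """
    fqdn = record.get("FQDN", "")
    ip = record.get("IPAddress", "")
    return [
        # FQDN_TO_IP: key is lower-case(FQDN), value is an IPAddress element
        ("FQDN_TO_IP", fqdn.lower(), {"IPAddress": ip}),
        # IP_TO_FQDN: key is lower-case(IPAddress), value keeps the FQDN as given
        ("IP_TO_FQDN", ip.lower(), {"HostName": fqdn}),
    ]


# Illustrative record with a mixed-case FQDN, to show the key normalisation.
entries = reference_entries({"FQDN": "StroomNode00.strmdev00.org",
                             "IPAddress": "192.168.2.245"})
for entry in entries:
    print(entry)
```

Note that the keys are lower-cased but the `HostName` value keeps the FQDN exactly as supplied. Assuming lookups match keys exactly, a translation that queries FQDN_TO_IP should likewise lower-case the host name it passes to stroom:lookup().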

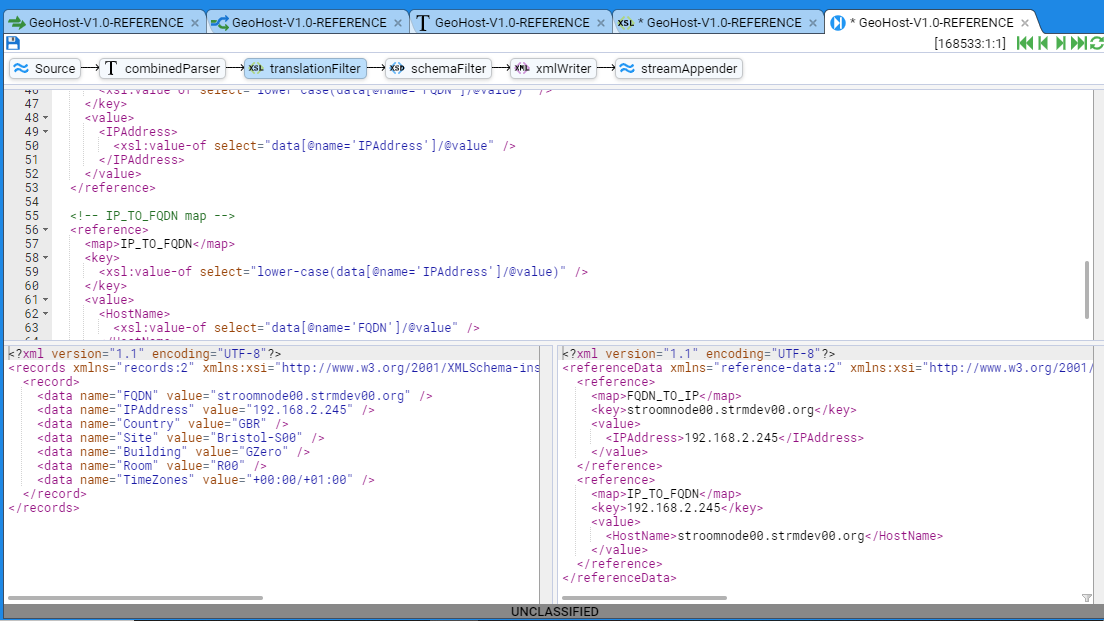

and when we refresh, by pressing the Refresh Current Step button

If we press the Step Forward button

At this point it would be wise to save our translation.

This is done by clicking on the highlighted

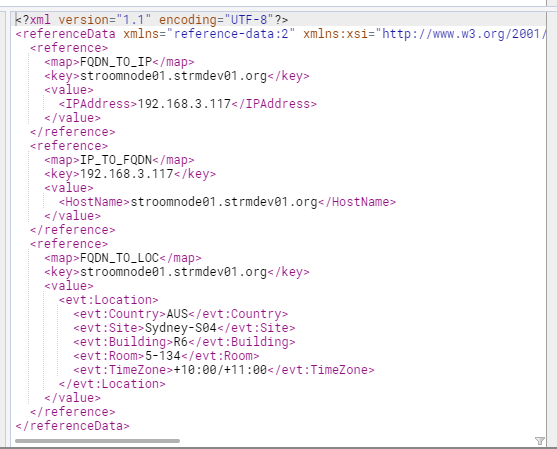

We can now extend our reference data by adding a Fully Qualified Domain Name to Location reference, FQDN_TO_LOC, so the translation now looks like

    <?xml version="1.1" encoding="UTF-8" ?>
    <xsl:stylesheet xpath-default-namespace="records:2"
        xmlns="reference-data:2"
        xmlns:xsl="http://www.w3.org/1999/XSL/Transform"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:stroom="stroom"
        xmlns:evt="event-logging:3"
        version="2.0">

      <!--
        GEOHOST REFERENCE FEED:

        CHANGE HISTORY
        v1.0.0 - 2020-02-09 John Doe

        This is a reference feed for device Logical and Geographic data.

        The feed provides for each device
        * the device FQDN
        * the device IP Address
        * the device Country location (using ISO 3166-1 alpha-3 codes)
        * the device Site location
        * the device Building location
        * the device Room location
        * the device TimeZone location (both standard then daylight timezone offsets from UTC)

        The reference maps are
        FQDN_TO_IP - Fully Qualified Domain Name to IP Address
        IP_TO_FQDN - IP Address to FQDN (HostName)
        FQDN_TO_LOC - Fully Qualified Domain Name to Location element
      -->

      <xsl:template match="records">
        <referenceData xmlns="reference-data:2"
            xsi:schemaLocation="reference-data:2 file://reference-data-v2.0.xsd"
            version="2.0.1">
          <xsl:apply-templates/>
        </referenceData>
      </xsl:template>

      <!-- MAIN TEMPLATE -->
      <xsl:template match="record">

        <!-- FQDN_TO_IP map -->
        <reference>
          <map>FQDN_TO_IP</map>
          <key>
            <xsl:value-of select="lower-case(data[@name='FQDN']/@value)" />
          </key>
          <value>
            <IPAddress>
              <xsl:value-of select="data[@name='IPAddress']/@value" />
            </IPAddress>
          </value>
        </reference>

        <!-- IP_TO_FQDN map -->
        <reference>
          <map>IP_TO_FQDN</map>
          <key>
            <xsl:value-of select="lower-case(data[@name='IPAddress']/@value)" />
          </key>
          <value>
            <HostName>
              <xsl:value-of select="data[@name='FQDN']/@value" />
            </HostName>
          </value>
        </reference>

        <!-- FQDN_TO_LOC map -->
        <reference>
          <map>FQDN_TO_LOC</map>
          <key>
            <xsl:value-of select="lower-case(data[@name='FQDN']/@value)" />
          </key>
          <value>
            <!--
              Note: when mapping to an XML node set, we use the Event namespace (evt:)
              defined on our stylesheet element, so that when the node set is returned
              it is within the correct namespace.
            -->
            <evt:Location>
              <evt:Country>
                <xsl:value-of select="data[@name='Country']/@value" />
              </evt:Country>
              <evt:Site>
                <xsl:value-of select="data[@name='Site']/@value" />
              </evt:Site>
              <evt:Building>
                <xsl:value-of select="data[@name='Building']/@value" />
              </evt:Building>
              <evt:Room>
                <xsl:value-of select="data[@name='Room']/@value" />
              </evt:Room>
              <evt:TimeZone>
                <xsl:value-of select="data[@name='TimeZones']/@value" />
              </evt:TimeZone>
            </evt:Location>
          </value>
        </reference>

      </xsl:template>

    </xsl:stylesheet>
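The FQDN_TO_LOC value differs from the other two maps: it is a small node set in the `event-logging:3` namespace rather than a single element in the reference-data namespace. This Python sketch builds the same `evt:Location` structure with ElementTree, including the mapping of the plural `TimeZones` field to the singular `TimeZone` element. The function names are hypothetical, and the sample record reuses the host01.company4.org values from the ReferenceData listing later in this section.

```python
import xml.etree.ElementTree as ET

EVT_NS = "event-logging:3"
ET.register_namespace("evt", EVT_NS)  # serialise with the evt: prefix used in the XSLT

# (records data/@name, Location child element) pairs, in the XSLT's order.
LOCATION_FIELDS = [
    ("Country", "Country"),
    ("Site", "Site"),
    ("Building", "Building"),
    ("Room", "Room"),
    ("TimeZones", "TimeZone"),  # plural field name, singular element name
]


def location_value(record):
    """Hypothetical builder for the evt:Location node set of FQDN_TO_LOC."""
    loc = ET.Element(f"{{{EVT_NS}}}Location")
    for field, element in LOCATION_FIELDS:
        # xsl:value-of of a missing attribute yields "", so default to "".
        ET.SubElement(loc, f"{{{EVT_NS}}}{element}").text = record.get(field, "")
    return loc


def fqdn_to_loc_entry(record):
    """Return the (map, key, value) triple for one record."""
    return ("FQDN_TO_LOC", record.get("FQDN", "").lower(), location_value(record))


map_name, key, value = fqdn_to_loc_entry({
    "FQDN": "host01.company4.org", "Country": "USA", "Site": "LosAngeles-S19",
    "Building": "ILM", "Room": "C5-54-2", "TimeZones": "-08:00/-07:00",
})
print(map_name, key)
print(ET.tostring(value, encoding="unicode"))
```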

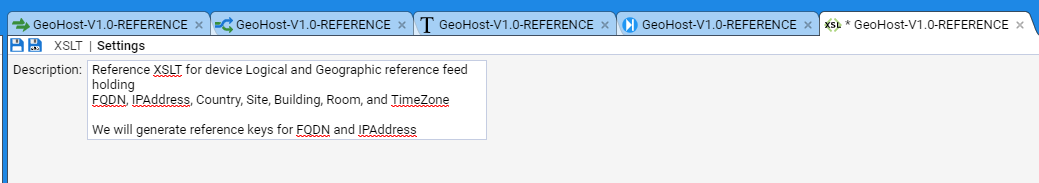

and our second ReferenceData element would now look like

This completes the translation and, with it, the development of our GeoHost-V1.0-REFERENCE reference feed.

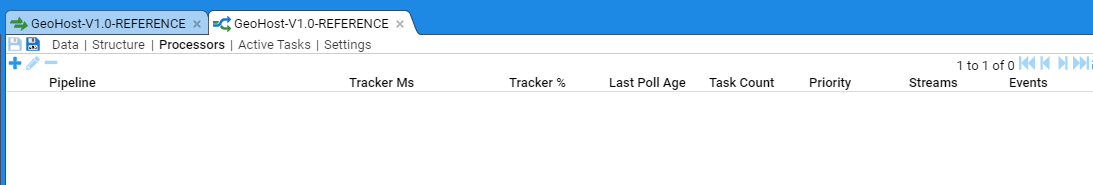

At this point, the reference feed is set up to accept Raw Reference data, but it will not automatically process the raw data and hence it will not place reference data into the reference data store. To have Stroom automatically process Raw Reference streams, you will need to enable Processors for this pipeline.

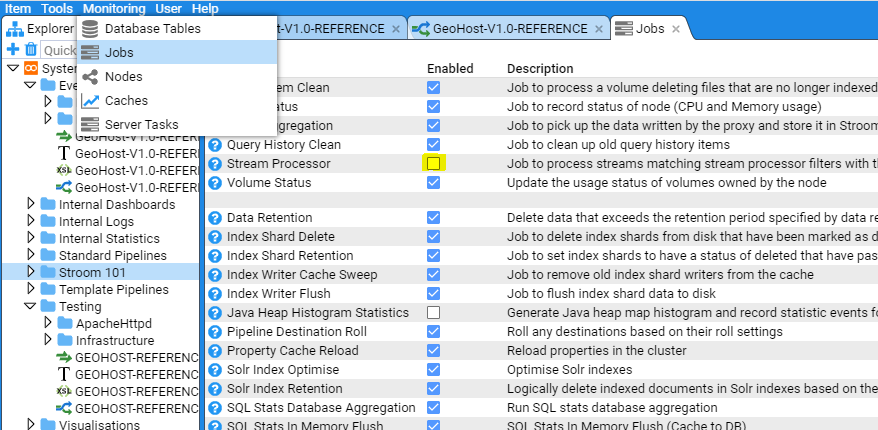

Enabling the Reference Feed Processors

We now create the pipeline Processors for this feed, so that the raw reference data will be transformed into Reference Data on ingest and saved to Reference Data stores.

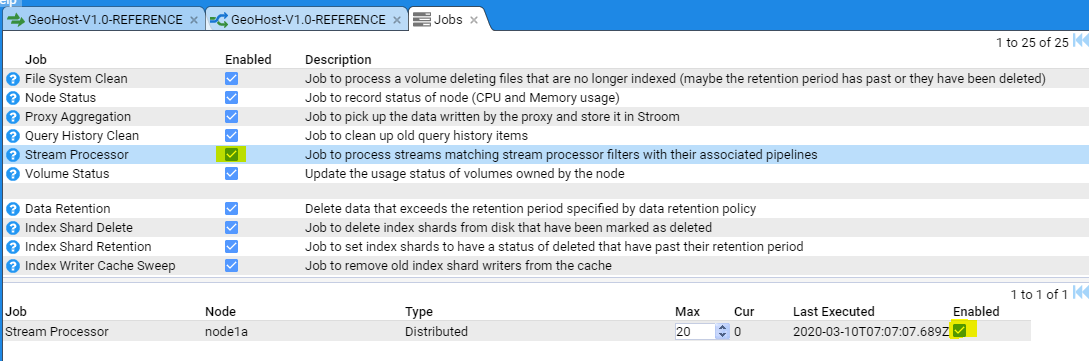

Open the reference feed pipeline by selecting the

This configuration tab is divided into two panes. The top pane shows the currently enabled Processors and any recently processed streams, and the bottom pane provides meta-data about each Processor or recently processed stream.

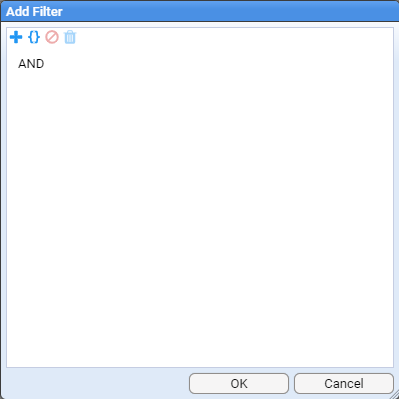

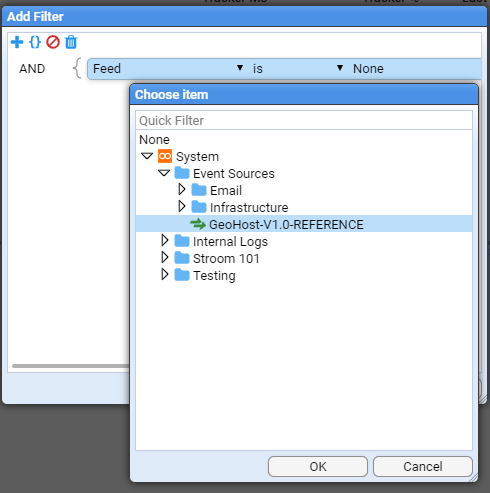

First, move the mouse to the Add Processor

This selection window allows us to filter which data streams we want our Processor to process. As our intent is to enable processing for all GeoHost-V1.0-REFERENCE streams, both those already received and those yet to be received, our filtering criterion is simply to process all Raw Reference streams for this feed, ignoring all other conditions.
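The filter described here amounts to a predicate over stream metadata. The Python sketch below models it under stated assumptions: the hypothetical `StreamMeta` class stands in for Stroom's stream metadata, the two terms (feed and stream type) are assumed to be combined with AND, and `SomeOther-EVENTS` is an invented feed name used only for contrast.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamMeta:
    """Hypothetical minimal view of a stream's metadata."""
    feed: str
    stream_type: str


def geohost_reference_filter(stream: StreamMeta) -> bool:
    # Both terms must hold (assumed AND). There is no time term, so streams
    # received before and after the processor was created match alike.
    return (stream.feed == "GeoHost-V1.0-REFERENCE"
            and stream.stream_type == "Raw Reference")


streams = [
    StreamMeta("GeoHost-V1.0-REFERENCE", "Raw Reference"),
    StreamMeta("GeoHost-V1.0-REFERENCE", "Reference"),
    StreamMeta("SomeOther-EVENTS", "Raw Events"),
]
print([geohost_reference_filter(s) for s in streams])  # [True, False, False]
```

The second stream shows why the stream type term matters: in this model, the Reference streams that the processor itself produces are not selected again.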

To do this, first click on the Add Term

and press to make the selection.

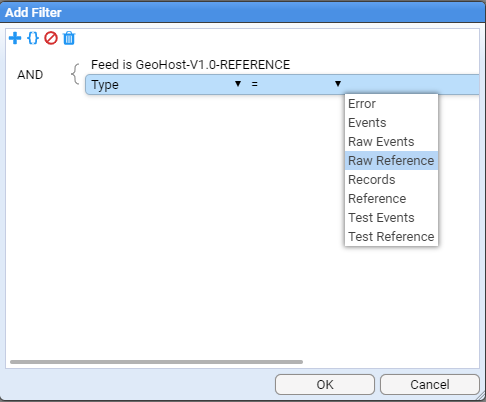

Next, we select the required stream type.

To do this click on the Add Term

at which point select the Stream Type of Raw Reference and confirm. We then return to the Add Processor selection window and see that the Raw Reference stream type has been added.

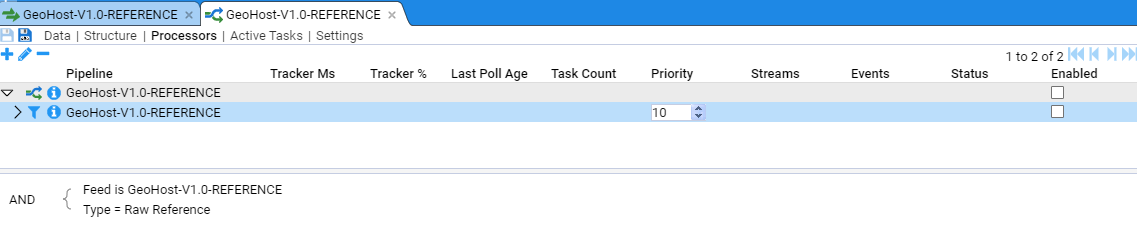

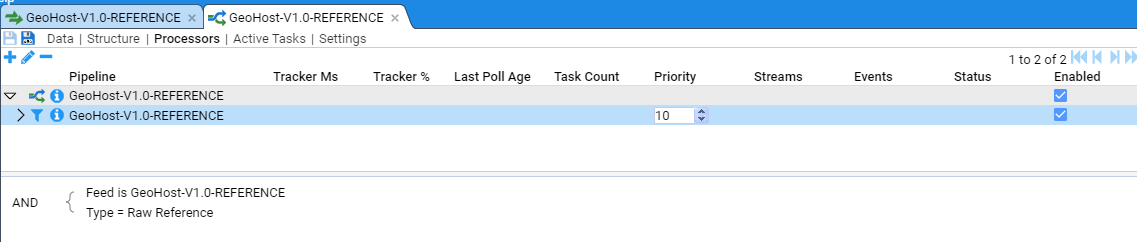

Note that the Processor has been added but is in a disabled state. We enable both the pipeline processor and the processor filter.

Note: if this is the first time you have set up pipeline processing on your Stroom instance, you may need to check that the Stream Processor job is enabled. To do this, go to the Stroom main menu and select Monitoring > Jobs, then check the status of the Stream Processor job and enable it if required. If you need to enable the job, also ensure that you enable it on the individual nodes (in the bottom pane, select the enable box on the far right).

Returning to the

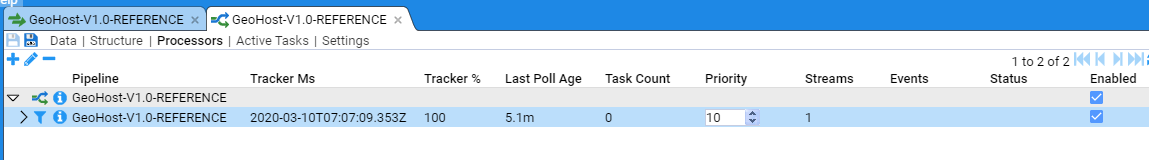

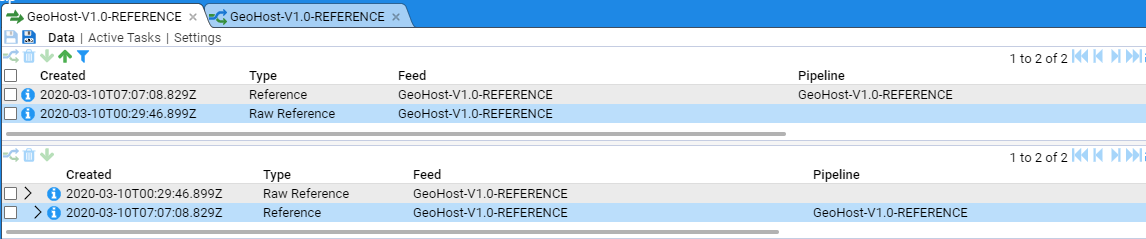

Navigating back to the Data sub-item and clicking on the reference feed stream in the Stream Table we see

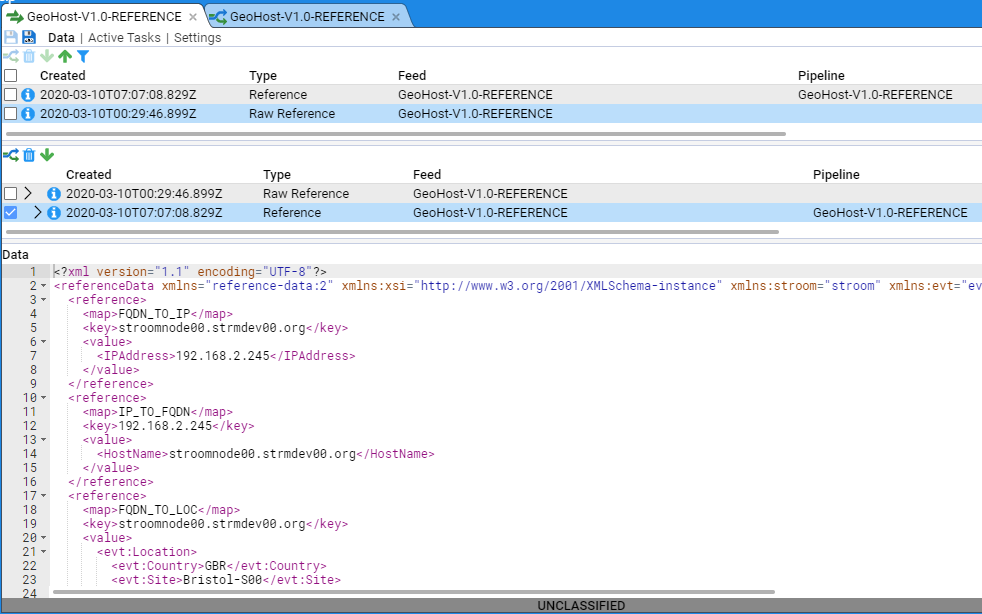

In the top pane, we see the Streams table as usual, but in the Specific stream table we see that we have both a Raw Reference stream and its child Reference stream. Clicking on and highlighting the Reference stream displays its content in the bottom pane.

The complete ReferenceData for this stream is

    <?xml version="1.1" encoding="UTF-8"?>
    <referenceData xmlns="reference-data:2"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xmlns:stroom="stroom"
        xmlns:evt="event-logging:3"
        xsi:schemaLocation="reference-data:2 file://reference-data-v2.0.xsd"
        version="2.0.1">
      <reference>
        <map>FQDN_TO_IP</map>
        <key>stroomnode00.strmdev00.org</key>
        <value>
          <IPAddress>192.168.2.245</IPAddress>
        </value>
      </reference>
      <reference>
        <map>IP_TO_FQDN</map>
        <key>192.168.2.245</key>
        <value>
          <HostName>stroomnode00.strmdev00.org</HostName>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_LOC</map>
        <key>stroomnode00.strmdev00.org</key>
        <value>
          <evt:Location>
            <evt:Country>GBR</evt:Country>
            <evt:Site>Bristol-S00</evt:Site>
            <evt:Building>GZero</evt:Building>
            <evt:Room>R00</evt:Room>
            <evt:TimeZone>+00:00/+01:00</evt:TimeZone>
          </evt:Location>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_IP</map>
        <key>stroomnode01.strmdev01.org</key>
        <value>
          <IPAddress>192.168.3.117</IPAddress>
        </value>
      </reference>
      <reference>
        <map>IP_TO_FQDN</map>
        <key>192.168.3.117</key>
        <value>
          <HostName>stroomnode01.strmdev01.org</HostName>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_LOC</map>
        <key>stroomnode01.strmdev01.org</key>
        <value>
          <evt:Location>
            <evt:Country>AUS</evt:Country>
            <evt:Site>Sydney-S04</evt:Site>
            <evt:Building>R6</evt:Building>
            <evt:Room>5-134</evt:Room>
            <evt:TimeZone>+10:00/+11:00</evt:TimeZone>
          </evt:Location>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_IP</map>
        <key>host01.company4.org</key>
        <value>
          <IPAddress>192.168.4.220</IPAddress>
        </value>
      </reference>
      <reference>
        <map>IP_TO_FQDN</map>
        <key>192.168.4.220</key>
        <value>
          <HostName>host01.company4.org</HostName>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_LOC</map>
        <key>host01.company4.org</key>
        <value>
          <evt:Location>
            <evt:Country>USA</evt:Country>
            <evt:Site>LosAngeles-S19</evt:Site>
            <evt:Building>ILM</evt:Building>
            <evt:Room>C5-54-2</evt:Room>
            <evt:TimeZone>-08:00/-07:00</evt:TimeZone>
          </evt:Location>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_IP</map>
        <key>host32.strmdev01.org</key>
        <value>
          <IPAddress>192.168.8.151</IPAddress>
        </value>
      </reference>
      <reference>
        <map>IP_TO_FQDN</map>
        <key>192.168.8.151</key>
        <value>
          <HostName>host32.strmdev01.org</HostName>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_LOC</map>
        <key>host32.strmdev01.org</key>
        <value>
          <evt:Location>
            <evt:Country>AUS</evt:Country>
            <evt:Site>Sydney-S02</evt:Site>
            <evt:Building>RC45</evt:Building>
            <evt:Room>5-134</evt:Room>
            <evt:TimeZone>+10:00/+11:00</evt:TimeZone>
          </evt:Location>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_IP</map>
        <key>host14.strmdev00.org</key>
        <value>
          <IPAddress>192.168.234.9</IPAddress>
        </value>
      </reference>
      <reference>
        <map>IP_TO_FQDN</map>
        <key>192.168.234.9</key>
        <value>
          <HostName>host14.strmdev00.org</HostName>
        </value>
      </reference>
      <reference>
        <map>FQDN_TO_LOC</map>
        <key>host14.strmdev00.org</key>
        <value>
          <evt:Location>
            <evt:Country>GBR</evt:Country>
            <evt:Site>Bristol-S22</evt:Site>
            <evt:Building>CAMP2</evt:Building>
            <evt:Room>Rm67</evt:Room>
            <evt:TimeZone>+00:00/+01:00</evt:TimeZone>
          </evt:Location>
        </value>
      </reference>
    </referenceData>
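Once loaded, reference data behaves like a set of maps keyed by (map, key). The Python sketch below indexes a trimmed copy of the output above that way and performs a simple exact-match lookup. It is a conceptual analogue of `stroom:lookup(map, key)`, not its implementation: it ignores the effective time that Stroom uses to choose between reference streams, and the names `load_reference_data` and `lookup` are hypothetical.

```python
import xml.etree.ElementTree as ET

REF = "{reference-data:2}"
EVT = "{event-logging:3}"


def load_reference_data(xml_text):
    """Hypothetical loader: index every <reference> as {(map, key): <value>}.

    A later entry with the same (map, key) overwrites an earlier one.
    """
    store = {}
    for ref in ET.fromstring(xml_text).iter(f"{REF}reference"):
        map_name = ref.findtext(f"{REF}map")
        key = ref.findtext(f"{REF}key")
        store[(map_name, key)] = ref.find(f"{REF}value")
    return store


def lookup(store, map_name, key):
    """Exact-match lookup returning the <value> element, or None.

    Unlike stroom:lookup(), this has no notion of effective time.
    """
    return store.get((map_name, key))


# Two entries trimmed from the stream output. Unprefixed value elements such
# as IPAddress inherit the reference-data:2 default namespace.
sample = """<referenceData xmlns="reference-data:2" xmlns:evt="event-logging:3" version="2.0.1">
  <reference>
    <map>FQDN_TO_IP</map><key>host01.company4.org</key>
    <value><IPAddress>192.168.4.220</IPAddress></value>
  </reference>
  <reference>
    <map>FQDN_TO_LOC</map><key>host01.company4.org</key>
    <value><evt:Location><evt:Country>USA</evt:Country></evt:Location></value>
  </reference>
</referenceData>"""

store = load_reference_data(sample)
print(lookup(store, "FQDN_TO_IP", "host01.company4.org").findtext(f"{REF}IPAddress"))  # 192.168.4.220
print(lookup(store, "FQDN_TO_LOC", "host01.company4.org").findtext(f"{EVT}Location/{EVT}Country"))  # USA
print(lookup(store, "FQDN_TO_IP", "HOST01.company4.org"))  # None: keys were lower-cased at load
```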

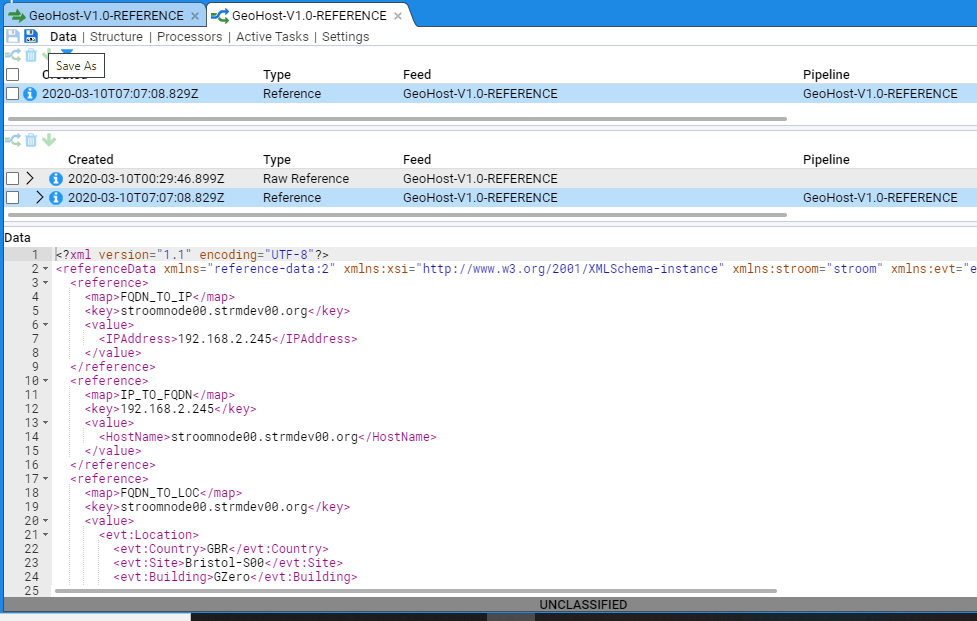

If we go back to the reference feed itself (and click on the

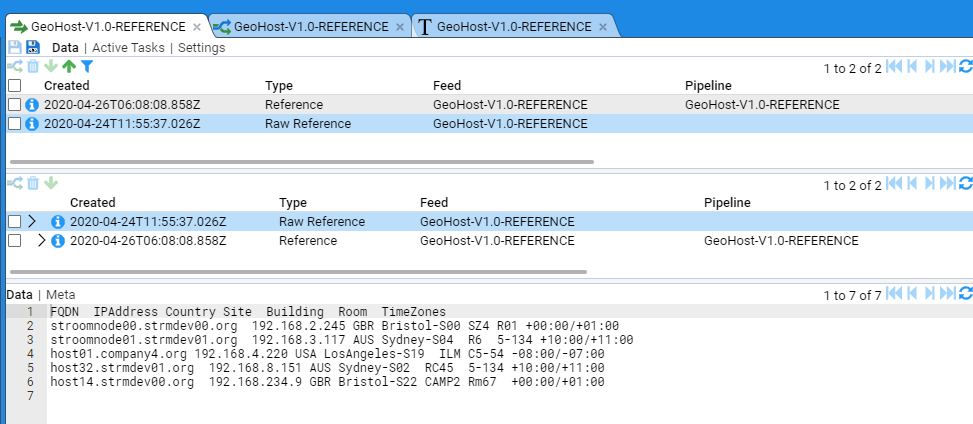

Selecting the Reference stream in the Stream Table will result in the Specific stream pane displaying the Raw Reference and its child Reference stream (highlighted) and the actual ReferenceData output in the Data pane at the bottom.

Selecting the Raw Reference stream in the Streams Table will result in the Specific stream pane displaying the Raw Reference and its child Reference stream as before, but with the Raw Reference stream highlighted and the actual Raw Reference input data displayed in the Data pane at the bottom.

The creation of the reference feed is now complete.

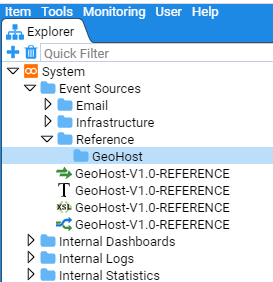

At this point you may wish to organise the resources you have created within the Explorer pane to a more appropriate location such as Reference/GeoHost.

Because Stroom Explorer is a flat structure, you can move resources around to reorganise the content without any impact on directory paths, configurations, etc.

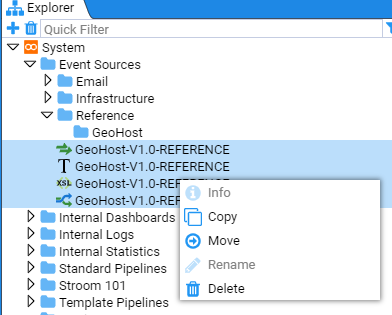

Now that you have created the new folder structure, you can move the various GeoHost resources to this location. Select all four resources by using the mouse right-click button while holding down the Shift key, then right click on the highlighted group to display the action menu.

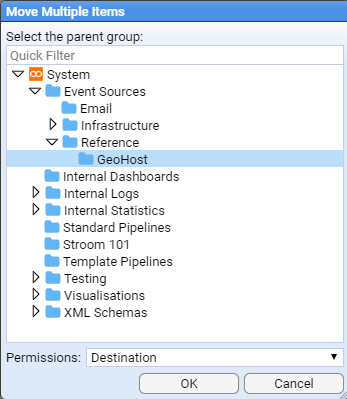

Select Move, and the Move Multiple Items window will be displayed.

Navigate to the Reference/GeoHost folder to move the items to this destination.

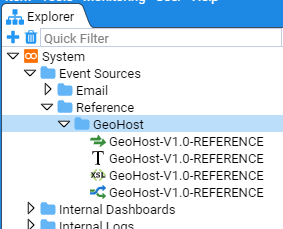

The final structure is seen below